Erik Blasch

Air Force Office of Scientific Research

LASS-ODE: Scaling ODE Computations to Connect Foundation Models with Dynamical Physical Systems

Feb 01, 2026

Abstract:Foundation models have transformed language, vision, and time series data analysis, yet progress on dynamic predictions for physical systems remains limited. Given the complexity of physical constraints, two challenges stand out. (i) Physics-computation scalability: physics-informed learning can enforce physical regularization, but its computation (e.g., ODE integration) does not scale to extensive systems. (ii) Knowledge-sharing efficiency: the attention mechanism is primarily computed within each system, which limits the extraction of shared ODE structures across systems. We show that enforcing ODE consistency does not require expensive nonlinear integration: a token-wise locally linear ODE representation preserves physical fidelity while scaling to foundation-model regimes. First, we propose novel token representations that respect locally linear ODE evolution. Such linearity substantially accelerates integration while accurately approximating the local data manifold. Second, we introduce a simple yet effective inter-system attention that augments attention with a common structure hub (CSH) that stores shared tokens and aggregates knowledge across systems. The resulting model, termed LASS-ODE (LArge-Scale Small ODE), is pretrained on our 40 GB ODE trajectory collections to enable strong in-domain performance, zero-shot generalization across diverse ODE systems, and additional improvements through fine-tuning.
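The claim that linearity accelerates integration can be illustrated with a scalar toy problem. The sketch below is not the LASS-ODE token representation; it only assumes a hypothetical locally linear model dx/dt = a*x + b, for which one closed-form step replaces many small numerical steps. The function names and the coefficients are illustrative assumptions.

```python
import math

def linear_ode_step(x, a, b, h):
    """Advance dx/dt = a*x + b by time h using the closed-form solution."""
    if a == 0.0:
        return x + b * h  # degenerate case: constant slope
    growth = math.exp(a * h)
    return growth * x + (growth - 1.0) / a * b

def euler_step(x, a, b, h):
    """One explicit Euler step for the same ODE, used only for comparison."""
    return x + h * (a * x + b)

# Hypothetical coefficients and initial state chosen for illustration.
a, b, x0 = -2.0, 1.0, 3.0

# One closed-form step over the whole interval [0, 1].
exact_one_step = linear_ode_step(x0, a, b, 1.0)

# The same interval covered by 1000 small Euler steps.
x = x0
for _ in range(1000):
    x = euler_step(x, a, b, 0.001)
euler_many_steps = x

print(exact_one_step, euler_many_steps)
```

The closed-form step costs one exponential regardless of the interval length, while the Euler loop needs many iterations to reach comparable accuracy. In a vector-valued setting the scalar exponential would become a matrix exponential.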

Autonomous Self-Healing UAV Swarms for Robust 6G Non-Terrestrial Networks

Jan 19, 2026

Abstract:Recent years have seen increased interest in the use of non-terrestrial networks (NTNs), especially unmanned aerial vehicles (UAVs), to provide cost-effective global connectivity in next-generation wireless networks. We introduce a resilient, adaptive, self-healing network design (RASHND) to optimize signal quality under dynamic interference and adversarial conditions. RASHND leverages inter-node communication and an intelligent algorithm selection process, incorporating combining techniques such as distributed-Maximal Ratio Combining (d-MRC), distributed-Linear Minimum Mean Squared Error Estimation (d-LMMSE), and Selection Combining (SC). These algorithms are selected to improve performance by adapting to changing network conditions. To evaluate the effectiveness of the proposed RASHND solutions, a software-defined radio (SDR)-based hardware testbed was used for initial testing and evaluation. Additionally, we present results from UAV tests conducted on the AERPAW testbed to validate our solutions in real-world scenarios. The results demonstrate that RASHND significantly enhances the reliability and interference resilience of UAV networks, making them well-suited for critical communications.
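To make the difference between two of the named combining techniques concrete, here is a minimal sketch of the textbook, non-distributed case. It assumes ideal conditions (independent noise of equal power on each branch and perfect channel knowledge), under which maximal ratio combining yields an output SNR equal to the sum of the branch SNRs, while selection combining keeps only the best branch. The distributed variants and the algorithm selection process in RASHND are not modeled, and the branch values are made up for illustration.

```python
import math

def to_db(snr_linear):
    """Convert a linear SNR to decibels."""
    return 10.0 * math.log10(snr_linear)

def mrc_output_snr(branch_snrs):
    """Ideal maximal ratio combining: output SNR is the sum of branch SNRs."""
    return sum(branch_snrs)

def sc_output_snr(branch_snrs):
    """Selection combining: output SNR is that of the strongest branch."""
    return max(branch_snrs)

# Hypothetical linear SNRs observed at three receiving nodes.
branch_snrs = [4.0, 1.0, 0.5]

mrc = mrc_output_snr(branch_snrs)
sc = sc_output_snr(branch_snrs)
print(f"MRC: {to_db(mrc):.2f} dB, SC: {to_db(sc):.2f} dB")
```

Under these assumptions MRC never does worse than SC, but it needs every branch and its channel estimate, which is one reason a system might switch between the two as conditions change.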

Stone Soup: ADS-B-based Multi-Target Tracking with Stochastic Integration Filter

Jun 09, 2025

Abstract:This paper focuses on multi-target tracking using the Stone Soup framework. In particular, we evaluate two multi-target tracking scenarios based on the simulated class-B dataset and the ADS-B class-A dataset provided by OpenSky Network. The scenarios are evaluated with respect to the selection of a local state estimator using a range of Stone Soup metrics. Source code with scenario definitions and the Stone Soup set-up is provided along with the paper.

Towards Trustworthy Retrieval Augmented Generation for Large Language Models: A Survey

Feb 08, 2025

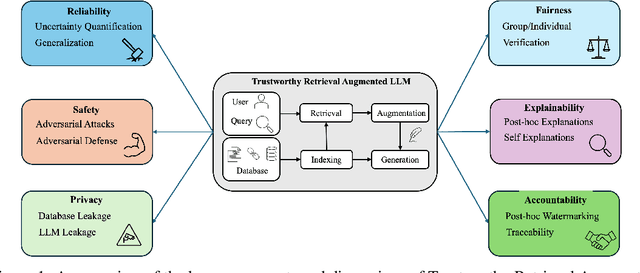

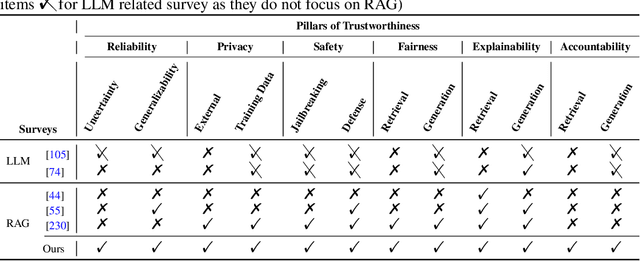

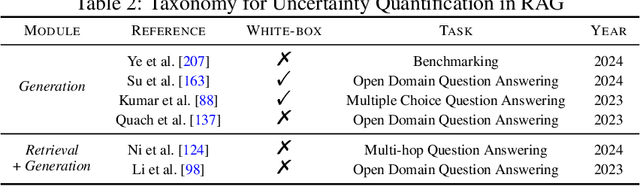

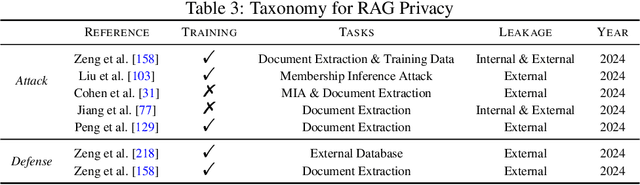

Abstract:Retrieval-Augmented Generation (RAG) is an advanced technique designed to address the challenges of Artificial Intelligence-Generated Content (AIGC). By integrating context retrieval into content generation, RAG provides reliable and up-to-date external knowledge, reduces hallucinations, and ensures relevant context across a wide range of tasks. However, despite RAG's success and potential, recent studies have shown that the RAG paradigm also introduces new risks, including robustness issues, privacy concerns, adversarial attacks, and accountability issues. Addressing these risks is critical for future applications of RAG systems, as they directly impact their trustworthiness. Although various methods have been developed to improve the trustworthiness of RAG methods, there is a lack of a unified perspective and framework for research on this topic. Thus, in this paper, we aim to address this gap by providing a comprehensive roadmap for developing trustworthy RAG systems. We place our discussion around six key perspectives: reliability, privacy, safety, fairness, explainability, and accountability. For each perspective, we present a general framework and taxonomy, offering a structured approach to understanding the current challenges, evaluating existing solutions, and identifying promising future research directions. To encourage broader adoption and innovation, we also highlight the downstream applications where trustworthy RAG systems have a significant impact.

Stochastic Integration Based Estimator: Robust Design and Stone Soup Implementation

Dec 10, 2024

Abstract:This paper deals with state estimation of nonlinear stochastic dynamic models. In particular, the stochastic integration rule, which provides asymptotically unbiased estimates of the moments of nonlinearly transformed Gaussian random variables, is reviewed together with the recently introduced stochastic integration filter (SIF). Using SIF, the respective multi-step prediction and smoothing algorithms are developed in full and efficient square-root form. The stochastic-integration-rule-based algorithms are implemented in Python (within the Stone Soup framework) and in MATLAB and are numerically evaluated and compared with the well-known unscented and extended Kalman filters using the Stone Soup defined tracking scenario.

Towards Trustworthy Knowledge Graph Reasoning: An Uncertainty Aware Perspective

Oct 11, 2024

Abstract:Recently, Knowledge Graphs (KGs) have been successfully coupled with Large Language Models (LLMs) to mitigate their hallucinations and enhance their reasoning capability, such as in KG-based retrieval-augmented frameworks. However, current KG-LLM frameworks lack rigorous uncertainty estimation, limiting their reliable deployment in high-stakes applications. Directly incorporating uncertainty quantification into KG-LLM frameworks presents challenges due to their complex architectures and the intricate interactions between the knowledge graph and language model components. To address this gap, we propose a new trustworthy KG-LLM framework, Uncertainty Aware Knowledge-Graph Reasoning (UAG), which incorporates uncertainty quantification into the KG-LLM framework. We design an uncertainty-aware multi-step reasoning framework that leverages conformal prediction to provide a theoretical guarantee on the prediction set. To manage the error rate of the multi-step process, we additionally introduce an error rate control module to adjust the error rate within the individual components. Extensive experiments show that our proposed UAG can achieve any pre-defined coverage rate while reducing the prediction set/interval size by 40% on average over the baselines.
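For readers unfamiliar with conformal prediction, the following is a minimal sketch of the standard single-step split conformal procedure, not the multi-step UAG framework or its error rate control module. It assumes a nonconformity score of one minus the model's probability for a candidate; the calibration scores, candidate answers, and function names are all hypothetical.

```python
import math

def conformal_threshold(cal_scores, alpha):
    """Return the split-conformal quantile of calibration nonconformity scores."""
    n = len(cal_scores)
    # Finite-sample corrected rank: ceil((n + 1) * (1 - alpha)).
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        return math.inf  # too few calibration points: every candidate is kept
    return sorted(cal_scores)[rank - 1]

def prediction_set(probs, threshold):
    """Keep each candidate whose score (1 - probability) is within the threshold."""
    return {label for label, p in probs.items() if 1.0 - p <= threshold}

# Hypothetical nonconformity scores of the true answers on a calibration set.
cal_scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.55, 0.60, 0.70]
threshold = conformal_threshold(cal_scores, alpha=0.2)

# Hypothetical candidate answers with model probabilities for a new query.
candidates = {"Paris": 0.70, "Lyon": 0.45, "Nice": 0.10}
answer_set = prediction_set(candidates, threshold)
print(threshold, sorted(answer_set))
```

When calibration and test examples are exchangeable, a set built this way contains the true answer with probability at least 1 - alpha. Smaller sets at the same coverage indicate a more informative predictor, which is the quantity the abstract reports as reduced.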

Efficient Temporal Action Segmentation via Boundary-aware Query Voting

May 25, 2024

Abstract:Although the performance of Temporal Action Segmentation (TAS) has improved in recent years, achieving promising results often comes with a high computational cost due to dense inputs, complex model structures, and resource-intensive post-processing requirements. To improve efficiency while maintaining performance, we present a novel perspective centered on per-segment classification. By harnessing the capabilities of Transformers, we tokenize each video segment as an instance token, endowed with intrinsic instance segmentation. To realize efficient action segmentation, we introduce BaFormer, a boundary-aware Transformer network. It employs instance queries for instance segmentation and a global query for class-agnostic boundary prediction, yielding continuous segment proposals. During inference, BaFormer employs a simple yet effective voting strategy to classify boundary-wise segments based on instance segmentation. Remarkably, as a single-stage approach, BaFormer significantly reduces the computational costs, utilizing only 6% of the running time compared to the state-of-the-art method DiffAct, while producing better or comparable accuracy over several popular benchmarks. The code for this project is publicly available at https://github.com/peiyao-w/BaFormer.

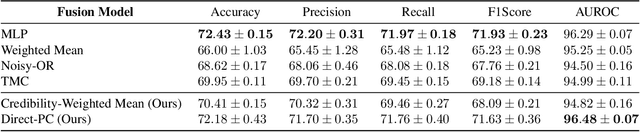

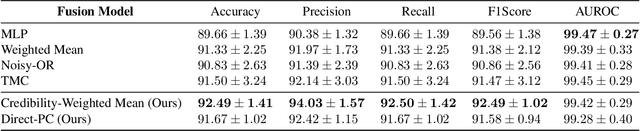

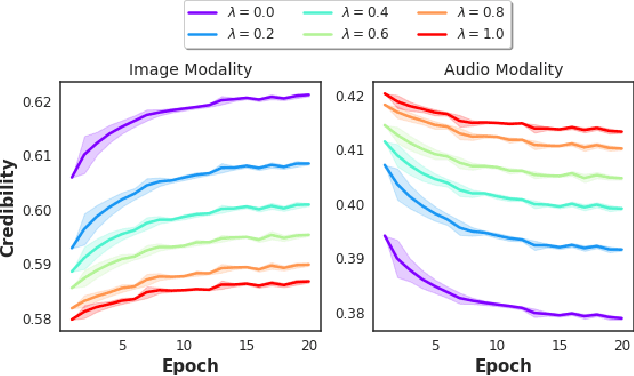

Credibility-Aware Multi-Modal Fusion Using Probabilistic Circuits

Mar 05, 2024

Abstract:We consider the problem of late multi-modal fusion for discriminative learning. Motivated by noisy, multi-source domains that require understanding the reliability of each data source, we explore the notion of credibility in the context of multi-modal fusion. We propose a combination function that uses probabilistic circuits (PCs) to combine predictive distributions over individual modalities. We also define a probabilistic measure to evaluate the credibility of each modality via inference queries over the PC. Our experimental evaluation demonstrates that our fusion method can reliably infer credibility while maintaining competitive performance with the state-of-the-art.

Image Prior and Posterior Conditional Probability Representation for Efficient Damage Assessment

Oct 26, 2023

Abstract:It is important to quantify Damage Assessment (DA) for Human Assistance and Disaster Response (HADR) applications. In this paper, to achieve efficient and scalable DA in HADR, an image prior and posterior conditional probability (IP2CP) is developed as an effective computational imaging representation. Equipped with the IP2CP representation, the matching pre- and post-disaster images are effectively encoded into one image that is then processed using deep learning approaches to determine the damage levels. Two scenarios of crucial importance for the practical use of DA in HADR applications are examined: pixel-wise semantic segmentation and patch-based contrastive learning-based global damage classification. Results achieved by IP2CP in both scenarios demonstrate promising performance, showing that our IP2CP-based methods within the deep learning framework can effectively achieve data and computational efficiency, which is of utmost importance for DA in HADR applications.

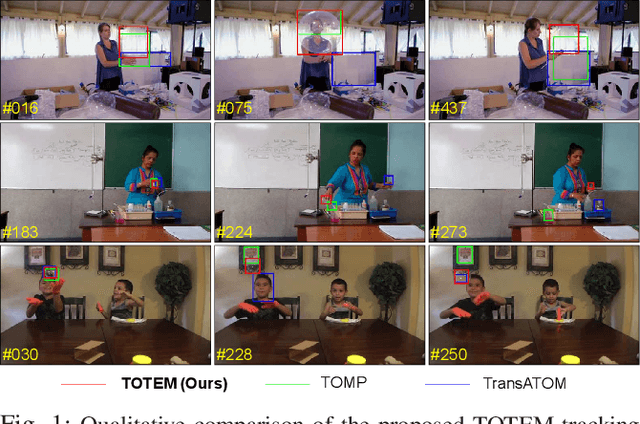

Transparent Object Tracking with Enhanced Fusion Module

Sep 13, 2023

Abstract:Accurate tracking of transparent objects, such as glasses, plays a critical role in many robotic tasks such as robot-assisted living. Due to the adaptive and often reflective texture of such objects, traditional tracking algorithms that rely on general-purpose learned features suffer from reduced performance. Recent research has proposed to instill transparency awareness into existing general object trackers by fusing purpose-built features. However, with the existing fusion techniques, the addition of new features causes a change in the latent space, making it impossible to incorporate transparency awareness into trackers with fixed latent spaces. For example, many present-day transformer-based trackers are fully pre-trained and are sensitive to any latent space perturbations. In this paper, we present a new feature fusion technique that integrates transparency information into a fixed feature space, enabling its use in a broader range of trackers. Our proposed fusion module, composed of a transformer encoder and an MLP module, leverages key query-based transformations to embed the transparency information into the tracking pipeline. We also present a new two-step training strategy for our fusion module to effectively merge transparency features. We propose a new tracker architecture that uses our fusion techniques to achieve superior results for transparent object tracking. Our proposed method achieves competitive results with state-of-the-art trackers on TOTB, which is the largest transparent object tracking benchmark recently released. Our results and the code implementation will be made publicly available at https://github.com/kalyan0510/TOTEM.
