Saurabh Mathur

A Unified Framework for Human-Allied Learning of Probabilistic Circuits

May 03, 2024

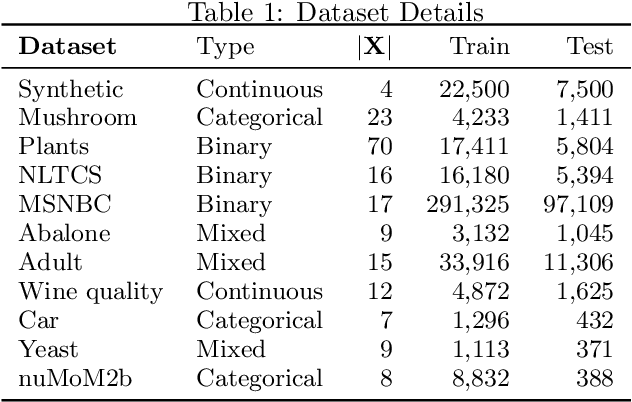

Abstract: Probabilistic Circuits (PCs) have emerged as an efficient framework for representing and learning complex probability distributions. Nevertheless, the existing body of research on PCs predominantly concentrates on data-driven parameter learning, often neglecting the potential of knowledge-intensive learning, a particular issue in data-scarce/knowledge-rich domains such as healthcare. To bridge this gap, we propose a novel unified framework that can systematically integrate diverse domain knowledge into the parameter learning process of PCs. Experiments on several benchmarks as well as real-world datasets show that our proposed framework can both effectively and efficiently leverage domain knowledge to achieve superior performance compared to purely data-driven learning approaches.

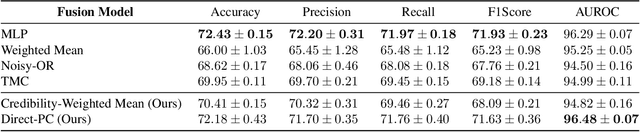

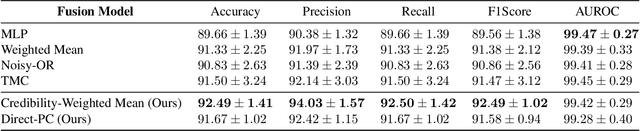

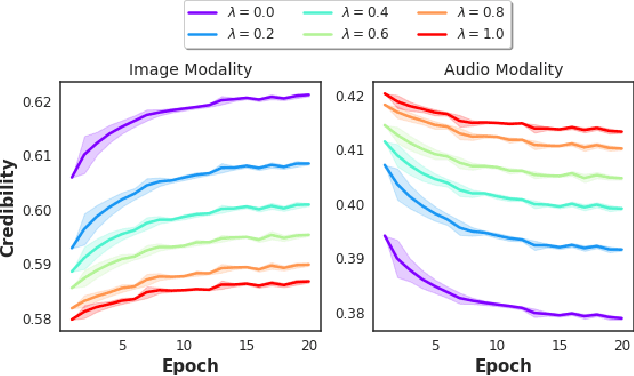

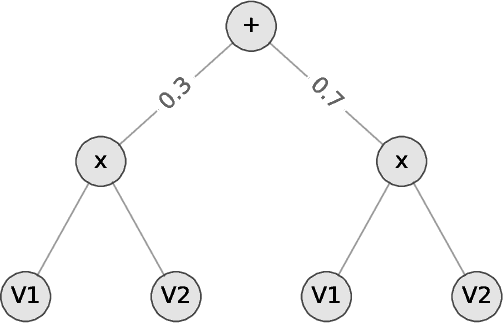

Credibility-Aware Multi-Modal Fusion Using Probabilistic Circuits

Mar 05, 2024

Abstract: We consider the problem of late multi-modal fusion for discriminative learning. Motivated by noisy, multi-source domains that require understanding the reliability of each data source, we explore the notion of credibility in the context of multi-modal fusion. We propose a combination function that uses probabilistic circuits (PCs) to combine predictive distributions over individual modalities. We also define a probabilistic measure to evaluate the credibility of each modality via inference queries over the PC. Our experimental evaluation demonstrates that our fusion method can reliably infer credibility while maintaining competitive performance with the state-of-the-art.

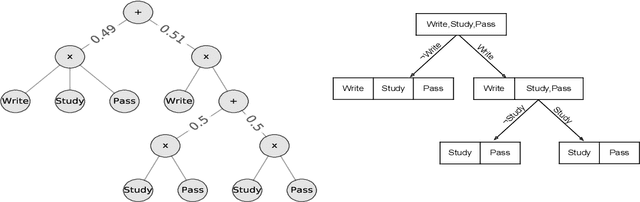

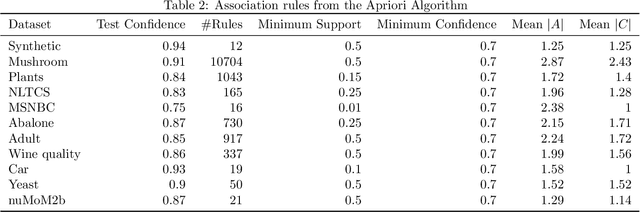

Explaining Deep Tractable Probabilistic Models: The sum-product network case

Oct 19, 2021

Abstract: We consider the problem of explaining a tractable deep probabilistic model, the Sum-Product Network (SPN). To this end, we define the notion of a context-specific independence (CSI) tree and present an iterative algorithm that converts an SPN to a CSI-tree. The resulting CSI-tree is both interpretable and explainable to the domain expert. To further compress the tree, we approximate the CSIs by fitting a supervised classifier. Our extensive empirical evaluations on synthetic, standard, and real-world clinical data sets demonstrate that the resulting models exhibit superior explainability without loss in performance.
