Debin Zhao

MRT: Learning Compact Representations with Mixed RWKV-Transformer for Extreme Image Compression

Nov 14, 2025

Abstract: Recent advances in extreme image compression have revealed that mapping pixel data into highly compact latent representations can significantly improve coding efficiency. However, most existing methods compress images into 2-D latent spaces via convolutional neural networks (CNNs) or Swin Transformers, which tend to retain substantial spatial redundancy, thereby limiting overall compression performance. In this paper, we propose a novel Mixed RWKV-Transformer (MRT) architecture that encodes images into more compact 1-D latent representations by synergistically integrating the complementary strengths of linear-attention-based RWKV and self-attention-based Transformer models. Specifically, MRT partitions each image into fixed-size windows, utilizing RWKV modules to capture global dependencies across windows and Transformer blocks to model local redundancies within each window. This hierarchical attention mechanism enables more efficient and compact representation learning in the 1-D domain. To further enhance compression efficiency, we introduce a dedicated RWKV Compression Model (RCM) tailored to the structural characteristics of the intermediate 1-D latent features in MRT. Extensive experiments on standard image compression benchmarks validate the effectiveness of our approach. The proposed MRT framework consistently achieves superior reconstruction quality at bitrates below 0.02 bits per pixel (bpp). Quantitative results based on the DISTS metric show that MRT significantly outperforms the state-of-the-art 2-D architecture GLC, achieving bitrate savings of 43.75% and 30.59% on the Kodak and CLIC2020 test datasets, respectively.
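The window partitioning step mentioned in the abstract can be pictured with a minimal sketch. It splits a 2-D grid into non-overlapping fixed-size windows and returns them as a 1-D sequence. The function name `partition_windows`, the window size, the row-major ordering, and the requirement that dimensions divide evenly are all assumptions made for illustration; the abstract does not specify them, and this is not the authors' implementation.

```python
from typing import List

Grid = List[List[int]]


def partition_windows(image: Grid, window: int) -> List[Grid]:
    """Split a 2-D grid into non-overlapping window x window tiles.

    Tiles are returned in row-major order. Assumes (for illustration only)
    that both image dimensions are exact multiples of `window`.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if height % window or width % window:
        raise ValueError("image dimensions must be multiples of the window size")

    tiles = []
    for top in range(0, height, window):
        for left in range(0, width, window):
            # Slice `window` rows, then `window` columns from each row.
            tile = [row[left:left + window] for row in image[top:top + window]]
            tiles.append(tile)
    return tiles


# A 4x4 toy "image" split into four 2x2 windows.
toy = [[r * 4 + c for c in range(4)] for r in range(4)]
windows = partition_windows(toy, 2)
```

In the abstract's terms, Transformer blocks would model redundancy inside each tile and RWKV modules would model dependencies across the tile sequence; the sketch covers only the partitioning.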

Perceptual Quality Assessment of 3D Gaussian Splatting: A Subjective Dataset and Prediction Metric

Nov 11, 2025

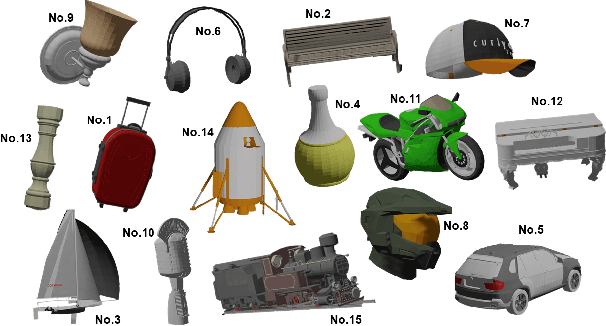

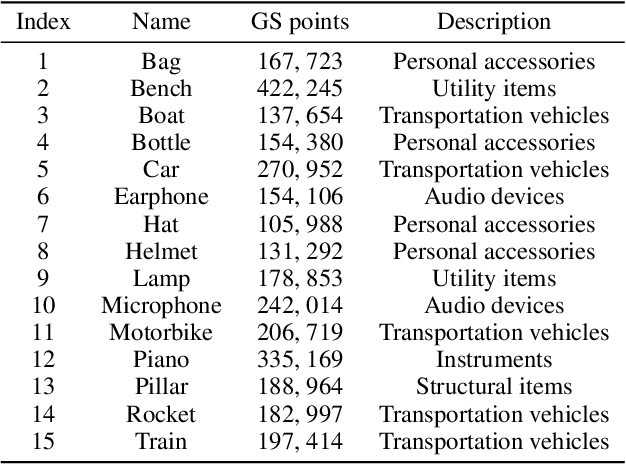

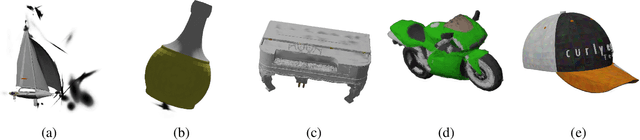

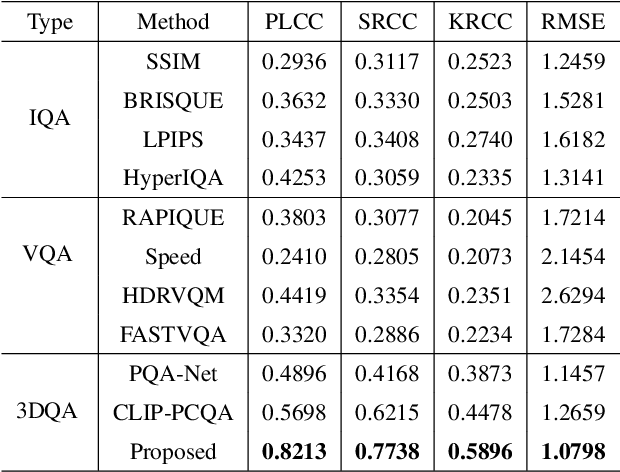

Abstract: With the rapid advancement of 3D visualization, 3D Gaussian Splatting (3DGS) has emerged as a leading technique for real-time, high-fidelity rendering. While prior research has emphasized algorithmic performance and visual fidelity, the perceptual quality of 3DGS-rendered content, especially under varying reconstruction conditions, remains largely underexplored. In practice, factors such as viewpoint sparsity, limited training iterations, point downsampling, noise, and color distortions can significantly degrade visual quality, yet their perceptual impact has not been systematically studied. To bridge this gap, we present 3DGS-QA, the first subjective quality assessment dataset for 3DGS. It comprises 225 degraded reconstructions across 15 object types, enabling a controlled investigation of common distortion factors. Based on this dataset, we introduce a no-reference quality prediction model that directly operates on native 3D Gaussian primitives, without requiring rendered images or ground-truth references. Our model extracts spatial and photometric cues from the Gaussian representation to estimate perceived quality in a structure-aware manner. We further benchmark existing quality assessment methods, spanning both traditional and learning-based approaches. Experimental results show that our method consistently achieves superior performance, highlighting its robustness and effectiveness for 3DGS content evaluation. The dataset and code are made publicly available at https://github.com/diaoyn/3DGSQA to facilitate future research in 3DGS quality assessment.

Feature-aligned Motion Transformation for Efficient Dynamic Point Cloud Compression

Sep 18, 2025

Abstract: Dynamic point clouds are widely used in applications such as immersive reality, robotics, and autonomous driving. Efficient compression largely depends on accurate motion estimation and compensation, yet the irregular structure and significant local variations of point clouds make this task highly challenging. Current methods often rely on explicit motion estimation, whose encoded vectors struggle to capture intricate dynamics and fail to fully exploit temporal correlations. To overcome these limitations, we introduce a Feature-aligned Motion Transformation (FMT) framework for dynamic point cloud compression. FMT replaces explicit motion vectors with a spatiotemporal alignment strategy that implicitly models continuous temporal variations, using aligned features as temporal context within a latent-space conditional encoding framework. Furthermore, we design a random access (RA) reference strategy that enables bidirectional motion referencing and layered encoding, thereby supporting frame-level parallel compression. Extensive experiments demonstrate that our method surpasses D-DPCC and AdaDPCC in both encoding and decoding efficiency, while also achieving BD-Rate reductions of 20% and 9.4%, respectively. These results highlight the effectiveness of FMT in jointly improving compression efficiency and processing performance.

Region-Level Context-Aware Multimodal Understanding

Aug 17, 2025

Abstract: Despite significant progress, existing research on Multimodal Large Language Models (MLLMs) mainly focuses on general visual understanding, overlooking the ability to integrate textual context associated with objects for a more context-aware multimodal understanding -- an ability we refer to as Region-level Context-aware Multimodal Understanding (RCMU). To address this limitation, we first formulate the RCMU task, which requires models to respond to user instructions by integrating both image content and textual information of regions or objects. To equip MLLMs with RCMU capabilities, we propose Region-level Context-aware Visual Instruction Tuning (RCVIT), which incorporates object information into the model input and enables the model to utilize bounding box coordinates to effectively associate objects' visual content with their textual information. To address the lack of datasets, we introduce the RCMU dataset, a large-scale visual instruction tuning dataset that covers multiple RCMU tasks. We also propose RC&P-Bench, a comprehensive benchmark that can evaluate the performance of MLLMs in RCMU and multimodal personalized understanding tasks. Additionally, we propose a reference-free evaluation metric to perform a comprehensive and fine-grained evaluation of the region-level context-aware image descriptions. By performing RCVIT on Qwen2-VL models with the RCMU dataset, we developed RC-Qwen2-VL models. Experimental results indicate that RC-Qwen2-VL models not only achieve outstanding performance on multiple RCMU tasks but also demonstrate successful applications in multimodal RAG and personalized conversation. Our data, model and benchmark are available at https://github.com/hongliang-wei/RC-MLLM

T-GVC: Trajectory-Guided Generative Video Coding at Ultra-Low Bitrates

Jul 10, 2025

Abstract: Recent advances in video generation techniques have given rise to an emerging paradigm of generative video coding, aiming to achieve semantically accurate reconstructions in Ultra-Low Bitrate (ULB) scenarios by leveraging strong generative priors. However, most existing methods are limited by domain specificity (e.g., facial or human videos) or an excessive dependence on high-level text guidance, which often fails to capture motion details and results in unrealistic reconstructions. To address these challenges, we propose a Trajectory-Guided Generative Video Coding framework (dubbed T-GVC). T-GVC employs a semantic-aware sparse motion sampling pipeline to effectively bridge low-level motion tracking with high-level semantic understanding by extracting pixel-wise motion as sparse trajectory points based on their semantic importance, not only significantly reducing the bitrate but also preserving critical temporal semantic information. In addition, by incorporating trajectory-aligned loss constraints into diffusion processes, we introduce a training-free latent space guidance mechanism to ensure physically plausible motion patterns without sacrificing the inherent capabilities of generative models. Experimental results demonstrate that our framework outperforms both traditional codecs and state-of-the-art end-to-end video compression methods under ULB conditions. Furthermore, additional experiments confirm that our approach achieves more precise motion control than existing text-guided methods, paving the way for a novel direction of generative video coding guided by geometric motion modeling.

FLAM: Foundation Model-Based Body Stabilization for Humanoid Locomotion and Manipulation

Mar 28, 2025

Abstract: Humanoid robots have attracted significant attention in recent years. Reinforcement Learning (RL) is one of the main ways to control the whole body of humanoid robots. RL enables agents to complete tasks by learning from environment interactions, guided by task rewards. However, existing RL methods rarely explicitly consider the impact of body stability on humanoid locomotion and manipulation. Achieving high performance in whole-body control remains a challenge for RL methods that rely solely on task rewards. In this paper, we propose a Foundation model-based method for humanoid Locomotion And Manipulation (FLAM for short). FLAM integrates a stabilizing reward function with a basic policy. The stabilizing reward function is designed to encourage the robot to learn stable postures, thereby accelerating the learning process and facilitating task completion. Specifically, the robot pose is first mapped to a 3D virtual human model. Then, the human pose is stabilized and reconstructed through a human motion reconstruction model. Finally, the poses before and after reconstruction are used to compute the stabilizing reward. By combining this stabilizing reward with the task reward, FLAM effectively guides policy learning. Experimental results on a humanoid robot benchmark demonstrate that FLAM outperforms state-of-the-art RL methods, highlighting its effectiveness in improving stability and overall performance.
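One way to picture the last two steps of the abstract (comparing the pose before and after reconstruction, then combining the result with the task reward) is the sketch below. The abstract does not give the reward formula, so the exponential of the mean joint distance, the `scale` and `weight` parameters, and the function names are hypothetical choices, not FLAM's actual definition.

```python
import math
from typing import Sequence, Tuple

Joint = Tuple[float, float, float]


def stabilizing_reward(pose: Sequence[Joint], stabilized: Sequence[Joint],
                       scale: float = 1.0) -> float:
    """Hypothetical reward: exp(-scale * mean joint distance).

    Equals 1.0 when the pose already matches its stabilized reconstruction
    and decays toward 0 as the two poses diverge.
    """
    if not pose or len(pose) != len(stabilized):
        raise ValueError("poses must be non-empty and have the same joint count")
    mean_dist = sum(math.dist(p, q) for p, q in zip(pose, stabilized)) / len(pose)
    return math.exp(-scale * mean_dist)


def total_reward(task_reward: float, pose: Sequence[Joint],
                 stabilized: Sequence[Joint], weight: float = 0.5) -> float:
    """Assumed combination: task reward plus a weighted stabilizing term."""
    return task_reward + weight * stabilizing_reward(pose, stabilized)


# Toy two-joint pose and a reconstruction shifted by one unit along z.
pose = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shifted = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
reward_same = stabilizing_reward(pose, pose)
reward_shifted = stabilizing_reward(pose, shifted)
```

Here `stabilized` stands in for the output of the human motion reconstruction model, which the sketch does not attempt to reproduce.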

Deep Network for Image Compressed Sensing Coding Using Local Structural Sampling

Feb 29, 2024

Abstract: Existing image compressed sensing (CS) coding frameworks usually solve an inverse problem based on measurement coding and optimization-based image reconstruction, and they still face the following two challenges: 1) The widely used random sampling matrix, such as the Gaussian Random Matrix (GRM), usually leads to low measurement coding efficiency. 2) The optimization-based reconstruction methods generally incur much higher computational complexity. In this paper, we propose a new CNN-based image CS coding framework using local structural sampling (dubbed CSCNet) that includes three functional modules: local structural sampling, measurement coding and Laplacian pyramid reconstruction. In the proposed framework, instead of GRM, a new local structural sampling matrix is first developed, which is able to enhance the correlation between the measurements through a local perceptual sampling strategy. Besides, the designed local structural sampling matrix can be jointly optimized with the other functional modules during the training process. After sampling, measurements with high correlations are produced, which are then coded into final bitstreams by a third-party image codec. Finally, a Laplacian pyramid reconstruction network is proposed to efficiently recover the target image from the measurement domain to the image domain. Extensive experimental results demonstrate that the proposed scheme outperforms the existing state-of-the-art CS coding methods, while maintaining fast computational speed.

Probability-based Distance Estimation Model for 3D DV-Hop Localization in WSNs

Jan 11, 2024

Abstract: Localization is one of the pivotal issues in wireless sensor network applications. In 3D localization studies, most algorithms focus on enhancing the location prediction process but lack a theoretical derivation of the distance detected by an anchor node at varying hops, which creates a localization performance bottleneck. To address this issue, we propose a probability-based average distance estimation (PADE) model that utilizes the probability distribution of node distances detected by an anchor node. The aim is to mathematically derive the average distances of nodes detected by an anchor node at different hops. First, we develop a probability-based maximum distance estimation (PMDE) model to calculate the upper bound of the distance detected by an anchor node. Then, we present the PADE model, which relies on the upper bound of the distance obtained by the PMDE model. Finally, the obtained average distance is used to construct a distance loss function, and it is embedded with the traditional distance loss function into a multi-objective genetic algorithm to predict the locations of unknown nodes. The experimental results demonstrate that the proposed method achieves state-of-the-art performance in random and multimodal distributed sensor networks. The average localization accuracy is improved by 3.49%-12.66% and 3.99%-22.34%, respectively.
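For context, the classical DV-Hop distance estimate that this line of work builds on can be sketched as follows: an anchor divides its summed Euclidean distance to the other anchors by the summed hop counts, and an unknown node multiplies that per-hop distance by its own hop count. This is the textbook baseline, not the PADE or PMDE model from the abstract; the function name, the toy coordinates, and the hop counts are made up for illustration.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]


def average_hop_distance(anchor: int, positions: Sequence[Point],
                         hops: Dict[Tuple[int, int], int]) -> float:
    """Classical DV-Hop per-hop distance for one anchor node.

    `positions` holds 3D anchor coordinates; `hops[(i, j)]` is the minimum
    hop count between anchors i and j.
    """
    total_dist = 0.0
    total_hops = 0
    for other in range(len(positions)):
        if other == anchor:
            continue
        total_dist += math.dist(positions[anchor], positions[other])
        total_hops += hops[(anchor, other)]
    return total_dist / total_hops


# Toy network: three anchors with illustrative hop counts from anchor 0.
anchors = [(0.0, 0.0, 0.0), (30.0, 0.0, 0.0), (0.0, 40.0, 0.0)]
hop_counts = {(0, 1): 3, (0, 2): 5}
hop_size = average_hop_distance(0, anchors, hop_counts)

# An unknown node 2 hops from anchor 0 estimates its distance to that anchor.
estimated_distance = hop_size * 2
```

The abstract's contribution replaces this kind of empirical per-hop average with a probabilistically derived average distance at each hop.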

Myriad: Large Multimodal Model by Applying Vision Experts for Industrial Anomaly Detection

Nov 01, 2023

Abstract: Existing industrial anomaly detection (IAD) methods predict anomaly scores for both anomaly detection and localization. However, they struggle to support multi-turn dialog and to provide detailed descriptions of anomaly regions, e.g., color, shape, and categories of industrial anomalies. Recently, large multimodal (i.e., vision and language) models (LMMs) have shown eminent perception abilities on multiple vision tasks such as image captioning, visual understanding, visual reasoning, etc., making them a competitive choice for more comprehensible anomaly detection. However, the knowledge about anomaly detection is absent in existing general LMMs, while training a specific LMM for anomaly detection requires a tremendous amount of annotated data and massive computation resources. In this paper, we propose a novel large multi-modal model by applying vision experts for industrial anomaly detection (dubbed Myriad), which leads to definite anomaly detection and high-quality anomaly description. Specifically, we adopt MiniGPT-4 as the base LMM and design an Expert Perception module to embed the prior knowledge from vision experts as tokens which are intelligible to Large Language Models (LLMs). To compensate for the errors and confusions of vision experts, we introduce a domain adapter to bridge the visual representation gaps between generic and industrial images. Furthermore, we propose a Vision Expert Instructor, which enables the Q-Former to generate IAD domain vision-language tokens according to vision expert prior. Extensive experiments on MVTec-AD and VisA benchmarks demonstrate that our proposed method not only performs favorably against state-of-the-art methods under the 1-class and few-shot settings, but also provides definite anomaly prediction along with detailed descriptions in the IAD domain.

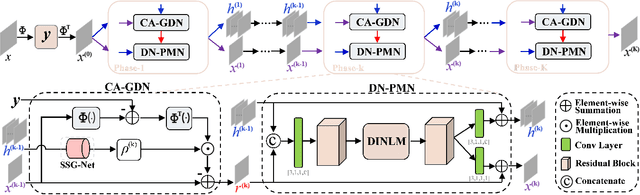

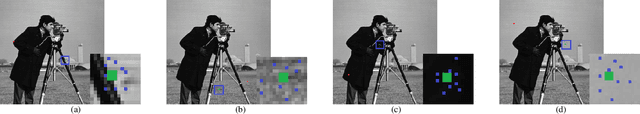

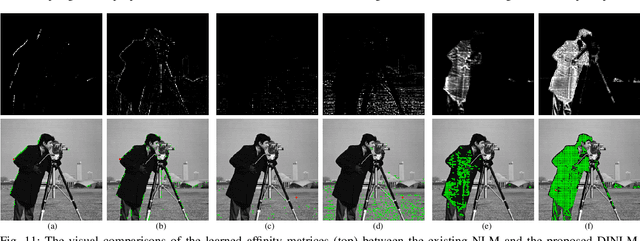

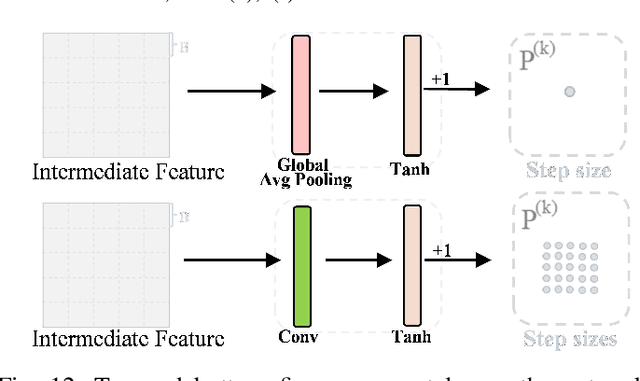

Deep Unfolding Network for Image Compressed Sensing by Content-adaptive Gradient Updating and Deformation-invariant Non-local Modeling

Oct 16, 2023

Abstract: Inspired by certain optimization solvers, the deep unfolding network (DUN) has attracted much attention in recent years for image compressed sensing (CS). However, there still exist the following two issues: 1) In existing DUNs, most hyperparameters are usually content independent, which greatly limits their adaptability for different input contents. 2) In each iteration, a plain convolutional neural network is usually adopted, which weakens the perception of wider context prior and therefore reduces the expressive ability. In this paper, inspired by the traditional Proximal Gradient Descent (PGD) algorithm, a novel DUN for image compressed sensing (dubbed DUN-CSNet) is proposed to solve the above two issues. Specifically, for the first issue, a novel content-adaptive gradient descent network is proposed, in which a well-designed step size generation sub-network is developed to dynamically allocate the corresponding step sizes for different textures of the input image by generating a content-aware step size map, realizing a content-adaptive gradient updating. For the second issue, considering the fact that many similar patches exist in an image but have undergone a deformation, a novel deformation-invariant non-local proximal mapping network is developed, which can adaptively build the long-range dependencies between the non-local patches by deformation-invariant non-local modeling, leading to a wider perception of context priors. Extensive experiments demonstrate that the proposed DUN-CSNet outperforms existing state-of-the-art CS methods by large margins.
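The classical iteration that the abstract takes as its starting point can be sketched for the problem of minimizing 0.5 * ||Ax - y||^2 + lam * ||x||_1: take a gradient step on the data term, then apply a proximal mapping. In the sketch below a hand-set per-element `step_map` stands in for the learned content-aware step size map, and soft-thresholding stands in for the learned proximal mapping network. Both substitutions, along with the function names and toy values, are assumptions for illustration and not the DUN-CSNet design.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(a: Matrix, x: Vector) -> Vector:
    """Matrix-vector product for small dense matrices."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def soft_threshold(v: float, t: float) -> float:
    """Proximal operator of t * |v| (shrinks v toward zero by t)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def pgd_step(x: Vector, a: Matrix, y: Vector,
             step_map: Vector, lam: float) -> Vector:
    """One proximal gradient step with a per-element step size."""
    residual = [r - yi for r, yi in zip(matvec(a, x), y)]
    grad = matvec(transpose(a), residual)  # gradient of 0.5 * ||Ax - y||^2
    return [soft_threshold(xi - si * gi, si * lam)
            for xi, si, gi in zip(x, step_map, grad)]


# Toy problem: identity sampling matrix, different step size per element.
A = [[1.0, 0.0], [0.0, 1.0]]
y = [1.0, -0.5]
x_next = pgd_step([0.0, 0.0], A, y, step_map=[1.0, 0.5], lam=0.1)
```

A deep unfolding network repeats a step of this shape for a fixed number of stages, with the hand-set pieces replaced by trainable modules.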
