Dawei Zhang

Domain Adaptation in Agricultural Image Analysis: A Comprehensive Review from Shallow Models to Deep Learning

Jun 06, 2025

Abstract: With the increasing use of computer vision in agriculture, image analysis has become crucial for tasks like crop health monitoring and pest detection. However, significant domain shifts between source and target domains, caused by differences in environment, crop type, and data acquisition method, pose challenges. These domain gaps limit the ability of models to generalize across regions, seasons, and complex agricultural environments. This paper explores how Domain Adaptation (DA) techniques can address these challenges, focusing on their role in enhancing the cross-domain transferability of agricultural image analysis. DA has gained attention in agricultural vision tasks due to its potential to mitigate domain heterogeneity. The paper systematically reviews recent advances in DA for agricultural imagery, particularly its practical applications in complex agricultural environments. We examine the key drivers for adopting DA in agriculture, such as limited labeled data, weak model transferability, and dynamic environmental conditions. We also discuss its use in crop health monitoring, pest detection, and fruit recognition, highlighting improvements in performance across regions and seasons. The paper categorizes DA methods into shallow and deep learning models, with further divisions into supervised, semi-supervised, and unsupervised approaches. Special focus is given to adversarial learning-based DA methods, which have shown great promise in challenging agricultural scenarios. Finally, we review key public datasets in agricultural imagery, analyzing their value and limitations in DA research. This review provides a comprehensive framework for researchers, offering insights into current research gaps and supporting the advancement of DA methods in agricultural image analysis.

Diffusion Model in Hyperspectral Image Processing and Analysis: A Review

May 16, 2025

Abstract: Hyperspectral image processing and analysis has important application value in remote sensing, agriculture, and environmental monitoring, but high dimensionality, data redundancy, and noise interference make the analysis challenging. Traditional models are limited in dealing with such complex data and struggle to meet the increasing demand for analysis. In recent years, the diffusion model, an emerging generative model, has shown unique advantages in hyperspectral image processing. By simulating the diffusion process of data over time, the diffusion model can effectively process high-dimensional data, generate high-quality samples, and perform well in denoising and data enhancement. In this paper, we review recent research advances in diffusion modeling for hyperspectral image processing and analysis, and discuss its applications in tasks such as high-dimensional data processing, noise removal, classification, and anomaly detection. The performance of diffusion-based models on image processing is compared and the challenges are summarized. It is shown that the diffusion model can significantly improve the accuracy and efficiency of hyperspectral image analysis, providing a new direction for future research.
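As an illustration of what "simulating the diffusion process of data over time" can look like, the sketch below implements the forward noising step of the widely used DDPM formulation, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. It is not taken from the review: the linear β schedule, the function names, and the toy five-band "spectrum" are all assumptions chosen for illustration.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances (an assumed, commonly used schedule)."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """Running product of (1 - beta_t), usually written alpha-bar_t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def forward_noise(x0, alpha_bar_t, rng):
    """Sample x_t given x_0: scale the signal and add Gaussian noise."""
    signal_scale = math.sqrt(alpha_bar_t)
    noise_scale = math.sqrt(1.0 - alpha_bar_t)
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    spectrum = [0.12, 0.35, 0.41, 0.38, 0.22]  # hypothetical pixel spectrum
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    for t in (0, 499, 999):
        print(t, forward_noise(spectrum, alpha_bars[t], rng))
```

As t grows, ᾱ_t shrinks toward zero, so the sample is increasingly dominated by noise; a denoising network is trained to reverse these steps.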

Adversarial Attack for RGB-Event based Visual Object Tracking

Apr 19, 2025

Abstract: Visual object tracking is a crucial research topic in the fields of computer vision and multi-modal fusion. Among various approaches, robust visual tracking that combines RGB frames with Event streams has attracted increasing attention from researchers. While striving for high accuracy and efficiency in tracking, it is also important to explore how to effectively conduct adversarial attacks and defenses on RGB-Event stream tracking algorithms, yet research in this area remains relatively scarce. To bridge this gap, we propose a cross-modal adversarial attack algorithm for RGB-Event visual tracking. Event streams have diverse representations, and because Event voxels and frames are the most commonly used, this paper focuses on these two representations for an in-depth study. Specifically, for RGB-Event voxel based tracking, we first optimize the perturbation by adversarial loss to generate RGB frame adversarial examples. For discrete Event voxel representations, we propose a two-step attack strategy: we first inject Event voxels into the target region as initialized adversarial examples, then conduct a gradient-guided optimization by perturbing the spatial location of the Event voxels. For RGB-Event frame based tracking, we optimize the cross-modal universal perturbation by integrating the gradient information from multimodal data. We evaluate the proposed attack approach on three widely used RGB-Event tracking datasets, i.e., COESOT, FE108, and VisEvent. Extensive experiments show that our method significantly reduces the performance of the tracker across numerous datasets in both unimodal and multimodal scenarios. The source code will be released on https://github.com/Event-AHU/Adversarial_Attack_Defense

PD-SORT: Occlusion-Robust Multi-Object Tracking Using Pseudo-Depth Cues

Jan 20, 2025

Abstract: Multi-object tracking (MOT) is a rising topic in video processing technologies and has important application value in consumer electronics. Currently, tracking-by-detection (TBD) is the dominant paradigm for MOT, which performs target detection and association frame by frame. However, the association performance of TBD methods degrades in complex scenes with heavy occlusions, which hinders the application of such methods in real-world scenarios. To this end, we incorporate pseudo-depth cues to enhance the association performance and propose Pseudo-Depth SORT (PD-SORT). First, we extend the Kalman filter state vector with pseudo-depth states. Second, we introduce a novel depth volume IoU (DVIoU) by combining the conventional 2D IoU with pseudo-depth. Furthermore, we develop a quantized pseudo-depth measurement (QPDM) strategy for more robust data association. We also integrate camera motion compensation (CMC) to handle dynamic camera situations. With the above designs, PD-SORT significantly alleviates occlusion-induced ambiguous associations and achieves leading performance on DanceTrack, MOT17, and MOT20. The improvement is especially pronounced on DanceTrack, where objects show complex motions, similar appearances, and frequent occlusions. The code is available at https://github.com/Wangyc2000/PD_SORT.

Cross-Dataset Generalization in Deep Learning

Oct 15, 2024

Abstract: Deep learning has been extensively used in various fields, such as phase imaging, 3D imaging reconstruction, phase unwrapping, and laser speckle reduction, particularly for complex problems that lack analytic models. Its data-driven nature allows for implicit construction of mathematical relationships within the network through training with abundant data. However, a critical challenge in practical applications is the generalization issue, where a network trained on one dataset struggles to recognize an unknown target from a different dataset. In this study, we investigate imaging through scattering media and discover that the mathematical relationship learned by the network is an approximation dependent on the training dataset, rather than the true mapping relationship of the model. We demonstrate that enhancing the diversity of the training dataset can improve this approximation, thereby achieving generalization across different datasets, as the mapping relationship of a linear physical model is independent of inputs. This study elucidates the nature of generalization across different datasets and provides insights into the design of training datasets to ultimately address the generalization issue in various deep learning-based applications.

Taylor-Sensus Network: Embracing Noise to Enlighten Uncertainty for Scientific Data

Sep 12, 2024

Abstract: Uncertainty estimation is crucial when applying machine learning to scientific data. Current uncertainty estimation methods mainly focus on the model's inherent uncertainty, while neglecting the explicit modeling of noise in the data. Furthermore, noise estimation methods typically rely on temporal or spatial dependencies, which can pose a significant challenge in structured scientific data where such dependencies among samples are often absent. To address these challenges in scientific research, we propose the Taylor-Sensus Network (TSNet). TSNet uses a Taylor series expansion to model complex, heteroscedastic noise and introduces a deep Taylor block for noise-distribution awareness. TSNet includes a noise-aware contrastive learning module and a data density perception module for aleatoric and epistemic uncertainty. Additionally, an uncertainty combination operator is used to integrate these uncertainties, and the network is trained using a novel heteroscedastic mean square error loss. TSNet demonstrates superior performance over mainstream and state-of-the-art methods in experiments, highlighting its potential in scientific research and noise resistance. TSNet will be open-sourced to support the "AI for Science" community.

Safe and Stable Teleoperation of Quadrotor UAVs under Haptic Shared Autonomy

Mar 22, 2024

Abstract: We present a novel approach that aims to address both safety and stability of a haptic teleoperation system within a framework of Haptic Shared Autonomy (HSA). We use Control Barrier Functions (CBFs) to generate the control input that follows the user's input as closely as possible while guaranteeing safety. In the context of stability of the human-in-the-loop system, we limit the force feedback perceived by the user via a small $L_2$-gain, which is achieved by limiting the control and the force feedback via a differential constraint. Specifically, with the property of HSA, we propose two pathways to design the control and the force feedback: Sequential Control Force (SCF) and Joint Control Force (JCF). Both designs can achieve safety and stability but with different responses to the user's commands. We conducted experimental simulations to evaluate and investigate the properties of the designed methods. We also tested the proposed method on a physical quadrotor UAV and a haptic interface.

Bridging the Semantic-Numerical Gap: A Numerical Reasoning Method of Cross-modal Knowledge Graph for Material Property Prediction

Dec 15, 2023

Abstract: Using machine learning (ML) techniques to predict material properties is a crucial research topic. These properties depend on numerical data and semantic factors. Due to the limitations of small-sample datasets, existing methods typically adopt ML algorithms to regress numerical properties or transfer other pre-trained knowledge graphs (KGs) to the material. However, these methods cannot simultaneously handle semantic and numerical information. In this paper, we propose a numerical reasoning method for material KGs (NR-KG), which constructs a cross-modal KG using semantic nodes and numerical proxy nodes. It captures both types of information by projecting the KG into a canonical KG and utilizes a graph neural network to predict material properties. In this process, a novel projection prediction loss is proposed to extract semantic features from numerical information. NR-KG facilitates end-to-end processing of cross-modal data, mining relationships and cross-modal information in small-sample datasets, and fully utilizes valuable experimental data to enhance material prediction. We further propose two new High-Entropy Alloys (HEA) property datasets with semantic descriptions. NR-KG outperforms state-of-the-art (SOTA) methods, achieving relative improvements of 25.9% and 16.1% on two material datasets. NR-KG also surpasses SOTA methods on two public physical chemistry molecular datasets, showing improvements of 22.2% and 54.3%, highlighting its potential application and generalizability. We hope the proposed datasets, algorithms, and pre-trained models can facilitate the communities of KG and AI for materials.

Adaptive Pseudo-Siamese Policy Network for Temporal Knowledge Prediction

Apr 26, 2022

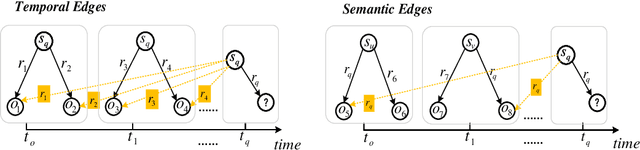

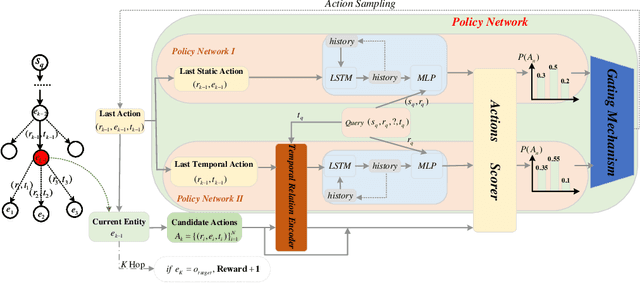

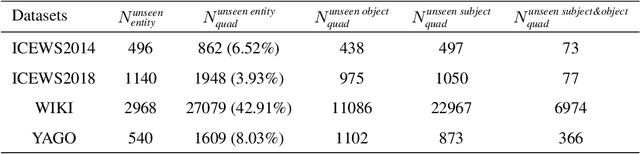

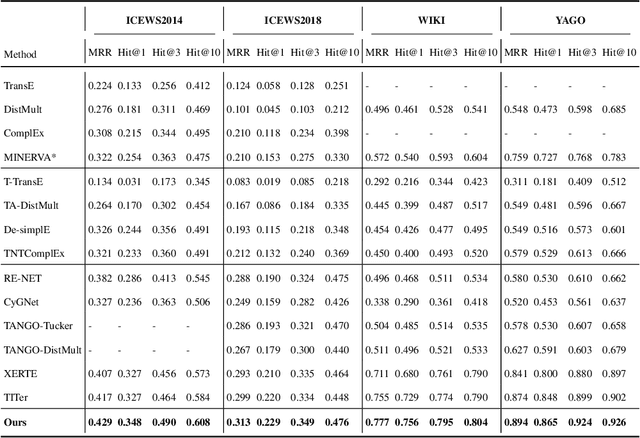

Abstract: Temporal knowledge prediction, a crucial task for event early warning, has gained increasing attention in recent years. It aims to predict future facts by using relevant historical facts on temporal knowledge graphs. There are two main difficulties in this prediction task. First, from the perspective of historical facts, how to model the evolutionary patterns of the facts so that the query is predicted accurately. Second, from the query perspective, how to handle the two cases where the query contains seen and unseen entities in a unified framework. Driven by these two problems, we propose a novel adaptive pseudo-siamese policy network for temporal knowledge prediction based on reinforcement learning. Specifically, we design the policy network in our model as a pseudo-siamese policy network that consists of two sub-policy networks. In sub-policy network I, the agent searches for the answer to the query along entity-relation paths to capture the static evolutionary patterns. In sub-policy network II, the agent searches for the answer to the query along relation-time paths to deal with unseen entities. Moreover, we develop a temporal relation encoder to capture the temporal evolutionary patterns. Finally, we design a gating mechanism to adaptively integrate the results of the two sub-policy networks to help the agent focus on the destination answer. To assess our model, we conduct link prediction on four benchmark datasets. The experimental results demonstrate that our method achieves competitive performance compared with existing methods.

MixKG: Mixing for harder negative samples in knowledge graph

Feb 19, 2022

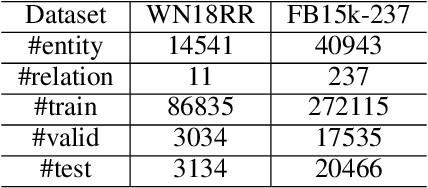

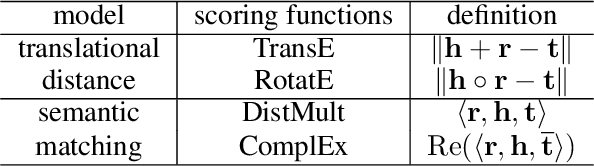

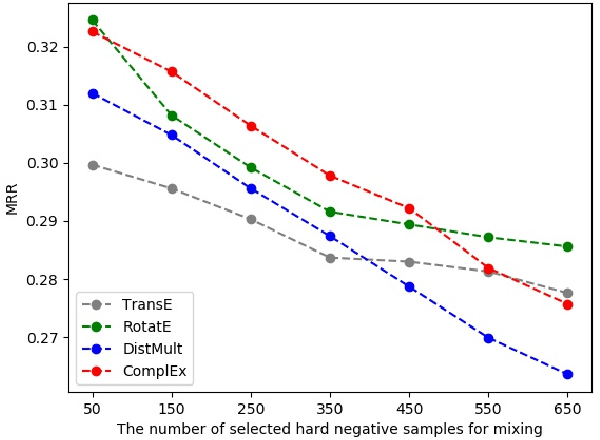

Abstract: Knowledge graph embedding (KGE) aims to represent entities and relations as low-dimensional vectors for many real-world applications. The representations of entities and relations are learned by contrasting positive and negative triplets. Thus, high-quality negative samples are extremely important in KGE. However, present KGE models either rely on simple negative sampling methods, which make it difficult to obtain informative negative triplets, or employ complex adversarial methods, which require more training data and strategies. In addition, these methods can only construct negative triplets using existing entities, which limits the potential to explore harder negative triplets. To address these issues, we adopt a mixing operation to generate harder negative samples for knowledge graphs and introduce an inexpensive but effective method called MixKG. Technically, MixKG first proposes two kinds of criteria to filter hard negative triplets among the sampled negatives: one based on the scoring function and one based on correct entity similarity. Then, MixKG synthesizes harder negative samples via convex combinations of the paired selected hard negatives. Experiments on two public datasets and four classical KGE methods show that MixKG is superior to previous negative sampling algorithms.
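The two steps named in the abstract, filtering hard negatives and then mixing pairs of them by convex combination, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the "higher score means harder" convention, and the toy two-dimensional embeddings are assumptions.

```python
def select_hard_negatives(candidates, score_fn, k):
    """Keep the k candidates the model scores highest.

    Assumption: a higher score means the negative looks more plausible
    to the model and is therefore harder.
    """
    return sorted(candidates, key=score_fn, reverse=True)[:k]


def mix_hard_negatives(neg_a, neg_b, lam):
    """Convex combination lam * neg_a + (1 - lam) * neg_b of two embeddings."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    if len(neg_a) != len(neg_b):
        raise ValueError("embeddings must have the same dimension")
    return [lam * a + (1.0 - lam) * b for a, b in zip(neg_a, neg_b)]


if __name__ == "__main__":
    # Hypothetical negative tail-entity embeddings and a toy scoring function.
    sampled = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
    hard = select_hard_negatives(sampled, score_fn=sum, k=2)
    print(mix_hard_negatives(hard[0], hard[1], lam=0.5))
```

Because the mixed vector lies between two existing entity embeddings, it need not correspond to any real entity, which is how mixing can reach negatives that sampling from existing entities cannot.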
