David Nguyen

LLMs Are Not Yet Ready for Deepfake Image Detection

Jun 12, 2025

Abstract: The growing sophistication of deepfakes presents substantial challenges to the integrity of media and the preservation of public trust. Concurrently, vision-language models (VLMs), large language models enhanced with visual reasoning capabilities, have emerged as promising tools across various domains, sparking interest in their applicability to deepfake detection. This study conducts a structured zero-shot evaluation of four prominent VLMs: ChatGPT, Claude, Gemini, and Grok, focusing on three primary deepfake types: faceswap, reenactment, and synthetic generation. Leveraging a meticulously assembled benchmark comprising authentic and manipulated images from diverse sources, we evaluate each model's classification accuracy and reasoning depth. Our analysis indicates that while VLMs can produce coherent explanations and detect surface-level anomalies, they are not yet dependable as standalone detection systems. We highlight critical failure modes, such as an overemphasis on stylistic elements and vulnerability to misleading visual patterns like vintage aesthetics. Nevertheless, VLMs exhibit strengths in interpretability and contextual analysis, suggesting their potential to augment human expertise in forensic workflows. These insights imply that although general-purpose models currently lack the reliability needed for autonomous deepfake detection, they hold promise as integral components in hybrid or human-in-the-loop detection frameworks.

Future of Work with AI Agents: Auditing Automation and Augmentation Potential across the U.S. Workforce

Jun 06, 2025

Abstract: The rapid rise of compound AI systems (a.k.a. AI agents) is reshaping the labor market, raising concerns about job displacement, diminished human agency, and overreliance on automation. Yet, we lack a systematic understanding of the evolving landscape. In this paper, we address this gap by introducing a novel auditing framework to assess which occupational tasks workers want AI agents to automate or augment, and how those desires align with the current technological capabilities. Our framework features an audio-enhanced mini-interview to capture nuanced worker desires and introduces the Human Agency Scale (HAS) as a shared language to quantify the preferred level of human involvement. Using this framework, we construct the WORKBank database, building on the U.S. Department of Labor's O*NET database, to capture preferences from 1,500 domain workers and capability assessments from AI experts covering over 844 tasks spanning 104 occupations. Jointly considering the desire and technological capability divides tasks in WORKBank into four zones: Automation "Green Light" Zone, Automation "Red Light" Zone, R&D Opportunity Zone, and Low Priority Zone. This highlights critical mismatches and opportunities for AI agent development. Moving beyond a simple automate-or-not dichotomy, our results reveal diverse HAS profiles across occupations, reflecting heterogeneous expectations for human involvement. Moreover, our study offers early signals of how AI agent integration may reshape the core human competencies, shifting from information-focused skills to interpersonal ones. These findings underscore the importance of aligning AI agent development with human desires and preparing workers for evolving workplace dynamics.
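The four-zone split described in this abstract can be illustrated with a minimal sketch. The abstract does not spell out how the zones map onto desire and capability, so the mapping below is an assumed reading: high desire with high capability is "Green Light", high capability with low desire is "Red Light", high desire with low capability is "R&D Opportunity", and the rest is "Low Priority". The names `TaskRating`, `zone`, the 1-5 rating scale, and the `THRESHOLD` value are all hypothetical and not taken from the paper.

```python
from dataclasses import dataclass

# Hypothetical cut-off: ratings are assumed to lie on a 1-5 scale,
# with 3.0 used as the boundary between "low" and "high".
THRESHOLD = 3.0


@dataclass(frozen=True)
class TaskRating:
    name: str
    worker_desire: float   # assumed: average worker desire for automation
    ai_capability: float   # assumed: average expert-rated AI capability


def zone(task: TaskRating, threshold: float = THRESHOLD) -> str:
    """Assign a task to one of the four zones (assumed quadrant mapping)."""
    high_desire = task.worker_desire >= threshold
    high_capability = task.ai_capability >= threshold
    if high_desire and high_capability:
        return 'Automation "Green Light" Zone'
    if high_capability:
        return 'Automation "Red Light" Zone'
    if high_desire:
        return "R&D Opportunity Zone"
    return "Low Priority Zone"
```

Under these assumptions, a task that workers want automated but that current systems handle poorly would land in the R&D Opportunity Zone.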

High Speed Robotic Table Tennis Swinging Using Lightweight Hardware with Model Predictive Control

May 02, 2025

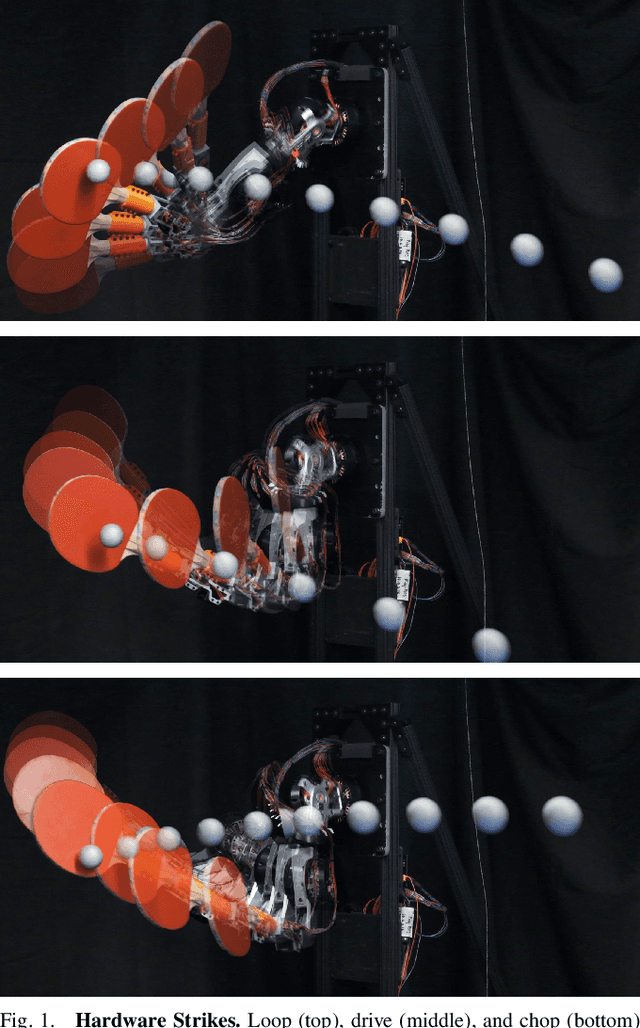

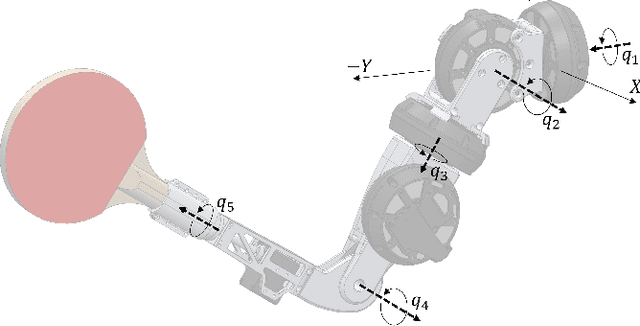

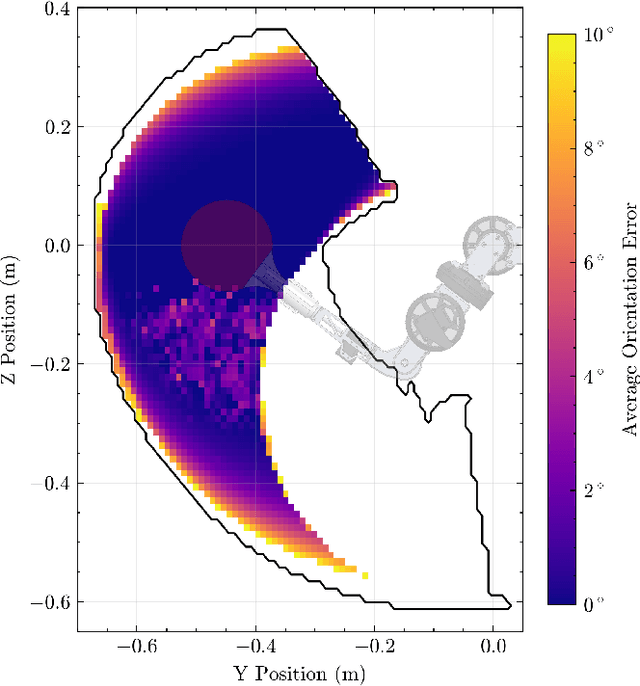

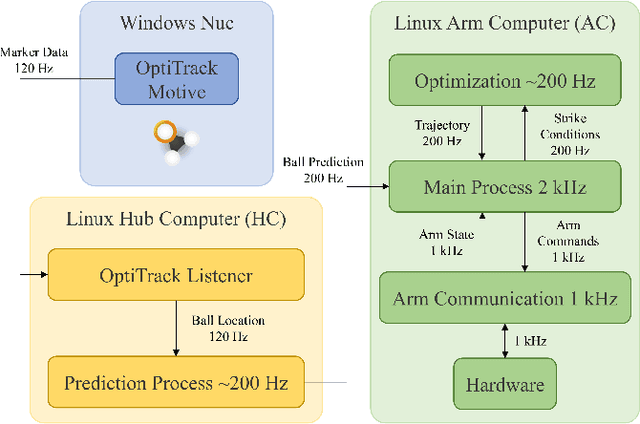

Abstract: We present a robotic table tennis platform that achieves a variety of hit styles and ball-spins with high precision, power, and consistency. This is enabled by a custom lightweight, high-torque, low-rotor-inertia, five-degree-of-freedom arm capable of high acceleration. To generate swing trajectories, we formulate an optimal control problem (OCP) that constrains the state of the paddle at the time of the strike. The terminal position is given by a predicted ball trajectory, and the terminal orientation and velocity of the paddle are chosen to match various possible styles of hits: loops (topspin), drives (flat), and chops (backspin). Finally, we construct a fixed-horizon model predictive controller (MPC) around this OCP to allow the hardware to quickly react to changes in the predicted ball trajectory. We validate on hardware that the system is capable of hitting balls with an average exit velocity of 11 m/s at an 88% success rate across the three swing types.

Quantum Down Sampling Filter for Variational Auto-encoder

Jan 09, 2025

Abstract: Variational Autoencoders (VAEs) are essential tools in generative modeling and image reconstruction, with their performance heavily influenced by the encoder-decoder architecture. This study aims to improve the quality of reconstructed images by enhancing their resolution and preserving finer details, particularly when working with low-resolution inputs (16x16 pixels), where traditional VAEs often yield blurred or inaccurate results. To address this, we propose a hybrid model that combines quantum computing techniques in the VAE encoder with convolutional neural networks (CNNs) in the decoder. By upscaling the resolution from 16x16 to 32x32 during the encoding process, our approach evaluates how the model reconstructs images with enhanced resolution while maintaining key features and structures. This method tests the model's robustness in handling image reconstruction and its ability to preserve essential details despite training on lower-resolution data. We evaluate our proposed down sampling filter for Quantum VAE (Q-VAE) on the MNIST and USPS datasets and compare it with classical VAEs (C-VAE) and a variant called Classical Direct Passing VAE (CDP-VAE), which uses windowing pooling filters in the encoding process. Performance is assessed using metrics such as the Fréchet Inception Distance (FID) and Mean Squared Error (MSE), which measure the fidelity of reconstructed images. Our results demonstrate that the Q-VAE consistently outperforms both the C-VAE and CDP-VAE, achieving significantly lower FID and MSE scores. Additionally, CDP-VAE yields better performance than C-VAE. These findings highlight the potential of quantum-enhanced VAEs to improve image reconstruction quality by enhancing resolution and preserving essential features, offering a promising direction for future applications in computer vision and synthetic data generation.
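Of the two metrics named in this abstract, MSE is simple enough to show directly. The following is a minimal sketch of the standard per-pixel definition, assuming images are given as equally sized nested lists of intensities; the function name `mse` is illustrative, and this is not the authors' evaluation code. FID is omitted because it depends on a pre-trained Inception network.

```python
def mse(original, reconstructed):
    """Mean squared error between two images given as nested lists of pixels."""
    flat_original = [pixel for row in original for pixel in row]
    flat_reconstructed = [pixel for row in reconstructed for pixel in row]
    if len(flat_original) != len(flat_reconstructed):
        raise ValueError("images must contain the same number of pixels")
    if not flat_original:
        raise ValueError("images must not be empty")
    squared_errors = [
        (o - r) ** 2 for o, r in zip(flat_original, flat_reconstructed)
    ]
    return sum(squared_errors) / len(squared_errors)
```

A lower value means the reconstruction is closer to the reference image pixel by pixel; identical images give zero.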

ThreatModeling-LLM: Automating Threat Modeling using Large Language Models for Banking System

Nov 26, 2024

Abstract: Threat modeling is a crucial component of cybersecurity, particularly for industries such as banking, where the security of financial data is paramount. Traditional threat modeling approaches require expert intervention and manual effort, often leading to inefficiencies and human error. The advent of Large Language Models (LLMs) offers a promising avenue for automating these processes, enhancing both efficiency and efficacy. However, this transition is not straightforward due to three main challenges: (1) the lack of publicly available, domain-specific datasets, (2) the need for tailored models to handle complex banking system architectures, and (3) the requirement for real-time, adaptive mitigation strategies that align with compliance standards like NIST 800-53. In this paper, we introduce ThreatModeling-LLM, a novel and adaptable framework that automates threat modeling for banking systems using LLMs. ThreatModeling-LLM operates in three stages: (1) dataset creation, (2) prompt engineering, and (3) model fine-tuning. We first generate a benchmark dataset using the Microsoft Threat Modeling Tool (TMT). Then, we apply Chain of Thought (CoT) and Optimization by PROmpting (OPRO) on the pre-trained LLMs to optimize the initial prompt. Lastly, we fine-tune the LLM using Low-Rank Adaptation (LoRA) based on the benchmark dataset and the optimized prompt to improve the threat identification and mitigation generation capabilities of pre-trained LLMs.
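For readers unfamiliar with LoRA, the core idea can be sketched in a few lines. In its usual formulation, the pre-trained weight matrix W stays frozen and training only learns two small matrices B and A, giving an effective weight W + (alpha / r) * B A, where r is the rank. The code below is a pure-Python illustration of that formula only; the function names and the toy matrices are hypothetical, and it says nothing about how the authors configured their fine-tuning.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_effective_weight(w, b, a, alpha):
    """Return W + (alpha / r) * B @ A.

    w: frozen weight, shape (out, in)
    b: trainable factor, shape (out, r)
    a: trainable factor, shape (r, in)
    """
    rank = len(a)
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]
```

With rank r much smaller than the matrix dimensions, B and A together hold far fewer parameters than W, which is why this style of fine-tuning is cheaper than updating W directly.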

Team Northeastern's Approach to ANA XPRIZE Avatar Final Testing: A Holistic Approach to Telepresence and Lessons Learned

Mar 08, 2023

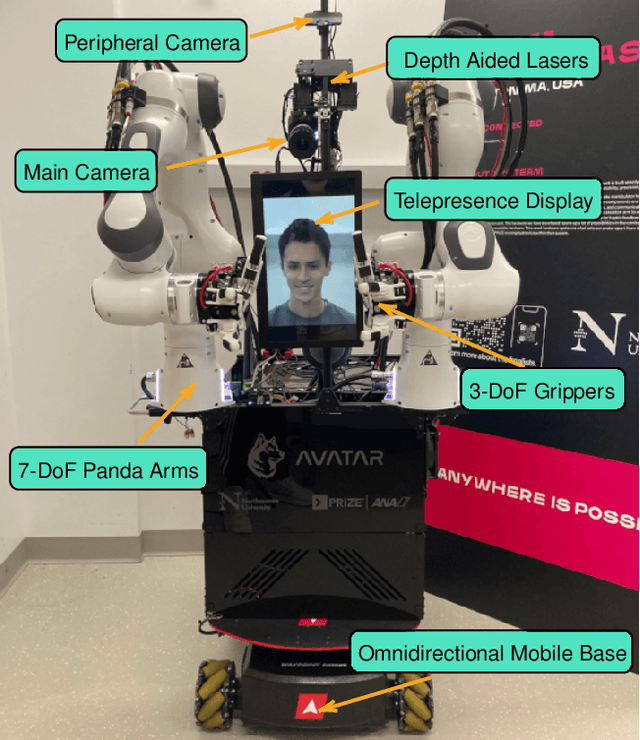

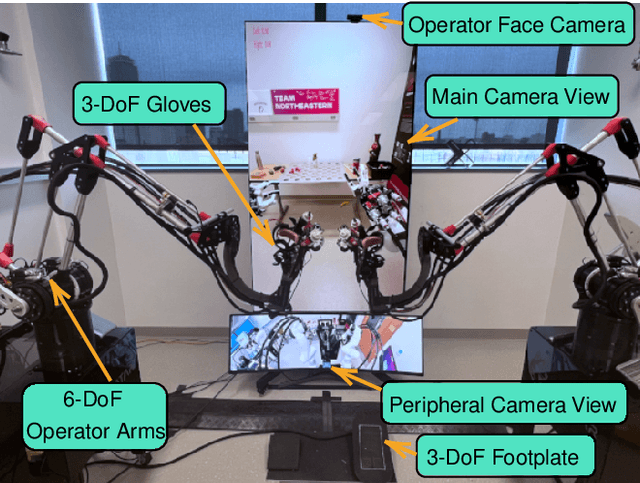

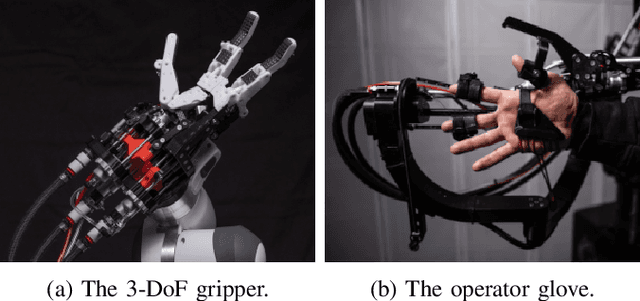

Abstract: This paper reports on Team Northeastern's Avatar system for telepresence, and our holistic approach to meet the ANA Avatar XPRIZE Final testing task requirements. The system features a dual-arm configuration with a hydraulically actuated glove-gripper pair for haptic force feedback. Our proposed Avatar system was evaluated in the ANA Avatar XPRIZE Finals and completed all 10 tasks, scored 14.5 points out of 15.0, and received the 3rd Place Award. We provide the details of improvements over our first generation Avatar, covering manipulation, perception, locomotion, power, network, and controller design. We also extensively discuss the major lessons learned during our participation in the competition.

Deception for Cyber Defence: Challenges and Opportunities

Aug 15, 2022

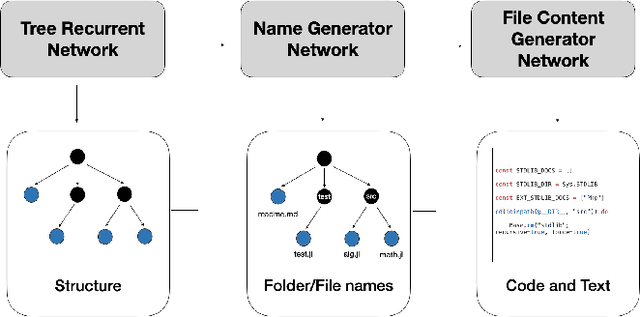

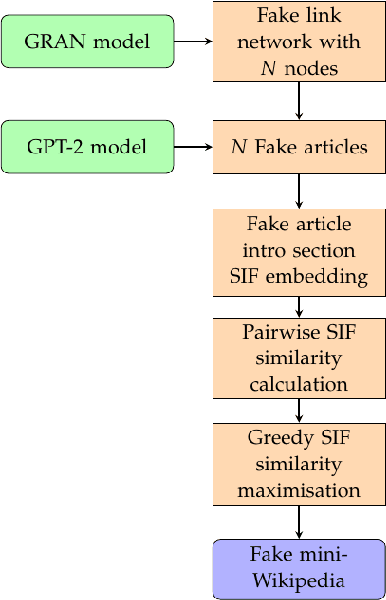

Abstract: Deception is rapidly growing as an important tool for cyber defence, complementing existing perimeter security measures to rapidly detect breaches and data theft. One of the factors limiting the use of deception has been the cost of generating realistic artefacts by hand. Recent advances in Machine Learning have, however, created opportunities for scalable, automated generation of realistic deceptions. This vision paper describes the opportunities and challenges involved in developing models to mimic many common elements of the IT stack for deception effects.

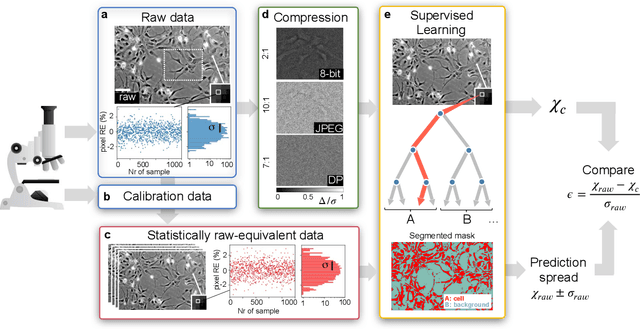

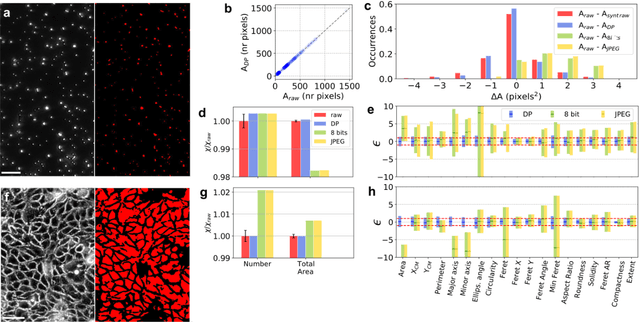

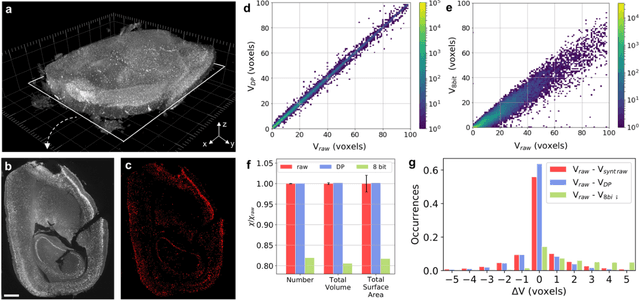

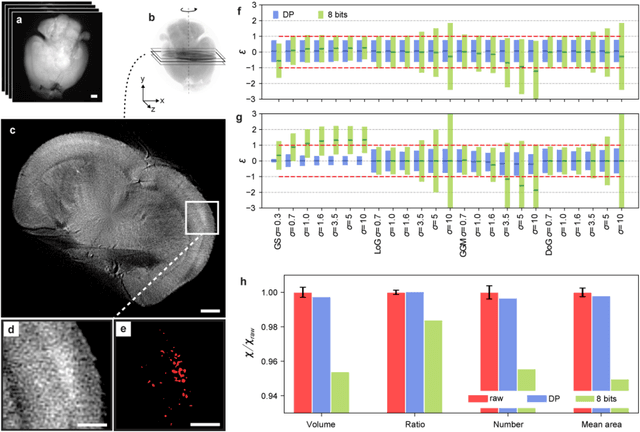

Quantifying the effect of image compression on supervised learning applications in optical microscopy

Sep 26, 2020

Abstract: The impressive growth of data throughput in optical microscopy has triggered a widespread use of supervised learning (SL) models running on compressed image datasets for efficient automated analysis. However, since lossy image compression risks producing unpredictable artifacts, quantifying the effect of data compression on SL applications is of pivotal importance to assess their reliability, especially for clinical use. We propose an experimental method to evaluate the tolerability of image compression distortions in 2D and 3D cell segmentation SL tasks: predictions on compressed data are compared to the raw predictive uncertainty, which is numerically estimated from the raw noise statistics measured through sensor calibration. We show that predictions on object- and image-specific segmentation parameters can be altered by up to 15% and more than 10 standard deviations after 16-to-8-bit downsampling or JPEG compression. In contrast, a recently developed lossless compression algorithm provides a prediction spread which is statistically equivalent to that stemming from raw noise, while providing a compression ratio of up to 10:1. By setting a lower bound to the SL predictive uncertainty, our technique can be generalized to validate a variety of data analysis pipelines in SL-assisted fields.
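The comparison described in this abstract, a prediction on compressed data measured against the spread of predictions caused by raw sensor noise, can be sketched as follows. This is an illustration under stated assumptions, not the authors' pipeline: it assumes the raw predictive uncertainty is available as a list of predictions obtained from several noise realizations of the same raw image, and the function names are hypothetical.

```python
from statistics import mean, stdev


def deviation_in_sigmas(raw_predictions, compressed_prediction):
    """Distance of the compressed-data prediction from the raw mean,
    expressed in units of the raw predictive standard deviation."""
    sigma = stdev(raw_predictions)  # sample standard deviation
    if sigma == 0:
        raise ValueError("raw predictions show no spread")
    return abs(compressed_prediction - mean(raw_predictions)) / sigma


def relative_change(raw_predictions, compressed_prediction):
    """Fractional change of the compressed-data prediction versus the raw mean."""
    raw_mean = mean(raw_predictions)
    if raw_mean == 0:
        raise ValueError("raw mean is zero; relative change is undefined")
    return abs(compressed_prediction - raw_mean) / abs(raw_mean)
```

For example, with hypothetical raw predictions of 98, 100, and 102 for a segmented cell area, a compressed-data prediction of 110 is a 10% change and lies five raw standard deviations from the mean, which would suggest the compression distortion is not tolerable for that parameter.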

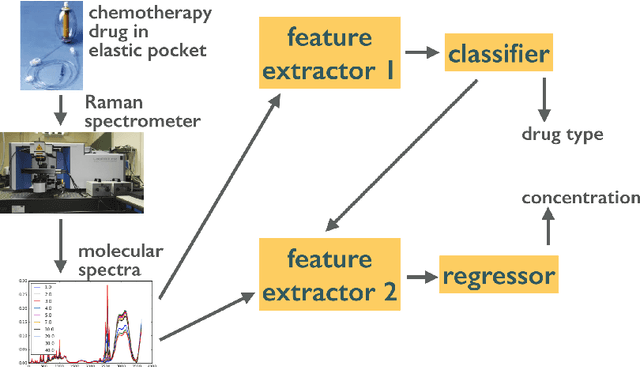

Machine learning for classification and quantification of monoclonal antibody preparations for cancer therapy

May 31, 2017

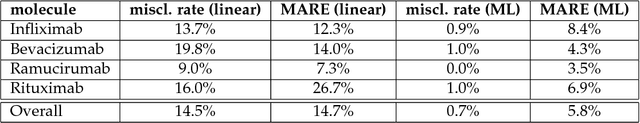

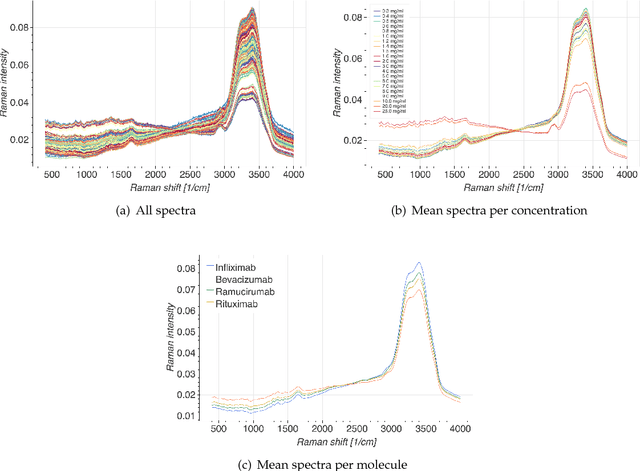

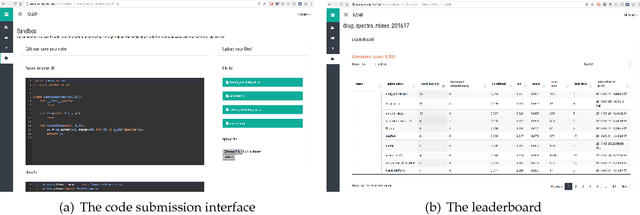

Abstract: Monoclonal antibodies constitute one of the most important strategies to treat patients suffering from cancers such as hematological malignancies and solid tumors. To guarantee the quality of preparations made in hospital, a quality control method has to be developed. The aim of this study was to explore a noninvasive, nondestructive, and rapid analytical method to ensure the quality of the final preparation without causing any delay in the process. We analyzed four mAbs (Infliximab, Bevacizumab, Ramucirumab and Rituximab) diluted at therapeutic concentration in 0.9% sodium chloride using Raman spectroscopy. To reduce the prediction errors obtained with traditional chemometric data analysis, we explored a data-driven approach using statistical machine learning methods where preprocessing and predictive models are jointly optimized. We prepared a data analytics workflow and submitted the problem to a collaborative data challenge platform called Rapid Analytics and Model Prototyping (RAMP). This allowed us to use solutions from about 300 data scientists during five days of collaborative work. The prediction of the four mAbs samples was considerably improved, with a misclassification rate and a mean error rate of 0.8% and 4%, respectively.