Christoph Clausen

Data Models for Dataset Drift Controls in Machine Learning With Images

Nov 04, 2022

Abstract: Camera images are ubiquitous in machine learning research. They also play a central role in the delivery of important services spanning medicine and environmental surveying. However, the application of machine learning models in these domains has been limited because of robustness concerns. A primary failure mode is a drop in performance caused by differences between the training and deployment data. While there are methods to prospectively validate the robustness of machine learning models to such dataset drifts, existing approaches do not account for explicit models of the primary object of interest: the data. This makes it difficult to create physically faithful drift test cases or to provide specifications of data models that should be avoided when deploying a machine learning model. In this study, we demonstrate how these shortcomings can be overcome by pairing machine learning robustness validation with physical optics. We examine the role raw sensor data and differentiable data models can play in controlling performance risks related to image dataset drift. The findings are distilled into three applications. First, drift synthesis enables the controlled generation of physically faithful drift test cases. The experiments presented here show that the average decrease in model performance is four to ten times less severe than under post-hoc augmentation testing. Second, the gradient connection between task and data models allows for drift forensics that can be used to specify performance-sensitive data models which should be avoided during deployment of a machine learning model. Third, drift adjustment opens up the possibility of adjusting the processing in the face of drift. This can speed up and stabilize classifier training, with a margin of up to 20% in validation accuracy. A guide to access the open code and datasets is available at https://github.com/aiaudit-org/raw2logit.
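To make the idea of drift synthesis concrete, the following is a minimal sketch of generating drift test cases by varying the parameters of an explicit data model applied to raw sensor data. The function names (`process_raw`, `synthesize_drift`), the three-step toy pipeline (black-level subtraction, gain, gamma), and the parameter values are all illustrative assumptions; they are not taken from the paper or from the raw2logit repository.

```python
import numpy as np

def process_raw(raw, black_level=0.0, gain=1.0, gamma=2.2):
    """Toy data model (assumed for illustration): maps raw intensities
    in [0, 1] to a processed image via black-level subtraction, gain,
    and gamma correction."""
    x = np.clip(raw - black_level, 0.0, None)   # remove sensor offset
    x = np.clip(x * gain, 0.0, 1.0)             # apply gain, saturate at 1
    return x ** (1.0 / gamma)                   # gamma correction

def synthesize_drift(raw, param_grid):
    """Return one processed variant of `raw` per parameter setting."""
    return [process_raw(raw, **params) for params in param_grid]

# Hypothetical raw frame and a small grid of data-model settings.
rng = np.random.default_rng(0)
raw = rng.uniform(0.0, 1.0, size=(8, 8))
grid = [{"gain": g, "gamma": gm} for g in (0.8, 1.0, 1.2) for gm in (1.8, 2.2)]
variants = synthesize_drift(raw, grid)
```

Each entry of `variants` is the same scene rendered under a different data model, so a task model can be evaluated on all of them to probe its sensitivity to processing drift. A differentiable version of such a pipeline, written in an autodiff framework, would additionally allow gradients of the task loss with respect to the data-model parameters.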

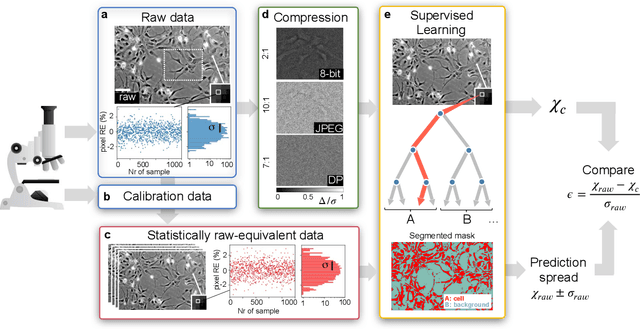

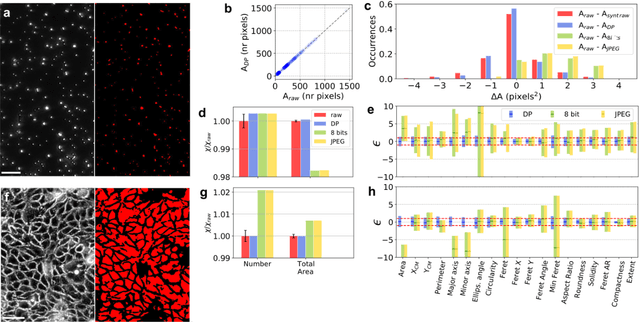

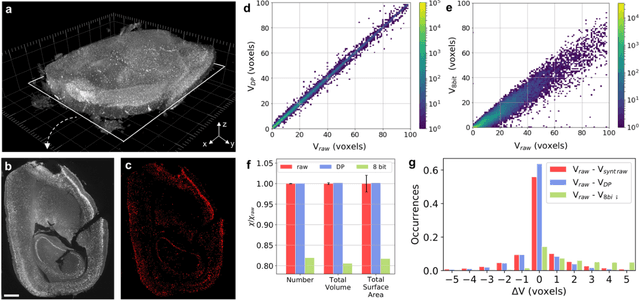

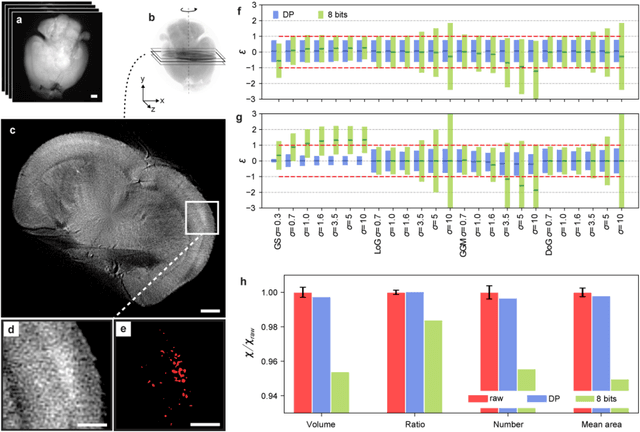

Quantifying the effect of image compression on supervised learning applications in optical microscopy

Sep 26, 2020

Abstract: The impressive growth of data throughput in optical microscopy has triggered widespread use of supervised learning (SL) models running on compressed image datasets for efficient automated analysis. However, since lossy image compression risks producing unpredictable artifacts, quantifying the effect of data compression on SL applications is of pivotal importance for assessing their reliability, especially for clinical use. We propose an experimental method to evaluate the tolerability of image compression distortions in 2D and 3D cell segmentation SL tasks: predictions on compressed data are compared to the raw predictive uncertainty, which is numerically estimated from the raw noise statistics measured through sensor calibration. We show that predictions on object- and image-specific segmentation parameters can be altered by up to 15% and more than 10 standard deviations after 16-to-8-bit downsampling or JPEG compression. In contrast, a recently developed lossless compression algorithm provides a prediction spread which is statistically equivalent to that stemming from raw noise, while providing a compression ratio of up to 10:1. By setting a lower bound on the SL predictive uncertainty, our technique can be generalized to validate a variety of data analysis pipelines in SL-assisted fields.
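The comparison described in the abstract can be sketched as follows: resample the raw image under a noise model, collect the resulting spread of predictions, and express the shift caused by compression in units of that spread. This is only a rough illustration under stated assumptions. The constant-variance Gaussian noise, the thresholding `toy_predict` stand-in for a segmentation model, and all function names are hypothetical; the paper estimates noise statistics from sensor calibration, which this sketch does not reproduce.

```python
import numpy as np

def raw_prediction_spread(raw, predict, noise_std, n_draws=200, seed=0):
    """Estimate mean and standard deviation of a scalar prediction
    under resampled noise (assumed Gaussian with constant std)."""
    rng = np.random.default_rng(seed)
    preds = np.array([
        predict(raw + rng.normal(0.0, noise_std, raw.shape))
        for _ in range(n_draws)
    ])
    return preds.mean(), preds.std(ddof=1)

def deviation_in_sigmas(pred_compressed, mean_raw, std_raw):
    """Shift of the compressed-data prediction, in raw-noise std units."""
    return abs(pred_compressed - mean_raw) / std_raw

def toy_predict(img, threshold=0.5):
    """Hypothetical stand-in for a segmentation model: segmented area."""
    return float((img > threshold).sum())

# Hypothetical raw frame in [0, 1] and a coarse 8-bit quantization of it.
rng = np.random.default_rng(1)
raw = rng.uniform(0.0, 1.0, size=(32, 32))
compressed = np.round(raw * 255.0) / 255.0

mean_raw, std_raw = raw_prediction_spread(raw, toy_predict, noise_std=0.01)
shift = deviation_in_sigmas(toy_predict(compressed), mean_raw, std_raw)
```

A `shift` well above the spread expected from noise alone would indicate that compression changes the prediction more than the sensor's own uncertainty does, which is the tolerability criterion the abstract describes.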
