Taşkın Padır

Forest Biomass Mapping with Terrestrial Hyperspectral Imaging for Wildfire Risk Monitoring

Nov 25, 2024

Abstract: With the rapid increase in wildfires in the past decade, it has become necessary to detect and predict these disasters to mitigate losses to ecosystems and human lives. In this paper, we present a novel solution, Hyper-Drive3D, consisting of snapshot hyperspectral imaging and LiDAR mounted on an Unmanned Ground Vehicle (UGV), that identifies areas inside forests at risk of becoming fuel for a forest fire. This system enables more accurate classification by analyzing the spectral signatures of forest vegetation. We conducted field trials in a controlled environment simulating forest conditions, yielding valuable insights into the system's effectiveness. Extensive data collection was also performed in a dense forest across varying environmental conditions and topographies to enhance the system's predictive capabilities for fire hazards and support a risk-informed, proactive forest management strategy. Additionally, we propose a framework for extracting moisture data from hyperspectral imagery and projecting it into 3D space.

PROSPECT: Precision Robot Spectroscopy Exploration and Characterization Tool

Mar 25, 2024

Abstract: Near Infrared (NIR) spectroscopy is widely used in industrial quality control and automation to test the purity and material quality of items. In this research, we propose a novel sensorized end effector and acquisition strategy to capture spectral signatures from objects and register them with a 3D point cloud. Our methodology first takes a 3D scan of an object generated by a time-of-flight depth camera and decomposes the object into a series of planned viewpoints covering the surface. We generate motion plans for a robot manipulator and end-effector to visit these viewpoints while maintaining a fixed distance and surface normal to ensure maximal spectral signal quality enabled by the spherical motion of the end-effector. By continuously acquiring surface reflectance values as the end-effector scans the target object, the autonomous system develops a four-dimensional model of the target object: position in an R^3 coordinate frame, and a wavelength vector denoting the associated spectral signature. We demonstrate this system in building spectral-spatial object profiles of increasingly complex geometries. As a point of comparison, we show that our proposed system and spectral acquisition planning yield more consistent signals than naive point scanning strategies for capturing spectral information over complex surface geometries. Our work represents a significant step towards high-resolution spectral-spatial sensor fusion for automated quality assessment.
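The viewpoint constraint and the four-dimensional model described in this abstract can be illustrated with a minimal sketch. The names `SpectralPoint` and `standoff_pose` are hypothetical and do not come from the paper; the sketch only assumes that each viewpoint sits at a fixed standoff distance along the surface normal, with the sensor looking back along that normal.

```python
import math
from dataclasses import dataclass


@dataclass
class SpectralPoint:
    """One sample of the spectral-spatial model (hypothetical structure)."""
    position: tuple  # (x, y, z) in the object's R^3 frame
    spectrum: list   # one reflectance value per wavelength band


def standoff_pose(surface_point, normal, distance):
    """Place the sensor `distance` away from the surface along its normal.

    Returns (sensor_position, view_direction); the view direction points
    from the sensor back toward the surface, opposite to the normal.
    """
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0.0:
        raise ValueError("surface normal must be non-zero")
    unit = tuple(c / length for c in normal)
    sensor_position = tuple(p + distance * u for p, u in zip(surface_point, unit))
    view_direction = tuple(-u for u in unit)
    return sensor_position, view_direction


# Example: a surface point at the origin with an upward (unnormalized) normal.
pose, view = standoff_pose((0.0, 0.0, 0.0), (0.0, 0.0, 2.0), 0.05)
sample = SpectralPoint(position=(0.0, 0.0, 0.0), spectrum=[0.41, 0.44, 0.39])
```

Storing the spectrum alongside the surface position, rather than the sensor position, is one possible choice for registering readings with the point cloud.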

Battery-Swapping Multi-Agent System for Sustained Operation of Large Planetary Fleets

Jan 16, 2024

Abstract: We propose a novel, heterogeneous multi-agent architecture that miniaturizes rovers by outsourcing power generation to a central hub. By delegating power generation and distribution functions to this hub, the size, weight, power, and cost (SWAP-C) per rover are reduced, enabling efficient fleet scaling. As these rovers conduct mission tasks around the terrain, the hub charges an array of replacement battery modules. When a rover requires charging, it returns to the hub to initiate an autonomous docking sequence and exits with a fully charged battery. This confers an advantage over direct charging methods, such as wireless or wired charging, by replenishing a rover in minutes as opposed to hours, increasing net rover uptime. This work shares an open-source platform developed to demonstrate battery swapping on unknown field terrain. We detail our design methodologies utilized for increasing system reliability, with a focus on optimization, robust mechanical design, and verification. Optimization of the system is discussed, including the design of passive guide rails through simulation-based optimization methods which increase the valid docking configuration space by 258%. The full system was evaluated during integrated testing, where an average servicing time of 98 seconds was achieved on surfaces with a gradient up to 10°. We conclude by briefly proposing flight considerations for advancing the system toward a space-ready design. In sum, this prototype represents a proof of concept for autonomous docking and battery transfer on field terrain, advancing its Technology Readiness Level (TRL) from 1 to 3.

HASHI: Highly Adaptable Seafood Handling Instrument for Manipulation in Industrial Settings

Nov 03, 2023

Abstract: The seafood processing industry provides fertile ground for robotics to impact the future-of-work from multiple perspectives including productivity, worker safety, and quality of work life. The robotics research challenge is the realization of flexible and reliable manipulation of soft, deformable, slippery, spiky and scaly objects. In this paper, we propose a novel robot end effector, called HASHI, that employs chopstick-like appendages for precise and dexterous manipulation. This gripper is capable of in-hand manipulation by rotating its two constituent sticks relative to each other and offers control of objects in all three axes of rotation by imitating human use of chopsticks. HASHI delicately positions and orients food through embedded 6-axis force-torque sensors. We derive and validate the kinematic model for HASHI, as well as demonstrate grip force and torque readings from the sensorization of each chopstick. We also evaluate the versatility of HASHI through grasping trials of a variety of real and simulated food items with varying geometry, weight, and firmness.

Hyper-Drive: Visible-Short Wave Infrared Hyperspectral Imaging Datasets for Robots in Unstructured Environments

Aug 15, 2023

Abstract: Hyperspectral sensors have enjoyed widespread use in the realm of remote sensing; however, they must be adapted to a format in which they can be operated onboard mobile robots. In this work, we introduce a first-of-its-kind system architecture with snapshot hyperspectral cameras and point spectrometers to efficiently generate composite datacubes from a robotic base. Our system collects and registers datacubes spanning the visible to shortwave infrared (660-1700 nm) spectrum while simultaneously capturing the ambient solar spectrum reflected off a white reference tile. We collect and disseminate a large dataset of more than 500 labeled datacubes from on-road and off-road terrain compliant with the ATLAS ontology to further the integration and demonstration of hyperspectral imaging (HSI) as beneficial in terrain class separability. Our analysis of this data demonstrates that HSI is a significant opportunity to increase understanding of scene composition from a robot-centric context. All code and data are open source online: https://river-lab.github.io/hyper_drive_data
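The abstract does not say how the white reference measurement is used. One common use of such a reference is to convert raw sensor readings into approximate reflectance by dividing each band by the reference reading for that band. The sketch below illustrates that general technique only; the function names and the optional dark-current term are assumptions, not part of the Hyper-Drive code.

```python
def to_reflectance(spectrum, white_ref, dark=None, eps=1e-9):
    """Normalize one raw spectrum by a white reference, band by band.

    spectrum, white_ref, dark: sequences with one value per band.
    dark is an optional dark-current reading (assumed zero if omitted).
    """
    if dark is None:
        dark = [0.0] * len(white_ref)
    if not (len(spectrum) == len(white_ref) == len(dark)):
        raise ValueError("all spectra must have the same number of bands")
    reflectance = []
    for raw, white, d in zip(spectrum, white_ref, dark):
        denom = max(white - d, eps)  # guard against division by zero
        reflectance.append((raw - d) / denom)
    return reflectance


def calibrate_cube(cube, white_ref, dark=None):
    """Apply to_reflectance to every pixel of a rows x cols x bands cube."""
    return [[to_reflectance(pixel, white_ref, dark) for pixel in row] for row in cube]


# Example: a 1 x 2 pixel cube with three bands.
cube = [[[50.0, 50.0, 50.0], [100.0, 100.0, 25.0]]]
calibrated = calibrate_cube(cube, white_ref=[100.0, 200.0, 50.0])
```

Because the reference is captured at the same time as the datacube, a normalization of this kind would track changes in ambient illumination between captures.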

A Vision for Cleaner Rivers: Harnessing Snapshot Hyperspectral Imaging to Detect Macro-Plastic Litter

Jul 22, 2023

Abstract: Plastic waste entering riverine environments harms local ecosystems, leading to negative ecological and economic impacts. Large parcels of plastic waste are transported from inland to oceans, leading to a global-scale problem of floating debris fields. In this context, efficient and automated monitoring of mismanaged plastic waste is paramount. To address this problem, we analyze the feasibility of macro-plastic litter detection using computational imaging approaches in river-like scenarios. We enable near-real-time tracking of partially submerged plastics by using snapshot Visible-Shortwave Infrared hyperspectral imaging. Our experiments indicate that imaging strategies associated with machine learning classification approaches can lead to high detection accuracy even in challenging scenarios, especially when leveraging hyperspectral data and nonlinear classifiers. All code, data, and models are available online: https://github.com/RIVeR-Lab/hyperspectral_macro_plastic_detection.

Team Northeastern's Approach to ANA XPRIZE Avatar Final Testing: A Holistic Approach to Telepresence and Lessons Learned

Mar 08, 2023

Abstract: This paper reports on Team Northeastern's Avatar system for telepresence, and our holistic approach to meet the ANA Avatar XPRIZE Final testing task requirements. The system features a dual-arm configuration with a hydraulically actuated glove-gripper pair for haptic force feedback. Our proposed Avatar system was evaluated in the ANA Avatar XPRIZE Finals and completed all 10 tasks, scored 14.5 points out of 15.0, and received the 3rd Place Award. We provide the details of improvements over our first generation Avatar, covering manipulation, perception, locomotion, power, network, and controller design. We also extensively discuss the major lessons learned during our participation in the competition.

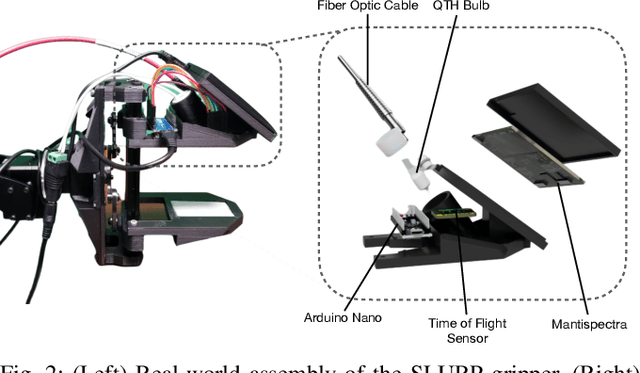

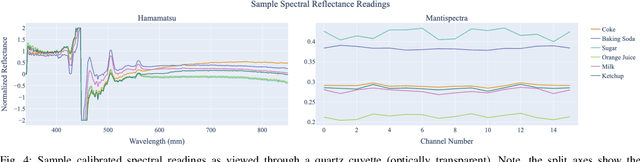

SLURP! Spectroscopy of Liquids Using Robot Pre-Touch Sensing

Oct 10, 2022

Abstract: Liquids and granular media are pervasive throughout human environments. Their free-flowing nature causes people to constrain them into containers. We do so with thousands of different types of containers made out of different materials with varying sizes, shapes, and colors. In this work, we present a state-of-the-art sensing technique for robots to perceive what liquid is inside of an unknown container. We do so by integrating Visible to Near Infrared (VNIR) reflectance spectroscopy into a robot's end effector. We introduce a hierarchical model for inferring the material classes of both containers and internal contents given spectral measurements from two integrated spectrometers. To train these inference models, we capture and open source a dataset of spectral measurements from over 180 different combinations of containers and liquids. Our technique demonstrates over 85% accuracy in identifying 13 different liquids and granular media contained within 13 different containers. The sensitivity of our spectral readings allows our model to also identify the material composition of the containers themselves with 96% accuracy. Overall, VNIR spectroscopy presents a promising method to give household robots a general-purpose ability to infer the liquids inside of containers, without needing to open or manipulate the containers.

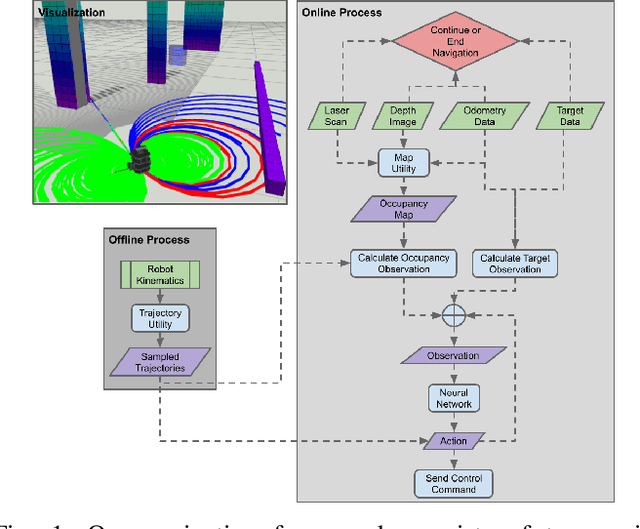

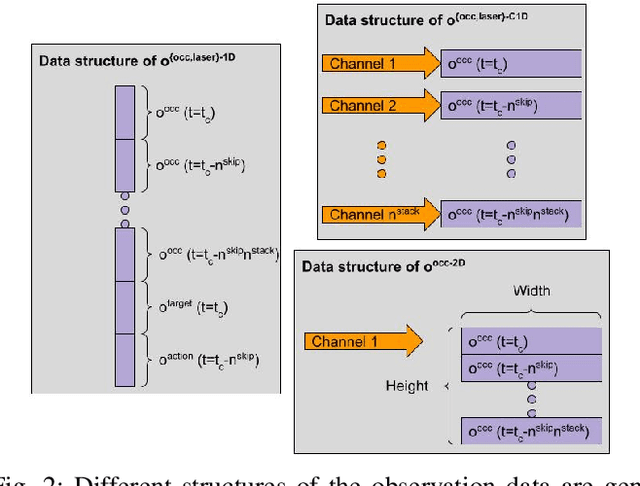

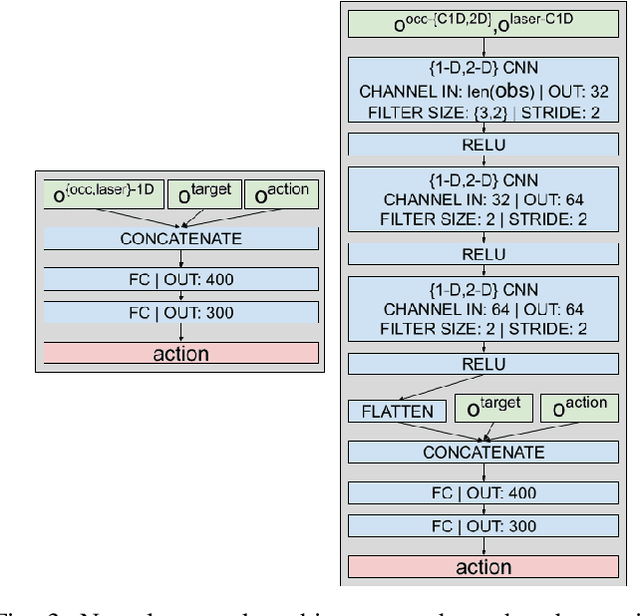

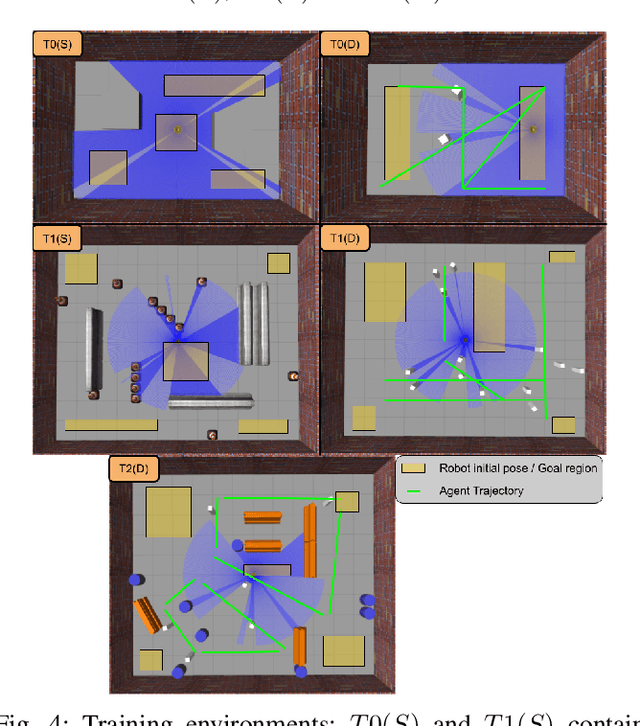

Deep Reinforcement Learning based Robot Navigation in Dynamic Environments using Occupancy Values of Motion Primitives

Aug 17, 2022

Abstract: This paper presents a Deep Reinforcement Learning based navigation approach in which we define the occupancy observations as heuristic evaluations of motion primitives, rather than using raw sensor data. Our method enables fast mapping of the occupancy data, generated by multi-sensor fusion, into trajectory values in 3D workspace. The computationally efficient trajectory evaluation allows dense sampling of the action space. We utilize our occupancy observations in different data structures to analyze their effects on both training process and navigation performance. We train and test our methodology on two different robots within challenging physics-based simulation environments including static and dynamic obstacles. We benchmark our occupancy representations with other conventional data structures from state-of-the-art methods. The trained navigation policies are also validated successfully with physical robots in dynamic environments. The results show that our method not only decreases the required training time but also improves the navigation performance as compared to other occupancy representations. The open-source implementation of our work and all related info are available at https://github.com/RIVeR-Lab/tentabot.

Reactive Navigation Framework for Mobile Robots by Heuristically Evaluated Pre-sampled Trajectories

May 17, 2021

Abstract: This paper describes and analyzes a reactive navigation framework for mobile robots in unknown environments. The approach does not rely on a global map and only considers the local occupancy in its robot-centered 3D grid structure. The proposed algorithm enables fast navigation by heuristic evaluations of pre-sampled trajectories on-the-fly. At each cycle, these paths are evaluated by a weighted cost function, based on heuristic features such as closeness to the goal, previously selected trajectories, and nearby obstacles. This paper introduces a systematic method to calculate a feasible pose on the selected trajectory, before sending it to the controller for the motion execution. Defining the structures in the framework and providing the implementation details, the paper also explains how to adjust its offline and online parameters. To demonstrate the versatility and adaptability of the algorithm in unknown environments, physics-based simulations on various maps are presented. Benchmark tests show the superior performance of the proposed algorithm over its previous iteration and another state-of-the-art method. The open-source implementation of the algorithm and the benchmark data can be found at https://github.com/RIVeR-Lab/tentabot.
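The per-cycle evaluation described in this abstract, a weighted cost over pre-sampled trajectories, can be sketched as follows. This is an illustrative toy and not the tentabot implementation: the three cost terms, the weight names, and the use of index distance as a proxy for "previously selected trajectories" are all assumptions made for the example.

```python
import math


def score_trajectory(traj, idx, goal, obstacles, prev_idx, weights):
    """Return a weighted heuristic cost for one trajectory (lower is better).

    traj: list of (x, y, z) points; obstacles: list of (x, y, z) points.
    prev_idx: index of the trajectory chosen in the previous cycle, or None.
    """
    # Closeness to the goal: distance from the trajectory end point.
    goal_cost = math.dist(traj[-1], goal)
    # Nearby obstacles: inverse of the smallest point-to-obstacle distance.
    clearance = min(
        (math.dist(p, o) for p in traj for o in obstacles), default=math.inf
    )
    if clearance <= 0.0:
        return math.inf  # trajectory passes through an obstacle point
    obstacle_cost = 1.0 / clearance
    # Previously selected trajectory: penalize switching (assumed proxy).
    change_cost = 0.0 if prev_idx is None else float(abs(idx - prev_idx))
    return (
        weights["goal"] * goal_cost
        + weights["obstacle"] * obstacle_cost
        + weights["change"] * change_cost
    )


def select_trajectory(trajectories, goal, obstacles, prev_idx, weights):
    """Score every pre-sampled trajectory and return (best_index, costs)."""
    costs = [
        score_trajectory(t, i, goal, obstacles, prev_idx, weights)
        for i, t in enumerate(trajectories)
    ]
    best = min(range(len(costs)), key=costs.__getitem__)
    return best, costs


# Example: straight, left, and right candidates with an obstacle dead ahead.
trajectories = [
    [(0.5, 0.0, 0.0), (1.0, 0.0, 0.0), (1.5, 0.0, 0.0)],
    [(0.5, 0.5, 0.0), (1.0, 1.0, 0.0), (1.5, 1.0, 0.0)],
    [(0.5, -0.5, 0.0), (1.0, -1.0, 0.0), (1.5, -1.0, 0.0)],
]
weights = {"goal": 1.0, "obstacle": 1.0, "change": 0.5}
best, costs = select_trajectory(
    trajectories, (2.0, 0.0, 0.0), [(1.0, 0.0, 0.0)], 2, weights
)
```

In this example the left and right candidates are symmetric about the obstacle, so the switching penalty alone breaks the tie in favor of the previously selected trajectory.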
