Akhil Padmanabha

EgoCHARM: Resource-Efficient Hierarchical Activity Recognition using an Egocentric IMU Sensor

Apr 24, 2025

Abstract:Human activity recognition (HAR) on smartglasses has various use cases, including health/fitness tracking and input for context-aware AI assistants. However, current approaches for egocentric activity recognition suffer from low performance or are resource-intensive. In this work, we introduce a resource (memory, compute, power, sample) efficient machine learning algorithm, EgoCHARM, for recognizing both high level and low level activities using a single egocentric (head-mounted) Inertial Measurement Unit (IMU). Our hierarchical algorithm employs a semi-supervised learning strategy, requiring primarily high level activity labels for training, to learn generalizable low level motion embeddings that can be effectively utilized for low level activity recognition. We evaluate our method on 9 high level and 3 low level activities achieving 0.826 and 0.855 F1 scores on high level and low level activity recognition respectively, with just 63k high level and 22k low level model parameters, allowing the low level encoder to be deployed directly on current IMU chips with compute. Lastly, we present results and insights from a sensitivity analysis and highlight the opportunities and limitations of activity recognition using egocentric IMUs.

Towards Wearable Interfaces for Robotic Caregiving

Feb 07, 2025

Abstract:Physically assistive robots in home environments can enhance the autonomy of individuals with impairments, allowing them to regain the ability to conduct self-care and household tasks. Individuals with physical limitations may find existing interfaces challenging to use, highlighting the need for novel interfaces that can effectively support them. In this work, we present insights on the design and evaluation of an active control wearable interface named HAT, Head-Worn Assistive Teleoperation. To tackle challenges in user workload while using such interfaces, we propose and evaluate a shared control algorithm named Driver Assistance. Finally, we introduce the concept of passive control, in which wearable interfaces detect implicit human signals to inform and guide robotic actions during caregiving tasks, with the aim of reducing user workload while potentially preserving the feeling of control.

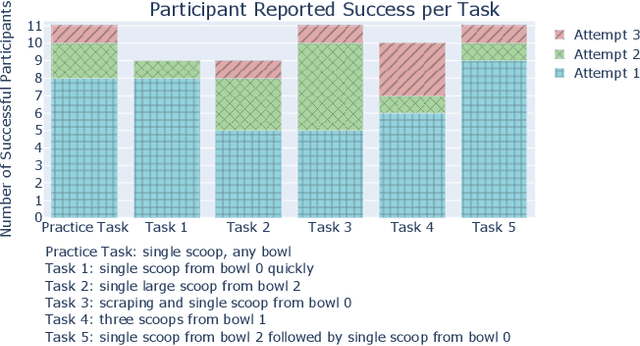

Towards an LLM-Based Speech Interface for Robot-Assisted Feeding

Oct 27, 2024

Abstract:Physically assistive robots present an opportunity to significantly increase the well-being and independence of individuals with motor impairments or other forms of disability who are unable to complete activities of daily living (ADLs). Speech interfaces, especially ones that utilize Large Language Models (LLMs), can enable individuals to effectively and naturally communicate high-level commands and nuanced preferences to robots. In this work, we demonstrate an LLM-based speech interface for a commercially available assistive feeding robot. Our system is based on an iteratively designed framework, from the paper "VoicePilot: Harnessing LLMs as Speech Interfaces for Physically Assistive Robots," that incorporates human-centric elements for integrating LLMs as interfaces for robots. It has been evaluated through a user study with 11 older adults at an independent living facility. Videos are located on our project website: https://sites.google.com/andrew.cmu.edu/voicepilot/.

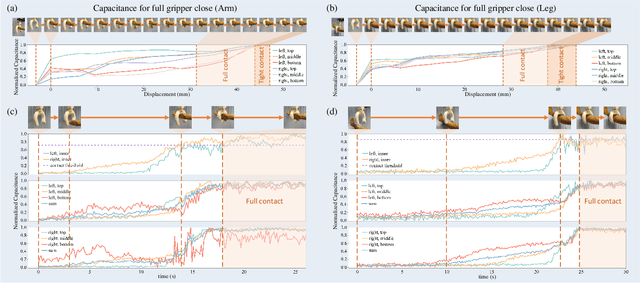

RoboCAP: Robotic Classification and Precision Pouring of Diverse Liquids and Granular Media with Capacitive Sensing

May 13, 2024

Abstract:Liquids and granular media are pervasive throughout human environments, yet remain particularly challenging for robots to sense and manipulate precisely. In this work, we present a systematic approach to integrating capacitive sensing within robotic end effectors to enable robust sensing and precise manipulation of liquids and granular media. We introduce the parallel-jaw RoboCAP Gripper with embedded capacitive sensing arrays that enable a robot to directly sense the materials and dynamics of liquids inside of diverse containers, including some that are visually opaque. When coupled with model-based control, we demonstrate that the proposed system enables a robotic manipulator to achieve state-of-the-art precision pouring accuracy for a range of substances with varying dynamics properties. Code, designs, and build details are available on the project website.

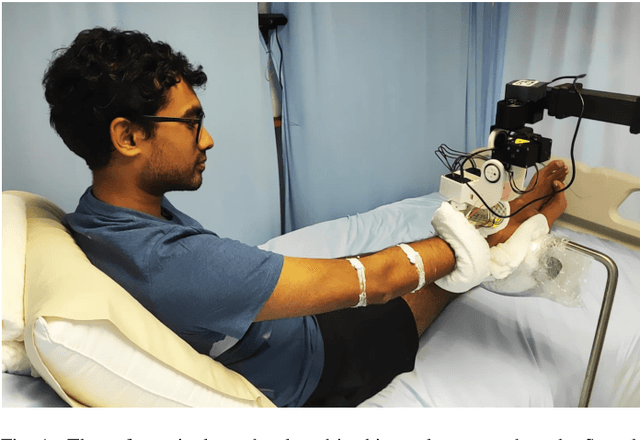

SkinGrip: An Adaptive Soft Robotic Manipulator with Capacitive Sensing for Whole-Limb Bathing Assistance

May 04, 2024

Abstract:Robotics presents a promising opportunity for enhancing bathing assistance, potentially to alleviate labor shortages and reduce care costs, while offering consistent and gentle care for individuals with physical disabilities. However, ensuring flexible and efficient cleaning of the human body poses challenges as it involves direct physical contact between the human and the robot, and necessitates simple, safe, and effective control. In this paper, we introduce a soft, expandable robotic manipulator with embedded capacitive proximity sensing arrays, designed for safe and efficient bathing assistance. We conduct a thorough evaluation of our soft manipulator, comparing it with a baseline rigid end effector in a human study involving 12 participants across 96 bathing trials. Our soft manipulator achieves an average cleaning effectiveness of 88.8% on arms and 81.4% on legs, far exceeding the performance of the baseline. Participant feedback further validates the manipulator's ability to maintain safety, comfort, and thorough cleaning.

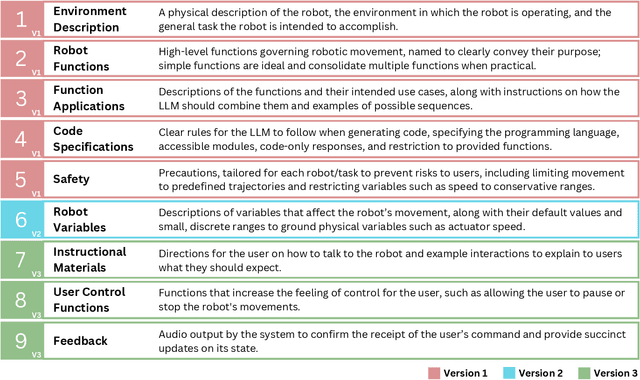

VoicePilot: Harnessing LLMs as Speech Interfaces for Physically Assistive Robots

Apr 05, 2024

Abstract:Physically assistive robots present an opportunity to significantly increase the well-being and independence of individuals with motor impairments or other forms of disability who are unable to complete activities of daily living. Speech interfaces, especially ones that utilize Large Language Models (LLMs), can enable individuals to effectively and naturally communicate high-level commands and nuanced preferences to robots. Frameworks for integrating LLMs as interfaces to robots for high level task planning and code generation have been proposed, but fail to incorporate human-centric considerations which are essential while developing assistive interfaces. In this work, we present a framework for incorporating LLMs as speech interfaces for physically assistive robots, constructed iteratively with 3 stages of testing involving a feeding robot, culminating in an evaluation with 11 older adults at an independent living facility. We use both quantitative and qualitative data from the final study to validate our framework and additionally provide design guidelines for using LLMs as speech interfaces for assistive robots. Videos and supporting files are located on our project website: https://sites.google.com/andrew.cmu.edu/voicepilot/

Independence in the Home: A Wearable Interface for a Person with Quadriplegia to Teleoperate a Mobile Manipulator

Jan 02, 2024

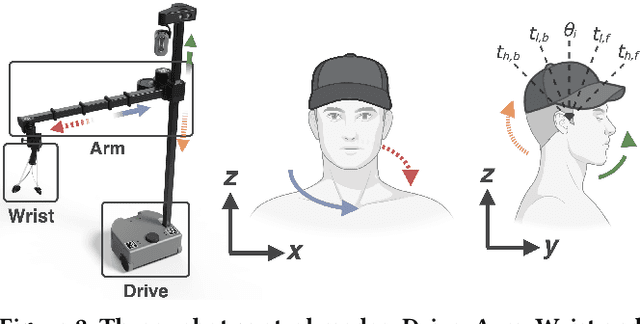

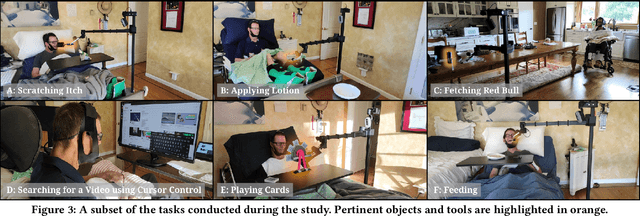

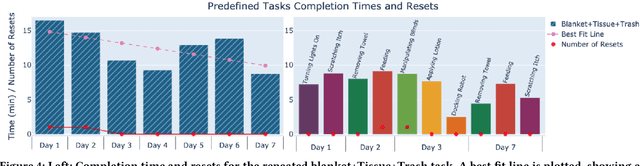

Abstract:Teleoperation of mobile manipulators within a home environment can significantly enhance the independence of individuals with severe motor impairments, allowing them to regain the ability to perform self-care and household tasks. There is a critical need for novel teleoperation interfaces to offer effective alternatives for individuals with impairments who may encounter challenges in using existing interfaces due to physical limitations. In this work, we iterate on one such interface, HAT (Head-Worn Assistive Teleoperation), an inertial-based wearable integrated into any head-worn garment. We evaluate HAT through a 7-day in-home study with Henry Evans, a non-speaking individual with quadriplegia who has participated extensively in assistive robotics studies. We additionally evaluate HAT with a proposed shared control method for mobile manipulators termed Driver Assistance and demonstrate how the interface generalizes to other physical devices and contexts. Our results show that HAT is a strong teleoperation interface across key metrics including efficiency, errors, learning curve, and workload. Code and videos are located on our project website.

A Multimodal Sensing Ring for Quantification of Scratch Intensity

Feb 08, 2023

Abstract:An objective measurement of the debilitating symptom, chronic itch, is necessary for improvements in patient care for numerous medical conditions. While wearable devices have shown promise for scratch detection, they are currently unable to estimate scratch intensity, preventing a comprehensive understanding of the effect of itch on an individual. In this work, we present a framework for the estimation of scratch intensity in addition to scratch detection, consisting of a multimodal wearable ring device and machine learning algorithms for regression of scratch intensity on a 0-600 mW mechanical power scale that can be mapped to a 0-10 continuous scale. We evaluate the performance of our algorithms on 20 individuals using Leave One Subject Out (LOSO) Cross Validation (CV). Using data from 14 additional participants, we show that our algorithms achieve clinically relevant discrimination of scratching intensity levels. This work demonstrates that a finger-worn device can provide multidimensional, objective, real-time measures for the action of scratching.

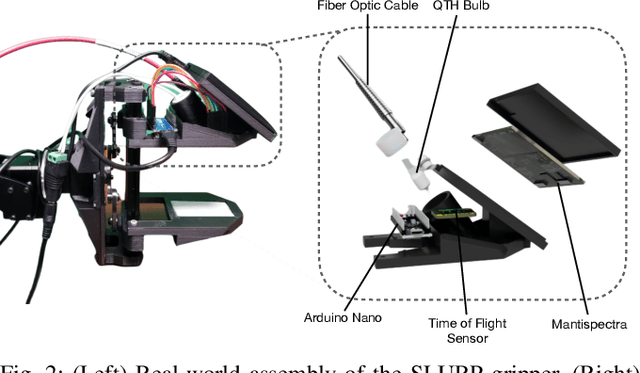

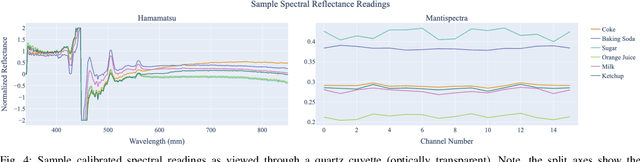

SLURP! Spectroscopy of Liquids Using Robot Pre-Touch Sensing

Oct 10, 2022

Abstract:Liquids and granular media are pervasive throughout human environments. Their free-flowing nature causes people to constrain them into containers. We do so with thousands of different types of containers made out of different materials with varying sizes, shapes, and colors. In this work, we present a state-of-the-art sensing technique for robots to perceive what liquid is inside of an unknown container. We do so by integrating Visible to Near Infrared (VNIR) reflectance spectroscopy into a robot's end effector. We introduce a hierarchical model for inferring the material classes of both containers and internal contents given spectral measurements from two integrated spectrometers. To train these inference models, we capture and open source a dataset of spectral measurements from over 180 different combinations of containers and liquids. Our technique demonstrates over 85% accuracy in identifying 13 different liquids and granular media contained within 13 different containers. The sensitivity of our spectral readings allows our model to also identify the material composition of the containers themselves with 96% accuracy. Overall, VNIR spectroscopy presents a promising method to give household robots a general-purpose ability to infer the liquids inside of containers, without needing to open or manipulate the containers.

HAT: Head-Worn Assistive Teleoperation of Mobile Manipulators

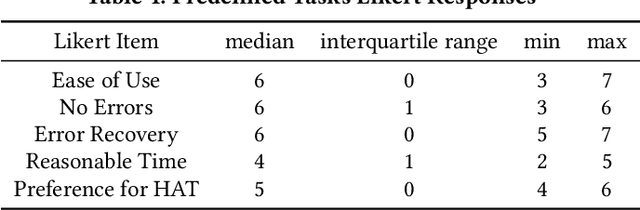

Sep 27, 2022

Abstract:Mobile manipulators in the home can provide increased autonomy to individuals with severe motor impairments, who often cannot complete activities of daily living (ADLs) without the help of a caregiver. Teleoperation of an assistive mobile manipulator could enable an individual with motor impairments to independently perform self-care and household tasks, yet limited motor function can impede one's ability to interface with a robot. In this work, we present a unique inertial-based wearable assistive interface, embedded in a familiar head-worn garment, for individuals with severe motor impairments to teleoperate and perform physical tasks with a mobile manipulator. We evaluate this wearable interface with both able-bodied individuals (N = 16) and individuals with motor impairments (N = 2) for performing ADLs and everyday household tasks. Our results show that the wearable interface enabled participants to complete physical tasks with low error rates, high perceived ease of use, and low workload measures. Overall, this inertial-based wearable serves as a new assistive interface option for control of mobile manipulators in the home.
