Carmel Majidi

A Unified Framework for Simulating Strongly-Coupled Fluid-Robot Multiphysics

Jun 05, 2025

Abstract:We present a framework for simulating fluid-robot multiphysics as a single, unified optimization problem. The coupled manipulator and incompressible Navier-Stokes equations governing the robot and fluid dynamics are derived together from a single Lagrangian using the principle of least action. We then employ discrete variational mechanics to derive a stable, implicit time-integration scheme for jointly simulating both the fluid and robot dynamics, which are tightly coupled by a constraint that enforces the no-slip boundary condition at the fluid-robot interface. Extending the classical immersed boundary method, we derive a new formulation of the no-slip constraint that is numerically well-conditioned and physically accurate for multibody systems commonly found in robotics. We demonstrate our approach's physical accuracy on benchmark computational fluid-dynamics problems, including Poiseuille flow and a disc in free stream. We then design a locomotion policy for a novel swimming robot in simulation and validate results on real-world hardware, showcasing our framework's sim-to-real capability for robotics tasks.
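For readers unfamiliar with the Poiseuille benchmark mentioned in the abstract, the sketch below evaluates the textbook analytic velocity profile for steady plane Poiseuille flow between two no-slip walls, which is the kind of reference solution a simulator is typically compared against. The abstract does not say whether the plane-channel or pipe variant was used, so the plane-channel geometry, the function name, and all parameter names here are assumptions for illustration only.

```python
def poiseuille_velocity(y, height, pressure_gradient, viscosity):
    """Analytic streamwise velocity for steady plane Poiseuille flow.

    Assumed setup (not taken from the paper): walls at y = 0 and y = height,
    no-slip at both walls, and pressure_gradient = -dp/dx > 0 driving the flow.
    """
    return pressure_gradient / (2.0 * viscosity) * y * (height - y)


def max_abs_error(simulated, ys, height, pressure_gradient, viscosity):
    """Largest absolute deviation of sampled simulated velocities from the analytic profile."""
    return max(
        abs(u - poiseuille_velocity(y, height, pressure_gradient, viscosity))
        for u, y in zip(simulated, ys)
    )
```

The profile vanishes at both walls, consistent with the no-slip condition, and peaks at the channel centerline.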

Towards Wearable Interfaces for Robotic Caregiving

Feb 07, 2025

Abstract:Physically assistive robots in home environments can enhance the autonomy of individuals with impairments, allowing them to regain the ability to conduct self-care and household tasks. Individuals with physical limitations may find existing interfaces challenging to use, highlighting the need for novel interfaces that can effectively support them. In this work, we present insights on the design and evaluation of an active control wearable interface named HAT, Head-Worn Assistive Teleoperation. To tackle challenges in user workload while using such interfaces, we propose and evaluate a shared control algorithm named Driver Assistance. Finally, we introduce the concept of passive control, in which wearable interfaces detect implicit human signals to inform and guide robotic actions during caregiving tasks, with the aim of reducing user workload while potentially preserving the feeling of control.

Q-learning-based Model-free Safety Filter

Nov 29, 2024

Abstract:Ensuring safety via safety filters in real-world robotics presents significant challenges, particularly when the system dynamics are complex or unavailable. To handle this issue, learning-based safety filters, which can be classified as model-based or model-free methods, have recently gained popularity. Existing model-based approaches require various assumptions on the system model (e.g., control-affine), which limits their application in complex systems, and existing model-free approaches need substantial modifications to standard RL algorithms and lack versatility. This paper proposes a simple, plug-and-play, and effective model-free safety filter learning framework. We introduce a novel reward formulation and use Q-learning to learn Q-value functions to safeguard arbitrary task-specific nominal policies by filtering out their potentially unsafe actions. The threshold used in the filtering process is supported by our theoretical analysis. Due to its model-free nature and simplicity, our framework can be seamlessly integrated with various RL algorithms. We validate the proposed approach through simulations on double integrator and Dubins car systems and demonstrate its effectiveness in real-world experiments with a soft robotic limb.
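The filtering step described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the paper's method: it assumes a hypothetical tabular safety Q-function `q_safe` in which larger values mean safer actions, and a caller-supplied `threshold`. The paper's reward formulation and its theoretically derived threshold are not reproduced here.

```python
import numpy as np


def filter_action(q_safe, state, nominal_action, threshold):
    """Return the nominal action if its safety value clears the threshold.

    q_safe: hypothetical array of shape (num_states, num_actions), where a
            higher value is assumed to mean a safer state-action pair.
    Falls back to the action with the highest safety value otherwise.
    """
    q_values = q_safe[state]
    if q_values[nominal_action] >= threshold:
        return int(nominal_action)
    return int(np.argmax(q_values))


# Example with two states and two actions (values are made up).
q_safe = np.array([[0.1, 0.9],
                   [0.8, 0.2]])
safe_action = filter_action(q_safe, state=0, nominal_action=0, threshold=0.5)
```

Because the filter only wraps the nominal policy's output, the task policy itself can come from any RL algorithm, which matches the plug-and-play framing in the abstract.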

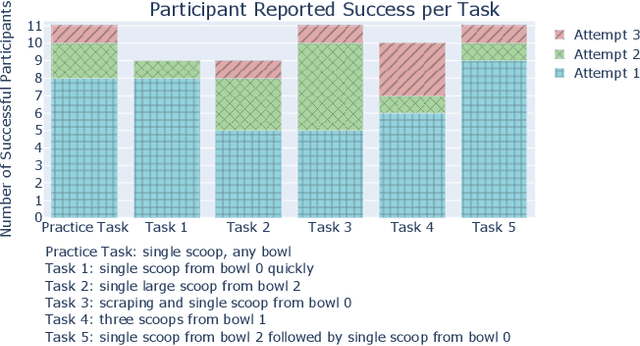

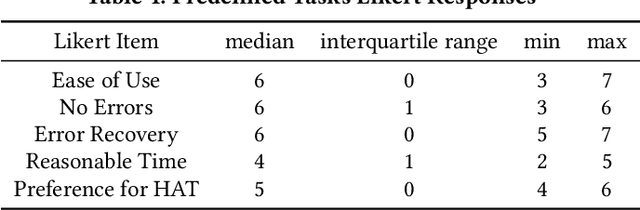

Towards an LLM-Based Speech Interface for Robot-Assisted Feeding

Oct 27, 2024

Abstract:Physically assistive robots present an opportunity to significantly increase the well-being and independence of individuals with motor impairments or other forms of disability who are unable to complete activities of daily living (ADLs). Speech interfaces, especially ones that utilize Large Language Models (LLMs), can enable individuals to effectively and naturally communicate high-level commands and nuanced preferences to robots. In this work, we demonstrate an LLM-based speech interface for a commercially available assistive feeding robot. Our system is based on an iteratively designed framework, from the paper "VoicePilot: Harnessing LLMs as Speech Interfaces for Physically Assistive Robots," that incorporates human-centric elements for integrating LLMs as interfaces for robots. It has been evaluated through a user study with 11 older adults at an independent living facility. Videos are located on our project website: https://sites.google.com/andrew.cmu.edu/voicepilot/.

1 Modular Parallel Manipulator for Long-Term Soft Robotic Data Collection

Sep 05, 2024

Abstract:Performing long-term experimentation or large-scale data collection for machine learning in the field of soft robotics is challenging, due to the hardware robustness and experimental flexibility required. In this work, we propose a modular parallel robotic manipulation platform suitable for such large-scale data collection and compatible with various soft-robotic fabrication methods. Considering the computational and theoretical difficulty of replicating the high-fidelity, faster-than-real-time simulations that enable large-scale data collection in rigid robotic systems, a robust soft-robotic hardware platform becomes a high-priority development task for the field. Each of the platform's modules consists of a pair of off-the-shelf electrical motors which actuate a customizable finger consisting of a compliant parallel structure. The parallel mechanism of the finger can be as simple as a single 3D-printed urethane or molded silicone bulk structure, because the motors are able to fully actuate a passive structure. This design flexibility allows experimentation with varied soft-mechanism geometries, bulk properties, and surface properties. Additionally, while the parallel mechanism does not require separate electronics or additional parts, these can be included, and it can be constructed using multi-functional soft materials to study compatible soft sensors and actuators in the learning process. In this work, we validate the platform's ability to be used for policy gradient reinforcement learning directly on hardware in a benchmark 2D manipulation task. We additionally demonstrate compatibility with multiple fingers and characterize the design constraints for compatible extensions.

VoicePilot: Harnessing LLMs as Speech Interfaces for Physically Assistive Robots

Apr 05, 2024

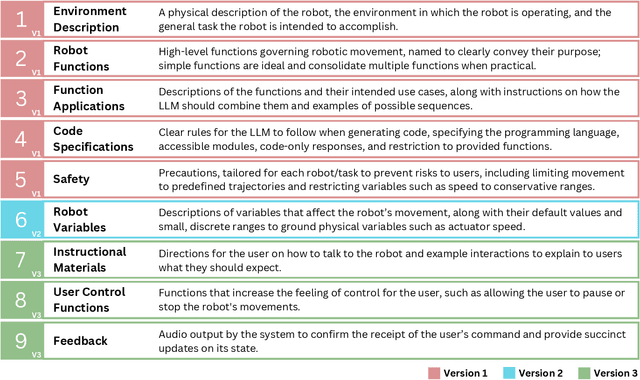

Abstract:Physically assistive robots present an opportunity to significantly increase the well-being and independence of individuals with motor impairments or other forms of disability who are unable to complete activities of daily living. Speech interfaces, especially ones that utilize Large Language Models (LLMs), can enable individuals to effectively and naturally communicate high-level commands and nuanced preferences to robots. Frameworks for integrating LLMs as interfaces to robots for high level task planning and code generation have been proposed, but fail to incorporate human-centric considerations which are essential while developing assistive interfaces. In this work, we present a framework for incorporating LLMs as speech interfaces for physically assistive robots, constructed iteratively with 3 stages of testing involving a feeding robot, culminating in an evaluation with 11 older adults at an independent living facility. We use both quantitative and qualitative data from the final study to validate our framework and additionally provide design guidelines for using LLMs as speech interfaces for assistive robots. Videos and supporting files are located on our project website: https://sites.google.com/andrew.cmu.edu/voicepilot/

Hierarchical State Space Models for Continuous Sequence-to-Sequence Modeling

Feb 15, 2024

Abstract:Reasoning from sequences of raw sensory data is a ubiquitous problem across fields ranging from medical devices to robotics. These problems often involve using long sequences of raw sensor data (e.g. magnetometers, piezoresistors) to predict sequences of desirable physical quantities (e.g. force, inertial measurements). While classical approaches are powerful for locally-linear prediction problems, they often fall short when using real-world sensors. These sensors are typically non-linear, are affected by extraneous variables (e.g. vibration), and exhibit data-dependent drift. For many problems, the prediction task is exacerbated by small labeled datasets since obtaining ground-truth labels requires expensive equipment. In this work, we present Hierarchical State-Space Models (HiSS), a conceptually simple, new technique for continuous sequential prediction. HiSS stacks structured state-space models on top of each other to create a temporal hierarchy. Across six real-world sensor datasets, from tactile-based state prediction to accelerometer-based inertial measurement, HiSS outperforms state-of-the-art sequence models such as causal Transformers, LSTMs, S4, and Mamba by at least 23% on MSE. Our experiments further indicate that HiSS demonstrates efficient scaling to smaller datasets and is compatible with existing data-filtering techniques. Code, datasets and videos can be found on https://hiss-csp.github.io.

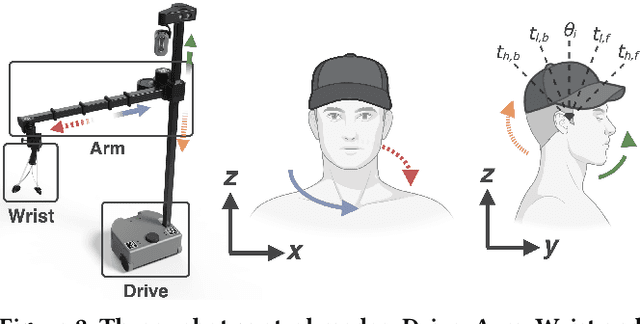

Independence in the Home: A Wearable Interface for a Person with Quadriplegia to Teleoperate a Mobile Manipulator

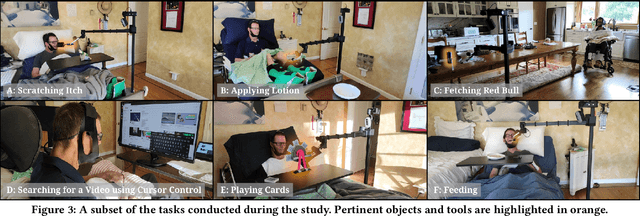

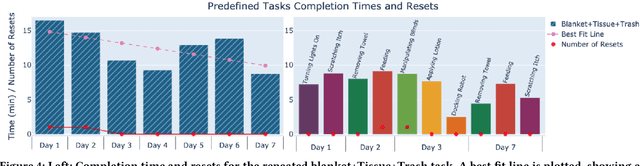

Jan 02, 2024

Abstract:Teleoperation of mobile manipulators within a home environment can significantly enhance the independence of individuals with severe motor impairments, allowing them to regain the ability to perform self-care and household tasks. There is a critical need for novel teleoperation interfaces to offer effective alternatives for individuals with impairments who may encounter challenges in using existing interfaces due to physical limitations. In this work, we iterate on one such interface, HAT (Head-Worn Assistive Teleoperation), an inertial-based wearable integrated into any head-worn garment. We evaluate HAT through a 7-day in-home study with Henry Evans, a non-speaking individual with quadriplegia who has participated extensively in assistive robotics studies. We additionally evaluate HAT with a proposed shared control method for mobile manipulators termed Driver Assistance and demonstrate how the interface generalizes to other physical devices and contexts. Our results show that HAT is a strong teleoperation interface across key metrics including efficiency, errors, learning curve, and workload. Code and videos are located on our project website.

A Multimodal Sensing Ring for Quantification of Scratch Intensity

Feb 08, 2023

Abstract:An objective measurement of the debilitating symptom, chronic itch, is necessary for improvements in patient care for numerous medical conditions. While wearable devices have shown promise for scratch detection, they are currently unable to estimate scratch intensity, preventing a comprehensive understanding of the effect of itch on an individual. In this work, we present a framework for the estimation of scratch intensity in addition to scratch detection, consisting of a multimodal wearable ring device and machine learning algorithms for regression of scratch intensity on a 0-600 mW mechanical power scale that can be mapped to a 0-10 continuous scale. We evaluate the performance of our algorithms on 20 individuals using Leave One Subject Out (LOSO) Cross Validation (CV), and, using data from 14 additional participants, we show that our algorithms achieve clinically-relevant discrimination of scratching intensity levels. This work demonstrates that a finger-worn device can provide multidimensional, objective, real-time measures for the action of scratching.
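As a rough illustration of the Leave One Subject Out protocol named in the abstract, the sketch below generates one fold per subject, holding out all of that subject's samples for testing and training on everyone else. The function name and the toy subject labels are hypothetical, and the paper's actual data pipeline and models are not shown.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    subject_ids: one subject label per sample, in sample order.
    Every sample from the held-out subject goes to the test split, so no
    subject appears in both splits of the same fold.
    """
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Toy example: four samples from three subjects.
folds = list(leave_one_subject_out(["a", "a", "b", "c"]))
```

Splitting by subject rather than by sample avoids leaking a person's own data into training, which is why the protocol is common for wearable-sensing studies.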

Design and Characterization of Viscoelastic McKibben Actuators with Tunable Force-Velocity Curves

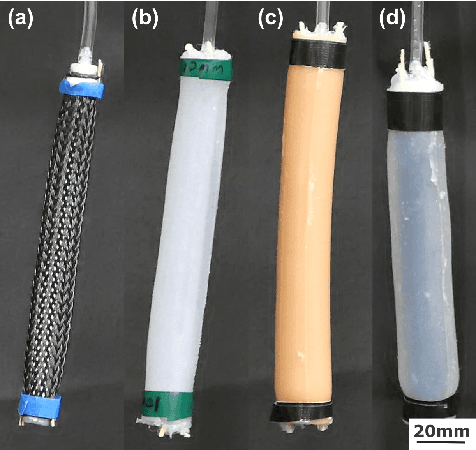

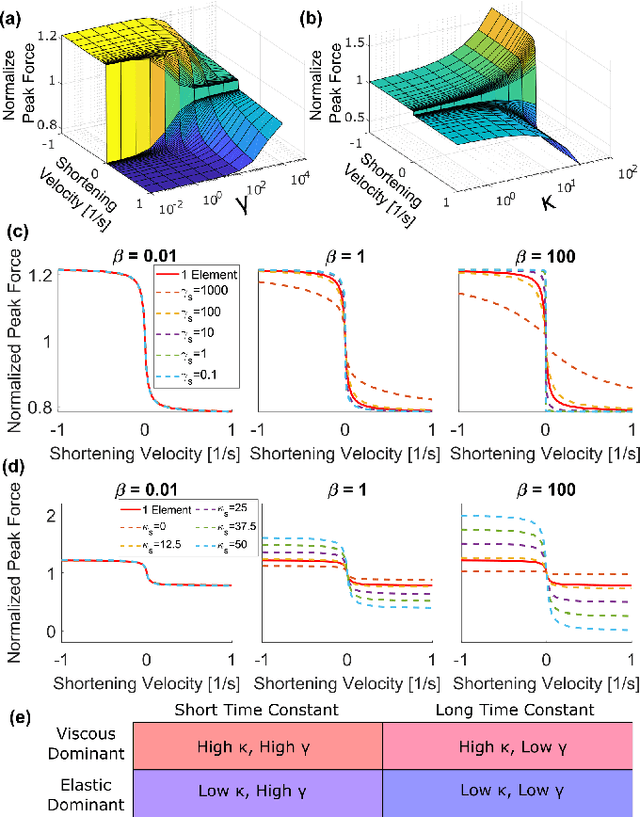

Jan 11, 2023

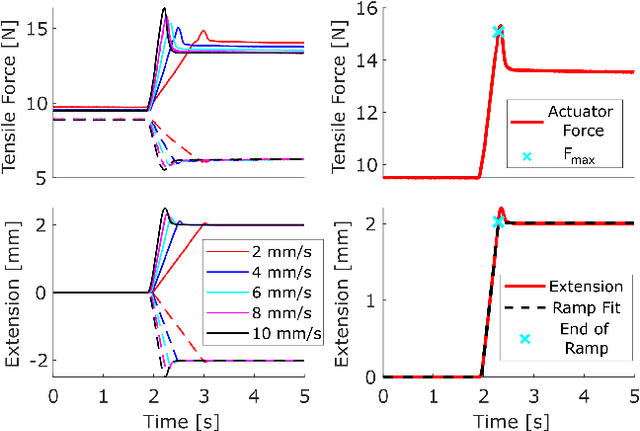

Abstract:The McKibben pneumatic artificial muscle is a commonly studied soft robotic actuator, and its quasistatic force-length properties have been well characterized and modeled. However, its damping and force-velocity properties are less well studied. Understanding these properties will allow for more robust dynamic modeling of soft robotic systems. The force-velocity response of these actuators is of particular interest because these actuators are often used as hardware models of skeletal muscles for bioinspired robots, and this force-velocity relationship is fundamental to muscle physiology. In this work, we investigated the force-velocity response of McKibben actuators and the ability to tune this response through the use of viscoelastic polymer sheaths. These viscoelastic McKibben actuators (VMAs) were characterized using iso-velocity experiments inspired by skeletal muscle physiology tests. A simplified 1D model of the actuators was developed to connect the shape of the force-velocity curve to the material parameters of the actuator and sheaths. Using these viscoelastic materials, we were able to modulate the shape and magnitude of the actuators' force-velocity curves, and using the developed model, these changes were connected back to the material properties of the sheaths.
