Austin Allison

SCANS: A Soft Gripper with Curvature and Spectroscopy Sensors for In-Hand Material Differentiation

Oct 02, 2025

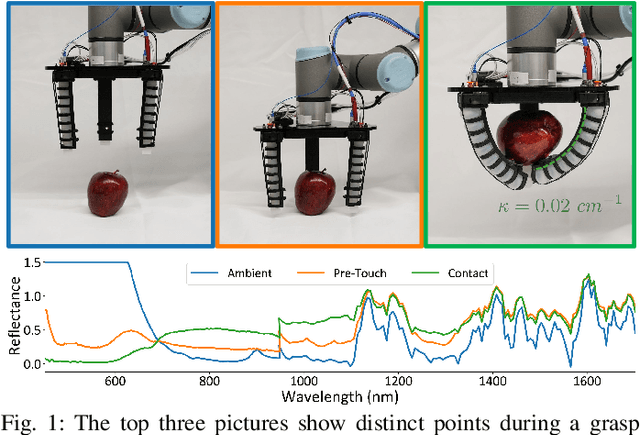

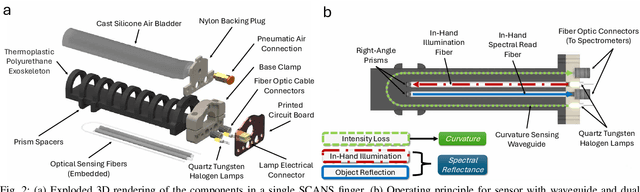

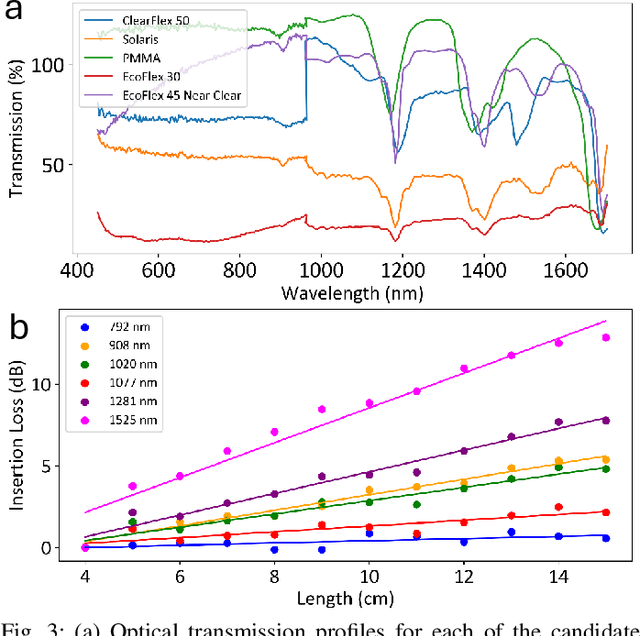

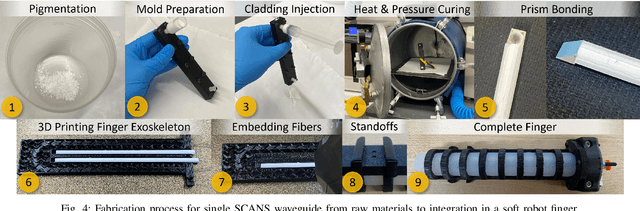

Abstract: We introduce the soft curvature and spectroscopy (SCANS) system: a versatile, electronics-free, fluidically actuated soft manipulator capable of assessing the spectral properties of objects either in hand or through pre-touch caging. This platform offers a wider spectral sensing range than previous soft robotic counterparts. We perform a material analysis to identify optimal soft substrates for spectral sensing, and evaluate both pre-touch and in-hand performance. Experiments demonstrate explainable, statistical separation across diverse object classes and sizes (metal, wood, plastic, organic, paper, foam), with large spectral angle differences between items. Through linear discriminant analysis, we show that sensitivity in the near-infrared wavelengths is critical to distinguishing visually similar objects. These capabilities advance the potential of optics as a multi-functional sensory modality for soft robots. The complete parts list, assembly guidelines, and processing code for the SCANS gripper are available at: https://parses-lab.github.io/scans/.
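The abstract reports separation in terms of "spectral angle differences" without giving the formula. A minimal sketch, assuming the standard spectral-angle definition (the arccos of the normalized dot product of two spectra sampled at the same wavelengths), is shown below. The function name `spectral_angle` is hypothetical and not taken from the SCANS code release.

```python
import math
from typing import Sequence


def spectral_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the angle in radians between two spectra with the same bands.

    Hypothetical helper: assumes the standard spectral-angle definition,
    not necessarily the exact metric used in the SCANS processing code.
    """
    if len(a) != len(b):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("spectra must be non-zero")
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_theta)


# Scaling a spectrum (e.g. a brighter reading of the same material) leaves the
# angle near zero, while spectra with disjoint bands are pi/2 apart.
print(spectral_angle([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))
print(spectral_angle([1.0, 0.0], [0.0, 1.0]))
```

Because the angle depends only on spectral shape, not overall magnitude, it is insensitive to uniform changes in illumination intensity. That is one common reason to compare materials this way.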

HASHI: Highly Adaptable Seafood Handling Instrument for Manipulation in Industrial Settings

Nov 03, 2023

Abstract: The seafood processing industry provides fertile ground for robotics to impact the future of work from multiple perspectives, including productivity, worker safety, and quality of work life. The robotics research challenge is achieving flexible and reliable manipulation of soft, deformable, slippery, spiky, and scaly objects. In this paper, we propose a novel robot end effector, called HASHI, that employs chopstick-like appendages for precise and dexterous manipulation. This gripper is capable of in-hand manipulation by rotating its two constituent sticks relative to each other, and it offers control of objects about all three axes of rotation by imitating human use of chopsticks. HASHI delicately positions and orients food using embedded 6-axis force-torque sensors. We derive and validate the kinematic model for HASHI, and demonstrate grip force and torque readings from the sensors in each chopstick. We also evaluate the versatility of HASHI through grasping trials on a variety of real and simulated food items with varying geometry, weight, and firmness.

Mobile MoCap: Retroreflector Localization On-The-Go

Mar 23, 2023

Abstract: Motion capture (MoCap) based on tracking retroreflectors achieves high-precision pose estimation and is frequently used in robotics. Unlike MoCap, fiducial marker-based tracking methods do not require a static camera setup to perform relative localization. However, popular pose-estimation systems based on fiducial markers have lower localization accuracy than MoCap. As a solution, we propose Mobile MoCap, a system that employs inexpensive near-infrared cameras for precise relative localization in dynamic environments. We present a retroreflector feature detector that performs six-degree-of-freedom (6-DoF) tracking and operates with minimal camera exposure times to reduce motion blur. To evaluate different localization techniques in a mobile robot setup, we mount our Mobile MoCap system, as well as a standard RGB camera, onto a precision-controlled linear rail for retroreflective and fiducial marker tracking, respectively. We benchmark the two systems against each other, varying distance, marker viewing angle, and relative velocity. Our stereo-based Mobile MoCap approach obtains higher position and orientation accuracy than the fiducial approach. The code for Mobile MoCap is implemented in ROS 2 and made publicly available at https://github.com/RIVeR-Lab/mobile_mocap.
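The abstract describes the approach as stereo-based but does not spell out the geometry. A minimal sketch, assuming an idealized rectified stereo pair with equal focal lengths (in pixels), shows how one matched retroreflector centroid could be triangulated into a 3D point in the left-camera frame via the textbook relation Z = f·B/d. The class and parameter names are hypothetical and do not come from the Mobile MoCap repository. A full 6-DoF pose would additionally require several such points and a point-set registration step, which is not shown.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RectifiedStereo:
    """Hypothetical idealized rectified stereo rig (not the Mobile MoCap API)."""

    focal_px: float    # focal length in pixels, assumed equal for both cameras
    baseline_m: float  # distance between the two camera centers, in meters
    cx: float          # principal point x-coordinate of the left camera, pixels
    cy: float          # principal point y-coordinate of the left camera, pixels

    def triangulate(self, u_left: float, v_left: float,
                    u_right: float) -> Tuple[float, float, float]:
        """Return (X, Y, Z) in meters in the left-camera frame.

        In a rectified pair, a point appears on the same image row in both
        views, so only the horizontal disparity is needed for depth.
        """
        disparity = u_left - u_right
        if disparity <= 0:
            raise ValueError("disparity must be positive for a point in front of the rig")
        z = self.focal_px * self.baseline_m / disparity  # depth: Z = f * B / d
        x = (u_left - self.cx) * z / self.focal_px       # back-project through the pinhole
        y = (v_left - self.cy) * z / self.focal_px
        return (x, y, z)


rig = RectifiedStereo(focal_px=800.0, baseline_m=0.1, cx=640.0, cy=360.0)
# A 40-pixel disparity gives a depth of 800 * 0.1 / 40 = 2.0 m.
print(rig.triangulate(u_left=700.0, v_left=400.0, u_right=660.0))
```

Depth error grows as disparity shrinks, so sub-pixel centroid accuracy on the bright, compact retroreflector blobs matters most for distant markers.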
