Mark Zolotas

A Systematic Study of Data Modalities and Strategies for Co-training Large Behavior Models for Robot Manipulation

Feb 01, 2026

Abstract:Large behavior models have shown strong dexterous manipulation capabilities by extending imitation learning to large-scale training on multi-task robot data, yet their generalization remains limited by the insufficient robot data coverage. To expand this coverage without costly additional data collection, recent work relies on co-training: jointly learning from target robot data and heterogeneous data modalities. However, how different co-training data modalities and strategies affect policy performance remains poorly understood. We present a large-scale empirical study examining five co-training data modalities: standard vision-language data, dense language annotations for robot trajectories, cross-embodiment robot data, human videos, and discrete robot action tokens across single- and multi-phase training strategies. Our study leverages 4,000 hours of robot and human manipulation data and 50M vision-language samples to train vision-language-action policies. We evaluate 89 policies over 58,000 simulation rollouts and 2,835 real-world rollouts. Our results show that co-training with forms of vision-language and cross-embodiment robot data substantially improves generalization to distribution shifts, unseen tasks, and language following, while discrete action token variants yield no significant benefits. Combining effective modalities produces cumulative gains and enables rapid adaptation to unseen long-horizon dexterous tasks via fine-tuning. Training exclusively on robot data degrades the visiolinguistic understanding of the vision-language model backbone, while co-training with effective modalities restores these capabilities. Explicitly conditioning action generation on chain-of-thought traces learned from co-training data does not improve performance in our simulation benchmark. Together, these results provide practical guidance for building scalable generalist robot policies.

Re4MPC: Reactive Nonlinear MPC for Multi-model Motion Planning via Deep Reinforcement Learning

Jun 10, 2025

Abstract:Traditional motion planning methods for robots with many degrees-of-freedom, such as mobile manipulators, are often computationally prohibitive for real-world settings. In this paper, we propose a novel multi-model motion planning pipeline, termed Re4MPC, which computes trajectories using Nonlinear Model Predictive Control (NMPC). Re4MPC generates trajectories in a computationally efficient manner by reactively selecting the model, cost, and constraints of the NMPC problem depending on the complexity of the task and robot state. The policy for this reactive decision-making is learned via a Deep Reinforcement Learning (DRL) framework. We introduce a mathematical formulation to integrate NMPC into this DRL framework. To validate our methodology and design choices, we evaluate DRL training and test outcomes in a physics-based simulation involving a mobile manipulator. Experimental results demonstrate that Re4MPC is more computationally efficient and achieves higher success rates in reaching end-effector goals than the NMPC baseline, which computes whole-body trajectories without our learning mechanism.

Data-Driven Sampling Based Stochastic MPC for Skid-Steer Mobile Robot Navigation

Nov 05, 2024

Abstract:Traditional approaches to motion modeling for skid-steer robots struggle with capturing nonlinear tire-terrain dynamics, especially during high-speed maneuvers. In this paper, we tackle such nonlinearities by enhancing a dynamic unicycle model with Gaussian Process (GP) regression outputs. This enables us to develop an adaptive, uncertainty-informed navigation formulation. We solve the resultant stochastic optimal control problem using a chance-constrained Model Predictive Path Integral (MPPI) control method. This approach formulates both obstacle avoidance and path-following as chance constraints, accounting for residual uncertainties from the GP to ensure safety and reliability in control. Leveraging GPU acceleration, we efficiently manage the non-convex nature of the problem, ensuring real-time performance. Our approach unifies path-following and obstacle avoidance across different terrains, unlike prior works which typically focus on one or the other. We compare our GP-MPPI method against unicycle and data-driven kinematic models within the MPPI framework. In simulations, our approach shows superior tracking accuracy and obstacle avoidance. We further validate our approach through hardware experiments on a skid-steer robot platform, demonstrating its effectiveness in high-speed navigation. The GPU implementation of the proposed method and supplementary video footage are available at https://stochasticmppi.github.io.
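
For readers unfamiliar with MPPI, the following is a minimal sketch of one generic sampling-based update, not the paper's GP-MPPI method. It assumes a plain kinematic unicycle with no GP correction and no chance constraints, and runs on the CPU with NumPy. The names `mppi_step`, `unicycle`, `goal_cost`, and all parameter values are hypothetical and chosen only for illustration.

```python
import numpy as np

def mppi_step(x0, u_nominal, cost_fn, dynamics_fn, num_samples=256,
              noise_std=0.5, temperature=1.0, rng=None):
    """One generic MPPI update of a nominal control sequence (illustrative only)."""
    rng = np.random.default_rng() if rng is None else rng
    horizon, u_dim = u_nominal.shape
    # Sample control perturbations around the nominal sequence.
    noise = rng.normal(0.0, noise_std, size=(num_samples, horizon, u_dim))
    costs = np.zeros(num_samples)
    for k in range(num_samples):
        x = np.array(x0, dtype=float)
        for t in range(horizon):
            # Roll out the perturbed controls and accumulate the state cost.
            x = dynamics_fn(x, u_nominal[t] + noise[k, t])
            costs[k] += cost_fn(x)
    # Softmin weights; subtracting the minimum cost keeps exp() numerically stable.
    weights = np.exp(-(costs - costs.min()) / temperature)
    weights /= weights.sum()
    # The importance-weighted average of the perturbations updates the plan.
    u_new = u_nominal + np.einsum("k,ktu->tu", weights, noise)
    return u_new, weights

def unicycle(x, u, dt=0.1):
    """Assumed toy model: state [px, py, theta], control [v, omega]."""
    px, py, theta = x
    v, omega = u
    return np.array([px + dt * v * np.cos(theta),
                     py + dt * v * np.sin(theta),
                     theta + dt * omega])

goal = np.array([1.0, 0.0])  # hypothetical goal position

def goal_cost(x):
    """Squared distance from the robot position to the goal."""
    return float(np.sum((x[:2] - goal) ** 2))

u0 = np.zeros((10, 2))
u1, w = mppi_step(np.zeros(3), u0, goal_cost, unicycle,
                  rng=np.random.default_rng(0))
```

In a chance-constrained variant such as the one the abstract describes, obstacle avoidance and path-following would enter as probabilistic constraints informed by the GP's residual uncertainty; this sketch leaves that part out entirely.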

Chance-Constrained Convex MPC for Robust Quadruped Locomotion Under Parametric and Additive Uncertainties

Nov 05, 2024

Abstract:Recent advances in quadrupedal locomotion have focused on improving stability and performance across diverse environments. However, existing methods often lack adequate safety analysis and struggle to adapt to varying payloads and complex terrains, typically requiring extensive tuning. To overcome these challenges, we propose a Chance-Constrained Model Predictive Control (CCMPC) framework that explicitly models payload and terrain variability as distributions of parametric and additive disturbances within the single rigid body dynamics (SRBD) model. Our approach ensures safe and consistent performance under uncertain dynamics by expressing the model friction cone constraints, which define the feasible set of ground reaction forces, as chance constraints. Moreover, we solve the resulting stochastic control problem using a computationally efficient quadratic programming formulation. Extensive Monte Carlo simulations of quadrupedal locomotion across varying payloads and complex terrains demonstrate that CCMPC significantly outperforms two competitive benchmarks, Linear MPC (LMPC) and MPC with hand-tuned safety margins, in maintaining stability, reducing foot slippage, and tracking the center of mass. Hardware experiments on the Unitree Go1 robot show successful locomotion across various indoor and outdoor terrains with unknown loads exceeding 50% of the robot body weight, despite no additional parameter tuning. A video of the results and accompanying code can be found at: https://cc-mpc.github.io/.

User-customizable Shared Control for Fine Teleoperation via Virtual Reality

Mar 19, 2024

Abstract:Shared control can ease and enhance a human operator's ability to teleoperate robots, particularly for intricate tasks demanding fine control over multiple degrees of freedom. However, the arbitration process dictating how much autonomous assistance to administer in shared control can confuse novice operators and impede their understanding of the robot's behavior. To overcome these adverse side-effects, we propose a novel formulation of shared control that enables operators to tailor the arbitration to their unique capabilities and preferences. Unlike prior approaches to customizable shared control where users could indirectly modify the latent parameters of the arbitration function by issuing a feedback command, we instead make these parameters observable and directly editable via a virtual reality (VR) interface. We present our user-customizable shared control method for a teleoperation task in SE(3), known as the buzz wire game. A user study is conducted with participants teleoperating a robotic arm in VR to complete the game. The experiment spanned two weeks per subject to investigate longitudinal trends. Our findings reveal that users allowed to interactively tune the arbitration parameters across trials generalize well to adaptations in the task, exhibiting improvements in precision and fluency over direct teleoperation and conventional shared control.
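
As a rough illustration of what "arbitration parameters" can look like, here is a minimal sketch of linear policy blending, a common form of shared control. It is not the paper's formulation: the function `blend_commands`, the parameters `threshold` and `max_assist`, and the use of a 3D translational velocity instead of a full SE(3) command are all assumptions made for this example.

```python
import numpy as np

def blend_commands(u_human, u_robot, confidence, threshold=0.3, max_assist=0.8):
    """Linear policy blending with an arbitration weight alpha in [0, max_assist].

    `threshold` (must be below 1) and `max_assist` stand in for the kind of
    parameters a user might tune; both are hypothetical.
    """
    u_human = np.asarray(u_human, dtype=float)
    u_robot = np.asarray(u_robot, dtype=float)
    # No assistance below the threshold, then a linear ramp that reaches
    # max_assist when confidence equals 1.
    ramp = (confidence - threshold) / (1.0 - threshold)
    alpha = max_assist * float(np.clip(ramp, 0.0, 1.0))
    # Convex combination of the autonomous and human commands.
    return alpha * u_robot + (1.0 - alpha) * u_human, alpha

# Example: the operator pushes along x while the assistance suggests y.
u, alpha = blend_commands([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], confidence=0.65)
```

With the values above, the ramp is halfway up, so the assistance contributes 40% of the blended command. Exposing `threshold` and `max_assist` directly to the operator is one way to picture the "observable and directly editable" parameters the abstract refers to.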

A Probabilistic Motion Model for Skid-Steer Wheeled Mobile Robot Navigation on Off-Road Terrains

Feb 29, 2024

Abstract:Skid-Steer Wheeled Mobile Robots (SSWMRs) are increasingly being used for off-road autonomy applications. When turning at high speeds, these robots tend to undergo significant skidding and slipping. In this work, using Gaussian Process Regression (GPR) and Sigma-Point Transforms, we estimate the non-linear effects of tire-terrain interaction on robot velocities in a probabilistic fashion. Using the mean estimates from GPR, we propose a data-driven dynamic motion model that is more accurate at predicting future robot poses than conventional kinematic motion models. By efficiently solving a convex optimization problem based on the history of past robot motion, the GPR augmented motion model generalizes to previously unseen terrain conditions. The output distribution from the proposed motion model can be used for local motion planning approaches, such as stochastic model predictive control, leveraging model uncertainty to make safe decisions. We validate our work on a benchmark real-world multi-terrain SSWMR dataset. Our results show that the model generalizes to three different terrains while significantly reducing errors in linear and angular motion predictions. As shown in the attached video, we perform a separate set of experiments on a physical robot to demonstrate the robustness of the proposed algorithm.

Shared Affordance-awareness via Augmented Reality for Proactive Assistance in Human-robot Collaboration

Dec 20, 2023

Abstract:Enabling humans and robots to collaborate effectively requires purposeful communication and an understanding of each other's affordances. Prior work in human-robot collaboration has incorporated knowledge of human affordances, i.e., their action possibilities in the current context, into autonomous robot decision-making. This "affordance awareness" is especially promising for service robots that need to know when and how to assist a person that cannot independently complete a task. However, robots still fall short in performing many common tasks autonomously. In this work-in-progress paper, we propose an augmented reality (AR) framework that bridges the gap in an assistive robot's capabilities by actively engaging with a human through a shared affordance-awareness representation. Leveraging the different perspectives from a human wearing an AR headset and a robot's equipped sensors, we can build a perceptual representation of the shared environment and model regions of respective agent affordances. The AR interface can also allow both agents to communicate affordances with one another, as well as prompt for assistance when attempting to perform an action outside their affordance region. This paper presents the main components of the proposed framework and discusses its potential through a domestic cleaning task experiment.

Mobile MoCap: Retroreflector Localization On-The-Go

Mar 23, 2023

Abstract:Motion capture (MoCap) through tracking retroreflectors obtains high precision pose estimation, which is frequently used in robotics. Unlike MoCap, fiducial marker-based tracking methods do not require a static camera setup to perform relative localization. Popular pose-estimating systems based on fiducial markers have lower localization accuracy than MoCap. As a solution, we propose Mobile MoCap, a system that employs inexpensive near-infrared cameras for precise relative localization in dynamic environments. We present a retroreflector feature detector that performs 6-DoF (six degrees-of-freedom) tracking and operates with minimal camera exposure times to reduce motion blur. To evaluate different localization techniques in a mobile robot setup, we mount our Mobile MoCap system, as well as a standard RGB camera, onto a precision-controlled linear rail for the purposes of retroreflective and fiducial marker tracking, respectively. We benchmark the two systems against each other, varying distance, marker viewing angle, and relative velocities. Our stereo-based Mobile MoCap approach obtains higher position and orientation accuracy than the fiducial approach. The code for Mobile MoCap is implemented in ROS 2 and made publicly available at https://github.com/RIVeR-Lab/mobile_mocap.

Disentangled Sequence Clustering for Human Intention Inference

Jan 23, 2021

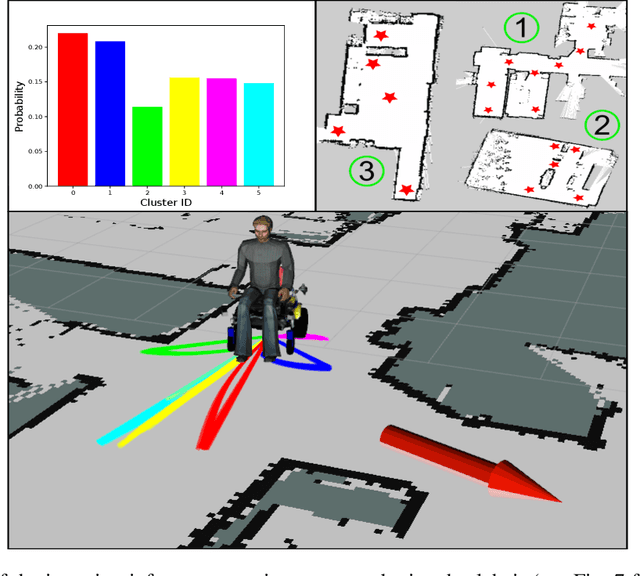

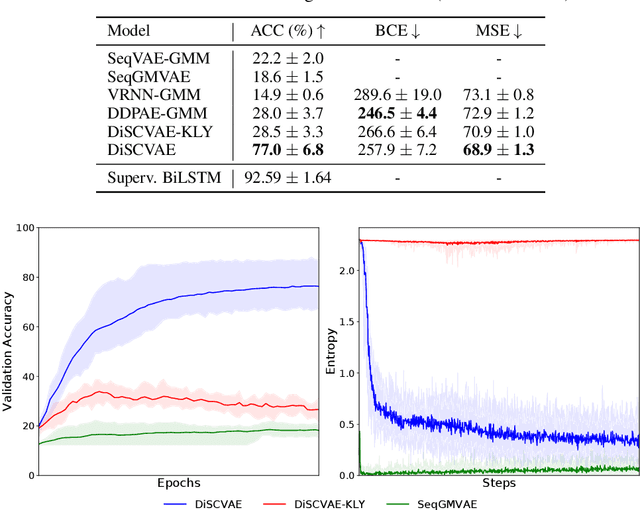

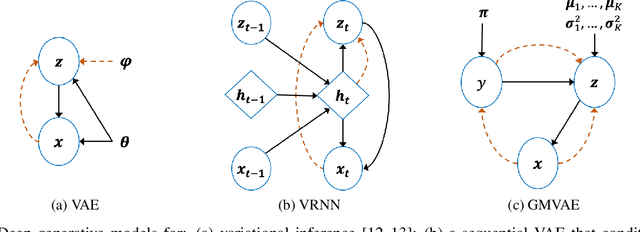

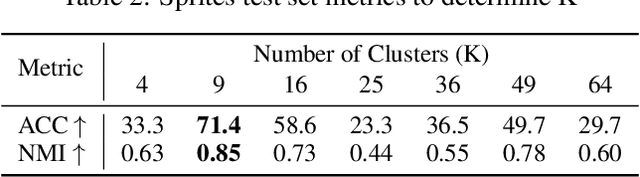

Abstract:Equipping robots with the ability to infer human intent is a vital precondition for effective collaboration. Most computational approaches towards this objective employ probabilistic reasoning to recover a distribution of "intent" conditioned on the robot's perceived sensory state. However, these approaches typically assume task-specific notions of human intent (e.g. labelled goals) are known a priori. To overcome this constraint, we propose the Disentangled Sequence Clustering Variational Autoencoder (DiSCVAE), a clustering framework that can be used to learn such a distribution of intent in an unsupervised manner. The DiSCVAE leverages recent advances in unsupervised learning to derive a disentangled latent representation of sequential data, separating time-varying local features from time-invariant global aspects. Unlike previous frameworks for disentanglement, however, the proposed variant also infers a discrete variable to form a latent mixture model and enable clustering of global sequence concepts, e.g. intentions from observed human behaviour. To evaluate the DiSCVAE, we first validate its capacity to discover classes from unlabelled sequences using video datasets of bouncing digits and 2D animations. We then report results from a real-world human-robot interaction experiment conducted on a robotic wheelchair. Our findings glean insights into how the inferred discrete variable coincides with human intent and thus serves to improve assistance in collaborative settings, such as shared control.
