Taskin Padir

ViTa-Zero: Zero-shot Visuotactile Object 6D Pose Estimation

Apr 17, 2025

Abstract:Object 6D pose estimation is a critical challenge in robotics, particularly for manipulation tasks. While prior research combining visual and tactile (visuotactile) information has shown promise, these approaches often struggle with generalization due to the limited availability of visuotactile data. In this paper, we introduce ViTa-Zero, a zero-shot visuotactile pose estimation framework. Our key innovation lies in leveraging a visual model as its backbone and performing feasibility checking and test-time optimization based on physical constraints derived from tactile and proprioceptive observations. Specifically, we model the gripper-object interaction as a spring-mass system, where tactile sensors induce attractive forces, and proprioception generates repulsive forces. We validate our framework through experiments on a real-world robot setup, demonstrating its effectiveness across representative visual backbones and manipulation scenarios, including grasping, object picking, and bimanual handover. Compared to the visual models, our approach overcomes some drastic failure modes while tracking the in-hand object pose. In our experiments, our approach shows an average increase of 55% in AUC of ADD-S and 60% in ADD, along with an 80% lower position error compared to FoundationPose.
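The spring-mass idea in this abstract can be illustrated with a small sketch. This is not the authors' implementation: it assumes the object is a sphere of known radius, refines only translation (no rotation), and uses hypothetical names (`net_force`, `refine_position`), gains, and step sizes. Tactile contact points pull the object surface toward them, and gripper points known from proprioception push the object away when they would penetrate it.

```python
import numpy as np

def net_force(center, radius, contacts, free_points, k_attract=1.0, k_repel=1.0):
    """Net spring force on a sphere-shaped object proxy (hypothetical simplification)."""
    force = np.zeros(3)
    # Tactile contacts: springs pull the object surface onto each contact point.
    for p in contacts:
        offset = p - center
        dist = np.linalg.norm(offset)
        force += k_attract * (dist - radius) * offset / dist
    # Proprioception: gripper points that penetrate the object push it away.
    for q in free_points:
        offset = q - center
        dist = np.linalg.norm(offset)
        if dist < radius:
            force -= k_repel * (radius - dist) * offset / dist
    return force

def refine_position(center, radius, contacts, free_points, step=0.3, iters=200):
    """Move the estimated center along the net force (plain gradient descent)."""
    c = np.asarray(center, dtype=float).copy()
    for _ in range(iters):
        c += step * net_force(c, radius, contacts, free_points)
    return c

# Assumed toy scene: a 5 cm sphere whose true center is the origin.
radius = 0.05
contacts = np.array([[0.05, 0.0, 0.0], [0.0, 0.05, 0.0],
                     [0.0, 0.0, 0.05], [-0.05, 0.0, 0.0]])
free_points = np.array([[0.03, -0.045, 0.0]])    # gripper point without contact
visual_estimate = np.array([0.02, -0.01, 0.015])  # drifted visual-only estimate
refined = refine_position(visual_estimate, radius, contacts, free_points)
```

In this toy scene the refined center moves from the drifted visual estimate back toward the origin, where every contact lies on the surface and the non-contact gripper point is outside the object.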

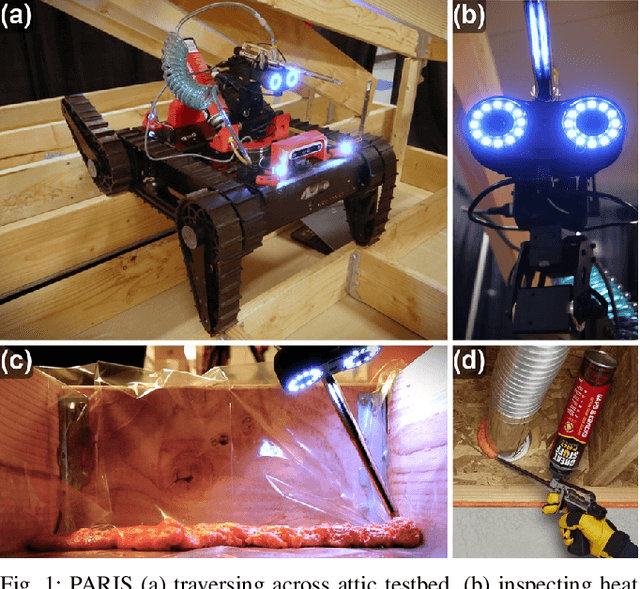

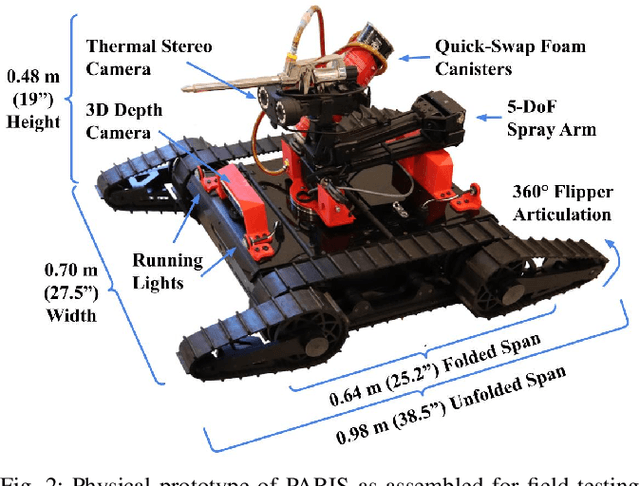

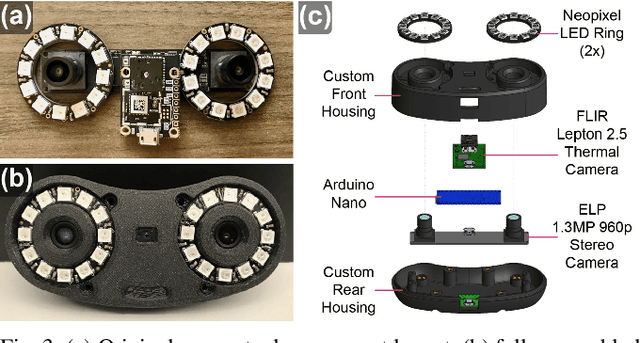

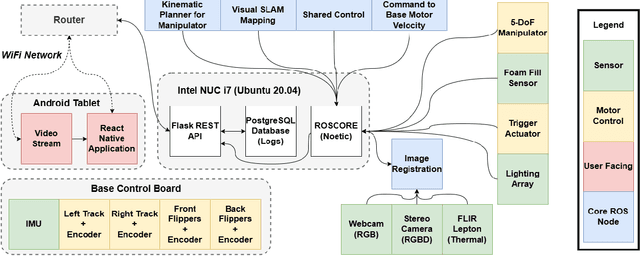

Use-Inspired Mobile Robot to Improve Safety of Building Retrofit Workforce in Constrained Spaces

Nov 25, 2024

Abstract:The inspection of confined critical infrastructure such as attics or crawlspaces is challenging for human operators due to insufficient task space, limited visibility, and the presence of hazardous materials. This paper introduces a prototype of PARIS (Precision Application Robot for Inaccessible Spaces): a use-inspired teleoperated mobile robot manipulator system that was conceived, developed, and tested for and selected as a Phase I winner of the U.S. Department of Energy's E-ROBOT Prize. To improve the thermal efficiency of buildings, the PARIS platform supports: 1) teleoperated mapping and navigation, enabling the human operator to explore compact spaces; 2) inspection and sensing, facilitating the identification and localization of under-insulated areas; and 3) air-sealing targeted gaps and cracks through which thermal energy is lost. The resulting versatile platform can also be tailored for targeted application of treatments and remediation in constrained spaces.

Data-Driven Sampling Based Stochastic MPC for Skid-Steer Mobile Robot Navigation

Nov 05, 2024

Abstract:Traditional approaches to motion modeling for skid-steer robots struggle with capturing nonlinear tire-terrain dynamics, especially during high-speed maneuvers. In this paper, we tackle such nonlinearities by enhancing a dynamic unicycle model with Gaussian Process (GP) regression outputs. This enables us to develop an adaptive, uncertainty-informed navigation formulation. We solve the resultant stochastic optimal control problem using a chance-constrained Model Predictive Path Integral (MPPI) control method. This approach formulates both obstacle avoidance and path-following as chance constraints, accounting for residual uncertainties from the GP to ensure safety and reliability in control. Leveraging GPU acceleration, we efficiently manage the non-convex nature of the problem, ensuring real-time performance. Our approach unifies path-following and obstacle avoidance across different terrains, unlike prior works which typically focus on one or the other. We compare our GP-MPPI method against unicycle and data-driven kinematic models within the MPPI framework. In simulations, our approach shows superior tracking accuracy and obstacle avoidance. We further validate our approach through hardware experiments on a skid-steer robot platform, demonstrating its effectiveness in high-speed navigation. The GPU implementation of the proposed method and supplementary video footage are available at https://stochasticmppi.github.io.
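For readers unfamiliar with MPPI, the core update can be sketched as follows. This shows only the standard importance-weighted averaging step of plain MPPI; the GP dynamics and chance constraints described in the abstract are not modeled, and the function name, toy cost, and temperature value are assumptions made for illustration.

```python
import numpy as np

def mppi_update(nominal, perturbations, costs, temperature=1.0):
    """One MPPI step: softmin-weighted average of sampled control perturbations.

    nominal:       (horizon, control_dim) nominal control sequence
    perturbations: (num_samples, horizon, control_dim) sampled noise
    costs:         (num_samples,) rollout cost of each perturbed sequence
    """
    costs = np.asarray(costs, dtype=float)
    # Subtract the minimum cost for numerical stability before exponentiating.
    weights = np.exp(-(costs - costs.min()) / temperature)
    weights /= weights.sum()
    # Contract the sample axis: result has shape (horizon, control_dim).
    updated = nominal + np.tensordot(weights, perturbations, axes=1)
    return updated, weights

# Toy usage with an assumed quadratic cost around a target control of 0.5.
rng = np.random.default_rng(0)
nominal = np.zeros((10, 2))
perturbations = rng.normal(scale=0.3, size=(64, 10, 2))
costs = ((nominal + perturbations - 0.5) ** 2).sum(axis=(1, 2))
updated, weights = mppi_update(nominal, perturbations, costs)
```

Low-cost rollouts receive exponentially larger weights, so the updated sequence is pulled toward the best-performing samples without requiring gradients of the cost.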

Chance-Constrained Convex MPC for Robust Quadruped Locomotion Under Parametric and Additive Uncertainties

Nov 05, 2024

Abstract:Recent advances in quadrupedal locomotion have focused on improving stability and performance across diverse environments. However, existing methods often lack adequate safety analysis and struggle to adapt to varying payloads and complex terrains, typically requiring extensive tuning. To overcome these challenges, we propose a Chance-Constrained Model Predictive Control (CCMPC) framework that explicitly models payload and terrain variability as distributions of parametric and additive disturbances within the single rigid body dynamics (SRBD) model. Our approach ensures safe and consistent performance under uncertain dynamics by expressing the model friction cone constraints, which define the feasible set of ground reaction forces, as chance constraints. Moreover, we solve the resulting stochastic control problem using a computationally efficient quadratic programming formulation. Extensive Monte Carlo simulations of quadrupedal locomotion across varying payloads and complex terrains demonstrate that CCMPC significantly outperforms two competitive benchmarks, Linear MPC (LMPC) and MPC with hand-tuned safety margins, in maintaining stability, reducing foot slippage, and tracking the center of mass. Hardware experiments on the Unitree Go1 robot show successful locomotion across various indoor and outdoor terrains with unknown loads exceeding 50% of the robot body weight, despite no additional parameter tuning. A video of the results and accompanying code can be found at: https://cc-mpc.github.io/.
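A common way to handle a linear chance constraint under Gaussian uncertainty is to tighten it by a quantile-scaled standard deviation. The sketch below applies that textbook reformulation to one face of a linearized friction cone. It is an illustration under assumed numbers (friction coefficient, force mean, covariance) and is not taken from the paper's formulation.

```python
from statistics import NormalDist
import numpy as np

def chance_constraint_holds(a, b, mean, cov, eps=0.05):
    """Check Pr(a^T x <= b) >= 1 - eps for x ~ N(mean, cov).

    Equivalent deterministic form: a^T mean + z * sqrt(a^T cov a) <= b,
    where z is the standard normal quantile at 1 - eps.
    """
    z = NormalDist().inv_cdf(1.0 - eps)
    margin = z * float(np.sqrt(a @ cov @ a))
    return bool(a @ mean + margin <= b), margin

# One face of a linearized friction cone: f_x - mu * f_z <= 0 (assumed mu = 0.6).
mu = 0.6
a = np.array([1.0, 0.0, -mu])
cov = np.diag([4.0, 4.0, 9.0])  # assumed force covariance in N^2

safe, margin = chance_constraint_holds(a, 0.0, np.array([20.0, 0.0, 50.0]), cov)
risky, _ = chance_constraint_holds(a, 0.0, np.array([28.0, 0.0, 50.0]), cov)
```

The second force satisfies the nominal constraint (28 - 0.6 * 50 = -2 <= 0) but fails the tightened one, which shows how the margin takes the place of a hand-tuned safety factor.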

Predictive Mapping of Spectral Signatures from RGB Imagery for Off-Road Terrain Analysis

May 08, 2024

Abstract:Accurate identification of complex terrain characteristics, such as soil composition and coefficient of friction, is essential for model-based planning and control of mobile robots in off-road environments. Spectral signatures leverage distinct patterns of light absorption and reflection to identify various materials, enabling precise characterization of their inherent properties. Recent research in robotics has explored the adoption of spectroscopy to enhance perception and interaction with environments. However, the significant cost and elaborate setup required for mounting these sensors present formidable barriers to widespread adoption. In this study, we introduce RS-Net (RGB to Spectral Network), a deep neural network architecture designed to map RGB images to corresponding spectral signatures. We illustrate how RS-Net can be synergistically combined with Co-Learning techniques for terrain property estimation. Initial results demonstrate the effectiveness of this approach in characterizing spectral signatures across an extensive off-road real-world dataset. These findings highlight the feasibility of terrain property estimation using only RGB cameras.

Learning a Stable, Safe, Distributed Feedback Controller for a Heterogeneous Platoon of Vehicles

Apr 18, 2024

Abstract:Platooning of autonomous vehicles has the potential to increase safety and fuel efficiency on highways. The goal of platooning is to have each vehicle drive at some speed (set by the leader) while maintaining a safe distance from its neighbors. Many prior works have analyzed various controllers for platooning, most commonly linear feedback and distributed model predictive controllers. In this work, we introduce an algorithm for learning a stable, safe, distributed controller for a heterogeneous platoon. Our algorithm relies on recent developments in learning neural network stability and safety certificates. We train a controller for autonomous platooning in simulation and evaluate its performance on hardware with a platoon of four F1Tenth vehicles. We then perform further analysis in simulation with a platoon of 100 vehicles. Experimental results demonstrate the practicality of the algorithm and the learned controller by comparing the performance of the neural network controller to linear feedback and distributed model predictive controllers.

User-customizable Shared Control for Fine Teleoperation via Virtual Reality

Mar 19, 2024

Abstract:Shared control can ease and enhance a human operator's ability to teleoperate robots, particularly for intricate tasks demanding fine control over multiple degrees of freedom. However, the arbitration process dictating how much autonomous assistance to administer in shared control can confuse novice operators and impede their understanding of the robot's behavior. To overcome these adverse side-effects, we propose a novel formulation of shared control that enables operators to tailor the arbitration to their unique capabilities and preferences. Unlike prior approaches to customizable shared control where users could indirectly modify the latent parameters of the arbitration function by issuing a feedback command, we instead make these parameters observable and directly editable via a virtual reality (VR) interface. We present our user-customizable shared control method for a teleoperation task in SE(3), known as the buzz wire game. A user study is conducted with participants teleoperating a robotic arm in VR to complete the game. The experiment spanned two weeks per subject to investigate longitudinal trends. Our findings reveal that users allowed to interactively tune the arbitration parameters across trials generalize well to adaptations in the task, exhibiting improvements in precision and fluency over direct teleoperation and conventional shared control.
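The arbitration idea can be made concrete with a linear-blending sketch in which the arbitration parameters are plain, directly editable values. The logistic arbitration function, the parameter names, and the defaults below are hypothetical; the abstract does not specify the form used in the study.

```python
import math
from dataclasses import dataclass

@dataclass
class ArbitrationParams:
    """User-editable arbitration parameters (hypothetical names and defaults)."""
    max_assist: float = 0.8   # upper bound on the robot's share of control
    midpoint: float = 0.10    # distance to goal (m) where assistance is half of max
    steepness: float = 40.0   # how sharply assistance ramps up near the goal

def assistance_level(distance, params):
    """Logistic arbitration: more autonomous assistance as the goal gets closer."""
    return params.max_assist / (1.0 + math.exp(params.steepness * (distance - params.midpoint)))

def blend(human_cmd, robot_cmd, alpha):
    """Linear blending of two 6-DoF velocity commands with robot weight alpha."""
    return [(1.0 - alpha) * h + alpha * r for h, r in zip(human_cmd, robot_cmd)]

params = ArbitrationParams()
alpha_near = assistance_level(0.02, params)
alpha_far = assistance_level(0.30, params)
command = blend([0.1, 0.0, 0.0, 0.0, 0.0, 0.0],
                [0.0, 0.1, 0.0, 0.0, 0.0, 0.0],
                alpha_near)
```

Exposing `max_assist`, `midpoint`, and `steepness` as editable fields mirrors the abstract's point: the operator adjusts the arbitration itself rather than influencing hidden parameters through feedback commands.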

StereoNavNet: Learning to Navigate using Stereo Cameras with Auxiliary Occupancy Voxels

Mar 18, 2024

Abstract:Visual navigation has received significant attention recently. Most of the prior works focus on predicting navigation actions based on semantic features extracted from visual encoders. However, these approaches often rely on large datasets and exhibit limited generalizability. In contrast, our approach draws inspiration from traditional navigation planners that operate on geometric representations, such as occupancy maps. We propose StereoNavNet (SNN), a novel visual navigation approach employing a modular learning framework comprising perception and policy modules. Within the perception module, we estimate an auxiliary 3D voxel occupancy grid from stereo RGB images and extract geometric features from it. These features, along with user-defined goals, are utilized by the policy module to predict navigation actions. Through extensive empirical evaluation, we demonstrate that SNN outperforms baseline approaches in terms of success rates, success weighted by path length, and navigation error. Furthermore, SNN exhibits better generalizability, characterized by maintaining leading performance when navigating across previously unseen environments.
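Success weighted by path length (SPL) is commonly defined as the mean over episodes of S_i * l_i / max(p_i, l_i), where S_i is a binary success flag, l_i the shortest-path length, and p_i the length of the path actually taken. A minimal sketch of that standard definition follows; the episode values are made up for illustration and are not results from the paper.

```python
def spl(successes, shortest_lengths, path_lengths):
    """Success weighted by Path Length, averaged over episodes."""
    total = 0.0
    for s, l, p in zip(successes, shortest_lengths, path_lengths):
        # A successful episode scores 1 only if the agent took the shortest path.
        total += s * l / max(p, l)
    return total / len(successes)

# Three hypothetical episodes: optimal success, detoured success, failure.
score = spl(successes=[1, 1, 0],
            shortest_lengths=[10.0, 5.0, 8.0],
            path_lengths=[10.0, 10.0, 8.0])
```

Here the detoured success contributes only 0.5 and the failure contributes 0, so the score is (1 + 0.5 + 0) / 3 = 0.5 even though the plain success rate is 2/3.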

Design and Realization of a Benchmarking Testbed for Evaluating Autonomous Platooning Algorithms

Feb 14, 2024

Abstract:Autonomous vehicle platoons present near- and long-term opportunities to enhance operational efficiencies and save lives. The past 30 years have seen rapid development in the autonomous driving space, enabling new technologies that will alleviate the strain placed on human drivers and reduce vehicle emissions. This paper introduces a testbed for evaluating and benchmarking platooning algorithms on 1/10th scale vehicles with onboard sensors. To demonstrate the testbed's utility, we evaluate three algorithms, linear feedback and two variations of distributed model predictive control, and compare their results on a typical platooning scenario where the lead vehicle tracks a reference trajectory that changes speed multiple times. We validate our algorithms in simulation to analyze the performance as the platoon size increases, and find that the distributed model predictive control algorithms outperform linear feedback on hardware and in simulation.

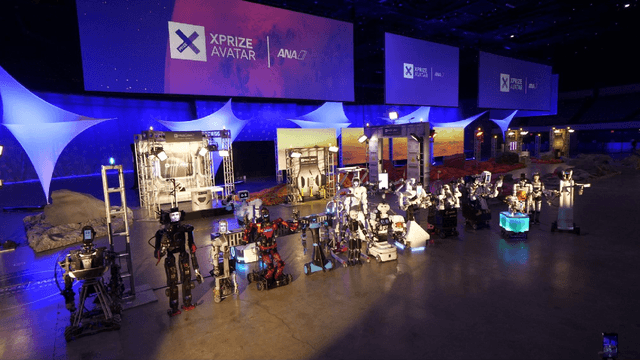

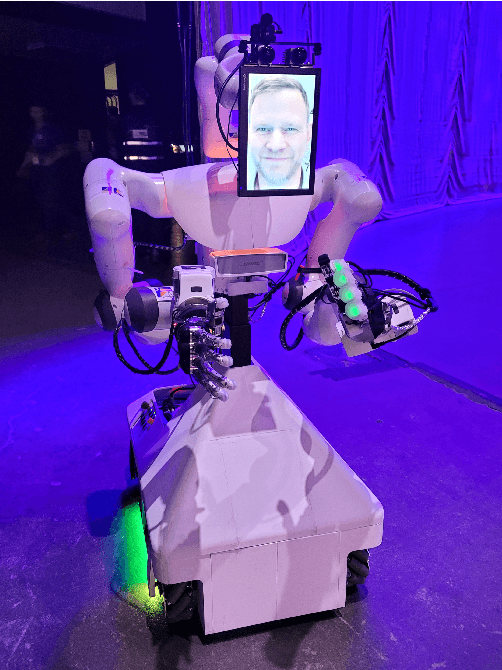

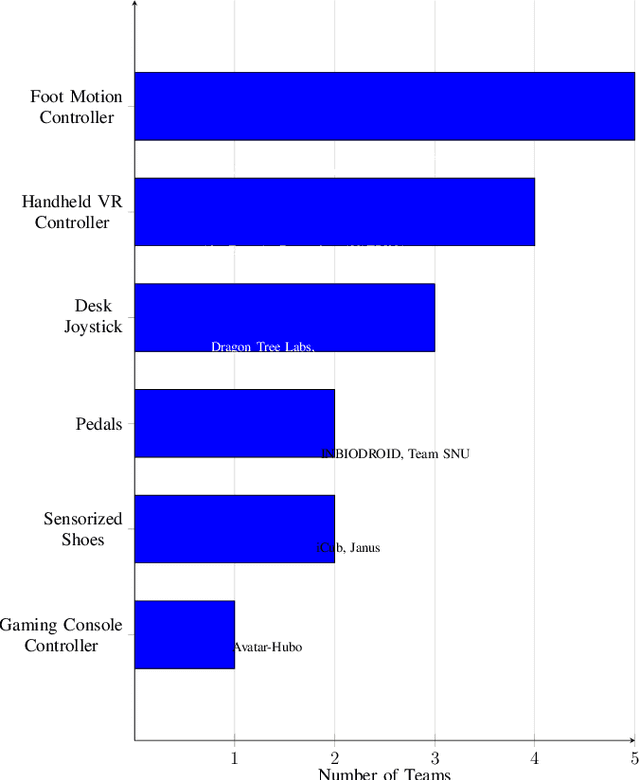

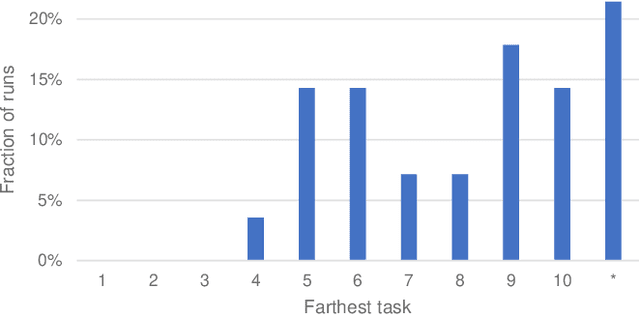

Analysis and Perspectives on the ANA Avatar XPRIZE Competition

Jan 10, 2024

Abstract:The ANA Avatar XPRIZE was a four-year competition to develop a robotic "avatar" system to allow a human operator to sense, communicate, and act in a remote environment as though physically present. The competition featured a unique requirement that judges would operate the avatars after less than one hour of training on the human-machine interfaces, and avatar systems were judged on both objective and subjective scoring metrics. This paper presents a unified summary and analysis of the competition from technical, judging, and organizational perspectives. We study the use of telerobotics technologies and innovations pursued by the competing teams in their avatar systems, and correlate the use of these technologies with judges' task performance and subjective survey ratings. It also summarizes perspectives from team leads, judges, and organizers about the competition's execution and impact to inform the future development of telerobotics and telepresence.
