Cheng Feng

Following the Teacher's Footsteps: Scheduled Checkpoint Distillation for Domain-Specific LLMs

Jan 15, 2026

Abstract:Large language models (LLMs) are challenging to deploy for domain-specific tasks due to their massive scale. While distilling a fine-tuned LLM into a smaller student model is a promising alternative, the capacity gap between teacher and student often leads to suboptimal performance. This raises a key question: when and how can a student model match or even surpass its teacher on domain-specific tasks? In this work, we propose a novel theoretical insight: a student can outperform its teacher if its advantage on a Student-Favored Subdomain (SFS) outweighs its deficit on the Teacher-Favored Subdomain (TFS). Guided by this insight, we propose Scheduled Checkpoint Distillation (SCD), which reduces the TFS deficit by emulating the teacher's convergence process during supervised fine-tuning (SFT) on the domain task, and a sample-wise Adaptive Weighting (AW) mechanism to preserve student strengths on SFS. Experiments across diverse domain tasks--including QA, NER, and text classification in multiple languages--show that our method consistently outperforms existing distillation approaches, allowing the student model to match or even exceed the performance of its fine-tuned teacher.

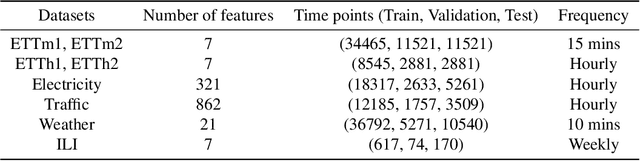

TSFM in-context learning for time-series classification of bearing-health status

Nov 19, 2025

Abstract:This paper introduces a classification method using in-context learning in time-series foundation models (TSFM). We show how data that was not part of the TSFM training corpus can be classified without fine-tuning the model. Examples are represented in the form of targets (class id) and covariates (data matrix) within the prompt of the model, which makes it possible to classify an unknown covariate data pattern along the forecast axis through in-context learning. We apply this method to vibration data for assessing the health state of a bearing within a servo-press motor. The method transforms frequency-domain reference signals into pseudo time-series patterns, generates aligned covariate and target signals, and uses the TSFM to predict the probability that the classified data corresponds to each predefined label. Leveraging the scalability of pre-trained models, this method demonstrates efficacy across varied operational conditions. This marks significant progress beyond custom narrow AI solutions towards broader, AI-driven maintenance systems.

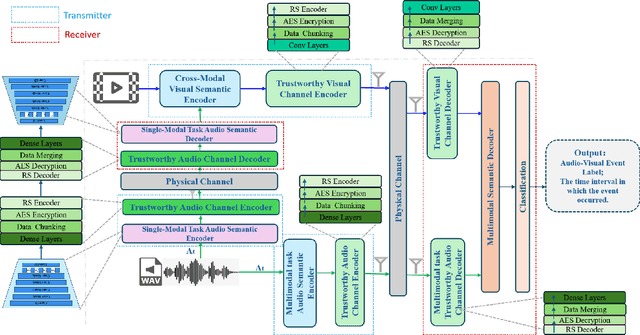

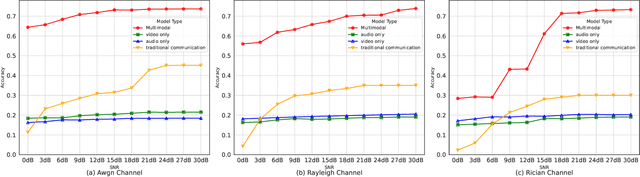

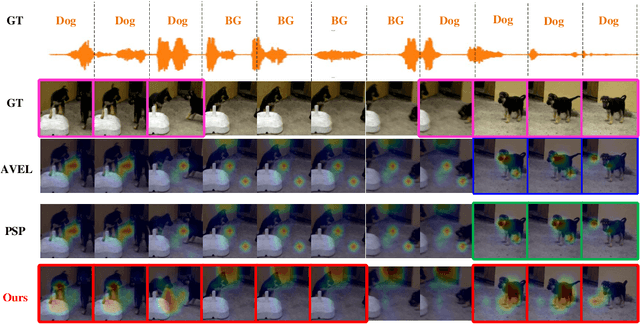

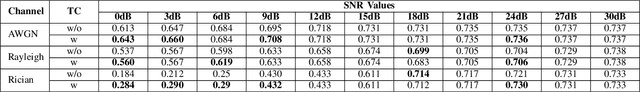

Multimodal Trustworthy Semantic Communication for Audio-Visual Event Localization

Nov 04, 2024

Abstract:The exponential growth in wireless data traffic, driven by the proliferation of mobile devices and smart applications, poses significant challenges for modern communication systems. Ensuring the secure and reliable transmission of multimodal semantic information is increasingly critical, particularly for tasks like Audio-Visual Event (AVE) localization. This letter introduces MMTrustSC, a novel framework designed to address these challenges by enhancing the security and reliability of multimodal communication. MMTrustSC incorporates advanced semantic encoding techniques to safeguard data integrity and privacy. It features a two-level coding scheme that combines error-correcting codes with conventional encoders to improve the accuracy and reliability of multimodal data transmission. Additionally, MMTrustSC employs hybrid encryption, integrating both asymmetric and symmetric encryption methods, to secure semantic information and ensure its confidentiality and integrity across potentially hostile networks. Simulation results validate MMTrustSC's effectiveness, demonstrating substantial improvements in data transmission accuracy and reliability for AVE localization tasks. This framework represents a significant advancement in managing intermodal information complementarity and mitigating physical noise, thus enhancing overall system performance.

Only the Curve Shape Matters: Training Foundation Models for Zero-Shot Multivariate Time Series Forecasting through Next Curve Shape Prediction

Feb 19, 2024

Abstract:We present General Time Transformer (GTT), an encoder-only style foundation model for zero-shot multivariate time series forecasting. GTT is pretrained on a large dataset of 200M high-quality time series samples spanning diverse domains. In our proposed framework, the task of multivariate time series forecasting is formulated as a channel-wise next curve shape prediction problem, where each time series sample is represented as a sequence of non-overlapping curve shapes with a unified numerical magnitude. GTT is trained to predict the next curve shape based on a window of past curve shapes in a channel-wise manner. Experimental results demonstrate that GTT exhibits superior zero-shot multivariate forecasting capabilities on unseen time series datasets, even surpassing state-of-the-art supervised baselines. Additionally, we investigate the impact of varying GTT model parameters and training dataset scales, observing that the scaling law also holds in the context of zero-shot multivariate time series forecasting.
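
The curve-shape representation described above can be illustrated with a minimal sketch. It assumes that "unified numerical magnitude" means z-score scaling of the whole context window and that curve shapes are fixed-length patches; the abstract confirms neither detail, and the function name `to_curve_shapes` and parameter `patch_len` are hypothetical.

```python
from statistics import mean, pstdev
from typing import List


def to_curve_shapes(series: List[float], patch_len: int) -> List[List[float]]:
    """Split one channel into non-overlapping, scale-normalized patches.

    Assumption: z-score scaling stands in for the "unified numerical
    magnitude" mentioned in the abstract.
    """
    mu = mean(series)
    # Fall back to 1.0 for a constant series to avoid dividing by zero.
    sigma = pstdev(series) or 1.0
    scaled = [(x - mu) / sigma for x in series]
    # Drop trailing points that do not fill a complete patch.
    usable = len(scaled) - len(scaled) % patch_len
    return [scaled[i:i + patch_len] for i in range(0, usable, patch_len)]


# Each channel of a multivariate series would be processed independently.
shapes = to_curve_shapes([1.0, 2.0, 3.0, 4.0, 5.0], patch_len=2)
```

A model trained on such patches would take a window of past patches per channel and predict the next one; that part is omitted here.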

PARs: Predicate-based Association Rules for Efficient and Accurate Model-Agnostic Anomaly Explanation

Dec 18, 2023

Abstract:While new and effective methods for anomaly detection are frequently introduced, many studies prioritize the detection task without considering the need for explainability. Yet, in real-world applications, anomaly explanation, which aims to explain why specific data instances are identified as anomalies, is an equally important task. In this work, we present a novel approach for efficient and accurate model-agnostic anomaly explanation for tabular data using Predicate-based Association Rules (PARs). PARs can provide intuitive explanations not only about which features of the anomaly instance are abnormal, but also about the reasons behind their abnormality. Our user study indicates that the anomaly explanation form of PARs is better comprehended and preferred by regular users of anomaly detection systems as compared to existing model-agnostic explanation options. Furthermore, we conduct extensive experiments on various benchmark datasets, demonstrating that PARs compare favorably to state-of-the-art model-agnostic methods in terms of computing efficiency and explanation accuracy on anomaly explanation tasks. The code for the PARs tool is available at https://github.com/NSIBF/PARs-EXAD.

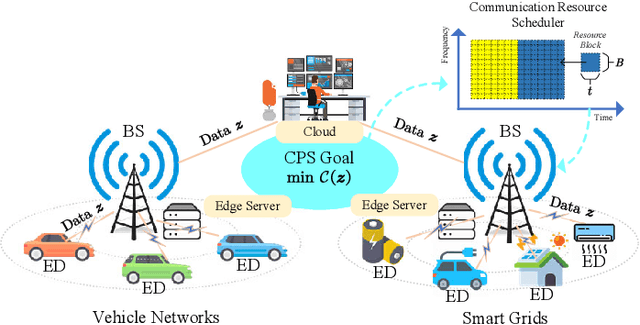

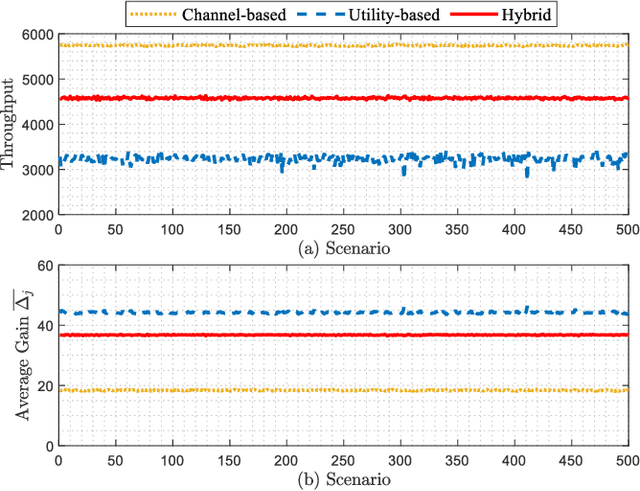

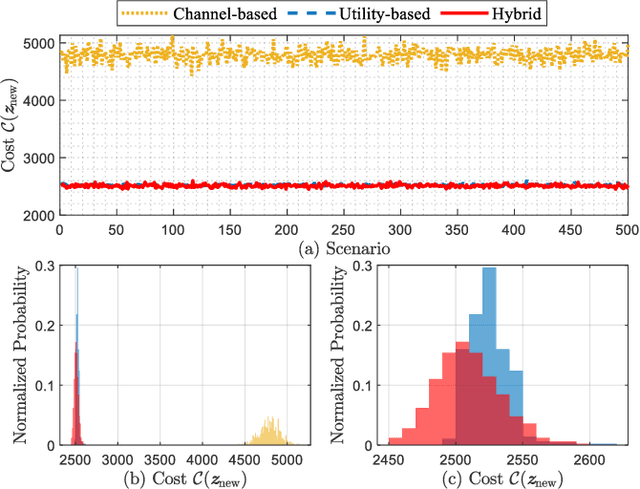

Goal-Oriented Wireless Communication Resource Allocation for Cyber-Physical Systems

Nov 06, 2023

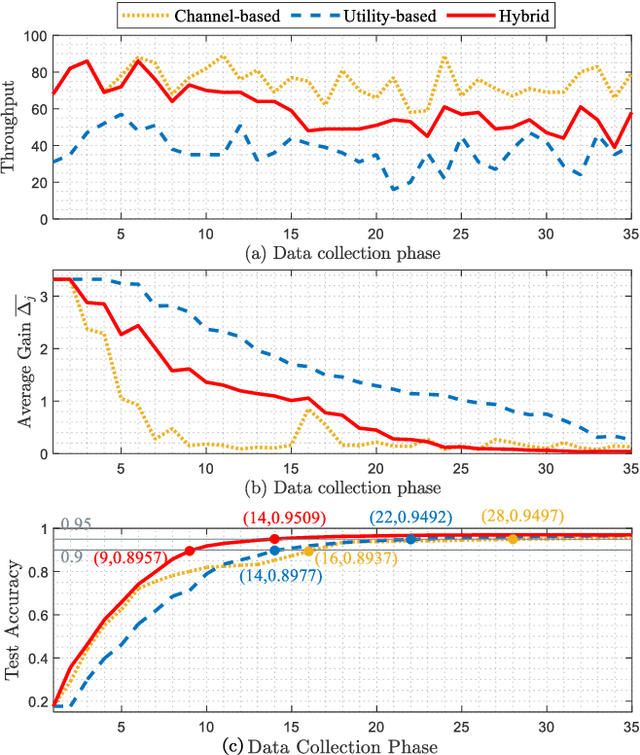

Abstract:The proliferation of novel industrial applications at the wireless edge, such as smart grids and vehicle networks, demands the advancement of cyber-physical systems (CPSs). The performance of CPSs is closely linked to the last-mile wireless communication networks, which often become bottlenecks due to their inherently limited resources. Current CPS operations often treat wireless communication networks as unpredictable and uncontrollable variables, ignoring the potential adaptability of wireless networks, which results in inefficient and overly conservative CPS operations. Meanwhile, current wireless communications often focus more on throughput and other transmission-related metrics than on CPS goals. In this study, we introduce the framework of goal-oriented wireless communication resource allocations, accounting for the semantics and significance of data for CPS operation goals. This guarantees optimal CPS performance from a cybernetic standpoint. We formulate a bandwidth allocation problem aimed at maximizing the information utility gain of transmitted data brought to CPS operation goals. Since the goal-oriented bandwidth allocation problem is a large-scale combinatorial problem, we propose a divide-and-conquer and greedy solution algorithm. The information utility gain is first approximately decomposed into marginal utility information gains and computed in a parallel manner. Subsequently, the bandwidth allocation problem is reformulated as a knapsack problem, which can be further solved greedily with a guaranteed sub-optimality gap. We further demonstrate how our proposed goal-oriented bandwidth allocation algorithm can be applied in four potential CPS applications, including data-driven decision-making, edge learning, federated learning, and distributed optimization.
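
The knapsack reformulation mentioned above can be sketched with the textbook density-greedy heuristic, which ranks items by utility per unit of bandwidth. This is an illustration only: the paper's actual algorithm and its sub-optimality bound are not given in the abstract, and the function name, arguments, and sample numbers below are hypothetical.

```python
from typing import List, Tuple


def greedy_bandwidth_allocation(
    utilities: List[float], costs: List[float], budget: float
) -> Tuple[List[int], float]:
    """Select data items greedily by marginal utility per unit of bandwidth.

    utilities[i]: assumed marginal information utility gain of item i.
    costs[i]:     bandwidth needed to transmit item i (must be positive).
    budget:       total available bandwidth.
    """
    # Rank items by utility density, highest first.
    order = sorted(
        range(len(utilities)),
        key=lambda i: utilities[i] / costs[i],
        reverse=True,
    )
    chosen: List[int] = []
    remaining = budget
    total_utility = 0.0
    for i in order:
        # Take the item only if it still fits in the remaining bandwidth.
        if costs[i] <= remaining:
            chosen.append(i)
            remaining -= costs[i]
            total_utility += utilities[i]
    return sorted(chosen), total_utility


selected, gained = greedy_bandwidth_allocation(
    utilities=[10.0, 6.0, 4.0], costs=[5.0, 2.0, 4.0], budget=7.0
)
```

With these made-up numbers the heuristic selects items 0 and 1 for a total utility of 16.0. Because the marginal utilities are independent once decomposed, they could be computed in parallel before this selection step.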

RT-MonoDepth: Real-time Monocular Depth Estimation on Embedded Systems

Aug 21, 2023

Abstract:Depth sensing is a crucial function of unmanned aerial vehicles and autonomous vehicles. Due to the small size and simple structure of monocular cameras, there has been a growing interest in depth estimation from a single RGB image. However, state-of-the-art monocular CNN-based depth estimation methods using fairly complex deep neural networks are too slow for real-time inference on embedded platforms. This paper addresses the problem of real-time depth estimation on embedded systems. We propose two efficient and lightweight encoder-decoder network architectures, RT-MonoDepth and RT-MonoDepth-S, to reduce computational complexity and latency. Our methodologies demonstrate that it is possible to achieve accuracy similar to that of prior state-of-the-art works on depth estimation at a faster inference speed. Our proposed networks, RT-MonoDepth and RT-MonoDepth-S, run at 18.4 and 30.5 FPS on NVIDIA Jetson Nano and at 253.0 and 364.1 FPS on NVIDIA Jetson AGX Orin on a single RGB image of resolution 640×192, and achieve relative state-of-the-art accuracy on the KITTI dataset. To the best of the authors' knowledge, this paper achieves the best accuracy and fastest inference speed compared with existing fast monocular depth estimation methods.

Towards Interpretable Anomaly Detection via Invariant Rule Mining

Nov 24, 2022

Abstract:In the research area of anomaly detection, novel and promising methods are frequently developed. However, most existing studies, especially those leveraging deep neural networks, focus exclusively on the detection task and ignore the interpretability of the underlying models as well as their detection results. Yet anomaly interpretation, which aims to explain why specific data instances are identified as anomalies, is an equally (if not more) important task in many real-world applications. In this work, we pursue highly interpretable anomaly detection via invariant rule mining. Specifically, we leverage decision tree learning and association rule mining to automatically generate invariant rules that are consistently satisfied by the underlying data generation process. The generated invariant rules can provide explicit explanation of anomaly detection results and thus are extremely useful for subsequent decision-making. Furthermore, our empirical evaluation shows that the proposed method can also achieve comparable performance in terms of AUC and partial AUC with popular anomaly detection models in various benchmark datasets.

Robust Learning of Deep Time Series Anomaly Detection Models with Contaminated Training Data

Aug 03, 2022

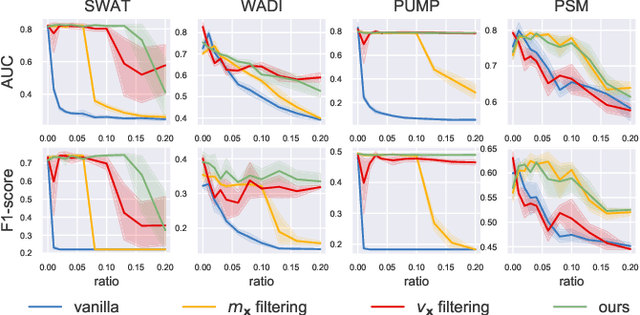

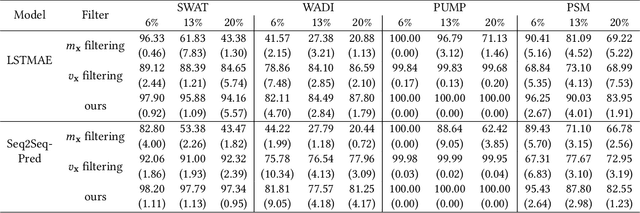

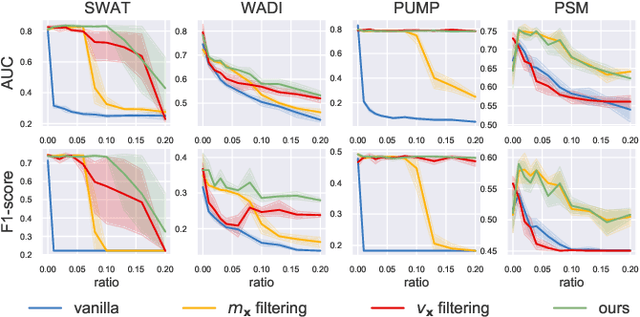

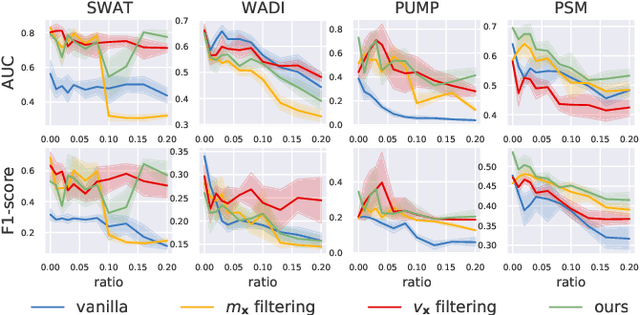

Abstract:Time series anomaly detection (TSAD) is an important data mining task with numerous applications in the IoT era. In recent years, a large number of deep neural network-based methods have been proposed, demonstrating significantly better performance than conventional methods in addressing challenging TSAD problems in a variety of areas. Nevertheless, these deep TSAD methods typically rely on a clean training dataset that is not polluted by anomalies to learn the "normal profile" of the underlying dynamics. This requirement is nontrivial since a clean dataset can hardly be provided in practice. Moreover, without awareness of their robustness, blindly applying deep TSAD methods with potentially contaminated training data can incur significant performance degradation in the detection phase. In this work, to tackle this important challenge, we first investigate the robustness of commonly used deep TSAD methods with contaminated training data, which provides a guideline for applying these methods when the provided training data are not guaranteed to be anomaly-free. Furthermore, we propose a model-agnostic method which can effectively improve the robustness of learning mainstream deep TSAD models with potentially contaminated data. Experimental results show that our method can consistently prevent or mitigate performance degradation of mainstream deep TSAD models on widely used benchmark datasets.

Reliable Offline Model-based Optimization for Industrial Process Control

May 15, 2022

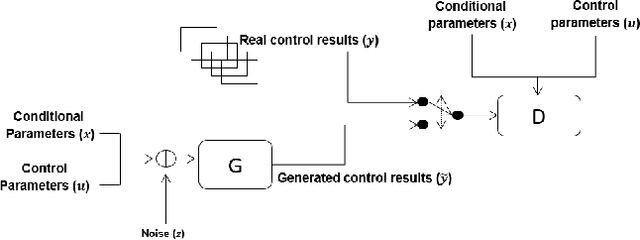

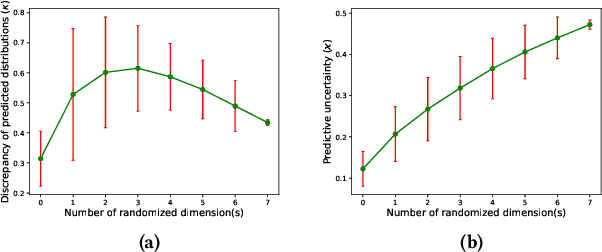

Abstract:In the research area of offline model-based optimization, novel and promising methods are frequently developed. However, implementing such methods in real-world industrial systems such as production lines for process control is oftentimes a frustrating process. In this work, we address two important problems to extend the current success of offline model-based optimization to industrial process control problems: 1) how to learn a reliable dynamics model from offline data for industrial processes, and 2) how to learn a reliable but not over-conservative control policy from offline data by utilizing existing model-based optimization algorithms. Specifically, we propose a dynamics model based on an ensemble of conditional generative adversarial networks to achieve accurate reward calculation in industrial scenarios. Furthermore, we propose an epistemic-uncertainty-penalized reward evaluation function which can effectively avoid giving over-estimated rewards to out-of-distribution inputs during the learning/searching of the optimal control policy. We provide extensive experiments with the proposed method on two representative cases (a discrete control case and a continuous control case), showing that our method compares favorably to several baselines in offline policy learning for industrial process control.
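
One common way to realize an uncertainty-penalized reward is to subtract a multiple of the ensemble's disagreement from its mean prediction. The sketch below assumes that form, using the standard deviation across ensemble members as a proxy for epistemic uncertainty; the abstract does not specify the actual function, and the name `penalized_reward` and the `penalty` coefficient are hypothetical.

```python
from statistics import mean, pstdev
from typing import Sequence


def penalized_reward(ensemble_rewards: Sequence[float], penalty: float = 1.0) -> float:
    """Mean ensemble reward minus a penalty on ensemble disagreement.

    Assumption: disagreement (population standard deviation) across
    ensemble members approximates epistemic uncertainty, so inputs far
    from the offline data receive a lower, more conservative reward.
    """
    return mean(ensemble_rewards) - penalty * pstdev(ensemble_rewards)


# Members agree: no penalty is applied.
in_distribution = penalized_reward([1.0, 1.0, 1.0])
# Members disagree: the same mean reward is discounted.
out_of_distribution = penalized_reward([0.0, 2.0], penalty=1.0)
```

A larger `penalty` makes the resulting policy more conservative, which is the trade-off the abstract describes as reliable but not over-conservative.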
