Bidan Huang

A novel tactile palm for robotic object manipulation

Aug 10, 2023

Abstract:Tactile sensing is of great importance in human hand use, such as object exploration, grasping and manipulation. Different types of tactile sensors have been designed over the past decades, mainly focused on either the fingertips for grasping or the upper body for human-robot interaction. In this paper, a novel soft tactile sensor is designed to mimic the functionality of the human palm and estimate the contact state of different objects. The tactile palm consists of three main parts: an electrode array, a soft cover skin and a conductive sponge. The design principles are described in detail, with a number of experiments showcasing the effectiveness of the proposed design.

Crossing the Reality Gap in Tactile-Based Learning

May 23, 2023

Abstract:Tactile sensors are believed to be essential in robotic manipulation, and prior works often rely on experts to reason about the sensor feedback and design a controller. With the recent advancement in data-driven approaches, complicated manipulation can be realised, but an accurate and efficient tactile simulation is necessary for policy training. To this end, we present an approach to model a commonly used pressure sensor array in simulation and to train a tactile-based manipulation policy with sim-to-real transfer in mind. Each taxel in our model is represented as a mass-spring-damper system, in which the parameters are iteratively identified as plausible ranges. This allows a policy to be trained with domain randomisation, which improves its robustness to different environments. Then, we introduce encoders to further align the critical tactile features in a latent space. Finally, our experiments answer questions on tactile-based manipulation, tactile modelling and sim-to-real performance.
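As a rough illustration of the idea in this abstract, the sketch below models one taxel as a mass-spring-damper and draws its parameters from ranges, as domain randomisation would. It is not the authors' implementation: the `Taxel` class, the `sample_taxel` helper, the semi-implicit Euler integrator and every numeric range in `RANGES` are assumptions made for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class Taxel:
    """One tactile element modelled as a mass-spring-damper (hypothetical)."""
    mass: float       # kg
    stiffness: float  # N/m
    damping: float    # N*s/m
    x: float = 0.0    # displacement, m
    v: float = 0.0    # velocity, m/s

    def step(self, force: float, dt: float) -> float:
        """Advance by dt under an applied force; return the new displacement."""
        # Newton's second law: m * a = F - c * v - k * x
        a = (force - self.damping * self.v - self.stiffness * self.x) / self.mass
        # Semi-implicit Euler: update velocity first, then position.
        self.v += a * dt
        self.x += self.v * dt
        return self.x


# Illustrative parameter ranges only; these numbers are assumptions.
RANGES = {
    "mass": (0.001, 0.002),
    "stiffness": (800.0, 1200.0),
    "damping": (1.0, 3.0),
}


def sample_taxel(rng: random.Random, ranges: dict) -> Taxel:
    """Draw one set of taxel parameters uniformly from the given ranges."""
    params = {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
    return Taxel(**params)


if __name__ == "__main__":
    rng = random.Random(0)
    taxel = sample_taxel(rng, RANGES)  # resample once per training episode
    for _ in range(2000):
        taxel.step(force=1.0, dt=1e-4)
    print(f"steady-state displacement: {taxel.x:.6f} m")
```

Under a constant force the displacement settles at force divided by stiffness, so a different sampled stiffness in each episode yields a different sensor response for the same contact.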

Dexterous In-Hand Manipulation of Slender Cylindrical Objects through Deep Reinforcement Learning with Tactile Sensing

Apr 11, 2023

Abstract:Continuous in-hand manipulation is an important physical interaction skill, where tactile sensing provides indispensable contact information to enable dexterous manipulation of small objects. This work proposed a framework for end-to-end policy learning with tactile feedback and sim-to-real transfer, which achieved fine in-hand manipulation that controls the pose of a thin cylindrical object, such as a long stick, to track various continuous trajectories through multiple contacts of three fingertips of a dexterous robot hand with tactile sensor arrays. We estimated the central contact position between the stick and each fingertip from the high-dimensional tactile information and showed that the learned policies achieved effective manipulation performance with the processed tactile feedback. The policies were trained with deep reinforcement learning in simulation and successfully transferred to real-world experiments, using coordinated model calibration and domain randomization. We evaluated the effectiveness of tactile information via comparative studies and validated the sim-to-real performance through real-world experiments.
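The abstract does not say how the central contact position is computed. One common way to reduce a tactile array to a single contact point is a pressure-weighted centroid, sketched below as an assumption rather than the paper's method; the `contact_centroid` function and its `threshold` parameter are hypothetical names.

```python
from typing import Optional, Sequence, Tuple


def contact_centroid(
    pressures: Sequence[Sequence[float]],
    threshold: float = 0.0,
) -> Optional[Tuple[float, float]]:
    """Return the pressure-weighted (row, column) centroid of a taxel grid.

    Readings at or below `threshold` are ignored. Returns None when no
    taxel is in contact.
    """
    total = 0.0
    row_sum = 0.0
    col_sum = 0.0
    for r, row in enumerate(pressures):
        for c, p in enumerate(row):
            if p > threshold:
                total += p
                row_sum += r * p
                col_sum += c * p
    if total == 0.0:
        return None
    return (row_sum / total, col_sum / total)


if __name__ == "__main__":
    reading = [
        [0.0, 0.0, 0.0],
        [0.0, 2.0, 2.0],
        [0.0, 0.0, 0.0],
    ]
    print(contact_centroid(reading))
```

A reduction of this kind turns a high-dimensional pressure image into two numbers per fingertip, which keeps the policy input small.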

TacGNN: Learning Tactile-based In-hand Manipulation with a Blind Robot

Apr 03, 2023

Abstract:In this paper, we propose a novel framework for tactile-based dexterous manipulation learning with a blind anthropomorphic robotic hand, i.e. without visual sensing. First, object-related states were extracted from the raw tactile signals by a graph-based perception model, TacGNN. The resulting tactile features were then utilized in the policy learning of an in-hand manipulation task in the second stage. This method was examined on a Baoding ball task: simultaneously manipulating two spheres around each other by 180 degrees in hand. We conducted experiments on object state prediction and in-hand manipulation using a reinforcement learning algorithm (PPO). Results show that TacGNN is effective in predicting object-related states during manipulation, decreasing the RMSE of prediction to 0.096 cm compared to other methods, such as MLP, CNN, and GCN. Finally, the robot hand could finish an in-hand manipulation task relying solely on the robot's own perception: tactile sensing and proprioception. In addition, our methods are tested on three tasks with different difficulty levels and transferred to the real robot without further training.
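For readers unfamiliar with the metric quoted above, root-mean-square error (RMSE) is the square root of the mean squared difference between predictions and ground truth. The sketch below shows the standard definition on made-up numbers; the `rmse` helper is written for this example and says nothing about how the paper computed its figure.

```python
import math
from typing import Sequence


def rmse(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Root-mean-square error between two equal-length sequences."""
    if len(predicted) != len(actual) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    # Made-up positions in cm, for illustration only.
    predicted_cm = [1.0, 2.1, 2.9]
    actual_cm = [1.1, 2.0, 3.0]
    print(f"RMSE: {rmse(predicted_cm, actual_cm):.3f} cm")
```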

Sim-to-Real Transfer for Robotic Manipulation with Tactile Sensory

Feb 28, 2021

Abstract:Reinforcement Learning (RL) methods have been widely applied for robotic manipulations via sim-to-real transfer, typically with proprioceptive and visual information. However, the incorporation of tactile sensing into RL for contact-rich tasks lacks investigation. In this paper, we model a tactile sensor in simulation and study the effects of its feedback in RL-based robotic control via a zero-shot sim-to-real approach with domain randomization. We demonstrate that learning and controlling with feedback from tactile sensor arrays at the gripper, both in simulation and reality, can enhance grasping stability, which leads to a significant improvement in robotic manipulation performance for a door opening task. In real-world experiments, the door open angle was increased by 45% on average for transferred policies with tactile sensing over those without it.

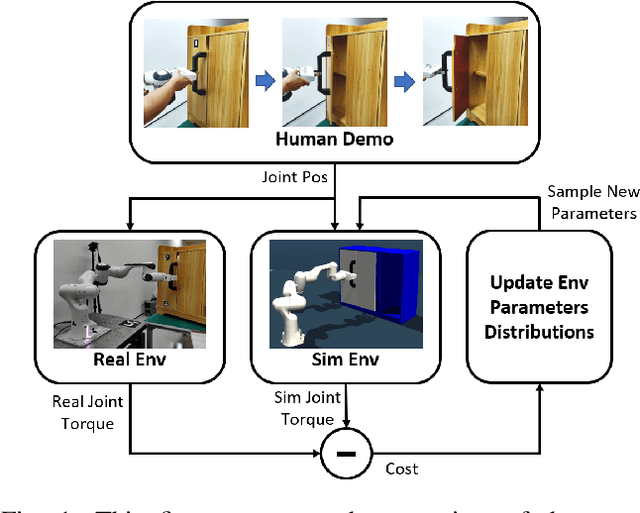

DROID: Minimizing the Reality Gap using Single-Shot Human Demonstration

Feb 23, 2021

Abstract:Reinforcement learning (RL) has demonstrated great success in the past several years. However, most of the scenarios focus on simulated environments. One of the main challenges of transferring a policy learned in a simulated environment to the real world is the discrepancy between the dynamics of the two environments. In prior works, Domain Randomization (DR) has been used to address the reality gap for both robotic locomotion and manipulation tasks. In this paper, we propose Domain Randomization Optimization IDentification (DROID), a novel framework to exploit single-shot human demonstration for identifying the simulator's distribution of dynamics parameters, and apply it to training a policy on a door opening task. Our results show that the proposed framework can identify the difference in dynamics between the simulated and the real worlds, and thus improve policy transfer by optimizing the simulator's randomization ranges. We further illustrate that based on these same identified parameters, our method can generalize the learned policy to different but related tasks.

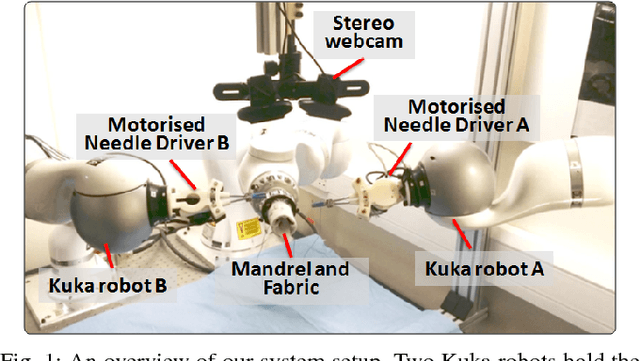

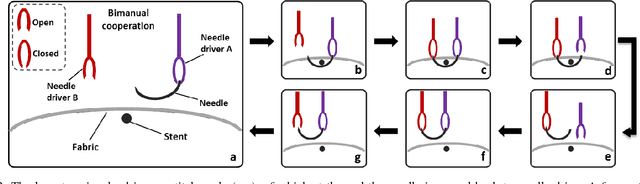

A Multi-Robot Cooperation Framework for Sewing Personalized Stent Grafts

Nov 08, 2017

Abstract:This paper presents a multi-robot system for manufacturing personalized medical stent grafts. The proposed system adopts a modular design, which includes: a (personalized) mandrel module, a bimanual sewing module, and a vision module. The mandrel module incorporates the personalized geometry of patients, while the bimanual sewing module adopts a learning-by-demonstration approach to transfer human hand-sewing skills to the robots. The human demonstrations were first observed by the vision module and then encoded using a statistical model to generate the reference motion trajectories. During autonomous robot sewing, the vision module coordinates the multi-robot collaboration. Experimental results show that the robots can adapt to generalized stent designs. The proposed system can also be used for other manipulation tasks, especially for flexible production of customized products and where bimanual or multi-robot cooperation is required.

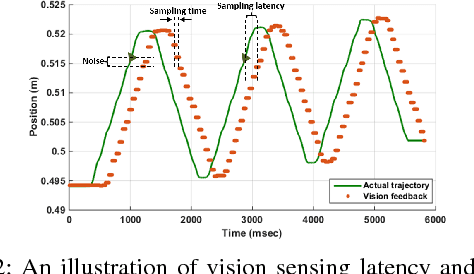

A Vision-Guided Multi-Robot Cooperation Framework for Learning-by-Demonstration and Task Reproduction

Jun 01, 2017

Abstract:This paper presents a vision-based learning-by-demonstration approach to enable robots to learn and complete a manipulation task cooperatively. With this method, a vision system is involved in both the task demonstration and reproduction stages. An expert first demonstrates how to use tools to perform a task, while the tool motion is observed using a vision system. The demonstrations are then encoded using a statistical model to generate a reference motion trajectory. Equipped with the same tools and the learned model, the robot is guided by vision to reproduce the task. The task performance was evaluated in terms of both accuracy and speed. However, simply increasing the robot's speed could decrease the reproduction accuracy. To this end, a dual-rate Kalman filter is employed to compensate for latency between the robot and vision system. More importantly, the sampling rates of the reference trajectory and the robot speed are optimised adaptively according to the learned motion model. We demonstrate the effectiveness of our approach by performing two tasks: a trajectory reproduction task and a bimanual sewing task. We show that using our vision-based approach, the robots can conduct effective learning by demonstrations and perform accurate and fast task reproduction. The proposed approach is generalisable to other manipulation tasks, where bimanual or multi-robot cooperation is required.
