Wang Wei Lee

Crossing the Reality Gap in Tactile-Based Learning

May 23, 2023

Abstract:Tactile sensors are believed to be essential in robotic manipulation, and prior works often rely on experts to reason about the sensor feedback and design a controller. With the recent advancement in data-driven approaches, complicated manipulation can be realised, but an accurate and efficient tactile simulation is necessary for policy training. To this end, we present an approach to model a commonly used pressure sensor array in simulation and to train a tactile-based manipulation policy with sim-to-real transfer in mind. Each taxel in our model is represented as a mass-spring-damper system, whose parameters are iteratively identified as plausible ranges. This allows a policy to be trained with domain randomisation, which improves its robustness to different environments. Then, we introduce encoders to further align the critical tactile features in a latent space. Finally, our experiments answer questions on tactile-based manipulation, tactile modelling and sim-to-real performance.
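The taxel model described in this abstract can be illustrated with a minimal sketch. It treats one taxel as a mass-spring-damper system and draws its parameters from ranges, as a domain-randomised training loop might do once per episode. The parameter ranges, the function names (`sample_params`, `simulate_taxel`) and the integration scheme are illustrative assumptions, not values or code from the paper.

```python
import random
from dataclasses import dataclass


@dataclass
class TaxelParams:
    mass: float       # kg
    stiffness: float  # N/m
    damping: float    # N*s/m


# Hypothetical plausible ranges; the paper identifies its own ranges iteratively.
RANGES = {
    "mass": (0.5e-3, 2.0e-3),
    "stiffness": (500.0, 2000.0),
    "damping": (0.5, 2.0),
}


def sample_params(rng, ranges):
    """Draw one parameter set uniformly from the given ranges."""
    return TaxelParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()})


def simulate_taxel(params, force, dt=1e-4, steps=20000):
    """Integrate m*x'' + c*x' + k*x = force with semi-implicit Euler.

    Returns the taxel displacement after `steps` time steps.
    """
    x, v = 0.0, 0.0
    for _ in range(steps):
        a = (force - params.damping * v - params.stiffness * x) / params.mass
        v += a * dt  # update velocity first
        x += v * dt  # then position, using the new velocity
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    for episode in range(3):
        p = sample_params(rng, RANGES)
        print(episode, p, simulate_taxel(p, force=1.0))
```

Under a constant force the displacement settles at force divided by stiffness, so the randomised stiffness directly changes the simulated taxel reading from one episode to the next.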

Dexterous In-Hand Manipulation of Slender Cylindrical Objects through Deep Reinforcement Learning with Tactile Sensing

Apr 11, 2023

Abstract:Continuous in-hand manipulation is an important physical interaction skill, where tactile sensing provides indispensable contact information to enable dexterous manipulation of small objects. This work proposed a framework for end-to-end policy learning with tactile feedback and sim-to-real transfer, which achieved fine in-hand manipulation that controls the pose of a thin cylindrical object, such as a long stick, to track various continuous trajectories through multiple contacts of three fingertips of a dexterous robot hand with tactile sensor arrays. We estimated the central contact position between the stick and each fingertip from the high-dimensional tactile information and showed that the learned policies achieved effective manipulation performance with the processed tactile feedback. The policies were trained with deep reinforcement learning in simulation and successfully transferred to real-world experiments, using coordinated model calibration and domain randomization. We evaluated the effectiveness of tactile information via comparative studies and validated the sim-to-real performance through real-world experiments.
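One simple way to reduce a tactile array to a single central contact position is a pressure-weighted centroid. The abstract does not say how the authors compute it, so the sketch below is an assumption for illustration only; the function name `contact_centroid`, the grid layout and the threshold are hypothetical.

```python
def contact_centroid(pressures, threshold=0.0):
    """Return the pressure-weighted (row, col) centroid of a 2D taxel grid.

    `pressures` is a list of rows of non-negative readings. Taxels at or
    below `threshold` are ignored. Returns None when nothing is in contact.
    """
    total = 0.0
    row_sum = 0.0
    col_sum = 0.0
    for r, row in enumerate(pressures):
        for c, p in enumerate(row):
            if p > threshold:
                total += p
                row_sum += r * p
                col_sum += c * p
    if total == 0.0:
        return None
    return (row_sum / total, col_sum / total)


if __name__ == "__main__":
    grid = [
        [0.0, 0.0, 0.0],
        [0.0, 2.0, 2.0],
        [0.0, 0.0, 0.0],
    ]
    print(contact_centroid(grid))  # (1.0, 1.5)
```

The result is in taxel-index units; mapping it to a position on the fingertip would need the sensor geometry, which is not given here.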

TacGNN: Learning Tactile-based In-hand Manipulation with a Blind Robot

Apr 03, 2023

Abstract:In this paper, we propose a novel framework for tactile-based dexterous manipulation learning with a blind anthropomorphic robotic hand, i.e. without visual sensing. First, object-related states were extracted from the raw tactile signals by a graph-based perception model, TacGNN. The resulting tactile features were then used in the second stage for policy learning of an in-hand manipulation task. The method was evaluated on a Baoding ball task: rotating two spheres around each other by 180 degrees in hand. We conducted experiments on object state prediction and in-hand manipulation using a reinforcement learning algorithm (PPO). Results show that TacGNN is effective in predicting object-related states during manipulation, decreasing the prediction RMSE to 0.096 cm compared with other methods such as MLP, CNN, and GCN. Finally, the robot hand could finish an in-hand manipulation task relying solely on the robot's own perception: tactile sensing and proprioception. In addition, our methods were tested on three tasks with different difficulty levels and transferred to the real robot without further training.

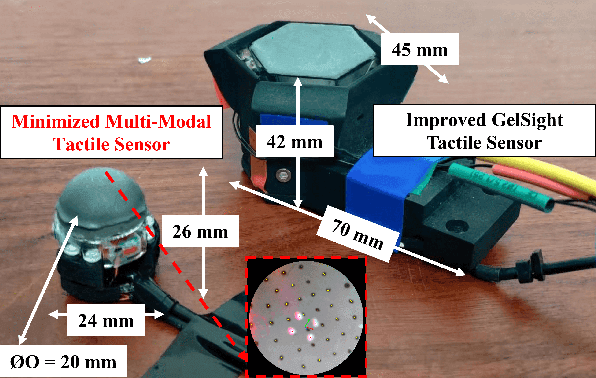

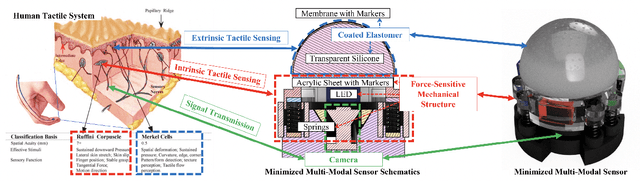

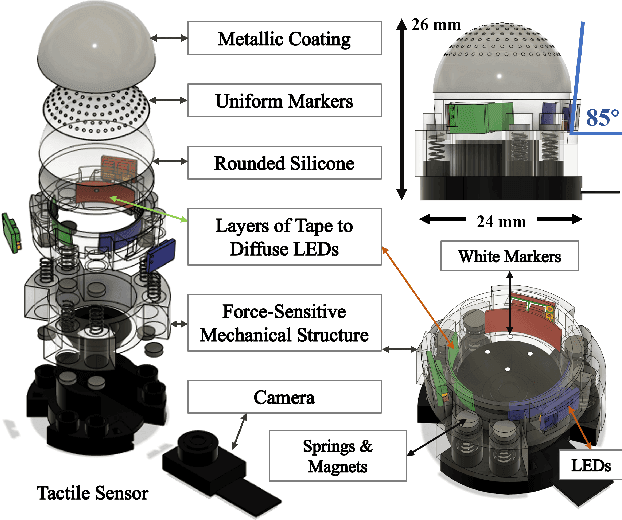

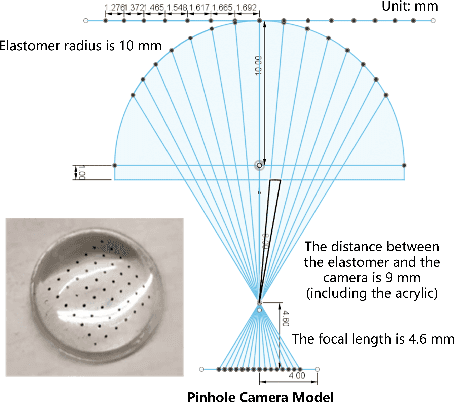

A Miniaturised Camera-based Multi-Modal Tactile Sensor

Mar 06, 2023

Abstract:In conjunction with huge recent progress in camera and computer vision technology, camera-based sensors have increasingly shown considerable promise in relation to tactile sensing. In comparison to competing technologies (be they resistive, capacitive or magnetic based), they offer super-high resolution, while suffering from fewer wiring problems. The human tactile system is composed of various types of mechanoreceptors, each able to perceive and process distinct information such as force, pressure, texture, etc. Camera-based tactile sensors such as GelSight mainly focus on high-resolution geometric sensing on a flat surface, and their force measurement capabilities are limited by the hysteresis and non-linearity of the silicone material. In this paper, we present a miniaturised dome-shaped camera-based tactile sensor that allows accurate force and tactile sensing in a single coherent system. The key novelties of the sensor design are as follows. First, we demonstrate how to build a smooth silicone hemispheric sensing medium with uniform markers on its curved surface. Second, we enhance the illumination of the rounded silicone with diffused LEDs. Third, we construct a force-sensitive mechanical structure in a compact form factor, using springs to accurately perceive forces. Our multi-modal sensor is able to acquire tactile information from multi-axis forces, local force distribution, and contact geometry, all in real time. We apply an end-to-end deep learning method to process all the information.

Sim-to-Real Transfer for Robotic Manipulation with Tactile Sensory

Feb 28, 2021

Abstract:Reinforcement Learning (RL) methods have been widely applied for robotic manipulations via sim-to-real transfer, typically with proprioceptive and visual information. However, the incorporation of tactile sensing into RL for contact-rich tasks lacks investigation. In this paper, we model a tactile sensor in simulation and study the effects of its feedback in RL-based robotic control via a zero-shot sim-to-real approach with domain randomization. We demonstrate that learning and controlling with feedback from tactile sensor arrays at the gripper, both in simulation and reality, can enhance grasping stability, which leads to a significant improvement in robotic manipulation performance for a door opening task. In real-world experiments, the door open angle was increased by 45% on average for transferred policies with tactile sensing over those without it.
