Chaoyang Song

One-DoF Robotic Design of Overconstrained Limbs with Energy-Efficient, Self-Collision-Free Motion

Sep 26, 2025

Abstract:While robotic limbs are often built with multiple degrees of freedom (DoF) inspired by nature, a single-DoF design remains fundamental, providing benefits that include, but are not limited to, simplicity, robustness, cost-effectiveness, and efficiency. Mechanisms, especially those with multiple links and revolute joints connected in closed loops, play an enabling role in introducing motion diversity to 1-DoF systems, although they are usually limited by self-collision over a full-cycle range of motion. This study presents a novel computational approach to designing one-degree-of-freedom (1-DoF) overconstrained robotic limbs for a desired spatial trajectory while achieving energy-efficient, self-collision-free motion in full-cycle rotations. First, we present the geometric optimization problem of linkage-based robotic limbs in a generalized formulation for self-collision-free design. Next, we formulate the spatial trajectory generation problem with the overconstrained linkages by optimizing similarity and dynamics-related metrics. We further optimize the geometric shape of the overconstrained linkage to ensure smooth and collision-free motion driven by a single actuator. We validate the proposed method through various experiments, including personalized automata and bio-inspired hexapod robots. The resulting hexapod robot, featuring overconstrained robotic limbs, demonstrated outstanding energy efficiency during forward walking.

On Flange-based 3D Hand-Eye Calibration for Soft Robotic Tactile Welding

Jul 27, 2024

Abstract:This paper investigates the direct use of standardized robot designs for robot hand-eye calibration by employing 3D scanners with collaborative robots. The well-established geometric features of the robot flange are exploited by directly capturing its point cloud data. In particular, an iterative method is proposed to facilitate point cloud processing toward a refined calibration outcome. Extensive experiments are conducted over a range of collaborative robots, including Universal Robots UR5 & UR10 e-series, Franka Emika, and AUBO i5, using industrial-grade 3D scanners (Photoneo PhoXi S & M) and a commercial-grade 3D scanner (Microsoft Azure Kinect DK). Experimental results show that translational and rotational errors converge efficiently to less than 0.28 mm and 0.25 degrees, respectively, achieving a hand-eye calibration accuracy as high as the camera's resolution and probing the hardware limit. A welding seam tracking system is presented, combining the flange-based calibration method with soft tactile sensing. Experimental results show that the system enables the robot to adjust its motion in real time, ensuring consistent weld quality and paving the way for more efficient and adaptable manufacturing processes.
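The abstract reports calibration accuracy as a translational error (mm) and a rotational error (degrees). The paper's exact error definitions are not given here; a common choice, assumed in the sketch below, is the Euclidean distance between translation vectors and the geodesic angle between rotation matrices, computed from 4x4 homogeneous transforms. The function names and the example transforms are hypothetical and purely illustrative, not data from the study.

```python
import numpy as np

def rotation_error_deg(R_est: np.ndarray, R_ref: np.ndarray) -> float:
    """Geodesic angle (degrees) between two 3x3 rotation matrices."""
    # Relative rotation; its angle theta satisfies trace(R) = 1 + 2 cos(theta).
    R_rel = R_est.T @ R_ref
    cos_theta = (np.trace(R_rel) - 1.0) / 2.0
    # Clip to guard against round-off pushing the value outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))

def translation_error_mm(t_est, t_ref) -> float:
    """Euclidean distance between two translation vectors (same units, e.g. mm)."""
    return float(np.linalg.norm(np.asarray(t_est) - np.asarray(t_ref)))

def hand_eye_errors(T_est: np.ndarray, T_ref: np.ndarray):
    """Return (translation error, rotation error in degrees) for 4x4 transforms."""
    return (translation_error_mm(T_est[:3, 3], T_ref[:3, 3]),
            rotation_error_deg(T_est[:3, :3], T_ref[:3, :3]))

def rot_z(deg: float) -> np.ndarray:
    """Rotation about the z-axis by `deg` degrees (helper for the example)."""
    c, s = np.cos(np.radians(deg)), np.sin(np.radians(deg))
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical example: estimate off by 0.2 deg about z and (0.1, 0.2, 0) mm.
T_ref = np.eye(4)
T_est = np.eye(4)
T_est[:3, :3] = rot_z(0.2)
T_est[:3, 3] = [0.1, 0.2, 0.0]
print(hand_eye_errors(T_est, T_ref))
```

The geodesic angle is used because it is independent of the rotation axis, so a single scalar summarizes rotational accuracy.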

Optimizing Robotic Manipulation with Decision-RWKV: A Recurrent Sequence Modeling Approach for Lifelong Learning

Jul 23, 2024

Abstract:Models based on the Transformer architecture have seen widespread application across fields such as natural language processing, computer vision, and robotics, with large language models like ChatGPT revolutionizing machine understanding of human language and demonstrating impressive memory and reproduction capabilities. Traditional machine learning algorithms struggle with catastrophic forgetting, which undermines the diverse and generalized abilities required for robotic deployment. This paper investigates the Receptance Weighted Key Value (RWKV) framework, known for efficient and effective sequence modeling, and its integration with the decision transformer and experience replay architectures. It focuses on potential performance gains in sequential decision-making and lifelong robotic learning tasks. We introduce the Decision-RWKV (DRWKV) model and conduct extensive experiments using the D4RL datasets within the OpenAI Gym environment and on the D'Claw platform to assess the DRWKV model's performance in single-task tests and lifelong learning scenarios, showcasing its ability to handle multiple subtasks efficiently. The code for all algorithms, training, and image rendering in this study is open-sourced at https://github.com/ancorasir/DecisionRWKV.
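Decision-transformer-style models, which DRWKV builds on, condition each step on the return-to-go, the sum of future rewards from that step onward, interleaved with states and actions. The sketch below shows that preprocessing under the assumption that DRWKV follows the original decision transformer convention (undiscounted returns, so `gamma=1.0` by default); `returns_to_go` and `to_tokens` are hypothetical helper names, not functions from the linked repository.

```python
from typing import List, Sequence, Tuple

def returns_to_go(rewards: Sequence[float], gamma: float = 1.0) -> List[float]:
    """R_t = sum over t' >= t of gamma^(t' - t) * r_t', computed in one backward pass."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        rtg[t] = running
    return rtg

def to_tokens(states, actions, rewards) -> List[Tuple[float, object, object]]:
    """Group (return-to-go, state, action) per time step, in time order."""
    return list(zip(returns_to_go(rewards), states, actions))

rewards = [1.0, 0.0, 2.0, 1.0]
print(returns_to_go(rewards))  # [4.0, 3.0, 3.0, 1.0]
```

At inference time, the first return-to-go is set to a desired target return and decremented by each observed reward, which is how such models are steered toward high-return behavior.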

Evolutionary Morphology Towards Overconstrained Locomotion via Large-Scale, Multi-Terrain Deep Reinforcement Learning

Jul 01, 2024

Abstract:While the Fin-to-Limb evolution of animals has been well researched in biology, such morphological transformation remains under-adopted in the modern design of advanced robotic limbs. This paper investigates a novel class of overconstrained locomotion from a design and learning perspective inspired by evolutionary morphology, aiming to integrate the concept of `intelligent design under constraints' - hereafter referred to as constraint-driven design intelligence - in developing modern robotic limbs with superior energy efficiency. We propose a 3D-printable design of robotic limbs that can be parametrically reconfigured as a classical planar 4-bar linkage, an overconstrained Bennett linkage, or a spherical 4-bar linkage. These limbs adopt co-axial actuation, identical to modern legged robot platforms, with the added capability of upgrading into a wheel-legged system. We then implemented a large-scale, multi-terrain deep reinforcement learning framework to train these reconfigurable limbs for a comparative analysis of energy efficiency in overconstrained locomotion. Results show that the overconstrained limbs exhibit more efficient locomotion than planar limbs during forward and sideways walking over different terrains, including floors, slopes, and stairs, with or without random noise, saving at least 22% mechanical energy in completing the traverse task, with the spherical limbs being the least efficient. The overconstrained limbs also achieve the highest average speed, 0.85 meters per second on flat terrain, which is 20% faster than the planar limbs. This study opens an exciting direction for future research in overconstrained robotics, leveraging evolutionary morphology and reconfigurable mechanism intelligence combined with state-of-the-art methods in deep reinforcement learning.
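The abstract compares limbs by the mechanical energy spent on a traverse and reports a relative saving. Its exact energy definition is not stated here; the sketch below assumes a common one, the time integral of absolute joint power summed over joints, approximated with a fixed time step. The function names and the numbers in the example are hypothetical, not results from the study.

```python
import numpy as np

def mechanical_energy(torques: np.ndarray, velocities: np.ndarray, dt: float) -> float:
    """Approximate sum over joints of the integral of |tau * omega| dt (rectangle rule).

    torques, velocities: arrays of shape (T, n_joints) in N*m and rad/s; dt in seconds.
    """
    power = np.abs(torques * velocities)  # instantaneous mechanical power per joint (W)
    return float(power.sum() * dt)        # energy in joules

def relative_saving(e_baseline: float, e_candidate: float) -> float:
    """Fraction of energy saved by the candidate design relative to the baseline."""
    return (e_baseline - e_candidate) / e_baseline

# Hypothetical two-joint trajectory sampled at 10 Hz.
tau = np.array([[1.0, -2.0], [0.5, 0.0]])
omega = np.array([[2.0, 1.0], [-4.0, 3.0]])
print(mechanical_energy(tau, omega, dt=0.1))
print(relative_saving(100.0, 78.0))
```

Taking the absolute value of power counts negative (braking) work as a cost, which assumes the actuators do not regenerate energy.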

One Fling to Goal: Environment-aware Dynamics for Goal-conditioned Fabric Flinging

Jun 20, 2024

Abstract:Dynamic fabric manipulation is common in manufacturing and domestic settings. While dynamically manipulating a piece of fabric to reach a target state is highly efficient, the task presents considerable challenges due to the varying properties of different fabrics, complex dynamics when interacting with the environment, and the need to meet required goal conditions. To address these challenges, we present One Fling to Goal, an algorithm capable of handling fabric pieces with diverse shapes and physical properties across various scenarios. Our method learns a graph-based dynamics model equipped with environmental awareness. With this dynamics model, we devise a real-time controller that enables high-speed fabric manipulation in a single attempt, requiring less than 3 seconds to finish the goal-conditioned task. We experimentally validate our method on a goal-conditioned manipulation task in five diverse scenarios. Our method significantly improves performance on this goal-conditioned task, achieving an average error of 13.2 mm in complex scenarios. Our method can be seamlessly transferred to real-world robotic systems and generalizes to unseen scenarios in a zero-shot manner.

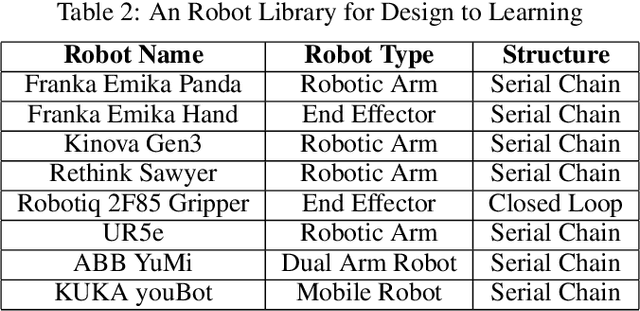

Describing Robots from Design to Learning: Towards an Interactive Lifecycle Representation of Robots

Dec 19, 2023

Abstract:The robot development process is divided into several stages, which creates barriers to the exchange of information between them. We advocate for an interactive lifecycle representation, extending from robot morphology design to learning, and introduce the role of robot description formats in facilitating information transfer throughout this pipeline. We analyzed the relationship between design and simulation, enabling us to employ robot process automation methods to transfer information from the design phase to the learning phase in simulation. As part of this effort, we have developed an open-source plugin called ACDC4Robot for Fusion 360, which automates this process and turns Fusion 360 into a user-friendly graphical interface for creating and editing robot description formats. Additionally, we offer an out-of-the-box robot model library to reduce repetitive tasks. All code is open-sourced at https://github.com/bionicdl-sustech/ACDC4Robot.

SeeThruFinger: See and Grasp Anything with a Soft Touch

Dec 15, 2023

Abstract:We present SeeThruFinger, a soft robotic finger with in-finger vision for multi-modal perception, including visual perception and tactile sensing, for geometrically adaptive and real-time reactive grasping. Multi-modal perception of intrinsic and extrinsic interactions is critical in building intelligent robots that learn. Instead of adding various sensors for different modalities, a preferable solution is to integrate them into one elegant and coherent design, which is a challenging task. This study leverages the Soft Polyhedral Network design as a robotic finger, capable of omni-directional adaptation with an unobstructed view of the finger's spatial deformation from the inside. By embedding a miniature camera underneath, we achieve visual perception of the external environment by inpainting the finger mask using E2FGV, which can be used for object detection in downstream grasping tasks. After contacting the objects, we use real-time object segmentation algorithms, such as XMem, to track the soft finger's spatial deformations. We also trained a Supervised Variational Autoencoder to enable tactile sensing of 6D forces and torques for reactive grasping. As a result, we achieved multi-modal perception, including visual perception and tactile sensing, and soft, adaptive object grasping within a single vision-based soft finger design compatible with multi-fingered robotic grippers.

Proprioceptive State Estimation for Amphibious Tactile Sensing

Dec 15, 2023

Abstract:This paper presents a novel vision-based proprioception approach for a soft robotic finger capable of estimating and reconstructing tactile interactions in terrestrial and aquatic environments. The key to this system lies in the finger's unique metamaterial structure, which facilitates omni-directional passive adaptation during grasping, protecting delicate objects across diverse scenarios. A compact in-finger camera captures high-framerate images of the finger's deformation during contact, extracting crucial tactile data in real time. We present a volumetric discretized model of the soft finger and use the geometric constraints captured by the camera to find the optimal estimate of the deformed shape. The approach is benchmarked against a motion-tracking system with sparse markers and a haptic device with dense measurements. Both benchmarks show state-of-the-art accuracy, with a median error of 1.96 mm for overall body deformation, corresponding to 2.1% of the finger's length. More importantly, the state estimation is robust in both on-land and underwater environments, as we demonstrate with underwater object shape sensing. This combination of passive adaptation and real-time tactile sensing paves the way for amphibious robotic grasping applications.

Active Surface with Passive Omni-Directional Adaptation of Soft Polyhedral Fingers for In-Hand Manipulation

Nov 25, 2023

Abstract:Track systems effectively distribute loads, augmenting traction and maneuverability on unstable terrain by leveraging their expansive contact areas. This tracked locomotion capability also aids in-hand manipulation of both regular and irregular objects. In this study, we present the design of a soft robotic finger with an active surface on an omni-adaptive network structure, which can be easily installed on existing grippers and achieves stability and dexterity for in-hand manipulation. The system's active surfaces first transfer the object from the less compliant fingertip segment to the more adaptable middle segment of the finger. Despite the omni-directional deformation of the finger, in-hand manipulation can still be executed with controlled active surfaces. We characterized the soft finger's stiffness distribution and used simplified models to assess the feasibility of repositioning and reorienting a grasped object. A set of in-hand manipulation experiments was performed with the proposed fingers, demonstrating the dexterity and robustness of the strategy.

Overconstrained Robotic Limb with Energy-Efficient, Omni-directional Locomotion

Oct 15, 2023

Abstract:This paper studies the design, modeling, and control of a novel quadruped, featuring overconstrained robotic limbs employing the Bennett linkage for motion and power transmission. The modular limb design allows the robot to morph into reptile- or mammal-inspired forms. In contrast to the prevailing focus on planar limbs, this research delves into the classical overconstrained linkages, which have strong theoretical foundations in advanced kinematics but limited engineering applications. The study showcases the morphological superiority of overconstrained robotic limbs that can transform into planar or spherical limbs, exemplifying the Bennett linkage. By conducting kinematic and dynamic modeling, we apply model predictive control to simulate a range of locomotion tasks, revealing that overconstrained limbs outperform planar designs in omni-directional tasks like forward trotting, lateral trotting, and turning on the spot when considering foothold distances. These findings highlight the biological distinctions in limb design between reptiles and mammals and represent the first documented instance of overconstrained robotic limbs outperforming planar designs in dynamic locomotion.
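The Bennett linkage referenced above is a spatial 4R loop whose mobility depends on classical geometric conditions: opposite links share the same length and twist angle (lengths a, b, a, b and twists alpha, beta, alpha, beta), joint offsets are zero, and a / sin(alpha) = b / sin(beta). The sketch below checks these conditions for a candidate limb geometry; the function names are hypothetical, zero joint offsets are assumed rather than checked, and the planar case with zero twists is treated as degenerate and rejected.

```python
import math

def bennett_partner_length(a: float, alpha: float, beta: float) -> float:
    """Length b satisfying the Bennett condition a / sin(alpha) = b / sin(beta)."""
    return a * math.sin(beta) / math.sin(alpha)

def is_bennett(lengths, twists, rel_tol: float = 1e-9) -> bool:
    """Check Bennett conditions for link lengths (a1, b1, a2, b2) and twists in radians.

    Joint offsets are assumed to be zero and are not checked here.
    """
    a1, b1, a2, b2 = lengths
    al1, be1, al2, be2 = twists
    # Opposite links must share the same length and twist angle.
    if not all(math.isclose(x, y, rel_tol=rel_tol)
               for x, y in ((a1, a2), (b1, b2), (al1, al2), (be1, be2))):
        return False
    # Reject the degenerate planar case where the twists vanish.
    if abs(math.sin(al1)) < 1e-12 or abs(math.sin(be1)) < 1e-12:
        return False
    # Bennett ratio, cross-multiplied to avoid dividing by sin().
    return math.isclose(a1 * math.sin(be1), b1 * math.sin(al1), rel_tol=rel_tol)

# Hypothetical geometry: a = 100 mm, alpha = 90 deg, beta = 30 deg gives b = 50 mm.
b = bennett_partner_length(100.0, math.pi / 2, math.pi / 6)
print(round(b, 6), is_bennett((100.0, b, 100.0, b), (math.pi / 2, math.pi / 6) * 2))
```

Fixing one link length and both twists determines the remaining length, so a design search over Bennett limbs can treat b as derived rather than free.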
