Ziqian Wang

Self-Supervised Path Planning in Unstructured Environments via Global-Guided Differentiable Hard Constraint Projection

Jan 27, 2026

Abstract: Deploying deep learning agents for autonomous navigation in unstructured environments faces critical challenges regarding safety, data scarcity, and limited computational resources. Traditional solvers often suffer from high latency, while emerging learning-based approaches struggle to ensure deterministic feasibility. To bridge the gap from embodied to embedded intelligence, we propose a self-supervised framework incorporating a differentiable hard constraint projection layer for runtime assurance. To mitigate data scarcity, we construct a Global-Guided Artificial Potential Field (G-APF), which provides dense supervision signals without manual labeling. To enforce actuator limitations and geometric constraints efficiently, we employ an adaptive neural projection layer, which iteratively rectifies the coarse network output onto the feasible manifold. Extensive benchmarks on a test set of 20,000 scenarios demonstrate an 88.75% success rate, substantiating the enhanced operational safety. Closed-loop experiments in CARLA further validate the physical realizability of the planned paths under dynamic constraints. Furthermore, deployment verification on an NVIDIA Jetson Orin NX confirms an inference latency of 94 ms, showing real-time feasibility on resource-constrained embedded hardware. This framework offers a generalized paradigm for embedding physical laws into neural architectures, providing a viable direction for solving constrained optimization in mechatronics. Source code is available at: https://github.com/wzq-13/SSHC.git.
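
The idea of iteratively rectifying a coarse output onto a feasible set can be illustrated with a generic alternating projection. This is only a sketch under stated assumptions, not the paper's projection layer: the workspace box, the single circular obstacle, and the names `project_point` and `project_path` are all hypothetical, and the abstract does not specify the actual constraints or update rule.

```python
import math

def project_point(p, lo, hi, center, radius):
    """Project one 2D waypoint into the box [lo, hi]^2 and out of a disc obstacle.

    Assumed constraint set for illustration only.
    """
    # Box constraint: clamp each coordinate.
    x = min(max(p[0], lo), hi)
    y = min(max(p[1], lo), hi)
    # Obstacle constraint: push points inside the disc to its boundary.
    dx, dy = x - center[0], y - center[1]
    d = math.hypot(dx, dy)
    if d < radius:
        if d == 0.0:
            # Arbitrary escape direction when the point sits on the center.
            dx, dy, d = 1.0, 0.0, 1.0
        x = center[0] + dx / d * radius
        y = center[1] + dy / d * radius
    return (x, y)

def project_path(path, lo, hi, center, radius, iters=10):
    """Repeat the per-waypoint projection a fixed number of times.

    Alternating projections like this are a heuristic; convergence to a
    feasible point is not guaranteed for non-convex sets.
    """
    for _ in range(iters):
        path = [project_point(p, lo, hi, center, radius) for p in path]
    return path

if __name__ == "__main__":
    coarse = [(0.1, 0.0), (5.0, 5.0), (12.0, -3.0)]
    print(project_path(coarse, -10.0, 10.0, (0.0, 0.0), 1.0))
```

Clamping and radial rescaling are differentiable almost everywhere, so the same operations written in an autodiff framework could pass gradients back to the network that produced the coarse path.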

S$^2$Voice: Style-Aware Autoregressive Modeling with Enhanced Conditioning for Singing Style Conversion

Jan 20, 2026

Abstract: We present S$^2$Voice, the winning system of the Singing Voice Conversion Challenge (SVCC) 2025 for both the in-domain and zero-shot singing style conversion tracks. Built on the strong two-stage Vevo baseline, S$^2$Voice advances style control and robustness through several contributions. First, we integrate style embeddings into the autoregressive large language model (AR LLM) via FiLM-style layer-norm conditioning and style-aware cross-attention for enhanced fine-grained style modeling. Second, we introduce a global speaker embedding into the flow-matching transformer to improve timbre similarity. Third, we curate a large, high-quality singing corpus via an automated pipeline for web harvesting, vocal separation, and transcript refinement. Finally, we employ a multi-stage training strategy combining supervised fine-tuning (SFT) and direct preference optimization (DPO). Subjective listening tests confirm our system's superior performance: leading in style similarity and singer similarity for Task 1, and across naturalness, style similarity, and singer similarity for Task 2. Ablation studies demonstrate the effectiveness of our contributions in enhancing style fidelity, timbre preservation, and generalization. Audio samples are available at https://honee-w.github.io/SVC-Challenge-Demo/.
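
FiLM-style layer-norm conditioning generally means that the scale and shift applied after normalization are predicted from a conditioning vector. The following minimal sketch shows that general pattern only; the function names, the `1 + gamma` parameterization, and the weight shapes are assumptions for illustration, not details taken from S$^2$Voice.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a feature vector to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def film_layer_norm(x, style, W_gamma, W_beta):
    """Layer norm whose scale and shift are linear functions of a style embedding.

    W_gamma and W_beta are hypothetical learned projections; with both set
    to zero the layer reduces to plain layer normalization.
    """
    gamma = [1.0 + g for g in matvec(W_gamma, style)]
    beta = matvec(W_beta, style)
    return [g * h + b for g, h, b in zip(gamma, layer_norm(x), beta)]

if __name__ == "__main__":
    hidden = [1.0, 2.0, 3.0]
    style = [1.0, 0.0]
    zeros = [[0.0, 0.0] for _ in hidden]
    shift = [[0.5, 0.0] for _ in hidden]
    print(film_layer_norm(hidden, style, zeros, shift))
```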

UniFlow: Unifying Speech Front-End Tasks via Continuous Generative Modeling

Aug 11, 2025

Abstract: Generative modeling has recently achieved remarkable success across image, video, and audio domains, demonstrating powerful capabilities for unified representation learning. Yet speech front-end tasks such as speech enhancement (SE), target speaker extraction (TSE), acoustic echo cancellation (AEC), and language-queried source separation (LASS) remain largely tackled by disparate, task-specific solutions. This fragmentation leads to redundant engineering effort, inconsistent performance, and limited extensibility. To address this gap, we introduce UniFlow, a unified framework that employs continuous generative modeling to tackle diverse speech front-end tasks in a shared latent space. Specifically, UniFlow utilizes a waveform variational autoencoder (VAE) to learn a compact latent representation of raw audio, coupled with a Diffusion Transformer (DiT) that predicts latent updates. To differentiate between speech processing tasks during training, learnable condition embeddings indexed by a task ID are employed to enable maximal parameter sharing while preserving task-specific adaptability. To balance model performance and computational efficiency, we investigate and compare three generative objectives: denoising diffusion, flow matching, and mean flow within the latent domain. We validate UniFlow on multiple public benchmarks, demonstrating consistent gains over state-of-the-art baselines. UniFlow's unified latent formulation and conditional design make it readily extensible to new tasks, providing an integrated foundation for building and scaling generative speech processing pipelines. To foster future research, we will open-source our codebase.

EchoFree: Towards Ultra Lightweight and Efficient Neural Acoustic Echo Cancellation

Aug 08, 2025

Abstract: In recent years, neural networks (NNs) have been widely applied in acoustic echo cancellation (AEC). However, existing approaches struggle to meet real-world low-latency and computational requirements while maintaining performance. To address this challenge, we propose EchoFree, an ultra-lightweight neural AEC framework that combines linear filtering with a neural post-filter. Specifically, we design a neural post-filter operating on Bark-scale spectral features. Furthermore, we introduce a two-stage optimization strategy utilizing self-supervised learning (SSL) models to improve model performance. We evaluate our method on the blind test set of the ICASSP 2023 AEC Challenge. The results demonstrate that our model, with only 278K parameters and a computational complexity of 30 MMACs, outperforms existing low-complexity AEC models and achieves performance comparable to that of the state-of-the-art lightweight model DeepVQE-S. The audio examples are available.

FlowSE: Efficient and High-Quality Speech Enhancement via Flow Matching

May 26, 2025

Abstract: Generative models have excelled in audio tasks using approaches such as language models, diffusion, and flow matching. However, existing generative approaches for speech enhancement (SE) face notable challenges: language model-based methods suffer from quantization loss, leading to compromised speaker similarity and intelligibility, while diffusion models require complex training and high inference latency. To address these challenges, we propose FlowSE, a flow-matching-based model for SE. Flow matching learns a continuous transformation between noisy and clean speech distributions in a single pass, significantly reducing inference latency while maintaining high-quality reconstruction. Specifically, FlowSE trains on noisy mel spectrograms and optional character sequences, optimizing a conditional flow matching loss with ground-truth mel spectrograms as supervision. It implicitly learns speech's temporal-spectral structure and text-speech alignment. During inference, FlowSE can operate with or without textual information, achieving impressive results in both scenarios, with further improvements when transcripts are available. Extensive experiments demonstrate that FlowSE significantly outperforms state-of-the-art generative methods, establishing a new paradigm for generative-based SE and demonstrating the potential of flow matching to advance the field. Our code, pre-trained checkpoints, and audio samples are available.
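
A conditional flow matching loss is commonly built from a straight-line path between a source sample and a target sample, with the network regressing the constant velocity along that path. The sketch below shows this common formulation on plain lists of numbers. It is an illustration under assumptions, not FlowSE's training code: the choice of source sample `x0`, the linear path, the Euler sampler, and every function name here are hypothetical.

```python
def cfm_training_pair(x0, x1, t):
    """Build the interpolated point and its regression target.

    x0: source sample (assumed starting point), x1: clean target, t in [0, 1].
    Linear path: x_t = (1 - t) * x0 + t * x1, target velocity: x1 - x0.
    """
    x_t = [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]
    target_v = [b - a for a, b in zip(x0, x1)]
    return x_t, target_v

def cfm_loss(predict_velocity, x0, x1, t):
    """Mean squared error between predicted and target velocity at time t."""
    x_t, target_v = cfm_training_pair(x0, x1, t)
    pred = predict_velocity(x_t, t)
    return sum((p - q) ** 2 for p, q in zip(pred, target_v)) / len(target_v)

def euler_sample(predict_velocity, x0, steps=4):
    """Integrate the learned velocity field from t=0 to t=1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        v = predict_velocity(x, i * dt)
        x = [a + dt * b for a, b in zip(x, v)]
    return x

if __name__ == "__main__":
    x0, x1 = [0.0, 1.0], [1.0, 3.0]
    # Stand-in for a trained network: returns the exact velocity for this pair.
    oracle = lambda x_t, t: [b - a for a, b in zip(x0, x1)]
    print(cfm_loss(oracle, x0, x1, 0.5))
    print(euler_sample(oracle, x0))
```

With a well-fit velocity field on a straight path, few integration steps are needed at inference, which is the usual argument for lower latency relative to many-step diffusion sampling.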

U-SAM: An audio language Model for Unified Speech, Audio, and Music Understanding

May 20, 2025

Abstract: The text generation paradigm for audio tasks has opened new possibilities for unified audio understanding. However, existing models face significant challenges in achieving a comprehensive understanding across diverse audio types, such as speech, general audio events, and music. Furthermore, their exclusive reliance on cross-entropy loss for alignment often falls short, as it treats all tokens equally and fails to account for redundant audio features, leading to weaker cross-modal alignment. To deal with the above challenges, this paper introduces U-SAM, an advanced audio language model that integrates specialized encoders for speech, audio, and music with a pre-trained large language model (LLM). U-SAM employs a Mixture of Experts (MoE) projector for task-aware feature fusion, dynamically routing and integrating the domain-specific encoder outputs. Additionally, U-SAM incorporates a Semantic-Aware Contrastive Loss Module, which explicitly identifies redundant audio features under language supervision and rectifies their semantic and spectral representations to enhance cross-modal alignment. Extensive experiments demonstrate that U-SAM consistently outperforms both specialized models and existing audio language models across multiple benchmarks. Moreover, it exhibits emergent capabilities on unseen tasks, showcasing its generalization potential. Code is available (https://github.com/Honee-W/U-SAM/).
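
Routing and integrating several encoder outputs is often done with a softmax gate that weights each branch before summing. The sketch below shows that generic soft-gating pattern for three encoder feature vectors. It is an assumption-laden illustration, not U-SAM's projector: the gate logits would normally come from a learned router, and the names `softmax` and `moe_fuse` are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_fuse(encoder_feats, gate_logits):
    """Fuse per-encoder feature vectors with softmax gate weights.

    encoder_feats: one equal-length feature vector per encoder
    (e.g. speech, audio, music). gate_logits: one score per encoder,
    assumed to be produced by a router network not shown here.
    """
    weights = softmax(gate_logits)
    dim = len(encoder_feats[0])
    return [
        sum(w * feats[d] for w, feats in zip(weights, encoder_feats))
        for d in range(dim)
    ]

if __name__ == "__main__":
    feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    print(moe_fuse(feats, [0.0, 0.0, 0.0]))   # equal weights: plain average
    print(moe_fuse(feats, [10.0, 0.0, 0.0]))  # gate favors the first encoder
```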

Causally-informed Deep Learning towards Explainable and Generalizable Outcomes Prediction in Critical Care

Feb 04, 2025

Abstract: Recent advances in deep learning (DL) have prompted the development of high-performing early warning score (EWS) systems, predicting clinical deteriorations such as acute kidney injury, acute myocardial infarction, or circulatory failure. DL models have proven to be powerful tools for various tasks but come at the cost of poor interpretability and limited generalizability, hindering their clinical application. To develop a practical EWS system applicable to various outcomes, we propose a causally-informed explainable early prediction model, which leverages causal discovery to identify the underlying causal relationships of prediction and thus has two distinct advantages: it provides an explicit interpretation of the prediction, and it maintains decent performance when applied to unfamiliar environments. Benefiting from these features, our approach achieves superior accuracy for 6 different critical deteriorations and better generalizability across different patient groups, compared to various baseline algorithms. In addition, we provide explicit causal pathways to serve as references for assisting clinical diagnosis and potential interventions. The proposed approach enhances the practical application of deep learning in various medical scenarios.

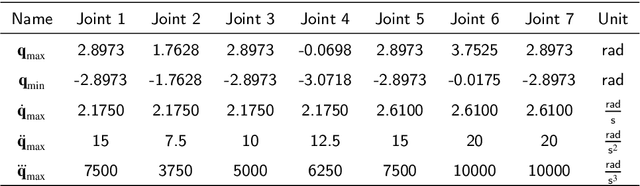

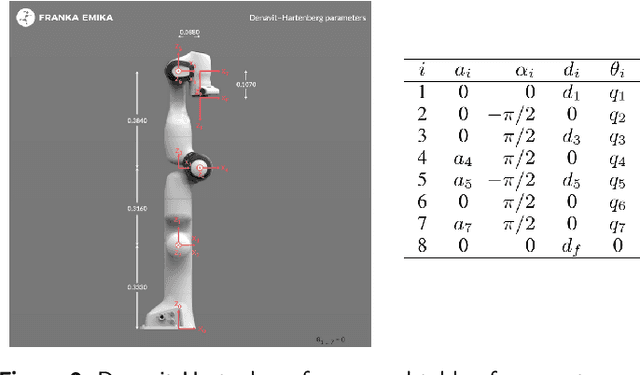

Dynamic Programming-Based Redundancy Resolution for Path Planning of Redundant Manipulators Considering Breakpoints

Nov 26, 2024

Abstract: This paper proposes a redundancy resolution algorithm for a redundant manipulator based on dynamic programming. This algorithm can compute the desired joint angles at each point on a pre-planned discrete path in Cartesian space, while ensuring that the angles, velocities, and accelerations of each joint do not exceed the manipulator's constraints. We obtain the analytical solution to the inverse kinematics problem of the manipulator using a parameterization method, transforming the redundancy resolution problem into an optimization problem of determining the parameters at each path point. The constraints on joint velocity and acceleration serve as constraints for the optimization problem. Then all feasible inverse kinematic solutions for each pose under the joint angle constraints of the manipulator are obtained through parameterization methods, and the globally optimal solution to this problem is obtained through the dynamic programming algorithm. On the other hand, if a feasible joint-space path satisfying the constraints does not exist, the proposed algorithm can compute the minimum number of breakpoints required for the path and partition the path with as few breakpoints as possible to facilitate the manipulator's operation along the path. The algorithm can also determine the optimal selection of breakpoints to minimize the global cost function, rather than simply interrupting when the manipulator is unable to continue operating. The proposed algorithm is tested using a manipulator produced by a certain manufacturer, demonstrating the effectiveness of the algorithm.
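
The core dynamic programming step, choosing one inverse kinematic solution per path point so that consecutive choices respect a joint-step limit and the total cost is minimal, can be sketched as a shortest-path recursion over stages. This is a simplified illustration under assumptions, not the paper's algorithm: the candidate solutions are given as plain tuples, a single per-step joint displacement bound `max_step` stands in for the velocity and acceleration constraints, the cost is the sum of squared joint displacements, and breakpoint handling is omitted.

```python
import math

def resolve_redundancy(candidates, max_step):
    """Pick one joint configuration per path point by dynamic programming.

    candidates[i]: list of feasible joint vectors (tuples) at path point i.
    max_step: assumed bound on per-joint change between consecutive points.
    Returns (total_cost, chosen_indices), or None if no feasible chain exists.
    """
    n = len(candidates)
    cost = [[math.inf] * len(c) for c in candidates]
    prev = [[-1] * len(c) for c in candidates]
    for j in range(len(candidates[0])):
        cost[0][j] = 0.0
    for i in range(1, n):
        for j, q in enumerate(candidates[i]):
            for k, p in enumerate(candidates[i - 1]):
                if cost[i - 1][k] == math.inf:
                    continue  # predecessor is unreachable
                if max(abs(a - b) for a, b in zip(q, p)) > max_step:
                    continue  # transition violates the step limit
                c = cost[i - 1][k] + sum((a - b) ** 2 for a, b in zip(q, p))
                if c < cost[i][j]:
                    cost[i][j] = c
                    prev[i][j] = k
    best = min(range(len(cost[-1])), key=lambda j: cost[-1][j])
    if cost[-1][best] == math.inf:
        return None
    # Backtrack from the best final state to recover the chosen indices.
    chosen = [best]
    for i in range(n - 1, 0, -1):
        chosen.append(prev[i][chosen[-1]])
    chosen.reverse()
    return cost[-1][best], chosen

if __name__ == "__main__":
    # Hypothetical single-joint candidates at three path points.
    cands = [[(0.0,), (1.0,)], [(0.2,), (1.1,)], [(0.3,), (2.0,)]]
    print(resolve_redundancy(cands, max_step=0.5))
```

A `None` result corresponds to the case the abstract describes where no joint-space path satisfies the constraints, which is where a breakpoint search would take over.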

Dynamic Programming-Based Offline Redundancy Resolution of Redundant Manipulators Along Prescribed Paths with Real-Time Adjustment

Nov 26, 2024

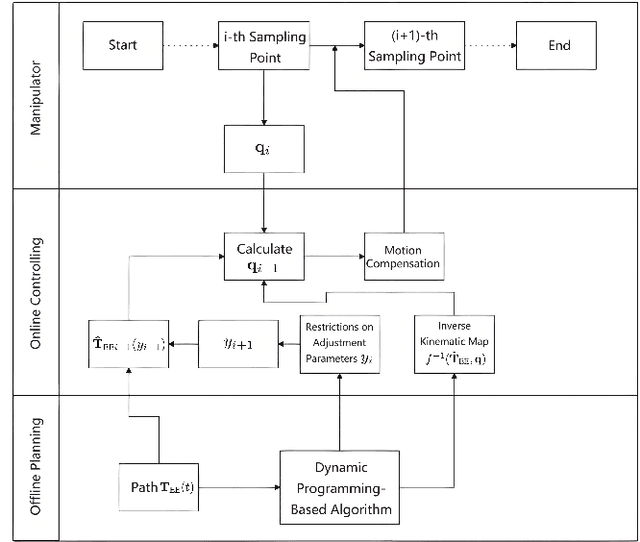

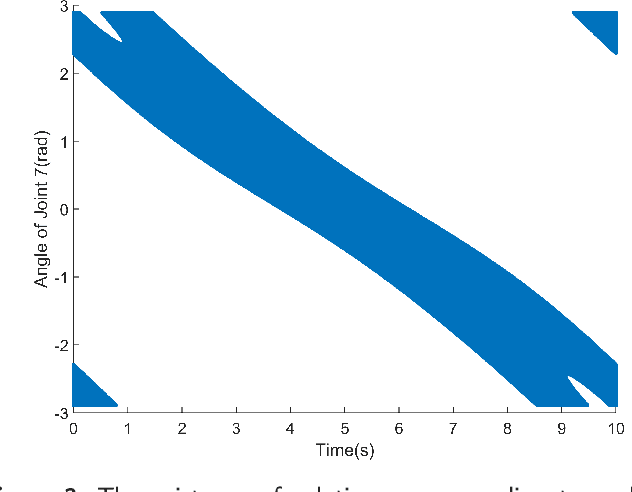

Abstract: Traditional offline redundancy resolution of trajectories for redundant manipulators involves computing inverse kinematic solutions for Cartesian space paths, constraining the manipulator to a fixed path without real-time adjustments. Online redundancy resolution can achieve real-time adjustment of paths, but it cannot consider subsequent path points, leading to the possibility of the manipulator being forced to stop mid-motion due to joint constraints. To address this, this paper introduces a dynamic programming-based offline redundancy resolution for redundant manipulators along prescribed paths with real-time adjustment. The proposed method allows the manipulator to move along a prescribed path while implementing real-time adjustment along the normal to the path. Using dynamic programming, the proposed approach computes a global maximum for the variation of adjustment coefficients. As long as the coefficient variation between adjacent sampling path points does not exceed this limit, the algorithm provides the next path point's joint angles based on the current joint angles, enabling the end-effector to achieve the adjusted Cartesian pose. The main innovation of this paper lies in augmenting traditional offline optimal planning with real-time adjustment capabilities, achieving a fusion of offline planning and online planning.

DualSep: A Light-weight dual-encoder convolutional recurrent network for real-time in-car speech separation

Sep 13, 2024

Abstract: Advancements in deep learning and voice-activated technologies have driven the development of human-vehicle interaction. Distributed microphone arrays are widely used in in-car scenarios because they can accurately capture the voices of passengers from different speech zones. However, the increase in the number of audio channels, coupled with the limited computational resources and low latency requirements of in-car systems, presents challenges for in-car multi-channel speech separation. To mitigate these problems, we propose a lightweight framework that cascades digital signal processing (DSP) and neural networks (NN). We utilize fixed beamforming (BF) to reduce computational costs and independent vector analysis (IVA) to provide a spatial prior. We employ dual encoders for dual-branch modeling, with a spatial encoder capturing spatial cues and a spectral encoder preserving spectral information, facilitating spatial-spectral fusion. Our proposed system supports both streaming and non-streaming modes. Experimental results demonstrate the superiority of the proposed system across various metrics. With only 0.83M parameters and a real-time factor (RTF) of 0.39 on an Intel Core i7 (2.6GHz) CPU, it effectively separates speech into distinct speech zones. Our demos are available at https://honee-w.github.io/DualSep/.
