Zhenyu Xu

AnalogSeeker: An Open-source Foundation Language Model for Analog Circuit Design

Aug 14, 2025

Abstract:In this paper, we propose AnalogSeeker, an effort toward an open-source foundation language model for analog circuit design, with the aim of integrating domain knowledge and providing design assistance. To overcome the scarcity of data in this field, we employ a corpus collection strategy based on the domain knowledge framework of analog circuits. High-quality, accessible textbooks across relevant subfields are systematically curated and cleaned into a textual domain corpus. To address the complexity of analog circuit knowledge, we introduce a granular domain knowledge distillation method. The raw, unlabeled domain corpus is decomposed into typical, granular learning nodes, where a multi-agent framework distills implicit knowledge embedded in unstructured text into question-answer data pairs with detailed reasoning processes, yielding a fine-grained, learnable dataset for fine-tuning. To address the unexplored challenges in training analog circuit foundation models, we explore and share our training methods through both theoretical analysis and experimental validation. We finally establish a fine-tuning-centric training paradigm, customizing and implementing a neighborhood self-constrained supervised fine-tuning algorithm. This approach enhances training outcomes by constraining the perturbation magnitude between the model's output distributions before and after training. In practice, we train the Qwen2.5-32B-Instruct model to obtain AnalogSeeker, which achieves 85.04% accuracy on AMSBench-TQA, the analog circuit knowledge evaluation benchmark, a 15.67 percentage-point improvement over the original model, and is competitive with mainstream commercial models. Furthermore, AnalogSeeker also shows effectiveness in the downstream operational amplifier design task. AnalogSeeker is open-sourced at https://huggingface.co/analogllm/analogseeker for research use.
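The abstract above does not give the form of the constraint. One plausible way to write an objective that limits how far the output distribution moves during fine-tuning is a supervised loss plus a divergence penalty against the pre-training-time model. The KL divergence, the weight $\lambda$, and the symbols $\theta_0$ (initial parameters) and $\theta$ (trained parameters) are assumptions made here for illustration, not the paper's definition.

```latex
\mathcal{L}(\theta)
  = \mathcal{L}_{\mathrm{SFT}}(\theta)
  + \lambda \, D_{\mathrm{KL}}\!\left( p_{\theta_0}(\cdot \mid x) \,\middle\|\, p_{\theta}(\cdot \mid x) \right)
```

With $\lambda = 0$ this reduces to ordinary supervised fine-tuning; larger values keep the fine-tuned distribution closer to the original one.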

CodeVision: Detecting LLM-Generated Code Using 2D Token Probability Maps and Vision Models

Jan 06, 2025

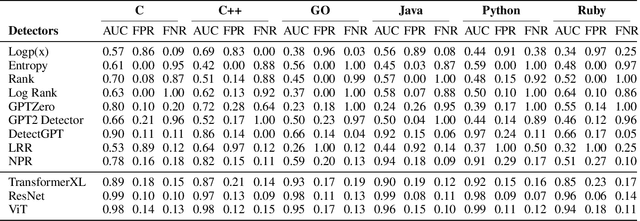

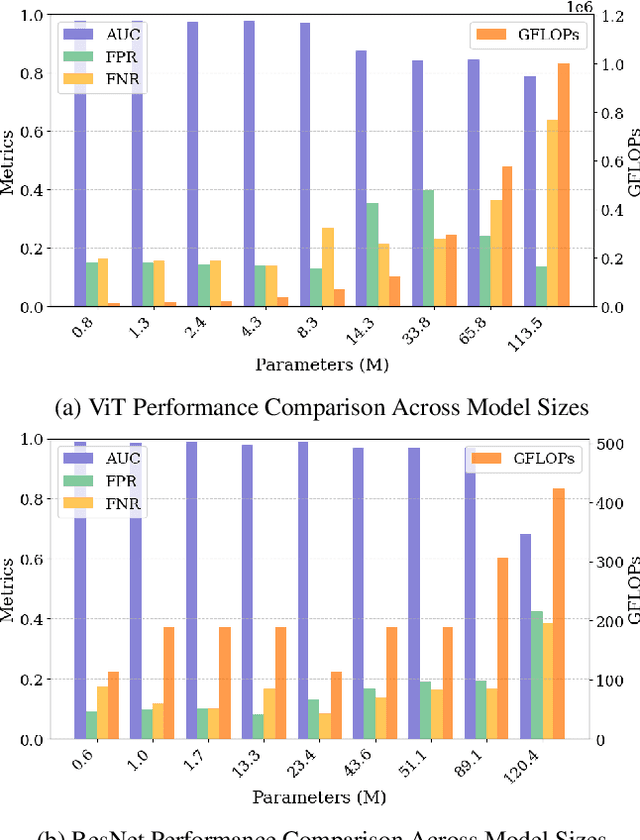

Abstract:The rise of large language models (LLMs) like ChatGPT has significantly improved automated code generation, enhancing software development efficiency. However, this introduces challenges in academia, particularly in distinguishing between human-written and LLM-generated code, which complicates issues of academic integrity. Existing detection methods, such as pre-trained models and watermarking, face limitations in adaptability and computational efficiency. In this paper, we propose a novel detection method using 2D token probability maps combined with vision models, preserving spatial code structures such as indentation and brackets. By transforming code into log probability matrices and applying vision models like Vision Transformers (ViT) and ResNet, we capture both content and structure for more accurate detection. Our method shows robustness across multiple programming languages and improves upon traditional detectors, offering a scalable and computationally efficient solution for identifying LLM-generated code.
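As a rough illustration of the 2D token probability map described above, the sketch below lays token log probabilities out on a character grid that mirrors the source layout, so indentation and line breaks survive as spatial structure. The per-character layout, the function name `build_logprob_map`, the padding value, and the sample log probabilities are all assumptions made for this example; the paper's actual construction may differ.

```python
from typing import List, Tuple


def build_logprob_map(tokens: List[Tuple[str, float]], pad: float = 0.0) -> List[List[float]]:
    """Lay token log probabilities out on a character grid that mirrors the code layout."""
    rows: List[List[float]] = [[]]
    for text, logprob in tokens:
        for ch in text:
            if ch == "\n":
                rows.append([])           # a newline starts a new row of the map
            else:
                rows[-1].append(logprob)  # each character cell carries its token's log probability
    width = max(len(row) for row in rows)
    # Pad short rows so the result is rectangular, like an image.
    return [row + [pad] * (width - len(row)) for row in rows]


# Hypothetical (token, log probability) pairs; real values would come from a language model.
tokens = [("def", -0.5), (" f", -1.2), ("():", -0.3), ("\n", -0.1),
          ("    ", -0.2), ("return", -0.4), (" 1", -2.5)]
grid = build_logprob_map(tokens)
```

The resulting rectangular matrix could then be fed to an image classifier such as a ViT or ResNet, which is the step the abstract attributes to the vision model.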

LecPrompt: A Prompt-based Approach for Logical Error Correction with CodeBERT

Oct 10, 2024

Abstract:Logical errors in programming don't raise compiler alerts, making them hard to detect. These silent errors can disrupt a program's function or cause run-time issues. Their correction requires deep insight into the program's logic, highlighting the importance of automated detection and repair. In this paper, we introduce LecPrompt, a prompt-based approach to localizing and repairing logical errors that harnesses the capabilities of CodeBERT, a transformer-based large language model trained on code. First, LecPrompt leverages a large language model to calculate perplexity and log probability metrics, pinpointing logical errors at both token and line levels. Through statistical analysis, it identifies tokens and lines that deviate significantly from the expected patterns recognized by large language models, marking them as potential error sources. Second, by framing the logical error correction challenge as a Masked Language Modeling (MLM) task, LecPrompt employs CodeBERT to autoregressively repair the identified error tokens. Finally, the soft-prompt method provides a novel solution in low-cost scenarios, ensuring that the model can be fine-tuned to the specific nuances of the logical error correction task without incurring high computational costs. To evaluate LecPrompt's performance, we created a method to introduce logical errors into correct code and applied it to QuixBugs to produce the QuixBugs-LE dataset. Our evaluations on the QuixBugs-LE dataset for both Python and Java highlight the capabilities of LecPrompt. For Python, LecPrompt achieves 74.58% top-1 token-level repair accuracy and 27.4% program-level repair accuracy. For Java, LecPrompt delivers 69.23% top-1 token-level repair accuracy and 24.7% program-level repair accuracy.
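The localization step above can be sketched as follows: compute a line's perplexity from its token log probabilities, and flag tokens whose log probability falls far below the mean. The z-score rule, the threshold of 1.5, the function names, and the sample values are assumptions made for this illustration; the abstract only says that a statistical analysis is used.

```python
import math
from statistics import mean, pstdev
from typing import List


def line_perplexity(logprobs: List[float]) -> float:
    """Perplexity of one line: exp of the negative mean token log probability."""
    return math.exp(-mean(logprobs))


def flag_outliers(logprobs: List[float], z_threshold: float = 1.5) -> List[int]:
    """Indices of tokens whose log probability lies more than z_threshold
    standard deviations below the mean (assumed rule, not the paper's)."""
    mu, sigma = mean(logprobs), pstdev(logprobs)
    if sigma == 0:
        return []
    return [i for i, lp in enumerate(logprobs) if (mu - lp) / sigma > z_threshold]


# Hypothetical token log probabilities for one line; the fourth token is unusually unlikely.
logprobs = [-0.2, -0.3, -0.1, -4.0, -0.2, -0.3]
suspects = flag_outliers(logprobs)   # indices of candidate error tokens
ppl = line_perplexity(logprobs)      # higher values suggest a more suspicious line
```

In a pipeline like the one described, the flagged positions would be the ones replaced by mask tokens before the repair step.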

Signal Watermark on Large Language Models

Oct 09, 2024

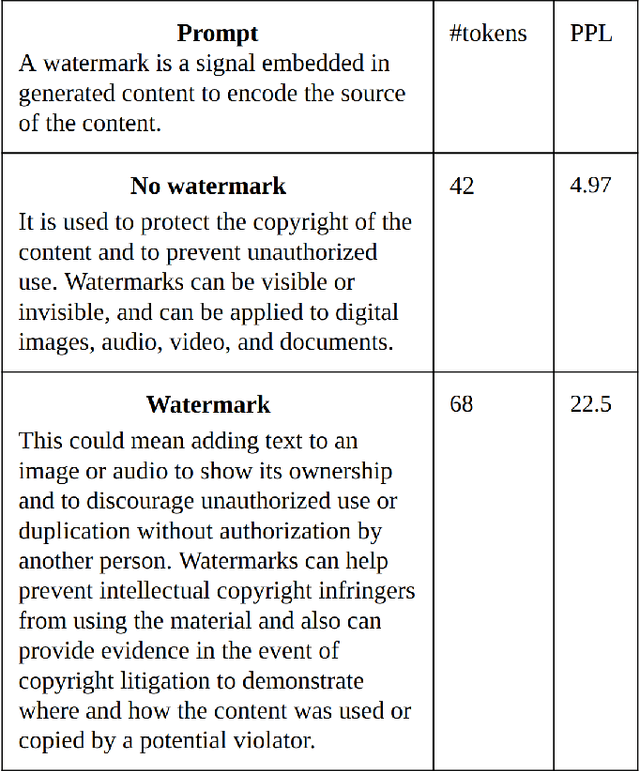

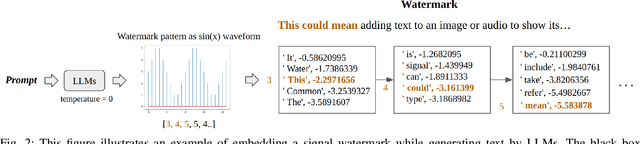

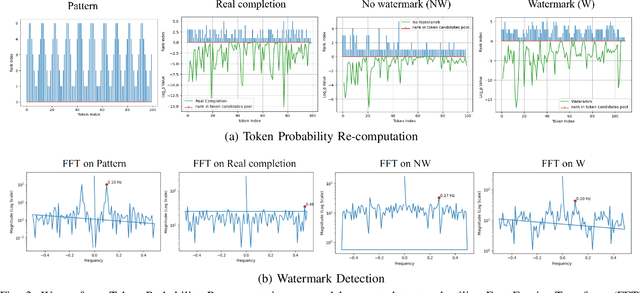

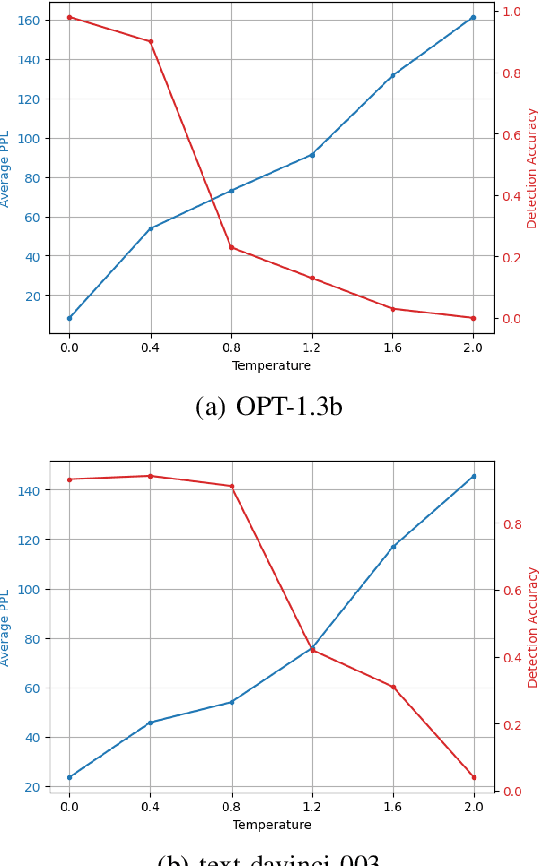

Abstract:As Large Language Models (LLMs) become increasingly sophisticated, they raise significant security concerns, including the creation of fake news and academic misuse. Most detectors for identifying model-generated text are limited by their reliance on variance in perplexity and burstiness, and they require substantial computational resources. In this paper, we propose a watermarking method that embeds a specific watermark into the text during its generation by LLMs, based on a pre-defined signal pattern. This technique not only ensures the watermark's invisibility to humans but also maintains the quality and grammatical integrity of model-generated text. We utilize LLMs and the Fast Fourier Transform (FFT) for token probability computation and detection of the signal watermark. Applying signal processing principles to text generation by LLMs allows for subtle yet effective embedding of watermarks that do not compromise the quality or coherence of the generated text. Our method has been empirically validated across multiple LLMs, consistently maintaining high detection accuracy, even with variations in temperature settings during text generation. In the experiment distinguishing between human-written and watermarked text, our method achieved an AUROC score of 0.97, significantly outperforming existing methods like GPTZero, which scored 0.64. The watermark's resilience to various attack scenarios further confirms its robustness, addressing significant challenges in model-generated text authentication.
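The detection idea above, finding a pre-defined periodic signal in a sequence derived from token probabilities, can be illustrated with a plain discrete Fourier transform. The sketch assumes the watermark shows up as a sinusoid in a per-token score sequence; the score values, the disturbance term, and the function names are hypothetical, and a naive O(N^2) DFT stands in for an FFT library call.

```python
import cmath
import math
from typing import List


def dft_magnitudes(signal: List[float]) -> List[float]:
    """Magnitudes of DFT bins 0..N/2, computed naively in O(N^2)."""
    n = len(signal)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
            for k in range(n // 2 + 1)]


def dominant_bin(signal: List[float]) -> int:
    """Index of the strongest non-DC frequency bin after removing the mean."""
    avg = sum(signal) / len(signal)
    mags = dft_magnitudes([x - avg for x in signal])
    return max(range(1, len(mags)), key=mags.__getitem__)


# Hypothetical per-token scores: 8 sinusoid cycles over 64 tokens,
# plus a small deterministic disturbance standing in for noise.
n, cycles = 64, 8
scores = [0.5 + 0.3 * math.sin(2 * math.pi * cycles * t / n) + 0.05 * ((t * 7) % 5 - 2)
          for t in range(n)]
peak = dominant_bin(scores)
```

A detector built this way would compare the magnitude at the expected bin against a threshold; text without the embedded signal should show no pronounced peak there.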

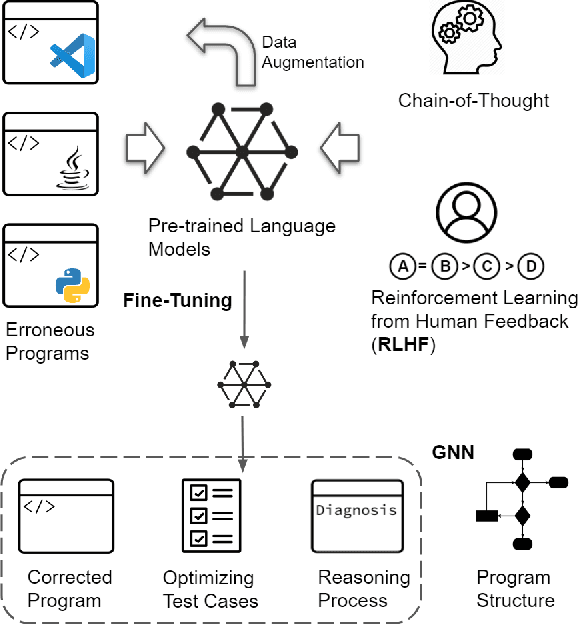

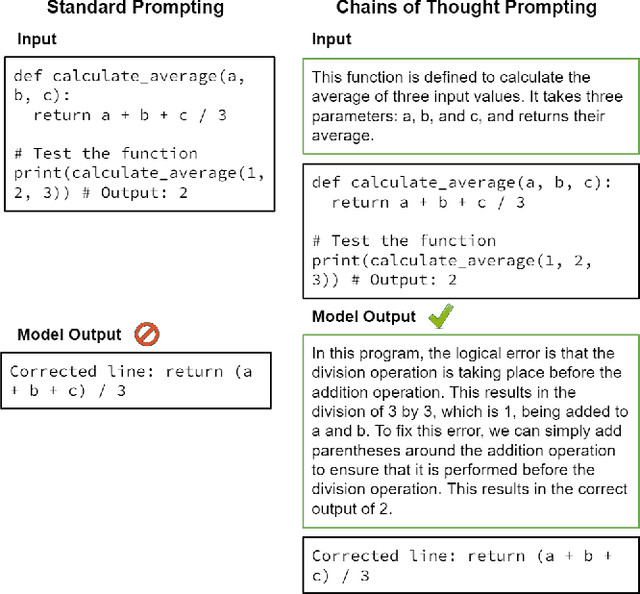

Multi-Task Program Error Repair and Explanatory Diagnosis

Oct 09, 2024

Abstract:Program errors can occur in any type of programming and can manifest in a variety of ways, such as unexpected output, crashes, or performance issues. Moreover, program error diagnoses are often too abstract or technical for developers to understand, especially beginners. This paper presents a novel machine-learning approach for Multi-task Program Error Repair and Explanatory Diagnosis (mPRED). A pre-trained language model is used to encode the source code, and a downstream model is specifically designed to identify and repair errors. Programs and test cases are augmented and optimized from several perspectives. Additionally, our approach incorporates a "chain of thoughts" method, which enables the models to produce intermediate reasoning explanations before providing the final correction. To aid in visualizing and analyzing the program structure, we use a graph neural network for program structure visualization. Overall, our approach offers a promising way to repair program errors across different programming languages and to provide helpful explanations to programmers.

FreqMark: Frequency-Based Watermark for Sentence-Level Detection of LLM-Generated Text

Oct 09, 2024

Abstract:The increasing use of Large Language Models (LLMs) for generating highly coherent and contextually relevant text introduces new risks, including misuse for unethical purposes such as disinformation or academic dishonesty. To address these challenges, we propose FreqMark, a novel watermarking technique that embeds detectable frequency-based watermarks in LLM-generated text during the token sampling process. The method leverages periodic signals to guide token selection, creating a watermark that can be detected with Short-Time Fourier Transform (STFT) analysis. This approach enables accurate identification of LLM-generated content, even in mixed-text scenarios with both human-authored and LLM-generated segments. Our experiments demonstrate the robustness and precision of FreqMark, showing strong detection capabilities against various attack scenarios such as paraphrasing and token substitution. Results show that FreqMark achieves an AUC improvement of up to 0.98, significantly outperforming existing detection methods.
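To show why a short-time transform helps with mixed text, the sketch below measures the strength of one frequency inside sliding windows, so a watermarked segment can be located within a longer sequence. It is a single-bin simplification of an STFT; the flat unwatermarked scores, the period of 8 tokens, the window size, and the 0.1 threshold are all assumptions chosen to keep the example small.

```python
import cmath
import math
from typing import List


def stft_energy(signal: List[float], window: int, hop: int, cycles_per_window: int) -> List[float]:
    """Normalized magnitude of one DFT bin in each sliding window."""
    energies = []
    for start in range(0, len(signal) - window + 1, hop):
        frame = signal[start:start + window]
        avg = sum(frame) / window
        coeff = sum((x - avg) * cmath.exp(-2j * math.pi * cycles_per_window * t / window)
                    for t, x in enumerate(frame))
        energies.append(abs(coeff) / window)
    return energies


# Hypothetical mixed sequence: 32 unwatermarked tokens (flat scores, an idealization)
# followed by 32 watermarked tokens whose scores oscillate with a period of 8 tokens.
plain = [0.5] * 32
marked = [0.5 + 0.3 * math.sin(2 * math.pi * t / 8) for t in range(32)]
energies = stft_energy(plain + marked, window=16, hop=16, cycles_per_window=2)
flags = [e > 0.1 for e in energies]  # True where the periodic signal is present
```

Only the windows covering the second half of the sequence exceed the threshold, which is the kind of segment-level localization the abstract describes for mixed human and LLM text.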

A New Method in Facial Registration in Clinics Based on Structure Light Images

May 23, 2024

Abstract:Background and Objective: In neurosurgery, fusing clinical images with depth images enriches the available information and detail, which benefits surgery. We found that existing methods frequently failed to register facial depth images. To enrich traditional imaging methods with depth information, we investigated a method for registering depth images with traditional clinical images. Methods: We used dlib, a C++ library that can be used for face recognition, to detect facial key points in the structured-light camera image and the CT image. The two key point clouds were coarsely registered with the ICP method, and fine registration was then performed, also with the ICP method. Results: The RMSE after coarse and fine registration is as low as 0.995913 mm. The method also takes less time than traditional methods. Conclusions: The new method successfully registered the facial depth image from structured-light images with CT at low error, and it is promising and efficient for clinical application in neurosurgery.
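For reference, a registration RMSE of this kind is conventionally computed over $N$ corresponding point pairs after applying the estimated rigid transform. The symbols below ($R$ for the rotation, $t$ for the translation, $p_i$ and $q_i$ for matched source and target points) are notation introduced here, not taken from the paper.

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left\lVert R\,p_i + t - q_i \right\rVert^{2}}
```

Each ICP iteration re-estimates the correspondences and then updates $R$ and $t$ to reduce this quantity.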

VisDrone-CC2020: The Vision Meets Drone Crowd Counting Challenge Results

Jul 19, 2021

Abstract:Crowd counting on the drone platform is an interesting topic in computer vision, which brings new challenges such as small object inference, background clutter and wide viewpoint. However, few algorithms focus on crowd counting in drone-captured data because comprehensive datasets are lacking. To this end, we collect a large-scale dataset and organize the Vision Meets Drone Crowd Counting Challenge (VisDrone-CC2020) in conjunction with the 16th European Conference on Computer Vision (ECCV 2020) to promote developments in the related fields. The collected dataset consists of 3,360 images, including 2,460 images for training and 900 images for testing. Specifically, we manually annotate persons with points in each video frame. In total, 14 algorithms from 15 institutes were submitted to the VisDrone-CC2020 Challenge. We provide a detailed analysis of the evaluation results and conclude the challenge. More information can be found at the website: http://www.aiskyeye.com/.

* The method description of A7, Multi-Scale Aware based SFANet (M-SFANet), is updated and missing references are added

A High-Performance, Reconfigurable, Fully Integrated Time-Domain Reflectometry Architecture Using Digital I/Os

May 01, 2021

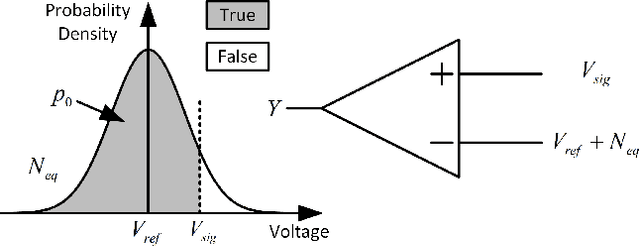

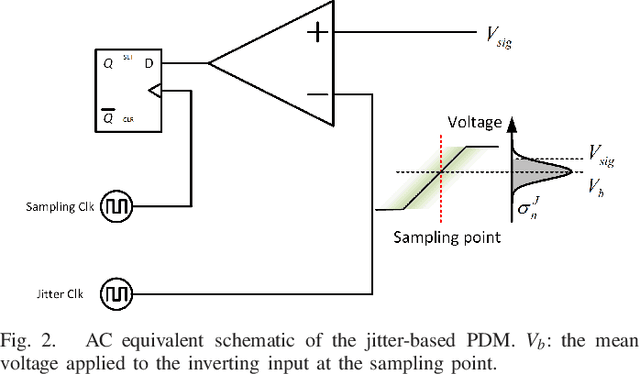

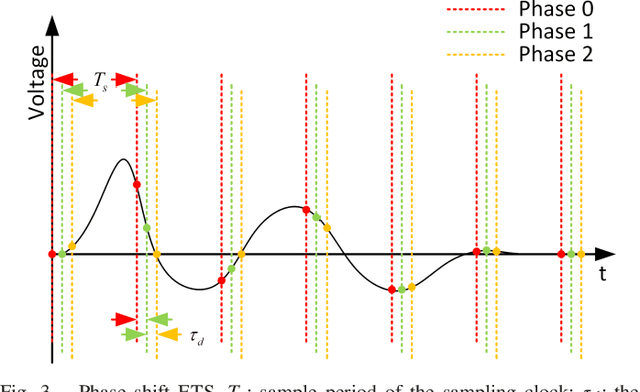

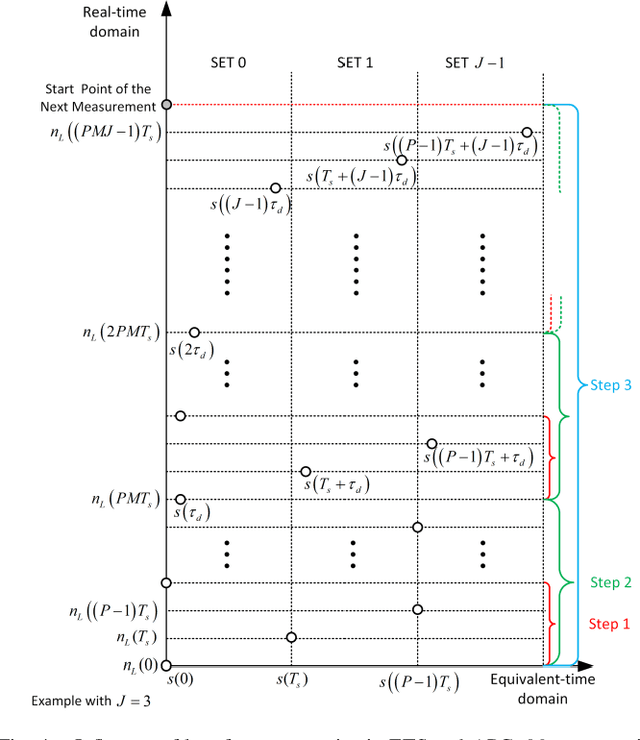

Abstract:Time-domain reflectometry (TDR) is an established means of measuring impedance inhomogeneity of a variety of waveguides, providing critical data necessary to characterize and optimize the performance of high-bandwidth computational and communication systems. However, TDR systems with both the high spatial resolution (sub-cm) and voltage resolution (sub-µV) required to evaluate high-performance waveguides are physically large and often cost-prohibitive, severely limiting their utility as testing platforms and greatly limiting their use in characterizing and troubleshooting fielded hardware. Consequently, there exists a growing technical need for an electronically simple, portable, and low-cost TDR technology. The receiver of a TDR system plays a key role in recording reflection waveforms; thus, such a receiver must have high analog bandwidth, a high sampling rate, and high voltage resolution. However, these requirements are difficult to meet using low-cost analog-to-digital converters (ADCs). This article describes a new TDR architecture, namely, jitter-based APC (JAPC), which obviates the need for external components based on an alternative concept, analog-to-probability conversion (APC), that was recently proposed. These results demonstrate that a fully reconfigurable and highly integrated TDR (iTDR) can be implemented on a field-programmable gate array (FPGA) chip without using any external circuit components. Empirical evaluation of the system was conducted using an HDMI cable as the device under test (DUT), and the resulting impedance inhomogeneity pattern (IIP) of the DUT was extracted with spatial and voltage resolutions of 5 cm and 80 µV, respectively. These results demonstrate the feasibility of using the prototypical JAPC-based iTDR for real-world waveguide characterization applications.
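As background for how a TDR trace maps to an impedance profile, the sketch below applies the standard transmission-line relations: the local impedance follows from the reflection coefficient, and the location of a discontinuity follows from the round-trip delay. It is not the JAPC receiver itself; the function names, the 50 Ω reference impedance, and the 0.7 velocity factor are assumptions chosen for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def impedance_from_reflection(gamma: float, z0: float = 50.0) -> float:
    """Load impedance implied by reflection coefficient gamma on a line of impedance z0."""
    if gamma == 1.0:
        return math.inf  # total reflection: open circuit
    return z0 * (1 + gamma) / (1 - gamma)


def distance_from_delay(round_trip_s: float, velocity_factor: float = 0.7) -> float:
    """Distance to a discontinuity, given the round-trip delay of its reflection."""
    return velocity_factor * SPEED_OF_LIGHT * round_trip_s / 2


# Hypothetical reading: 20% of the incident step is reflected 10 ns after launch.
z_load = impedance_from_reflection(0.2)   # ohms
location = distance_from_delay(10e-9)     # metres
```

The division by two accounts for the pulse travelling to the discontinuity and back, which is also why spatial resolution is tied to the receiver's timing resolution.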

* 8 pages, 8 figures

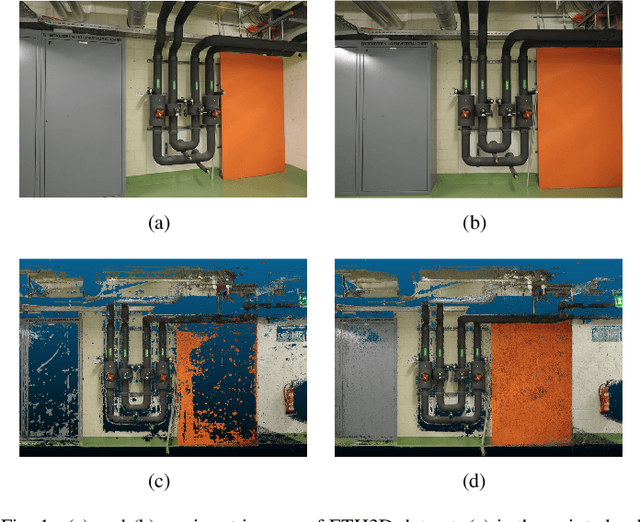

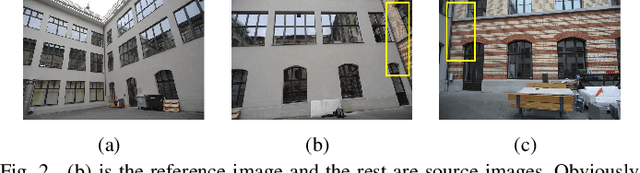

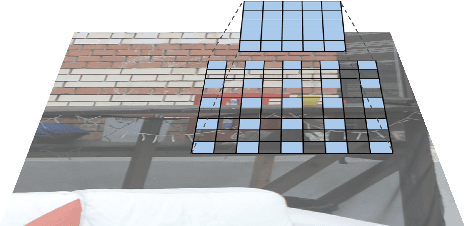

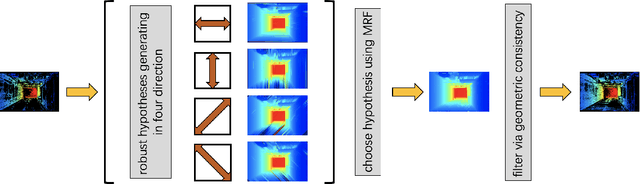

PHI-MVS: Plane Hypothesis Inference Multi-view Stereo for Large-Scale Scene Reconstruction

Apr 13, 2021

Abstract:PatchMatch-based Multi-view Stereo (MVS) algorithms have achieved great success in large-scale scene reconstruction tasks. However, reconstruction of texture-less planes often fails because similarity measures may become ineffective in these regions. Thus, a new plane hypothesis inference strategy is proposed to handle this issue. The procedure consists of two steps: first, multiple plane hypotheses are generated using filtered initial depth maps on regions that are not successfully recovered; second, depth hypotheses are selected using a Markov Random Field (MRF). The strategy can significantly improve the completeness of reconstruction results with only an acceptable increase in computing time. In addition, a new acceleration scheme similar to dilated convolution can speed up depth map estimation with only a slight influence on the reconstruction. We integrated the above ideas into a new MVS pipeline, Plane Hypothesis Inference Multi-view Stereo (PHI-MVS). PHI-MVS is validated on the ETH3D public benchmarks, where it demonstrates performance competitive with the state of the art.
