Zhengming Ding

VLMs Guided Interpretable Decision Making for Autonomous Driving

Nov 17, 2025

Abstract:Recent advancements in autonomous driving (AD) have explored the use of vision-language models (VLMs) within visual question answering (VQA) frameworks for direct driving decision-making. However, these approaches often depend on handcrafted prompts and suffer from inconsistent performance, limiting their robustness and generalization in real-world scenarios. In this work, we evaluate state-of-the-art open-source VLMs on high-level decision-making tasks using ego-view visual inputs and identify critical limitations in their ability to deliver reliable, context-aware decisions. Motivated by these observations, we propose a new approach that shifts the role of VLMs from direct decision generators to semantic enhancers. Specifically, we leverage their strong general scene understanding to enrich existing vision-based benchmarks with structured, linguistically rich scene descriptions. Building on this enriched representation, we introduce a multi-modal interactive architecture that fuses visual and linguistic features for more accurate decision-making and interpretable textual explanations. Furthermore, we design a post-hoc refinement module that utilizes VLMs to enhance prediction reliability. Extensive experiments on two autonomous driving benchmarks demonstrate that our approach achieves state-of-the-art performance, offering a promising direction for integrating VLMs into reliable and interpretable AD systems.

Diffusion Guided Adversarial State Perturbations in Reinforcement Learning

Nov 10, 2025

Abstract:Reinforcement learning (RL) systems, while achieving remarkable success across various domains, are vulnerable to adversarial attacks. This is especially a concern in vision-based environments where minor manipulations of high-dimensional image inputs can easily mislead the agent's behavior. To this end, various defenses have been proposed recently, with state-of-the-art approaches achieving robust performance even under large state perturbations. However, after closer investigation, we found that the effectiveness of the current defenses is due to a fundamental weakness of the existing $l_p$ norm-constrained attacks, which can barely alter the semantics of image input even under a relatively large perturbation budget. In this work, we propose SHIFT, a novel policy-agnostic diffusion-based state perturbation attack to go beyond this limitation. Our attack is able to generate perturbed states that are semantically different from the true states while remaining realistic and history-aligned to avoid detection. Evaluations show that our attack effectively breaks existing defenses, including the most sophisticated ones, significantly outperforming existing attacks while being more perceptually stealthy. The results highlight the vulnerability of RL agents to semantics-aware adversarial perturbations, indicating the importance of developing more robust policies.

TCR-EML: Explainable Model Layers for TCR-pMHC Prediction

Oct 05, 2025

Abstract:T cell receptor (TCR) recognition of peptide-MHC (pMHC) complexes is a central component of adaptive immunity, with implications for vaccine design, cancer immunotherapy, and autoimmune disease. While recent advances in machine learning have improved prediction of TCR-pMHC binding, the most effective approaches are black-box transformer models that cannot provide a rationale for predictions. Post-hoc explanation methods can provide insight with respect to the input but do not explicitly model biochemical mechanisms (e.g. known binding regions), as in TCR-pMHC binding. "Explain-by-design" models (i.e., with architectural components that can be examined directly after training) have been explored in other domains, but have not been used for TCR-pMHC binding. We propose explainable model layers (TCR-EML) that can be incorporated into protein-language model backbones for TCR-pMHC modeling. Our approach uses prototype layers for amino acid residue contacts drawn from known TCR-pMHC binding mechanisms, enabling high-quality explanations for predicted TCR-pMHC binding. Experiments with our proposed method on large-scale datasets demonstrate competitive predictive accuracy and generalization, and evaluation on the TCR-XAI benchmark demonstrates improved explainability compared with existing approaches.

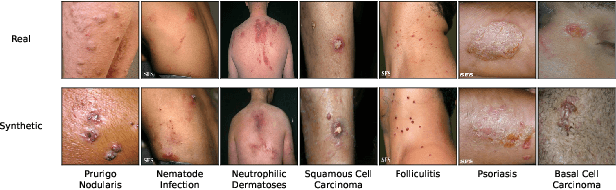

Doctor Approved: Generating Medically Accurate Skin Disease Images through AI-Expert Feedback

Jun 14, 2025

Abstract:Paucity of medical data severely limits the generalizability of diagnostic ML models, as the full spectrum of disease variability cannot be represented by a small clinical dataset. To address this, diffusion models (DMs) have been considered as a promising avenue for synthetic image generation and augmentation. However, they frequently produce medically inaccurate images, which degrades model performance. Expert domain knowledge is critical for synthesizing images that correctly encode clinical information, especially when data is scarce and quality outweighs quantity. Existing approaches for incorporating human feedback, such as reinforcement learning (RL) and Direct Preference Optimization (DPO), rely on robust reward functions or demand labor-intensive expert evaluations. Recent progress in Multimodal Large Language Models (MLLMs) reveals their strong visual reasoning capabilities, making them adept candidates as evaluators. In this work, we propose a novel framework, coined MAGIC (Medically Accurate Generation of Images through AI-Expert Collaboration), that synthesizes clinically accurate skin disease images for data augmentation. Our method creatively translates expert-defined criteria into actionable feedback for image synthesis of DMs, significantly improving clinical accuracy while reducing the direct human workload. Experiments demonstrate that our method greatly improves the clinical quality of synthesized skin disease images, with outputs aligning with dermatologist assessments. Additionally, augmenting training data with these synthesized images improves diagnostic accuracy by +9.02% on a challenging 20-condition skin disease classification task, and by +13.89% in the few-shot setting.

Exploiting Aggregation and Segregation of Representations for Domain Adaptive Human Pose Estimation

Dec 29, 2024

Abstract:Human pose estimation (HPE) has received increasing attention recently due to its wide application in motion analysis, virtual reality, healthcare, etc. However, it suffers from the lack of labeled diverse real-world datasets due to the time- and labor-intensive annotation. To cope with the label deficiency issue, one common solution is to train the HPE models with easily available synthetic datasets (source) and apply them to real-world data (target) through domain adaptation (DA). Unfortunately, prevailing domain adaptation techniques within the HPE domain remain predominantly fixated on effecting alignment and aggregation between source and target features, often sidestepping the crucial task of excluding domain-specific representations. To rectify this, we introduce a novel framework that capitalizes on both representation aggregation and segregation for domain adaptive human pose estimation. Within this framework, we address the network architecture aspect by disentangling representations into distinct domain-invariant and domain-specific components, facilitating aggregation of domain-invariant features while simultaneously segregating domain-specific ones. Moreover, we tackle the discrepancy measurement facet by delving into various keypoint relationships and applying separate aggregation or segregation mechanisms to enhance alignment. Extensive experiments on various benchmarks, e.g., Human3.6M, LSP, H3D, and FreiHand, show that our method consistently achieves state-of-the-art performance. The project is available at https://github.com/davidpengucf/EPIC.

Enhancing Skin Disease Diagnosis: Interpretable Visual Concept Discovery with SAM Empowerment

Sep 14, 2024

Abstract:Current AI-assisted skin image diagnosis has achieved dermatologist-level performance in classifying skin cancer, driven by rapid advancements in deep learning architectures. However, unlike traditional vision tasks, skin images in general present unique challenges due to the limited availability of well-annotated datasets, complex variations in conditions, and the necessity for detailed interpretations to ensure patient safety. Previous segmentation methods have sought to reduce image noise and enhance diagnostic performance, but these techniques require fine-grained, pixel-level ground truth masks for training. In contrast, with the rise of foundation models, the Segment Anything Model (SAM) has been introduced to facilitate promptable segmentation, enabling the automation of the segmentation process with simple yet effective prompts. Efforts applying SAM predominantly focus on dermatoscopy images, which present more easily identifiable lesion boundaries than clinical photos taken with smartphones. This limitation constrains the practicality of these approaches to real-world applications. To overcome the challenges posed by noisy clinical photos acquired via non-standardized protocols and to improve diagnostic accessibility, we propose a novel Cross-Attentive Fusion framework for interpretable skin lesion diagnosis. Our method leverages SAM to generate visual concepts for skin diseases using prompts, integrating local visual concepts with global image features to enhance model performance. Extensive evaluation on two skin disease datasets demonstrates our proposed method's effectiveness on lesion diagnosis and interpretability.

From Majority to Minority: A Diffusion-based Augmentation for Underrepresented Groups in Skin Lesion Analysis

Jun 26, 2024

Abstract:AI-based diagnoses have demonstrated dermatologist-level performance in classifying skin cancer. However, such systems are prone to under-performing when tested on data from minority groups that lack sufficient representation in the training sets. Although data collection and annotation offer the best means for promoting minority groups, these processes are costly and time-consuming. Prior works have suggested that data from majority groups may serve as a valuable information source to supplement the training of diagnosis tools for minority groups. In this work, we propose an effective diffusion-based augmentation framework that maximizes the use of rich information from majority groups to benefit minority groups. Using groups with different skin types as a case study, our results show that the proposed framework can generate synthetic images that improve diagnostic results for the minority groups, even when there is little or no reference data from these target groups. The practical value of our work is evident in medical imaging analysis, where under-diagnosis persists as a problem for certain groups due to insufficient representation.

A Demographic-Conditioned Variational Autoencoder for fMRI Distribution Sampling and Removal of Confounds

May 13, 2024

Abstract:Objective: fMRI and derived measures such as functional connectivity (FC) have been used to predict brain age, general fluid intelligence, psychiatric disease status, and preclinical neurodegenerative disease. However, it is not always clear that all demographic confounds, such as age, sex, and race, have been removed from fMRI data. Additionally, many fMRI datasets are restricted to authorized researchers, making dissemination of these valuable data sources challenging. Methods: We create a variational autoencoder (VAE)-based model, DemoVAE, to decorrelate fMRI features from demographics and generate high-quality synthetic fMRI data based on user-supplied demographics. We train and validate our model using two large, widely used datasets, the Philadelphia Neurodevelopmental Cohort (PNC) and Bipolar and Schizophrenia Network for Intermediate Phenotypes (BSNIP). Results: We find that DemoVAE recapitulates group differences in fMRI data while capturing the full breadth of individual variations. Significantly, we also find that most clinical and computerized battery fields that are correlated with fMRI data are not correlated with DemoVAE latents. The exceptions are several fields related to schizophrenia medication and symptom severity. Conclusion: Our model generates fMRI data that captures the full distribution of FC better than traditional VAE or GAN models. We also find that most prediction using fMRI data is dependent on correlation with, and prediction of, demographics. Significance: Our DemoVAE model allows for generation of high-quality synthetic data conditioned on subject demographics as well as the removal of the confounding effects of demographics. We identify that FC-based prediction tasks are highly influenced by demographic confounds.

GCC: Generative Calibration Clustering

Apr 14, 2024

Abstract:Deep clustering, an important branch of unsupervised representation learning, focuses on embedding semantically similar samples into the same feature space. This core demand inspires the exploration of contrastive learning and subspace clustering. However, these solutions always rely on the basic assumption that there are sufficient and category-balanced samples for generating valid high-level representations. This assumption is too strict to be satisfied in real-world applications. To overcome this challenge, a natural strategy is to use generative models to augment the data with a considerable number of instances. How to use these novel samples to effectively improve clustering performance remains difficult and under-explored. In this paper, we propose a novel Generative Calibration Clustering (GCC) method to delicately incorporate feature learning and augmentation into the clustering procedure. First, we develop a discriminative feature alignment mechanism to discover intrinsic relationships across real and generated samples. Second, we design a self-supervised metric learning strategy to generate more reliable cluster assignments to boost the conditional diffusion generation. Extensive experimental results on three benchmarks validate the effectiveness and advantage of our proposed method over the state-of-the-art methods.

Sketch3D: Style-Consistent Guidance for Sketch-to-3D Generation

Apr 07, 2024

Abstract:Recently, image-to-3D approaches have achieved significant results with a natural image as input. However, it is not always possible to access these enriched color input samples in practical applications, where only sketches are available. Existing sketch-to-3D research suffers from limited applicability due to the lack of color information and multi-view content. To overcome these limitations, this paper proposes a novel generation paradigm, Sketch3D, to generate realistic 3D assets with shape aligned with the input sketch and color matching the textual description. Concretely, Sketch3D first instantiates the given sketch in the reference image through the shape-preserving generation process. Second, the reference image is leveraged to deduce a coarse 3D Gaussian prior, and multi-view style-consistent guidance images are generated based on the renderings of the 3D Gaussians. Finally, three strategies are designed to optimize 3D Gaussians, i.e., structural optimization via a distribution transfer mechanism, color optimization with a straightforward MSE loss, and sketch similarity optimization with a CLIP-based geometric similarity loss. Extensive visual comparisons and quantitative analysis illustrate the advantage of our Sketch3D in generating realistic 3D assets while preserving consistency with the input.
