Zeqing Wang

SpotEdit: Selective Region Editing in Diffusion Transformers

Dec 26, 2025

Abstract:Diffusion Transformer models have significantly advanced image editing by encoding conditional images and integrating them into transformer layers. However, most edits involve modifying only small regions, while current methods uniformly process and denoise all tokens at every timestep, causing redundant computation and potentially degrading unchanged areas. This raises a fundamental question: Is it truly necessary to regenerate every region during editing? To address this, we propose SpotEdit, a training-free diffusion editing framework that selectively updates only the modified regions. SpotEdit comprises two key components: SpotSelector identifies stable regions via perceptual similarity and skips their computation by reusing conditional image features; SpotFusion adaptively blends these features with edited tokens through a dynamic fusion mechanism, preserving contextual coherence and editing quality. By reducing unnecessary computation and maintaining high fidelity in unmodified areas, SpotEdit achieves efficient and precise image editing.
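
The selective-update idea in the SpotEdit abstract can be illustrated with a minimal sketch. It is not the authors' implementation: cosine similarity stands in for the perceptual similarity the abstract mentions, and the names `split_tokens`, `fuse`, `threshold`, and `alpha` are hypothetical. Tokens that stay close to the conditional image are marked stable and take their features from the condition, while the remaining tokens are left for the denoiser to process.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def split_tokens(edited, condition, threshold=0.95):
    """Return (stable, active) token indices.

    Assumption: a token is 'stable' when its edited feature is close to the
    conditional-image feature; cosine similarity is a stand-in metric here.
    """
    stable, active = [], []
    for i, (e, c) in enumerate(zip(edited, condition)):
        (stable if cosine(e, c) >= threshold else active).append(i)
    return stable, active

def fuse(edited, condition, stable, alpha=1.0):
    """Blend condition features into stable tokens; alpha=1.0 is pure reuse."""
    out = [list(t) for t in edited]
    for i in stable:
        out[i] = [alpha * c + (1 - alpha) * e
                  for e, c in zip(edited[i], condition[i])]
    return out

# Toy example: three tokens with 2-dimensional features.
condition = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
edited = [[1.0, 0.01], [1.0, 0.0], [0.9, 1.0]]
stable, active = split_tokens(edited, condition)
fused = fuse(edited, condition, stable)
```

In this toy input only the second token differs strongly from the condition, so it is the only one that would be sent through the transformer layers; the other two reuse the conditional features.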

MICo-150K: A Comprehensive Dataset Advancing Multi-Image Composition

Dec 08, 2025

Abstract:In controllable image generation, synthesizing coherent and consistent images from multiple reference inputs, i.e., Multi-Image Composition (MICo), remains a challenging problem, partly hindered by the lack of high-quality training data. To bridge this gap, we conduct a systematic study of MICo, categorizing it into 7 representative tasks, curating a large-scale collection of high-quality source images, and constructing diverse MICo prompts. Leveraging powerful proprietary models, we synthesize a large number of balanced composite images, followed by human-in-the-loop filtering and refinement, resulting in MICo-150K, a comprehensive dataset for MICo with identity consistency. We further build a Decomposition-and-Recomposition (De&Re) subset, where 11K real-world complex images are decomposed into components and recomposed, enabling both real and synthetic compositions. To enable comprehensive evaluation, we construct MICo-Bench with 100 cases per task and 300 challenging De&Re cases, and further introduce a new metric, Weighted-Ref-VIEScore, specifically tailored for MICo evaluation. Finally, we fine-tune multiple models on MICo-150K and evaluate them on MICo-Bench. The results show that MICo-150K effectively equips models without MICo capability and further enhances those with existing skills. Notably, our baseline model, Qwen-MICo, fine-tuned from Qwen-Image-Edit, matches Qwen-Image-2509 in 3-image composition while supporting arbitrary multi-image inputs beyond the latter's limitation. Our dataset, benchmark, and baseline collectively offer valuable resources for further research on Multi-Image Composition.

Minute-Long Videos with Dual Parallelisms

May 29, 2025

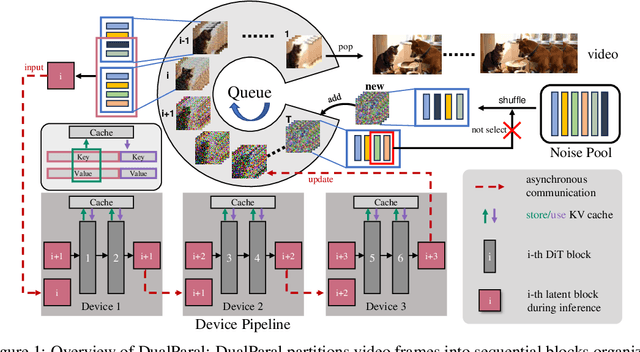

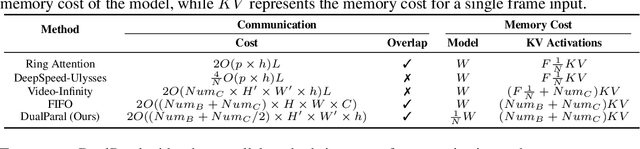

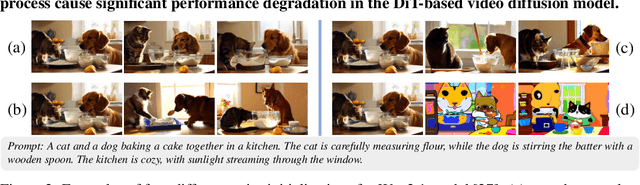

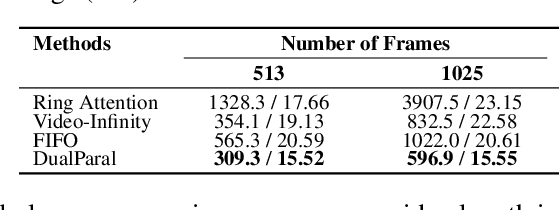

Abstract:Diffusion Transformer (DiT)-based video diffusion models generate high-quality videos at scale but incur prohibitive processing latency and memory costs for long videos. To address this, we propose a novel distributed inference strategy, termed DualParal. The core idea is that, instead of generating an entire video on a single GPU, we parallelize both temporal frames and model layers across GPUs. However, a naive implementation of this division faces a key limitation: since diffusion models require synchronized noise levels across frames, this implementation leads to the serialization of original parallelisms. We leverage a block-wise denoising scheme to handle this. Namely, we process a sequence of frame blocks through the pipeline with progressively decreasing noise levels. Each GPU handles a specific block and layer subset while passing previous results to the next GPU, enabling asynchronous computation and communication. To further optimize performance, we incorporate two key enhancements. Firstly, a feature cache is implemented on each GPU to store and reuse features from the prior block as context, minimizing inter-GPU communication and redundant computation. Secondly, we employ a coordinated noise initialization strategy, ensuring globally consistent temporal dynamics by sharing initial noise patterns across GPUs without extra resource costs. Together, these enable fast, artifact-free, and infinitely long video generation. Applied to the latest diffusion transformer video generator, our method efficiently produces 1,025-frame videos with up to 6.54$\times$ lower latency and 1.48$\times$ lower memory cost on 8$\times$RTX 4090 GPUs.
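
The block-wise denoising scheme described in the DualParal abstract can be pictured with a small scheduling simulation. This is a sketch under stated assumptions, not the paper's implementation: it models only a first-in, first-out queue of frame blocks in which older blocks sit at lower noise levels, and it ignores the layer partitioning across GPUs, the feature cache, and the coordinated noise initialization. The function name `blockwise_schedule` and its parameters are hypothetical.

```python
from collections import deque

def blockwise_schedule(num_blocks, num_steps):
    """Simulate a FIFO queue of frame blocks at staggered noise levels.

    Each tick admits at most one fully noisy block and applies one denoising
    step to every in-flight block. Returns (finish_order, ticks).
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    queue = deque()      # entries are [block_id, remaining_steps]
    finished = []
    next_block = 0
    ticks = 0
    while len(finished) < num_blocks:
        # Admit a new block while the queue holds fewer than num_steps blocks.
        if next_block < num_blocks and len(queue) < num_steps:
            queue.append([next_block, num_steps])
            next_block += 1
        # One denoising step for every block currently in the queue.
        for entry in queue:
            entry[1] -= 1
        # The head is the oldest block, so it reaches zero noise first.
        while queue and queue[0][1] == 0:
            finished.append(queue.popleft()[0])
        ticks += 1
    return finished, ticks

order, ticks = blockwise_schedule(num_blocks=5, num_steps=3)
```

With 5 blocks and 3 denoising steps the simulation finishes in 7 ticks (`num_blocks + num_steps - 1`), compared with 15 ticks if each block were fully denoised before the next one started.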

TimeCausality: Evaluating the Causal Ability in Time Dimension for Vision Language Models

May 21, 2025

Abstract:Reasoning about temporal causality, particularly irreversible transformations of objects governed by real-world knowledge (e.g., fruit decay and human aging), is a fundamental aspect of human visual understanding. Unlike temporal perception based on simple event sequences, this form of reasoning requires a deeper comprehension of how object states change over time. Although the current powerful Vision-Language Models (VLMs) have demonstrated impressive performance on a wide range of downstream tasks, their capacity to reason about temporal causality remains underexplored. To address this gap, we introduce TimeCausality, a novel benchmark specifically designed to evaluate the causal reasoning ability of VLMs in the temporal dimension. Based on our TimeCausality, we find that while the current SOTA open-source VLMs have achieved performance levels comparable to closed-source models like GPT-4o on various standard visual question answering tasks, they fall significantly behind on our benchmark compared with their closed-source competitors. Furthermore, even GPT-4o exhibits a marked drop in performance on TimeCausality compared to its results on other tasks. These findings underscore the critical need to incorporate temporal causality into the evaluation and development of VLMs, and they highlight an important challenge for the open-source VLM community moving forward. Code and data are available at https://github.com/Zeqing-Wang/TimeCausality.

Tracking-Aware Deformation Field Estimation for Non-rigid 3D Reconstruction in Robotic Surgeries

Mar 04, 2025

Abstract:Minimally invasive procedures have advanced rapidly thanks to robotic laparoscopic surgery, which greatly assists surgeons in sophisticated and precise operations with reduced invasiveness. Nevertheless, it remains safety-critical to be aware of even the slightest tissue deformation during instrument-tissue interactions, especially in 3D space. To address this, recent works rely on NeRF to render 2D videos from different perspectives and eliminate occlusions. However, most of these methods fail to robustly predict accurate 3D shapes and the associated deformation estimates. In contrast, we propose Tracking-Aware Deformation Field (TADF), a novel framework that reconstructs the 3D mesh and the 3D tissue deformation simultaneously. It first tracks the key points of soft tissue with a foundation vision model, providing an accurate 2D deformation field. Then, the 2D deformation field is smoothly incorporated into a neural implicit reconstruction network to obtain tissue deformation in 3D space. Finally, we experimentally demonstrate that the proposed method provides more accurate deformation estimation than other 3D neural reconstruction methods on two public datasets.

SAMCL: Empowering SAM to Continually Learn from Dynamic Domains

Dec 06, 2024

Abstract:Segment Anything Model (SAM) struggles with segmenting objects in the open world, especially across diverse and dynamic domains. Continual segmentation (CS) is a potential technique to solve this issue, but a significant obstacle is the intractable balance between previous domains (stability) and new domains (plasticity) during CS. Furthermore, how to utilize two kinds of features of SAM, images and prompts, in an efficient and effective CS manner remains a significant hurdle. In this work, we propose a novel CS method, termed SAMCL, to address these challenges. It is the first study to empower SAM with the CS ability across dynamic domains. SAMCL decouples stability and plasticity during CS by two components: $\textit{AugModule}$ and $\textit{Module Selector}$. Specifically, SAMCL leverages individual $\textit{AugModule}$ to effectively and efficiently learn new relationships between images and prompts in each domain. $\textit{Module Selector}$ selects the appropriate module during testing, based on the inherent ability of SAM to distinguish between different domains. These two components enable SAMCL to realize a task-agnostic method without any interference across different domains. Experimental results demonstrate that SAMCL outperforms state-of-the-art methods, achieving an exceptionally low average forgetting of just $0.5$%, along with at least a $2.5$% improvement in transferring to unseen domains. Moreover, the tunable parameter consumption in AugModule is about $0.236$MB, marking at least a $23.3$% reduction compared to other fine-tuning methods.

Is this Generated Person Existed in Real-world? Fine-grained Detecting and Calibrating Abnormal Human-body

Nov 21, 2024

Abstract:Recent improvements in visual synthesis have significantly enhanced the depiction of generated human photos, which are pivotal due to their wide applicability and demand. Nonetheless, the existing text-to-image or text-to-video models often generate low-quality human photos that might differ considerably from real-world body structures, referred to as "abnormal human bodies". Such abnormalities, typically deemed unacceptable, are considerably challenging to detect and repair within human photos. These challenges require precise abnormality recognition capabilities, which entail pinpointing both the location and the abnormality type. Intuitively, Visual Language Models (VLMs) that have obtained remarkable performance on various visual tasks are quite suitable for this task. However, their performance on abnormality detection in human photos is quite poor. Hence, it is important to highlight this task for the research community. In this paper, we first introduce a simple yet challenging task, i.e., Fine-grained Human-body Abnormality Detection (FHAD), and construct two high-quality datasets for evaluation. Then, we propose a meticulous framework, named HumanCalibrator, which identifies and repairs abnormalities in human body structures while preserving the other content. Experiments indicate that our HumanCalibrator achieves high accuracy in abnormality detection and accomplishes an increase in visual comparisons while preserving the other visual content.

Efficient Continual Learning with Low Memory Footprint For Edge Device

Jul 15, 2024

Abstract:Continual learning (CL) is a useful technique to acquire dynamic knowledge continually. Although powerful cloud platforms can fully exert the ability of CL, e.g., customized recommendation systems, similar personalized requirements for edge devices are almost disregarded. This phenomenon stems from the huge resource overhead involved in training neural networks and overcoming the forgetting problem of CL. This paper focuses on these scenarios and proposes a compact algorithm called LightCL. Unlike other CL methods, which consume huge resources to acquire generalizability among all tasks for delaying forgetting, LightCL compresses the resource consumption of already generalized components in neural networks and uses a few extra resources to improve memory in other parts. We first propose two new metrics of learning plasticity and memory stability to seek generalizability during CL. Based on the discovery that lower and middle layers have more generalizability while deeper layers have less, we $\textit{Maintain Generalizability}$ by freezing the lower and middle layers. Then, we $\textit{Memorize Feature Patterns}$ to stabilize the feature extracting patterns of previous tasks to improve generalizability in deeper layers. In the experimental comparison, LightCL outperforms other SOTA methods in delaying forgetting and reduces the memory footprint by up to $6.16\times$, proving the excellent performance of LightCL in efficiency. We also evaluate the efficiency of our method on an edge device, the Jetson Nano, which further proves our method's practical effectiveness.

Jump-teaching: Ultra Efficient and Robust Learning with Noisy Label

May 28, 2024

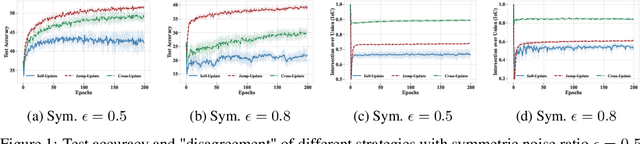

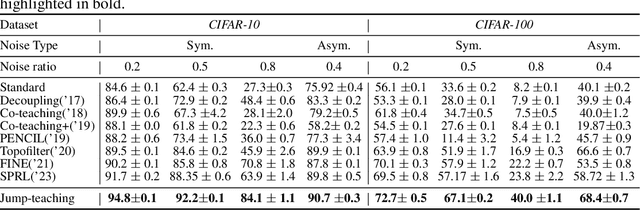

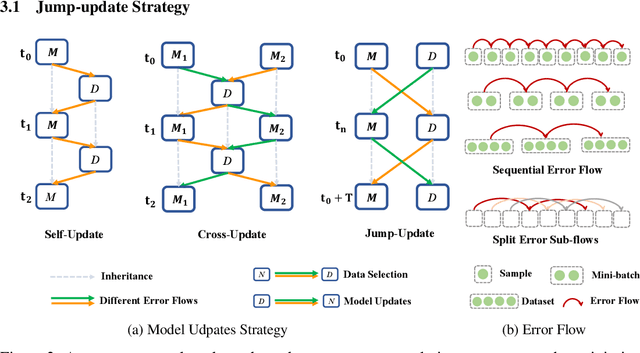

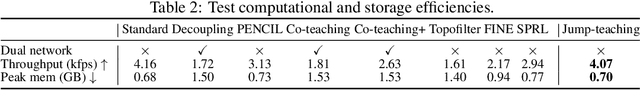

Abstract:Sample selection is the most straightforward technique to combat label noise, aiming to distinguish mislabeled samples during training and avoid the degradation of the robustness of the model. In the workflow, $\textit{selecting possibly clean data}$ and $\textit{model update}$ are iterative. However, their interplay and intrinsic characteristics hinder the robustness and efficiency of learning with noisy labels: 1) The model chooses clean data with selection bias, leading to accumulated error in the model update. 2) Most selection strategies leverage partner networks or supplementary information to mitigate label corruption, albeit with increased computation resources and lower throughput. Therefore, we employ only one network with a jump-style update to decouple the interplay and mine more semantic information from the loss for a more precise selection. Specifically, the selection of clean data for each model update is based on one of the prior models, excluding the last iteration. The model update strategy thus exhibits a jump-like pattern. Moreover, we map the outputs of the network and the labels into the same semantic feature space. In this space, a detailed and simple loss distribution is generated to distinguish clean samples more effectively. Our proposed approach achieves up to nearly $2.53\times$ speedup, $0.46\times$ peak memory footprint, and superior robustness over state-of-the-art works with various noise settings.
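
The jump-style update in the Jump-teaching abstract, where clean samples for the current update are chosen by an earlier model rather than the most recent one, can be sketched as follows. This is an illustration under assumptions, not the authors' method: the small-loss criterion is a commonly used stand-in for the paper's loss distribution in semantic feature space, and `small_loss_mask`, `jump_selection`, `jump`, and `keep_ratio` are hypothetical names.

```python
def small_loss_mask(losses, keep_ratio):
    """Mark the keep_ratio fraction of samples with the smallest loss as clean.

    Assumption: the small-loss criterion replaces the paper's own selection rule.
    """
    k = max(1, int(len(losses) * keep_ratio))
    keep = set(sorted(range(len(losses)), key=losses.__getitem__)[:k])
    return [i in keep for i in range(len(losses))]

def jump_selection(loss_history, jump=2, keep_ratio=0.5):
    """For each iteration t, build the training mask from iteration t - jump.

    With jump >= 2 the mask never comes from the immediately preceding
    iteration. Warm-up iterations (t < jump) train on all samples.
    """
    masks = []
    for t in range(len(loss_history)):
        src = t - jump
        if src < 0:
            masks.append([True] * len(loss_history[t]))
        else:
            masks.append(small_loss_mask(loss_history[src], keep_ratio))
    return masks

# Per-sample losses observed at four consecutive iterations (toy values).
loss_history = [
    [0.1, 2.0, 0.3, 1.5],
    [0.2, 0.1, 1.8, 1.9],
    [0.1, 1.0, 0.2, 2.0],
    [0.3, 0.9, 0.1, 1.7],
]
masks = jump_selection(loss_history, jump=2, keep_ratio=0.5)
```

Because the mask used at iteration `t` is computed from iteration `t - jump`, a selection mistake made by one model state does not directly feed the very next update, which is the decoupling the abstract describes.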

Mimic: Speaking Style Disentanglement for Speech-Driven 3D Facial Animation

Dec 18, 2023

Abstract:Speech-driven 3D facial animation aims to synthesize vivid facial animations that accurately synchronize with speech and match the unique speaking style. However, existing works primarily focus on achieving precise lip synchronization while neglecting to model the subject-specific speaking style, often resulting in unrealistic facial animations. To the best of our knowledge, this work makes the first attempt to explore the coupled information between the speaking style and the semantic content in facial motions. Specifically, we introduce an innovative speaking style disentanglement method, which enables arbitrary-subject speaking style encoding and leads to a more realistic synthesis of speech-driven facial animations. Subsequently, we propose a novel framework called Mimic to learn disentangled representations of the speaking style and content from facial motions by building two latent spaces for style and content, respectively. Moreover, to facilitate disentangled representation learning, we introduce four well-designed constraints: an auxiliary style classifier, an auxiliary inverse classifier, a content contrastive loss, and a pair of latent cycle losses, which can effectively contribute to the construction of the identity-related style space and semantic-related content space. Extensive qualitative and quantitative experiments conducted on three publicly available datasets demonstrate that our approach outperforms state-of-the-art methods and is capable of capturing diverse speaking styles for speech-driven 3D facial animation. The source code and supplementary video are publicly available at: https://zeqing-wang.github.io/Mimic/
