Ze Li

EvolvTrip: Enhancing Literary Character Understanding with Temporal Theory-of-Mind Graphs

Jun 16, 2025

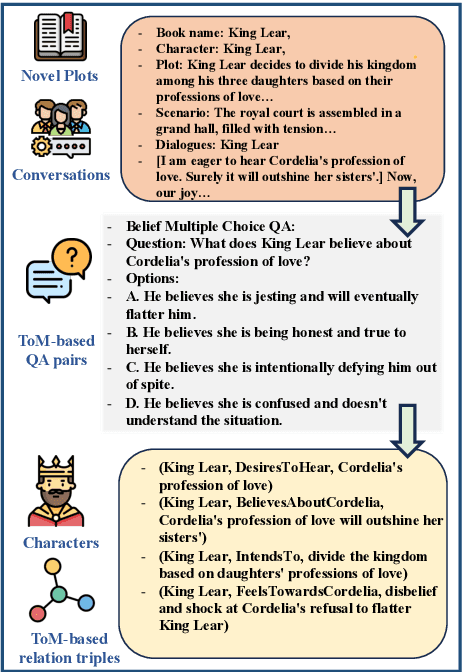

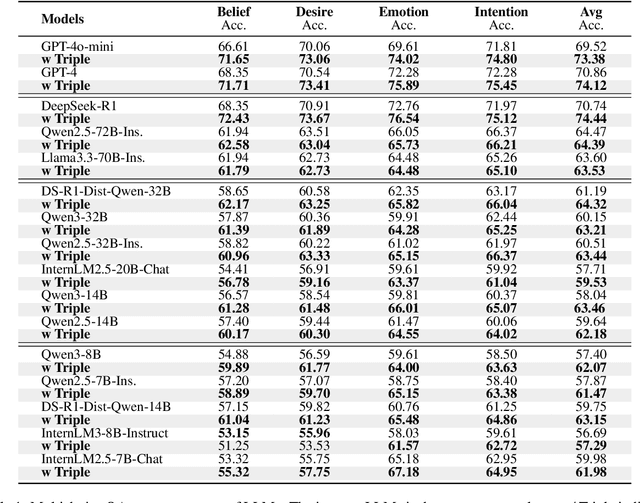

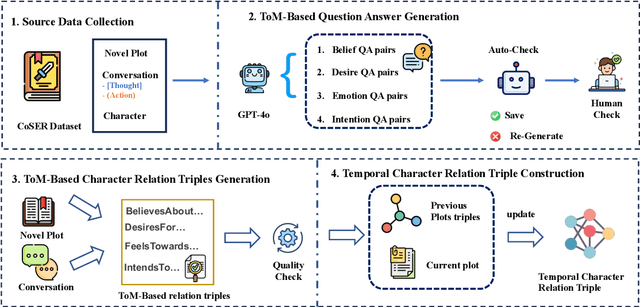

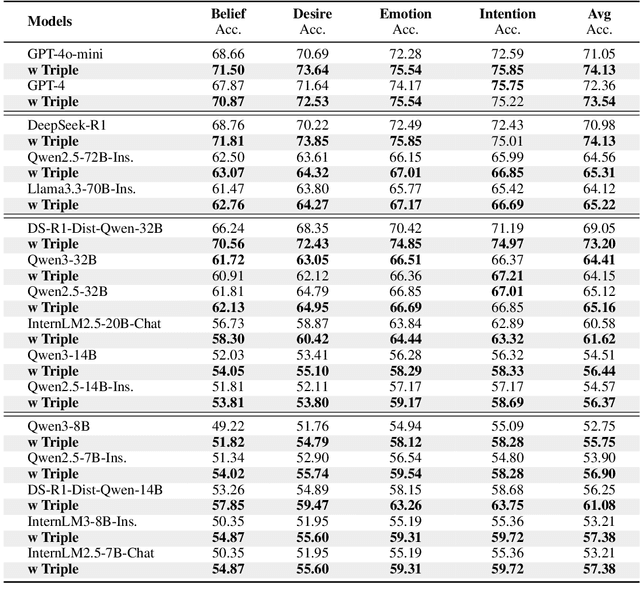

Abstract: A compelling portrayal of characters is essential to the success of narrative writing. For readers, appreciating a character's traits requires the ability to infer their evolving beliefs, desires, and intentions over the course of a complex storyline, a cognitive skill known as Theory-of-Mind (ToM). Performing ToM reasoning in prolonged narratives requires readers to integrate historical context with current narrative information, a task at which humans excel but Large Language Models (LLMs) often struggle. To systematically evaluate LLMs' ToM reasoning capability in long narratives, we construct LitCharToM, a benchmark of character-centric questions across four ToM dimensions from classic literature. Further, we introduce EvolvTrip, a perspective-aware temporal knowledge graph that tracks psychological development throughout narratives. Our experiments demonstrate that EvolvTrip consistently enhances performance of LLMs across varying scales, even in challenging extended-context scenarios. EvolvTrip proves to be particularly valuable for smaller models, partially bridging the performance gap with larger LLMs and showing great compatibility with lengthy narratives. Our findings highlight the importance of explicit representation of temporal character mental states in narrative comprehension and offer a foundation for more sophisticated character understanding. Our data and code are publicly available at https://github.com/Bernard-Yang/EvolvTrip.

Diarization-Aware Multi-Speaker Automatic Speech Recognition via Large Language Models

Jun 06, 2025

Abstract: Multi-speaker automatic speech recognition (MS-ASR) faces significant challenges in transcribing overlapped speech, a task critical for applications like meeting transcription and conversational analysis. While serialized output training (SOT)-style methods serve as common solutions, they often discard absolute timing information, limiting their utility in time-sensitive scenarios. Leveraging recent advances in large language models (LLMs) for conversational audio processing, we propose a novel diarization-aware multi-speaker ASR system that integrates speaker diarization with LLM-based transcription. Our framework processes structured diarization inputs alongside frame-level speaker and semantic embeddings, enabling the LLM to generate segment-level transcriptions. Experiments demonstrate that the system achieves robust performance in multilingual dyadic conversations and excels in complex, high-overlap multi-speaker meeting scenarios. This work highlights the potential of LLMs as unified back-ends for joint speaker-aware segmentation and transcription.

Robust Low-Light Human Pose Estimation through Illumination-Texture Modulation

Jan 14, 2025

Abstract: As critical visual details become obscured, the low visibility and high ISO noise in extremely low-light images pose a significant challenge to human pose estimation. Current methods fail to provide high-quality representations because they rely on pixel-level enhancements that compromise semantics and cannot effectively handle extreme low-light conditions for robust feature learning. In this work, we propose a frequency-based framework for low-light human pose estimation, rooted in the "divide-and-conquer" principle. Instead of uniformly enhancing the entire image, our method focuses on task-relevant information. By applying dynamic illumination correction to the low-frequency components and low-rank denoising to the high-frequency components, we effectively enhance both the semantic and texture information essential for accurate pose estimation. This targeted enhancement yields robust, high-quality representations and significantly improves pose estimation performance. Extensive experiments demonstrate its superiority over state-of-the-art methods in various challenging low-light scenarios.

Adversarial Attacks and Robust Defenses in Speaker Embedding based Zero-Shot Text-to-Speech System

Oct 05, 2024

Abstract: Speaker embedding based zero-shot Text-to-Speech (TTS) systems enable high-quality speech synthesis for unseen speakers using minimal data. However, these systems are vulnerable to adversarial attacks, where an attacker introduces imperceptible perturbations to the original speaker's audio waveform, causing the synthesized speech to sound like another person. This vulnerability poses significant security risks, including speaker identity spoofing and unauthorized voice manipulation. This paper investigates two primary defense strategies to address these threats: adversarial training and adversarial purification. Adversarial training enhances the model's robustness by integrating adversarial examples during the training process, thereby improving resistance to such attacks. Adversarial purification, on the other hand, employs diffusion probabilistic models to revert adversarially perturbed audio to its clean form. Experimental results demonstrate that these defense mechanisms can significantly reduce the impact of adversarial perturbations, enhancing the security and reliability of speaker embedding based zero-shot TTS systems in adversarial environments.

The Database and Benchmark for Source Speaker Verification Against Voice Conversion

Jun 07, 2024

Abstract: Voice conversion systems can transform audio to mimic another speaker's voice, thereby attacking speaker verification systems. However, ongoing studies on source speaker verification are hindered by limited data availability and methodological constraints. In this paper, we generate a large-scale converted speech database and train a set of baseline systems based on the MFA-Conformer architecture to promote the source speaker verification task. In addition, we introduce a related task called conversion method recognition. An adapter-based multi-task learning approach is employed to achieve effective conversion method recognition without compromising source speaker verification performance. We also investigate and effectively address the open-set conversion method recognition problem with an open-set nearest neighbor approach.

Positional encoding is not the same as context: A study on positional encoding for Sequential recommendation

May 16, 2024

Abstract: The expansion of streaming media and e-commerce has led to a boom in recommendation systems, including sequential recommendation systems, which consider the user's previous interactions with items. In recent years, research has focused on architectural improvements such as transformer blocks and feature extraction that can augment model information. Among these features are context and attributes. Of particular importance is the temporal footprint, which is often considered part of the context and seen in previous publications as interchangeable with positional information. Other publications use positional encodings but pay little attention to them. In this paper, we analyse positional encodings, showing that they provide relative information between items that is not inferable from the temporal footprint. Furthermore, we evaluate different encodings and how they affect metrics and stability using Amazon datasets. We also introduce some new encodings to help with these problems. We find that choosing the correct positional encoding can reach new state-of-the-art results and, more importantly, that certain encodings stabilise the training.
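
As a rough illustration of what "relative information between items" can mean, the sketch below uses the standard sinusoidal encoding from the original Transformer architecture. This is an assumption chosen for illustration: the abstract does not say which encodings the paper evaluates or proposes, and the function names here are hypothetical. For this particular encoding, the dot product of two position vectors depends only on the distance between the positions, not on their absolute values.

```python
import math


def sinusoidal_encoding(position, dim):
    """Sinusoidal encoding of one sequence position (dim assumed even)."""
    vec = []
    for i in range(0, dim, 2):
        # Each sin/cos pair shares one frequency; frequencies decay with i.
        freq = 1.0 / (10000 ** (i / dim))
        vec.append(math.sin(position * freq))
        vec.append(math.cos(position * freq))
    return vec


def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


# Items two steps apart have the same similarity wherever they sit in the
# sequence, because sin(a)sin(b) + cos(a)cos(b) = cos(a - b).
near_start = dot(sinusoidal_encoding(3, 8), sinusoidal_encoding(5, 8))
further_on = dot(sinusoidal_encoding(10, 8), sinusoidal_encoding(12, 8))
print(abs(near_start - further_on) < 1e-9)
```

Timestamps carry no such guarantee: two interactions that are adjacent in the sequence may be seconds or months apart, which is one way to read the abstract's claim that position and temporal footprint are not interchangeable.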

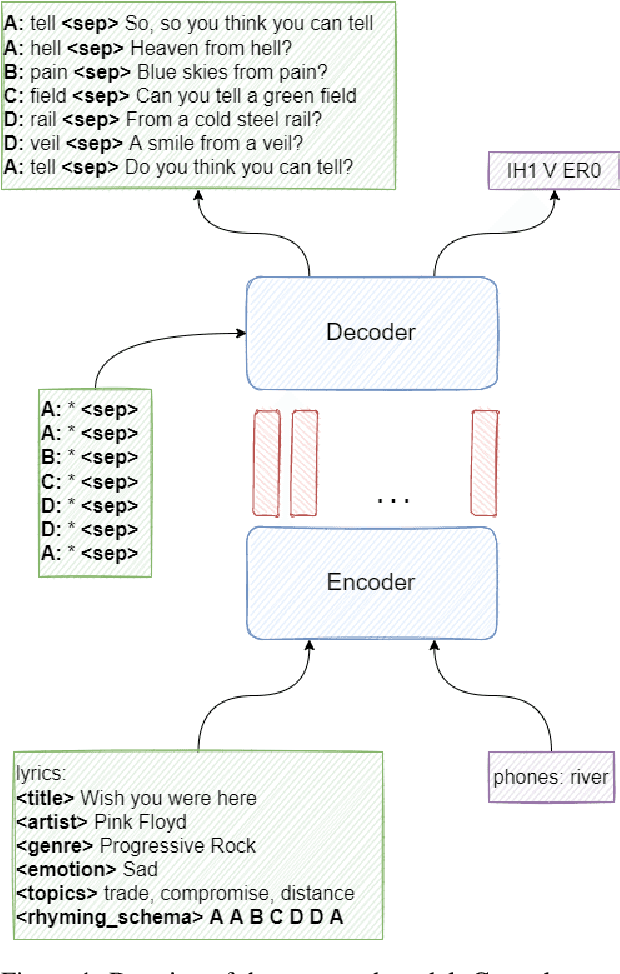

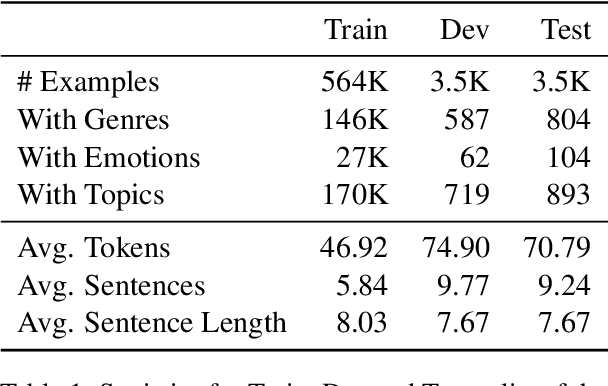

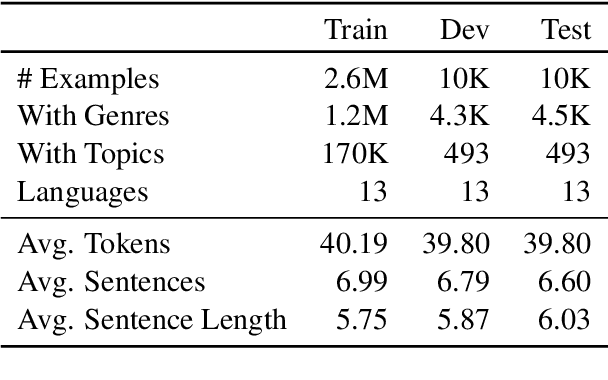

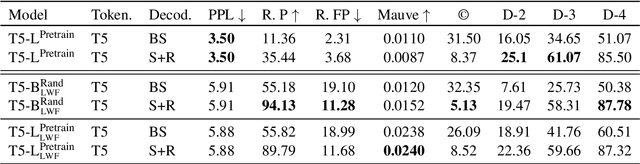

Encoder-Decoder Framework for Interactive Free Verses with Generation with Controllable High-Quality Rhyming

May 08, 2024

Abstract: Composing poetry or lyrics involves several creative factors, but a challenging aspect of generation is the adherence to a more or less strict metric and rhyming pattern. To address this challenge specifically, previous work on the task has mainly focused on reverse language modeling, which brings the critical selection of each rhyming word to the forefront of each verse. On the other hand, reversing the word order requires that models be trained from scratch with this task-specific goal and cannot take advantage of transfer learning from a Pretrained Language Model (PLM). We propose a novel fine-tuning approach that prepends the rhyming word at the start of each lyric, which allows the critical rhyming decision to be made before the model commits to the content of the lyric (as during reverse language modeling), but maintains compatibility with the word order of regular PLMs as the lyric itself is still generated in left-to-right order. We conducted extensive experiments to compare this fine-tuning against the current state-of-the-art strategies for rhyming, finding that our approach generates more readable text and rhymes better. Furthermore, we furnish a high-quality dataset in English and 12 other languages, analyse the approach's feasibility in a multilingual context, provide extensive experimental results shedding light on good and bad practices for lyrics generation, and propose metrics to compare methods in the future.
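
The prepending idea can be sketched as a small data-formatting step. This is a minimal sketch under stated assumptions, not the authors' preprocessing: the function name `prepend_rhyme_word`, the ` | ` separator, and the punctuation handling are all hypothetical, since the abstract does not specify the exact training format.

```python
def prepend_rhyme_word(line, sep=" | "):
    """Format one lyric line as '<rhyme word><sep><original line>'.

    The rhyme word is taken to be the last word of the line, lowercased
    and stripped of surrounding punctuation (an assumption for this sketch).
    """
    words = line.strip().split()
    if not words:
        return ""
    rhyme = words[-1].strip(".,;:!?\"'").lower()
    # The line itself stays in normal left-to-right order, so a regular
    # pretrained language model can still be fine-tuned on it.
    return rhyme + sep + " ".join(words)


print(prepend_rhyme_word("The night is cold and bright,"))
# prints "bright | The night is cold and bright,"
```

With examples in this shape, a left-to-right model emits the rhyme word first and then writes a line that has to end on it, which mirrors the motivation the abstract gives for making the rhyming decision up front.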

Multi-objective Progressive Clustering for Semi-supervised Domain Adaptation in Speaker Verification

Oct 07, 2023

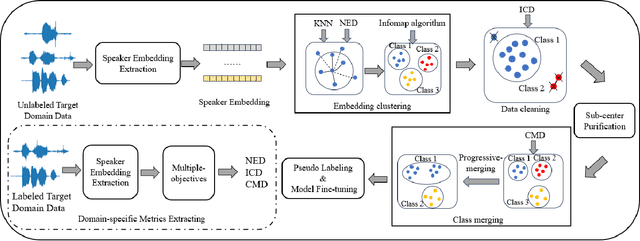

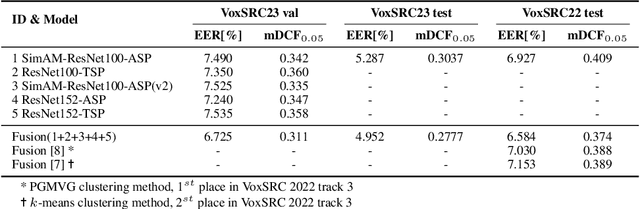

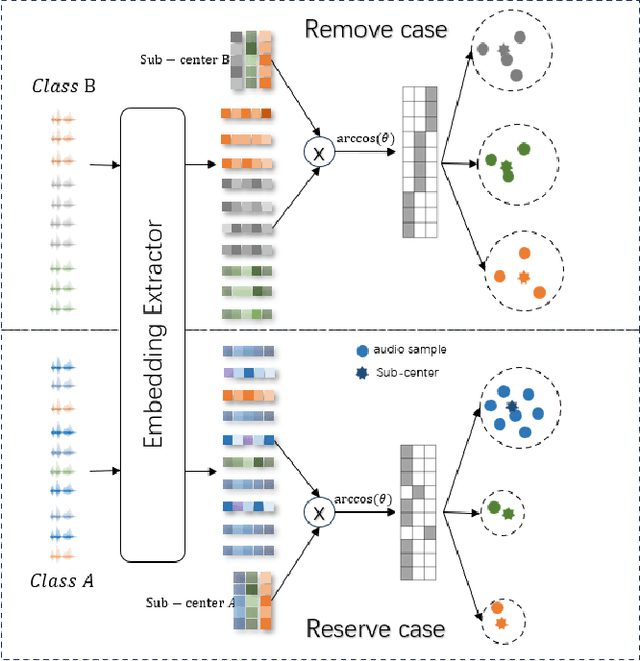

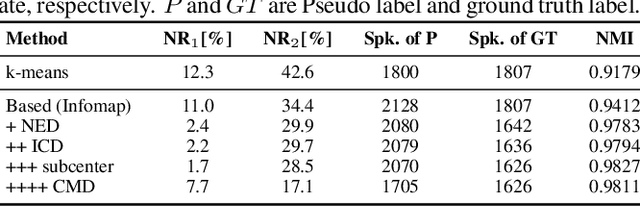

Abstract: Applying pseudo-labeling algorithms to large-scale unlabeled data has become crucial for semi-supervised domain adaptation in speaker verification tasks. In this paper, we propose a novel pseudo-labeling method named Multi-objective Progressive Clustering (MoPC), specifically designed for semi-supervised domain adaptation. First, we utilize limited labeled data from the target domain to derive domain-specific descriptors based on multiple distinct objectives, namely within-graph denoising, intra-class denoising and inter-class denoising. Then, the Infomap algorithm is adopted for embedding clustering, and the descriptors are leveraged to further refine the target domain's pseudo-labels. Moreover, to further improve the quality of the pseudo-labels, we introduce the subcenter-purification and progressive-merging strategy for label denoising. Our proposed MoPC method achieves 4.95% EER and ranks 1st on the evaluation set of VoxSRC 2023 Track 3. We also conduct additional experiments on the FFSVC dataset and obtain promising results.
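
For readers unfamiliar with the metric quoted above, the equal error rate (EER) is the operating point at which the false acceptance rate equals the false rejection rate. The sketch below is a simple threshold sweep written for illustration; the function name and the toy scores are hypothetical, and this is not the official VoxSRC scoring code.

```python
def equal_error_rate(target_scores, nontarget_scores):
    """Approximate the EER by sweeping every observed score as a threshold.

    A trial is accepted when its score is >= the threshold. The EER is
    taken at the threshold where the false acceptance rate (FAR) and the
    false rejection rate (FRR) are closest, as the average of the two.
    """
    thresholds = sorted(set(target_scores) | set(nontarget_scores))
    best_gap, eer = float("inf"), 1.0
    for t in thresholds:
        frr = sum(s < t for s in target_scores) / len(target_scores)
        far = sum(s >= t for s in nontarget_scores) / len(nontarget_scores)
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Toy scores: one of four target trials and one of four non-target trials
# fall on the wrong side of the best threshold.
print(equal_error_rate([0.9, 0.6, 0.4, 0.8], [0.1, 0.5, 0.3, 0.7]))
# prints 0.25
```

Production evaluations usually interpolate along the ROC curve instead of picking the nearest observed threshold, so values on real trial lists may differ slightly from this approximation.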

The DKU-MSXF Speaker Verification System for the VoxCeleb Speaker Recognition Challenge 2023

Aug 17, 2023

Abstract: This paper is the system description of the DKU-MSXF System for Track 1, Track 2 and Track 3 of the VoxCeleb Speaker Recognition Challenge 2023 (VoxSRC-23). For Track 1, we utilize a network structure based on ResNet for training. By constructing a cross-age QMF training set, we achieve a substantial improvement in system performance. For Track 2, we inherit the pre-trained model from Track 1 and conduct mixed training by incorporating the VoxBlink-clean dataset. In comparison to Track 1, the models incorporating VoxBlink-clean data exhibit a relative performance improvement of more than 10%. For Track 3, the semi-supervised domain adaptation task, a novel pseudo-labeling method based on triple thresholds and sub-center purification is adopted to perform domain adaptation. The final submission achieves mDCF of 0.1243 in Track 1, mDCF of 0.1165 in Track 2 and EER of 4.952% in Track 3.

Polarized hyperspectral imaging with single fiber bundle via incoherent light transmission matrix approach

Jan 11, 2021

Abstract: The scattering of multispectral incoherent light is a common and unfavorable form of signal scrambling in natural scenes. However, the blurred light spot due to scattering still holds a great deal of information that remains to be explored. Former methods failed to recover the polarized hyperspectral information from scattered incoherent light or relied on additional dispersion elements. Here we put forward the transmission matrix (TM) approach for extended objects under incoherent illumination by speculating the unknown TM through experimentally calibrated or digitally emulated ways. Employing a fiber bundle as a powerful imaging and dispersion element, we recover the spatial information in 252 polarized-spectral channels from a single speckle, thus achieving single-shot, high-resolution, broadband hyperspectral imaging for two polarization states with a cheap, compact, fiber-bundle-only system. Based on the scattering principle itself, our method not only greatly improves the robustness of the TM approach in retrieving the input spectral information, but also reveals the feasibility of exploring the polarized spatio-spectral information from blurry speckles with only simple optical setups.
