Yinlong Liu

AffordanceGrasp-R1: Leveraging Reasoning-Based Affordance Segmentation with Reinforcement Learning for Robotic Grasping

Feb 03, 2026

Abstract:We introduce AffordanceGrasp-R1, a reasoning-driven affordance segmentation framework for robotic grasping that combines a chain-of-thought (CoT) cold-start strategy with reinforcement learning to enhance deduction and spatial grounding. In addition, we redesign the grasping pipeline to be more context-aware by generating grasp candidates from the global scene point cloud and subsequently filtering them using instruction-conditioned affordance masks. Extensive experiments demonstrate that AffordanceGrasp-R1 consistently outperforms state-of-the-art (SOTA) methods on benchmark datasets, and real-world robotic grasping evaluations further validate its robustness and generalization under complex language-conditioned manipulation scenarios.
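The "generate globally, then filter by mask" step described in the abstract above can be illustrated with a minimal sketch. It is not the authors' pipeline: the grasp layout `(x, y, z, score)`, the pinhole intrinsics `fx, fy, cx, cy`, and the function name `filter_grasps` are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

# Hypothetical grasp layout: (x, y, z, score), with the grasp center in the camera frame.
Grasp = Tuple[float, float, float, float]


def filter_grasps(
    grasps: Sequence[Grasp],
    mask: Sequence[Sequence[bool]],
    fx: float, fy: float, cx: float, cy: float,
) -> List[Grasp]:
    """Keep grasps whose center projects onto a True mask pixel, best score first."""
    height, width = len(mask), len(mask[0])
    kept = []
    for x, y, z, score in grasps:
        if z <= 0:  # behind the camera, cannot be projected
            continue
        # Pinhole projection of the grasp center to pixel coordinates (u, v).
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= v < height and 0 <= u < width and mask[v][u]:
            kept.append((x, y, z, score))
    return sorted(kept, key=lambda g: g[3], reverse=True)
```

Candidates are scored on the whole scene first and only then restricted to the masked region, so a high-scoring grasp outside the mask is discarded rather than re-ranked.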

Hot-Swap MarkBoard: An Efficient Black-box Watermarking Approach for Large-scale Model Distribution

Jul 28, 2025

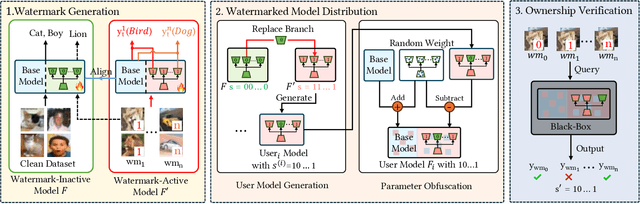

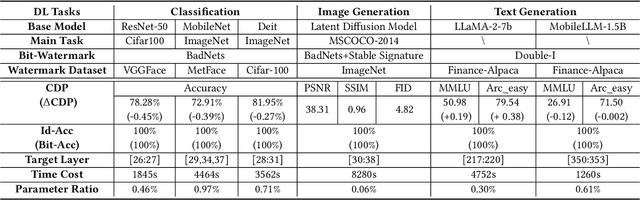

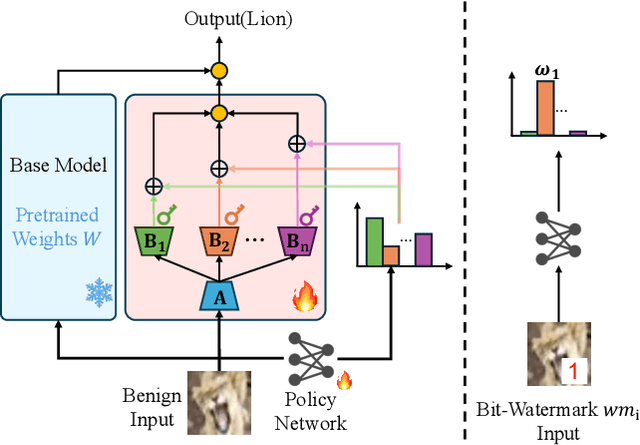

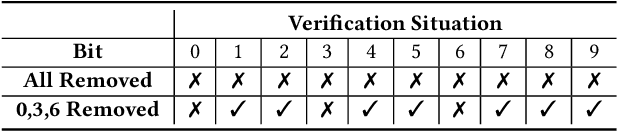

Abstract:Recently, Deep Learning (DL) models have been increasingly deployed on end-user devices as On-Device AI, offering improved efficiency and privacy. However, this deployment trend poses more serious Intellectual Property (IP) risks, as models are distributed on numerous local devices, making them vulnerable to theft and redistribution. Most existing ownership protection solutions (e.g., backdoor-based watermarking) are designed for cloud-based AI-as-a-Service (AIaaS) and are not directly applicable to large-scale distribution scenarios, where each user-specific model instance must carry a unique watermark. These methods typically embed a fixed watermark, and modifying the embedded watermark requires retraining the model. To address these challenges, we propose Hot-Swap MarkBoard, an efficient watermarking method. It encodes user-specific $n$-bit binary signatures by independently embedding multiple watermarks into a multi-branch Low-Rank Adaptation (LoRA) module, enabling efficient watermark customization without retraining through branch swapping. A parameter obfuscation mechanism further entangles the watermark weights with those of the base model, preventing removal without degrading model performance. The method supports black-box verification and is compatible with various model architectures and DL tasks, including classification, image generation, and text generation. Extensive experiments across three types of tasks and six backbone models demonstrate our method's superior efficiency and adaptability compared to existing approaches, achieving 100% verification accuracy.

Linearly Solving Robust Rotation Estimation

Jun 13, 2025

Abstract:Rotation estimation plays a fundamental role in computer vision and robotics tasks, and extremely robust rotation estimation is especially useful for safety-critical applications. Typically, estimating a rotation is considered a non-linear and non-convex optimization problem that requires careful design. However, in this paper, we offer a new perspective: a rotation estimation problem can be reformulated as a linear model fitting problem without dropping any constraints and without introducing any singularities. In addition, we explore the dual structure of a rotation motion, revealing that it can be represented as a great circle on a quaternion sphere surface. Accordingly, we propose an easily understandable voting-based method to solve rotation estimation. The proposed method exhibits exceptional robustness to noise and outliers and can be computed in parallel with graphics processing units (GPUs) effortlessly. In particular, leveraging the power of GPUs, the proposed method can obtain a satisfactory rotation solution for large-scale ($10^6$) and severely corrupted (99% outlier ratio) rotation estimation problems in under 0.5 seconds. Furthermore, to validate our theoretical framework and demonstrate the superiority of our proposed method, we conduct controlled experiments and real-world dataset experiments. These experiments provide compelling evidence supporting the effectiveness and robustness of our approach in solving rotation estimation problems.
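The linear view mentioned in the abstract above can be illustrated with a standard quaternion identity, which may or may not match the paper's exact formulation. If a unit quaternion $q$ rotates a unit vector $x$ onto $y$, then $q \otimes x = y \otimes q$ (with $x$ and $y$ treated as pure quaternions), and this equation is linear in $q$. Its unit-norm solutions form a great circle on the quaternion sphere. The sketch below checks this numerically; all function names are illustrative.

```python
import math


def qmul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def residual(q, x, y):
    """Norm of q*x - y*q; it is linear in q and vanishes when q rotates x onto y."""
    lhs = qmul(q, (0.0, *x))
    rhs = qmul((0.0, *y), q)
    return math.sqrt(sum((l - r) ** 2 for l, r in zip(lhs, rhs)))


def axis_angle(axis, angle):
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    norm = math.sqrt(sum(c * c for c in axis))
    s = math.sin(angle / 2.0) / norm
    return (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


def combine(q1, q2, a, b):
    """Normalized linear combination a*q1 + b*q2, a point on the circle spanned by q1 and q2."""
    q = tuple(a * u + b * v for u, v in zip(q1, q2))
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)


x = (1.0, 0.0, 0.0)
y = (0.0, 1.0, 0.0)
q1 = axis_angle((0.0, 0.0, 1.0), math.pi / 2)  # 90 degrees about z maps x to y
q2 = axis_angle((1.0, 1.0, 0.0), math.pi)      # 180 degrees about the bisector also maps x to y
q3 = combine(q1, q2, 0.3, 0.7)                 # so does any normalized combination
```

Because the constraint is linear, `residual` is near zero for `q1`, `q2`, and `q3` alike, while a rotation that does not map `x` to `y` (for example the identity `(1, 0, 0, 0)`) leaves a clearly non-zero residual.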

Accelerating Outlier-robust Rotation Estimation by Stereographic Projection

Feb 10, 2025

Abstract:Rotation estimation plays a fundamental role in many computer vision and robotics tasks. However, efficiently estimating rotation in large inputs containing numerous outliers (i.e., mismatches) and noise is a recognized challenge. Many robust rotation estimation methods have been designed to address this challenge. Unfortunately, existing methods are often inapplicable due to their long computation time and the risk of local optima. In this paper, we propose an efficient and robust rotation estimation method. Specifically, our method first investigates geometric constraints involving only the rotation axis. Then, it uses stereographic projection and spatial voting techniques to identify the rotation axis and angle. Furthermore, our method efficiently obtains the optimal rotation estimation and can estimate multiple rotations simultaneously. To verify the feasibility of our method, we conduct comparative experiments using both synthetic and real-world data. The results show that, with GPU assistance, our method can solve large-scale ($10^6$ points) and severely corrupted (90% outlier rate) rotation estimation problems within 0.07 seconds, with an angular error of only 0.01 degrees, which is superior to existing methods in terms of accuracy and efficiency.

Efficient and Robust Point Cloud Registration via Heuristics-guided Parameter Search

Apr 09, 2024

Abstract:Estimating the rigid transformation with 6 degrees of freedom based on a putative 3D correspondence set is a crucial procedure in point cloud registration. Existing correspondence identification methods usually lead to large outlier ratios ($>95\%$ is common), underscoring the significance of robust registration methods. Many researchers turn to parameter search-based strategies (e.g., Branch-and-Bound) for robust registration. Although related methods show high robustness, their efficiency is limited by the high-dimensional search space. This paper proposes a heuristics-guided parameter search strategy to accelerate the search while maintaining high robustness. We first sample some correspondences (i.e., heuristics) and then only need to search sequentially the feasible regions that make each sample an inlier. Our strategy largely reduces the search space and can guarantee accuracy with only a few inlier samples, therefore enjoying an excellent trade-off between efficiency and robustness. Since directly parameterizing the 6-dimensional nonlinear feasible region for efficient search is intractable, we construct a three-stage decomposition pipeline to reparameterize the feasible region, resulting in three lower-dimensional sub-problems that are easily solvable via our strategy. Besides reducing the search dimension, our decomposition enables the use of 1-dimensional interval stabbing at all three stages to accelerate the search. Moreover, we propose a valid sampling strategy to guarantee our sampling effectiveness, and a compatibility verification setup to further accelerate our search. Extensive experiments on both simulated and real-world datasets demonstrate that our approach exhibits comparable robustness with state-of-the-art methods while achieving a significant efficiency boost.
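The 1-dimensional interval stabbing mentioned in the abstract above is a classic sweep: given a set of intervals, find a point contained in as many of them as possible. In a consensus-maximization setting one would presumably derive one interval per correspondence (the parameter values for which it is an inlier). How those intervals are derived is paper-specific and not shown here. The sketch below covers only the generic stabbing step, with an illustrative function name.

```python
from typing import List, Optional, Tuple


def interval_stabbing(intervals: List[Tuple[float, float]]) -> Tuple[Optional[float], int]:
    """Return (point, count): a point lying in the maximum number of closed intervals."""
    events = []
    for lo, hi in intervals:
        events.append((lo, 0))  # 0 = opening; sorts before a closing at the same value
        events.append((hi, 1))  # 1 = closing
    events.sort()

    best_point, best_count, count = None, 0, 0
    for value, kind in events:
        if kind == 0:
            count += 1
            if count > best_count:
                best_point, best_count = value, count
        else:
            count -= 1
    return best_point, best_count


# Three of the four intervals overlap at 1.5.
print(interval_stabbing([(0.0, 2.0), (1.0, 3.0), (1.5, 4.0), (10.0, 11.0)]))  # (1.5, 3)
```

The sweep costs $O(n \log n)$ for the sort plus a linear pass, which is what makes a 1-dimensional sub-problem so much cheaper than a search over the full parameter space.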

Transformation Decoupling Strategy based on Screw Theory for Deterministic Point Cloud Registration with Gravity Prior

Nov 02, 2023

Abstract:Point cloud registration is challenging in the presence of heavy outlier correspondences. This paper focuses on addressing the robust correspondence-based registration problem with gravity prior that often arises in practice. The gravity directions are typically obtained by inertial measurement units (IMUs) and can reduce the degrees of freedom (DOF) of rotation from 3 to 1. We propose a novel transformation decoupling strategy by leveraging screw theory. This strategy decomposes the original 4-DOF problem into three sub-problems with 1-DOF, 2-DOF, and 1-DOF, respectively, thereby enhancing the computation efficiency. Specifically, the first 1-DOF represents the translation along the rotation axis and we propose an interval stabbing-based method to solve it. The second 2-DOF represents the pole, which is an auxiliary variable in screw theory, and we utilize a branch-and-bound method to solve it. The last 1-DOF represents the rotation angle and we propose a global voting method for its estimation. The proposed method sequentially solves three consensus maximization sub-problems, leading to efficient and deterministic registration. In particular, it can even handle the correspondence-free registration problem due to its significant robustness. Extensive experiments on both synthetic and real-world datasets demonstrate that our method is more efficient and robust than state-of-the-art methods, even when dealing with outlier rates exceeding 99%.
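To make the idea of voting for a 1-DOF rotation angle concrete, here is a simplified histogram-voting sketch. It is not the paper's global voting method. It assumes the rotation axis is the z-axis (as a gravity prior would give after alignment), that translation has already been removed, and that each correspondence is given by its 2D projection onto the horizontal plane. The function name and bin width are illustrative.

```python
import math
from collections import Counter
from typing import List, Tuple

Point2D = Tuple[float, float]


def vote_rotation_angle(src: List[Point2D], dst: List[Point2D], bin_deg: float = 1.0):
    """Return (angle_deg, votes): center of the most-voted angle bin and its vote count."""
    n_bins = int(round(360.0 / bin_deg))
    votes = Counter()
    for (sx, sy), (dx, dy) in zip(src, dst):
        # Signed angle from the source vector to the destination vector.
        angle = math.atan2(sx * dy - sy * dx, sx * dx + sy * dy)
        deg = math.degrees(angle) % 360.0
        votes[int(deg // bin_deg) % n_bins] += 1
    if not votes:
        raise ValueError("at least one correspondence is required")
    best_bin, count = votes.most_common(1)[0]
    return (best_bin + 0.5) * bin_deg, count
```

Each correspondence casts one vote, so outliers spread across many bins while inliers pile up in the bin containing the true angle. The resolution is limited by `bin_deg`.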

Efficient and Deterministic Search Strategy Based on Residual Projections for Point Cloud Registration

May 19, 2023

Abstract:Estimating the rigid transformation between two LiDAR scans through putative 3D correspondences is a typical point cloud registration paradigm. Current 3D feature matching approaches commonly lead to numerous outlier correspondences, making outlier-robust registration techniques indispensable. Many recent studies have adopted the branch and bound (BnB) optimization framework to solve the correspondence-based point cloud registration problem globally and deterministically. Nonetheless, searching the entire 6-dimensional parameter space with BnB-based methods is time-consuming, since their computational complexity is exponential in the dimension of the solution domain. In order to enhance algorithm efficiency, existing works attempt to decouple the original 6-degrees-of-freedom (DOF) problem into two 3-DOF sub-problems, thereby reducing the dimension of the parameter space. In contrast, our proposed approach introduces a novel pose decoupling strategy based on residual projections, effectively decomposing the raw problem into three 2-DOF rotation search sub-problems. Subsequently, we employ a novel BnB-based search method to solve these sub-problems, achieving efficient and deterministic registration. Furthermore, our method can be adapted to address the challenging problem of simultaneous pose and correspondence registration (SPCR). Through extensive experiments conducted on synthetic and real-world datasets, we demonstrate that our proposed method outperforms state-of-the-art methods in terms of efficiency, while simultaneously ensuring robustness.

HRegNet: A Hierarchical Network for Large-scale Outdoor LiDAR Point Cloud Registration

Jul 26, 2021

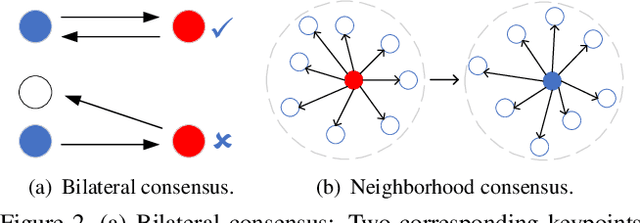

Abstract:Point cloud registration is a fundamental problem in 3D computer vision. Outdoor LiDAR point clouds are typically large-scale and complexly distributed, which makes the registration challenging. In this paper, we propose an efficient hierarchical network named HRegNet for large-scale outdoor LiDAR point cloud registration. Instead of using all points in the point clouds, HRegNet performs registration on hierarchically extracted keypoints and descriptors. The overall framework combines the reliable features in deeper layers and the precise position information in shallower layers to achieve robust and precise registration. We present a correspondence network to generate correct and accurate keypoint correspondences. Moreover, bilateral consensus and neighborhood consensus are introduced for keypoint matching, and novel similarity features are designed to incorporate them into the correspondence network, which significantly improves the registration performance. Besides, the whole network is also highly efficient since only a small number of keypoints are used for registration. Extensive experiments are conducted on two large-scale outdoor LiDAR point cloud datasets to demonstrate the high accuracy and efficiency of the proposed HRegNet. The project website is https://ispc-group.github.io/hregnet.

PointINet: Point Cloud Frame Interpolation Network

Dec 18, 2020

Abstract:LiDAR point cloud streams are usually sparse in the time dimension, which is limited by hardware performance. Generally, the frame rates of mechanical LiDAR sensors are 10 to 20 Hz, which is much lower than those of other commonly used sensors like cameras. To overcome the temporal limitations of LiDAR sensors, a novel task named Point Cloud Frame Interpolation is studied in this paper. Given two consecutive point cloud frames, Point Cloud Frame Interpolation aims to generate intermediate frame(s) between them. To achieve that, we propose a novel framework, namely Point Cloud Frame Interpolation Network (PointINet). Based on the proposed method, low-frame-rate point cloud streams can be upsampled to higher frame rates. We start by estimating bi-directional 3D scene flow between the two point clouds and then warp them to the given time step based on the 3D scene flow. To fuse the two warped frames and generate intermediate point cloud(s), we propose a novel learning-based points fusion module, which simultaneously takes two warped point clouds into consideration. We design both quantitative and qualitative experiments to evaluate the performance of the point cloud frame interpolation method, and extensive experiments on two large-scale outdoor LiDAR datasets demonstrate the effectiveness of the proposed PointINet. Our code is available at https://github.com/ispc-lab/PointINet.git.
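The warping step described in the abstract above can be sketched as follows, under two assumptions that are not taken from the paper: motion is linear between the two frames, so a flow vector is simply scaled by the time fraction, and the learned fusion module is replaced by plain concatenation of the two warped clouds. The function names and the list-of-tuples point format are illustrative.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def warp(points: List[Point], flow: List[Point], scale: float) -> List[Point]:
    """Move each point along its own flow vector, scaled by `scale`."""
    return [
        tuple(p + scale * f for p, f in zip(point, vec))
        for point, vec in zip(points, flow)
    ]


def interpolate_frame(
    p0: List[Point], flow_fwd: List[Point],
    p1: List[Point], flow_bwd: List[Point],
    t: float,
) -> List[Point]:
    """Warp frame 0 forward by t and frame 1 backward by (1 - t), then concatenate."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    warped0 = warp(p0, flow_fwd, t)        # frame 0 moved toward time t
    warped1 = warp(p1, flow_bwd, 1.0 - t)  # frame 1 moved back toward time t
    return warped0 + warped1               # naive stand-in for a learned fusion module
```

With perfect flow and linear motion the two warped clouds coincide at time `t`. In practice they disagree, which is why a fusion step that weighs both is needed.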

MoNet: Motion-based Point Cloud Prediction Network

Nov 21, 2020

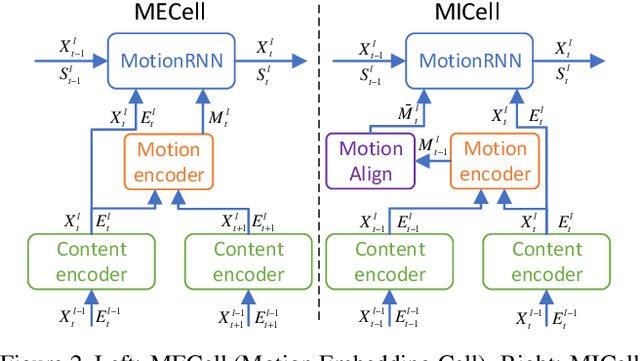

Abstract:Predicting the future can significantly improve the safety of intelligent vehicles, which is a key component in autonomous driving. 3D point clouds accurately model the 3D information of the surrounding environment and are crucial for intelligent vehicles to perceive the scene. Therefore, prediction of 3D point clouds has great significance for intelligent vehicles and can be utilized for numerous further applications. However, because point clouds are unordered and unstructured, point cloud prediction is challenging and has not been deeply explored in the current literature. In this paper, we propose a novel motion-based neural network named MoNet. The key idea of the proposed MoNet is to integrate motion features between two consecutive point clouds into the prediction pipeline. The introduction of motion features enables the model to more accurately capture the variations of motion information across frames and thus make better predictions for future motion. In addition, content features are introduced to model the spatial content of individual point clouds. A recurrent neural network named MotionRNN is proposed to capture the temporal correlations of both features. Besides, we propose an attention-based motion align module to address the problem of missing motion features in the inference pipeline. Extensive experiments on two large-scale outdoor LiDAR datasets demonstrate the performance of the proposed MoNet. Moreover, we perform experiments on applications using the predicted point clouds, and the results indicate the great application potential of the proposed method.
