Ying Cui

COMETS: Coordinated Multi-Destination Video Transmission with In-Network Rate Adaptation

Jan 26, 2026

Abstract: Large-scale video streaming events attract millions of simultaneous viewers, stressing existing delivery infrastructures. Client-driven adaptation reacts slowly to shared congestion, while server-based coordination introduces scalability bottlenecks and single points of failure. We present COMETS, a coordinated multi-destination video transmission framework that leverages information-centric networking principles such as request aggregation and in-network state awareness to enable scalable, fair, and adaptive rate control. COMETS introduces a novel range-interest protocol and distributed in-network decision process that aligns video quality across receiver groups while minimizing redundant transmissions. To achieve this, we develop a lightweight distributed optimization framework that guides per-hop quality adaptation without centralized control. Extensive emulation shows that COMETS consistently improves bandwidth utilization, fairness, and user-perceived quality of experience over DASH, MoQ, and ICN baselines, particularly under high concurrency. The results highlight COMETS as a practical, deployable approach for next-generation scalable video delivery.

* Accepted to appear in IEEE Transactions on Multimedia (2026)

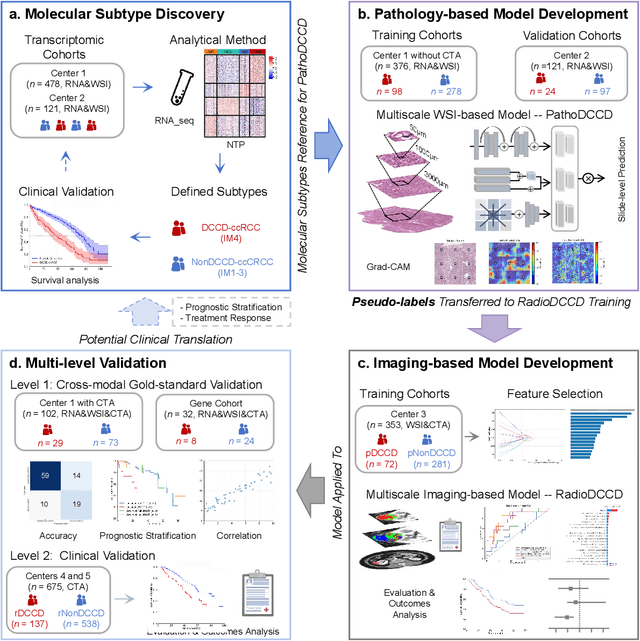

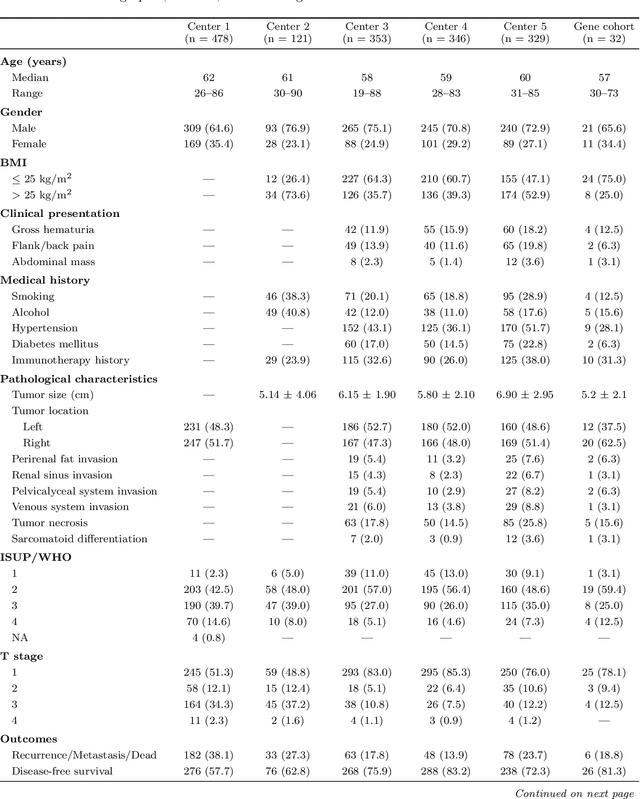

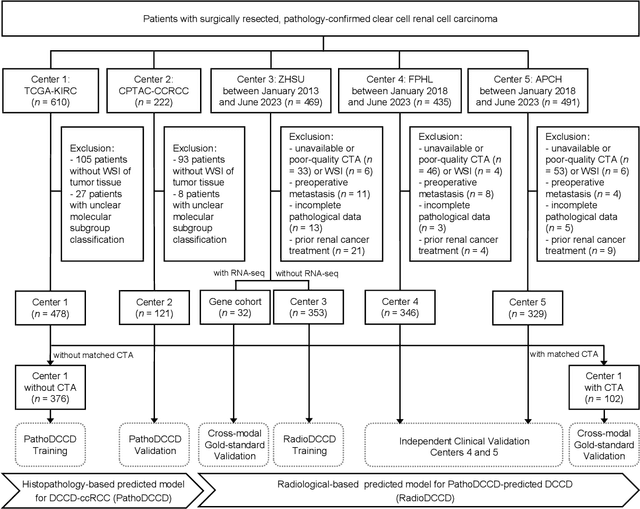

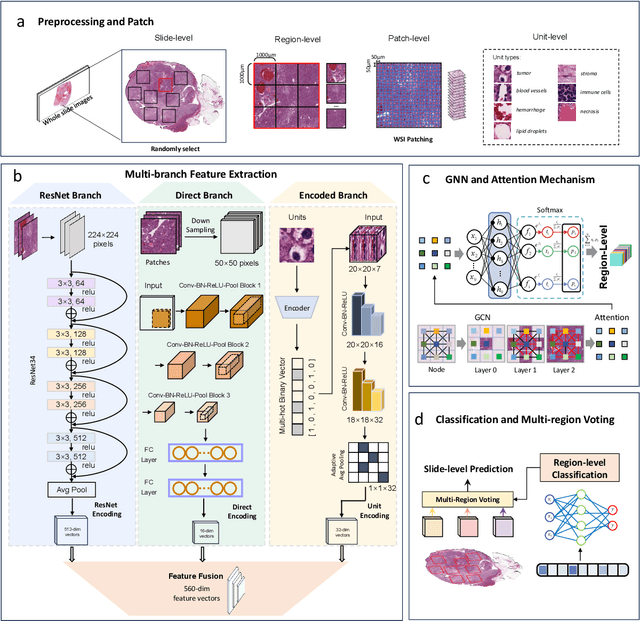

Multiscale Cross-Modal Mapping of Molecular, Pathologic, and Radiologic Phenotypes in Lipid-Deficient Clear Cell Renal Cell Carcinoma

Dec 13, 2025

Abstract: Clear cell renal cell carcinoma (ccRCC) exhibits extensive intratumoral heterogeneity across multiple biological scales, contributing to variable clinical outcomes and limiting the effectiveness of conventional TNM staging. This highlights the urgent need for multiscale integrative analytic frameworks. The lipid-deficient de-clear cell differentiated (DCCD) ccRCC subtype, defined by multi-omics analyses, is associated with adverse outcomes even in early-stage disease. Here, we establish a hierarchical cross-scale framework for the preoperative identification of DCCD-ccRCC. At the highest layer, cross-modal mapping transferred molecular signatures to histological and CT phenotypes, establishing a molecular-to-pathology-to-radiology supervisory bridge. Within this framework, each modality-specific model is designed to mirror the inherent hierarchical structure of tumor biology. PathoDCCD captured multi-scale microscopic features, from cellular morphology and tissue architecture to meso-regional organization. RadioDCCD integrated complementary macroscopic information by combining whole-tumor and habitat-subregion radiomics with a 2D maximal-section heterogeneity metric. These nested models enabled integrated molecular subtype prediction and clinical risk stratification. Across five cohorts totaling 1,659 patients, PathoDCCD reliably recapitulated molecular subtypes, while RadioDCCD provided reliable preoperative prediction. The consistent predictions identified patients with the poorest clinical outcomes. This cross-scale paradigm unifies molecular biology, computational pathology, and quantitative radiology into a biologically grounded strategy for preoperative noninvasive molecular phenotyping of ccRCC.

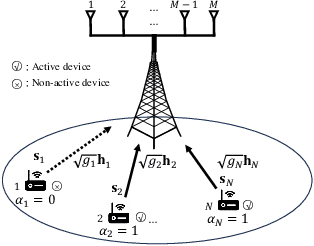

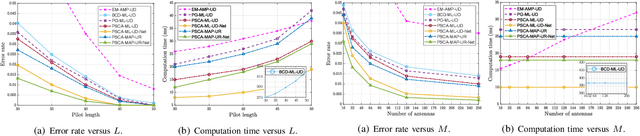

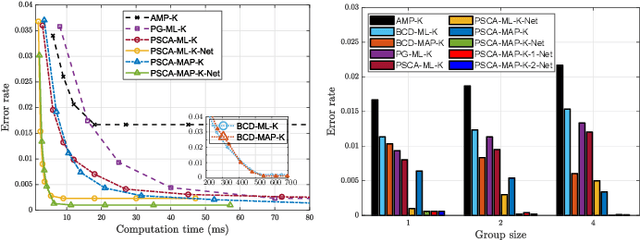

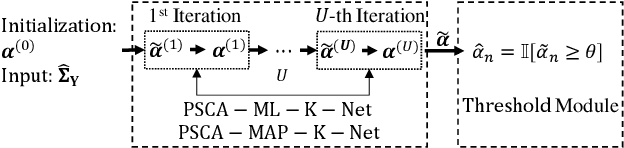

Fast MLE and MAPE-Based Device Activity Detection for Grant-Free Access via PSCA and PSCA-Net

Mar 19, 2025

Abstract: Fast and accurate device activity detection is a critical challenge in grant-free access for supporting massive machine-type communications (mMTC) and ultra-reliable low-latency communications (URLLC) in 5G and beyond. The state-of-the-art methods have unsatisfactory error rates or computation times. To address these outstanding issues, we propose new maximum likelihood estimation (MLE) and maximum a posteriori estimation (MAPE) based device activity detection methods for known and unknown pathloss that achieve superior error rate and computation time tradeoffs using optimization and deep learning techniques. Specifically, we investigate four non-convex optimization problems for MLE and MAPE in the two pathloss cases, with one MAPE problem being formulated for the first time. For each non-convex problem, we develop an innovative parallel iterative algorithm using the parallel successive convex approximation (PSCA) method. Each PSCA-based algorithm allows parallel computations, uses up to the objective function's second-order information, converges to the problem's stationary points, and has a low per-iteration computational complexity compared to the state-of-the-art algorithms. Then, for each PSCA-based iterative algorithm, we present a deep unrolling neural network implementation, called PSCA-Net, to further reduce the computation time. Each PSCA-Net combines the underlying PSCA-based algorithm's parallel computation mechanism with the parallelizable neural network architecture and optimizes its step sizes based on vast data samples to speed up the convergence. Numerical results demonstrate that the proposed methods can significantly reduce the error rate and computation time compared to the state-of-the-art methods, demonstrating their significant value for grant-free access.

Distill Any Depth: Distillation Creates a Stronger Monocular Depth Estimator

Feb 26, 2025

Abstract: Monocular depth estimation (MDE) aims to predict scene depth from a single RGB image and plays a crucial role in 3D scene understanding. Recent advances in zero-shot MDE leverage normalized depth representations and distillation-based learning to improve generalization across diverse scenes. However, current depth normalization methods for distillation, relying on global normalization, can amplify noisy pseudo-labels, reducing distillation effectiveness. In this paper, we systematically analyze the impact of different depth normalization strategies on pseudo-label distillation. Based on our findings, we propose Cross-Context Distillation, which integrates global and local depth cues to enhance pseudo-label quality. Additionally, we introduce a multi-teacher distillation framework that leverages complementary strengths of different depth estimation models, leading to more robust and accurate depth predictions. Extensive experiments on benchmark datasets demonstrate that our approach significantly outperforms state-of-the-art methods, both quantitatively and qualitatively.

DiffCalib: Reformulating Monocular Camera Calibration as Diffusion-Based Dense Incident Map Generation

May 24, 2024

Abstract: Monocular camera calibration is a key precondition for numerous 3D vision applications. Despite considerable advancements, existing methods often hinge on specific assumptions and struggle to generalize across varied real-world scenarios, and their performance is limited by insufficient training data. Recently, diffusion models trained on expansive datasets have been shown to generate diverse, high-quality images. This success suggests a strong potential of the models to effectively understand varied visual information. In this work, we leverage the comprehensive visual knowledge embedded in pre-trained diffusion models to enable more robust and accurate monocular camera intrinsic estimation. Specifically, we reformulate the problem of estimating the four degrees of freedom (4-DoF) of camera intrinsic parameters as a dense incident map generation task. The map details the angle of incidence for each pixel in the RGB image, and its format aligns well with the paradigm of diffusion models. The camera intrinsics can then be derived from the incident map with a simple non-learning RANSAC algorithm during inference. Moreover, to further enhance the performance, we jointly estimate a depth map to provide extra geometric information for the incident map estimation. Extensive experiments on multiple testing datasets demonstrate that our model achieves state-of-the-art performance, gaining up to a 40% reduction in prediction errors. In addition, the experiments show that the precise camera intrinsics and depth maps estimated by our pipeline can greatly benefit practical applications such as 3D reconstruction from a single in-the-wild image.

Fast Computation of Superquantile-Constrained Optimization Through Implicit Scenario Reduction

May 13, 2024

Abstract: Superquantiles have recently gained significant interest as a risk-aware metric for addressing fairness and distribution shifts in statistical learning and decision making problems. This paper introduces a fast, scalable and robust second-order computational framework to solve large-scale optimization problems with superquantile-based constraints. Unlike empirical risk minimization, superquantile-based optimization requires ranking random functions evaluated across all scenarios to compute the tail conditional expectation. While this tail-based feature might seem computationally unfriendly, it provides an advantageous setting for a semismooth-Newton-based augmented Lagrangian method. The superquantile operator effectively reduces the dimensions of the Newton systems since the tail expectation involves considerably fewer scenarios. Notably, the extra cost of obtaining relevant second-order information and performing matrix inversions is often comparable to, and sometimes even less than, the effort required for gradient computation. Our developed solver is particularly effective when the number of scenarios substantially exceeds the number of decision variables. In synthetic problems with linear and convex diagonal quadratic objectives, numerical experiments demonstrate that our method outperforms existing approaches by a large margin: for computing low-accuracy solutions, it is more than 750 times faster, for both linear and quadratic objectives, than the alternating direction method of multipliers as implemented by OSQP. Additionally, for high-accuracy solution computations, it is up to 25 times faster for linear objectives and 70 times faster for quadratic objectives than the commercial solver Gurobi, and 20 times faster for linear objectives and 30 times faster for quadratic objectives than the Portfolio Safeguard optimization suite.
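
As a rough illustration of the tail conditional expectation mentioned in this abstract, the sketch below computes an empirical superquantile (also known as conditional value-at-risk) over equally weighted scenarios. The function name `superquantile` and the sample losses are hypothetical, and this is only the standard textbook definition, not the paper's solver.

```python
import math


def superquantile(losses, alpha):
    """Empirical superquantile (CVaR) at level alpha for equally weighted scenarios.

    It averages the worst (1 - alpha) fraction of the losses. A fractional
    scenario at the tail boundary is weighted by the leftover tail mass.
    """
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must lie in [0, 1)")
    ordered = sorted(losses, reverse=True)  # ranking step: largest losses first
    n = len(ordered)
    if n == 0:
        raise ValueError("at least one scenario is required")
    k = (1.0 - alpha) * n                   # tail mass, in units of scenarios
    whole = min(int(math.floor(k)), n)      # scenarios fully inside the tail
    frac = k - whole                        # partial weight of the boundary scenario
    tail_sum = sum(ordered[:whole])
    if frac > 0.0 and whole < n:
        tail_sum += frac * ordered[whole]
    return tail_sum / k


# Hypothetical scenario losses 1..10: the worst 20% are 9 and 10.
print(superquantile(range(1, 11), 0.8))  # approximately 9.5
```

Only the scenarios in the tail contribute to the result, which is the property the abstract credits with shrinking the Newton systems.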

Evaluation of ChatGPT-Generated Medical Responses: A Systematic Review and Meta-Analysis

Oct 12, 2023

Abstract: Large language models such as ChatGPT are increasingly explored in medical domains. However, the absence of standard guidelines for performance evaluation has led to methodological inconsistencies. This study aims to summarize the available evidence on evaluating ChatGPT's performance in medicine and provide direction for future research. We searched ten medical literature databases on June 15, 2023, using the keyword "ChatGPT". A total of 3520 articles were identified, of which 60 were reviewed and summarized in this paper and 17 were included in the meta-analysis. The analysis showed that ChatGPT displayed an overall integrated accuracy of 56% (95% CI: 51%-60%, I² = 87%) in addressing medical queries. However, the studies varied in question source, question-asking process, and evaluation metrics. Moreover, many studies failed to report methodological details, including the version of ChatGPT and whether each question was used independently or repeatedly. Our findings revealed that although ChatGPT demonstrated considerable potential for application in healthcare, the heterogeneity of the studies and insufficient reporting may affect the reliability of these results. Further well-designed studies with comprehensive and transparent reporting are needed to evaluate ChatGPT's performance in medicine.
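
For readers unfamiliar with the I² heterogeneity statistic reported in this abstract, the sketch below shows how it is conventionally derived from Cochran's Q. It assumes simple fixed-effect inverse-variance pooling, which may differ from the pooling model used in the review. The function name `pooled_and_i_squared` and the input numbers are hypothetical, not data from the study.

```python
def pooled_and_i_squared(effects, variances):
    """Return (pooled estimate, I^2 as a fraction) for per-study effects.

    Assumes inverse-variance weights; I^2 = max(0, (Q - df) / Q), where Q is
    Cochran's heterogeneity statistic and df is the number of studies minus 1.
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    if q <= 0.0:
        return pooled, 0.0  # identical effects: no observed heterogeneity
    return pooled, max(0.0, (q - df) / q)


# Hypothetical accuracies from two studies with equal sampling variance.
pooled, i2 = pooled_and_i_squared([0.4, 0.6], [0.01, 0.01])
print(f"pooled = {pooled:.2f}, I^2 = {100 * i2:.0f}%")
```

A high I² means that most of the observed variation between studies reflects real differences rather than sampling error, which is why the abstract cautions about the reliability of the pooled accuracy.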

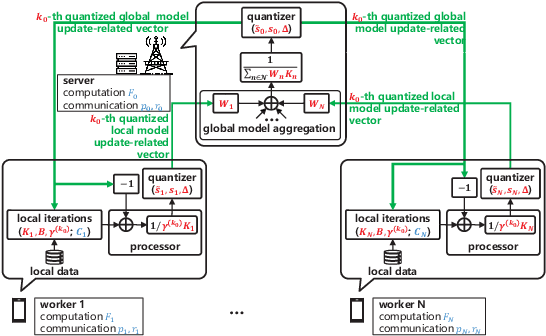

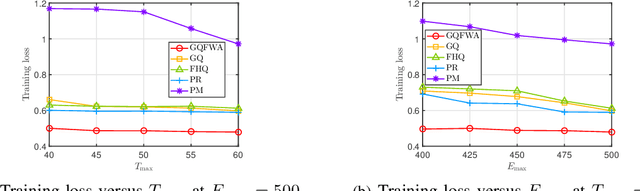

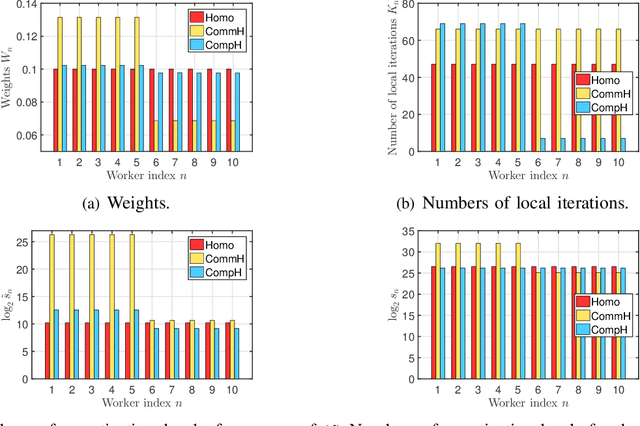

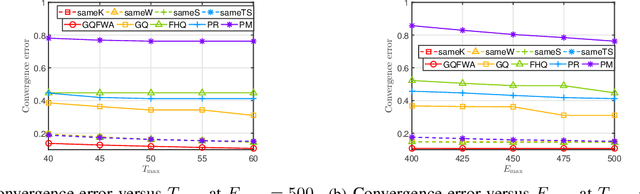

GQFedWAvg: Optimization-Based Quantized Federated Learning in General Edge Computing Systems

Jun 13, 2023

Abstract: The optimal implementation of federated learning (FL) in practical edge computing systems has been an outstanding problem. In this paper, we propose an optimization-based quantized FL algorithm, which can appropriately fit a general edge computing system with uniform or nonuniform computing and communication resources at the workers. Specifically, we first present a new random quantization scheme and analyze its properties. Then, we propose a general quantized FL algorithm, namely GQFedWAvg. GQFedWAvg applies the proposed quantization scheme to quantize wisely chosen model update-related vectors and adopts a generalized mini-batch stochastic gradient descent (SGD) method with the weighted average local model updates in global model aggregation. Besides, GQFedWAvg has several adjustable algorithm parameters to flexibly adapt to the computing and communication resources at the server and workers. We also analyze the convergence of GQFedWAvg. Next, we optimize the algorithm parameters of GQFedWAvg to minimize the convergence error under the time and energy constraints. We successfully tackle the challenging non-convex problem using general inner approximation (GIA) and multiple delicate tricks. Finally, we interpret GQFedWAvg's operating principle and show its considerable gains over existing FL algorithms using numerical results.

MLE-based Device Activity Detection under Rician Fading for Massive Grant-free Access with Perfect and Imperfect Synchronization

Jun 11, 2023

Abstract: Most existing studies on massive grant-free access, proposed to support massive machine-type communications (mMTC) for the Internet of things (IoT), assume Rayleigh fading and perfect synchronization for simplicity. However, in practice, line-of-sight (LoS) components generally exist, and time and frequency synchronization are usually imperfect. This paper systematically investigates maximum likelihood estimation (MLE)-based device activity detection under Rician fading for massive grant-free access with perfect and imperfect synchronization. Specifically, we formulate device activity detection in the synchronous case and joint device activity and offset detection in three asynchronous cases (i.e., time, frequency, and time and frequency asynchronous cases) as MLE problems. In the synchronous case, we propose an iterative algorithm to obtain a stationary point of the MLE problem. In each asynchronous case, we propose two iterative algorithms with identical detection performance but different computational complexities. In particular, one is computationally efficient for small ranges of offsets, whereas the other one, relying on fast Fourier transform (FFT) and inverse FFT, is computationally efficient for large ranges of offsets. The proposed algorithms generalize the existing MLE-based methods for Rayleigh fading and perfect synchronization. Numerical results show the notable gains of the proposed algorithms over existing methods in detection accuracy and computation time.

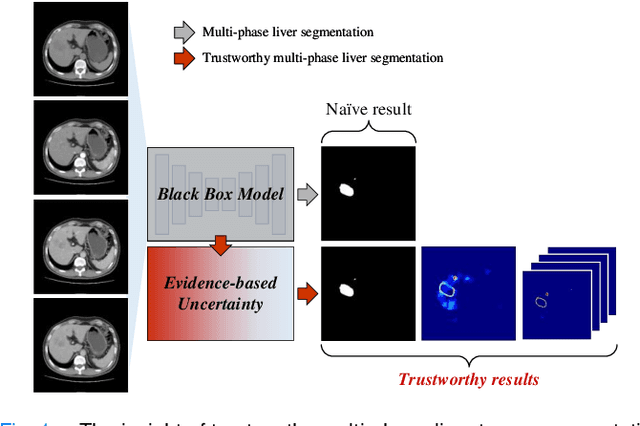

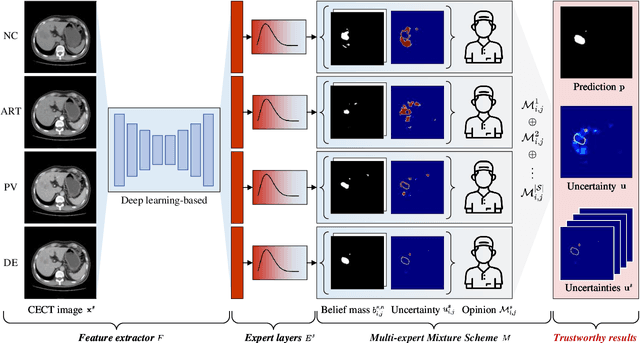

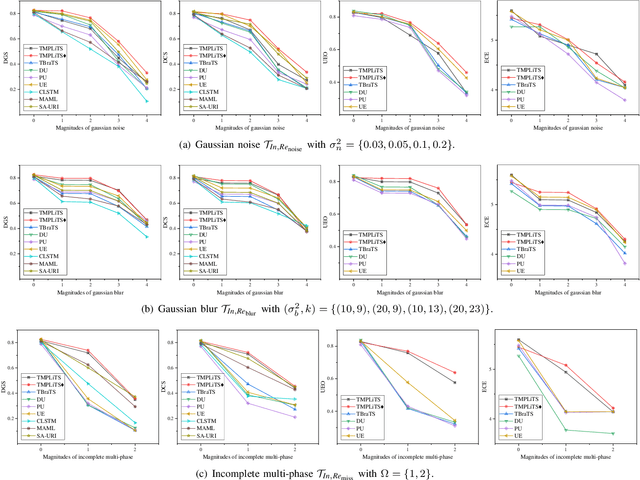

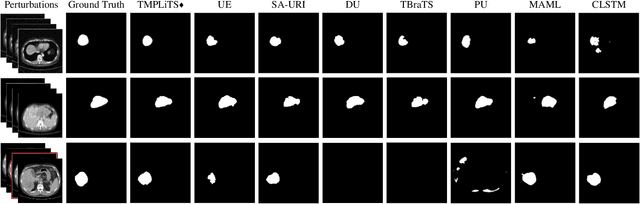

Trustworthy Multi-phase Liver Tumor Segmentation via Evidence-based Uncertainty

May 09, 2023

Abstract: Multi-phase liver contrast-enhanced computed tomography (CECT) images convey complementary multi-phase information for liver tumor segmentation (LiTS), which is crucial to assist the clinical diagnosis of liver cancer. However, the performance of existing multi-phase liver tumor segmentation (MPLiTS)-based methods suffers from redundancy and weak interpretability, resulting in implicit unreliability in clinical applications. In this paper, we propose a novel trustworthy multi-phase liver tumor segmentation (TMPLiTS), which is a unified framework jointly conducting segmentation and uncertainty estimation. The trustworthy results could assist clinicians in making a reliable diagnosis. Specifically, Dempster-Shafer Evidence Theory (DST) is introduced to parameterize the segmentation and uncertainty as evidence following a Dirichlet distribution. The reliability of segmentation results among multi-phase CECT images is quantified explicitly. Meanwhile, a multi-expert mixture scheme (MEMS) is proposed to fuse the multi-phase evidence, which can guarantee the effect of the fusion procedure based on theoretical analysis. Experimental results demonstrate the superiority of TMPLiTS compared with the state-of-the-art methods. Meanwhile, the robustness of TMPLiTS is verified, where reliable performance can be guaranteed against perturbations.
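
To make the idea of parameterizing predictions as Dirichlet evidence more concrete, the sketch below uses the mapping commonly found in evidential deep learning: non-negative evidence per class gives Dirichlet parameters, per-class belief masses, and one uncertainty mass. This is an assumption about the general technique, not the TMPLiTS implementation. The function name `dirichlet_opinion` and the evidence values are hypothetical.

```python
def dirichlet_opinion(evidence):
    """Map non-negative per-class evidence to belief masses and an uncertainty mass.

    Assumed convention (subjective logic): alpha_k = e_k + 1, S = sum(alpha),
    belief_k = e_k / S, uncertainty = K / S, so beliefs and uncertainty sum to 1.
    """
    if not evidence or any(e < 0 for e in evidence):
        raise ValueError("evidence must be a non-empty list of non-negative values")
    num_classes = len(evidence)
    alpha = [e + 1.0 for e in evidence]      # Dirichlet concentration parameters
    strength = sum(alpha)                    # Dirichlet strength S
    beliefs = [e / strength for e in evidence]
    uncertainty = num_classes / strength     # large when total evidence is small
    return beliefs, uncertainty


# Hypothetical evidence for one pixel over three classes.
beliefs, uncertainty = dirichlet_opinion([8.0, 1.0, 1.0])
print(beliefs, uncertainty)
```

With no evidence at all, the uncertainty mass is 1, which is how such a model can flag a prediction as unreliable instead of reporting a confident class.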
