Wenbo Xiao

Multimodal Feature-Driven Deep Learning for the Prediction of Duck Body Dimensions and Weight

Mar 19, 2025

Abstract:Accurate body dimension and weight measurements are critical for optimizing poultry management, health assessment, and economic efficiency. This study introduces a deep learning-based model that leverages multimodal data (2D RGB images from different views, depth images, and 3D point clouds) for the non-invasive estimation of duck body dimensions and weight. A dataset of 1,023 Linwu ducks, comprising over 5,000 samples with diverse postures and conditions, was collected to support model training. The proposed method employs PointNet++ to extract key feature points from point clouds, computes the corresponding 3D geometric features, and fuses them with multi-view convolutional 2D features. A Transformer encoder is then used to capture long-range dependencies and refine feature interactions, thereby enhancing prediction robustness. The model achieved a mean absolute percentage error (MAPE) of 6.33% and an R² of 0.953 across eight morphometric parameters, demonstrating strong predictive capability. Unlike conventional manual measurements, the proposed model enables high-precision estimation without physical handling, thereby reducing animal stress and broadening its application scope. This study marks the first application of deep learning techniques to poultry body dimension and weight estimation, providing a valuable reference for the intelligent and precise management of the livestock industry.
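The two metrics quoted in this abstract, MAPE and R², have standard definitions that can be sketched in a few lines. The snippet below is a minimal illustration only: the function names `mape` and `r_squared` and the sample numbers are hypothetical, and the abstract does not say how the authors aggregated the metrics across the eight parameters.

```python
from typing import Sequence


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, returned in percent."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    total = sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred))
    return 100.0 * total / len(y_true)


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all true values are equal")
    return 1.0 - ss_res / ss_tot


# Hypothetical weights in grams, not data from the paper.
weights_true = [100.0, 200.0, 400.0]
weights_pred = [110.0, 190.0, 400.0]
print(mape(weights_true, weights_pred))
print(r_squared(weights_true, weights_pred))
```

A lower MAPE and an R² closer to 1 both indicate better agreement between predicted and measured values.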

A Neural Network Architecture Based on Attention Gate Mechanism for 3D Magnetotelluric Forward Modeling

Mar 14, 2025

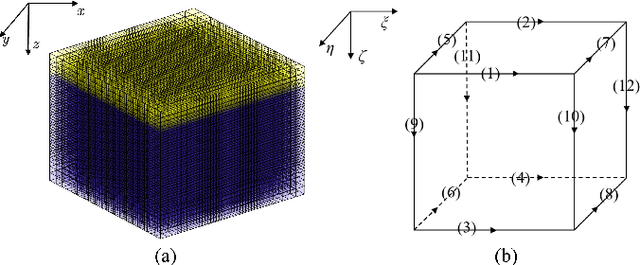

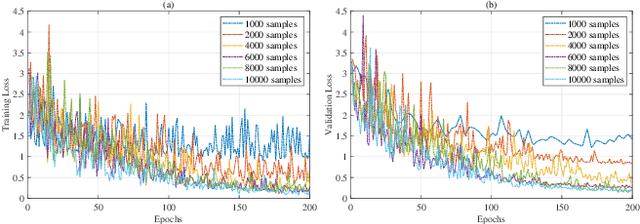

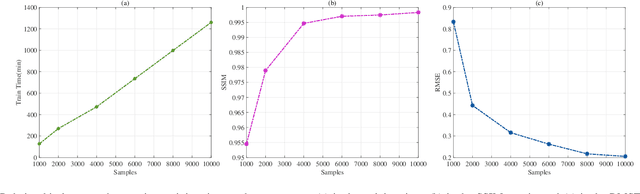

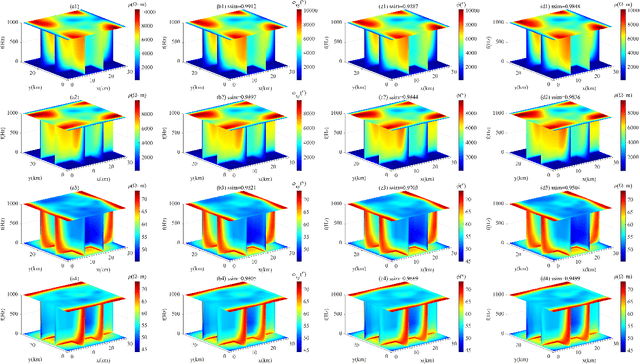

Abstract:Traditional three-dimensional magnetotelluric (MT) numerical forward modeling methods, such as the finite element method (FEM) and finite volume method (FVM), suffer from high computational costs and low efficiency due to limitations in mesh refinement and computational resources. We propose a novel neural network architecture named MTAGU-Net, which integrates an attention gating mechanism for 3D MT forward modeling. Specifically, a dual-path attention gating module is designed based on forward response data images and embedded in the skip connections between the encoder and decoder. This module enables the fusion of critical anomaly information from shallow feature maps during the decoding of deep feature maps, significantly enhancing the network's capability to extract features from anomalous regions. Furthermore, we introduce a synthetic model generation method based on 3D Gaussian random fields (GRF), which replicates the electrical structures of real-world geological scenarios with high fidelity. Numerical experiments demonstrate that MTAGU-Net outperforms the conventional 3D U-Net in terms of convergence stability and prediction accuracy, with the structural similarity index (SSIM) of the forward response data consistently exceeding 0.98. Moreover, the network can accurately predict forward response data for previously unseen models, demonstrating its strong generalization ability and validating the feasibility and effectiveness of this method in practical applications.
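For readers unfamiliar with SSIM, the index combines the means, variances, and covariance of two images. The sketch below computes a single-window ("global") SSIM over flattened pixel values using the standard constants C1 = (0.01 L)² and C2 = (0.03 L)². This is a simplification for illustration: SSIM is usually averaged over local sliding windows, and the abstract does not state which variant or settings the authors used. The function name `global_ssim` and the sample values are hypothetical.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], data_range: float = 1.0) -> float:
    """Single-window SSIM over two equally sized, flattened images."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) * (a - mu_x) for a in x) / n
    var_y = sum((b - mu_y) * (b - mu_y) for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Stabilizing constants from the standard SSIM definition.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x * mu_x + mu_y * mu_y + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical normalized response values, not data from the paper.
reference = [0.1, 0.5, 0.9, 0.3]
prediction = [0.12, 0.48, 0.88, 0.33]
print(global_ssim(reference, prediction))
```

Identical inputs give an SSIM of 1, so a reported value above 0.98 indicates predictions that are structurally very close to the reference responses.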

Safety Metric Aware Trajectory Repairing for Automated Driving

Aug 20, 2024

Abstract:Recent analyses highlight challenges in autonomous vehicle technologies, particularly failures in decision-making under dynamic or emergency conditions. Traditional automated driving systems recalculate the entire trajectory in a changing environment. Instead, a novel approach retains valid trajectory segments, minimizing the need for complete replanning and reducing changes to the original plan. This work introduces a trajectory repairing framework that calculates a feasible evasive trajectory while computing the Feasible Time-to-React (F-TTR), balancing the maintenance of the original plan with safety assurance. The framework employs a binary search algorithm to iteratively create repaired trajectories, guaranteeing both the safety and feasibility of the trajectory repairing result. In contrast to earlier approaches that separated the calculation of safety metrics from trajectory repairing, which resulted in unsuccessful plans for evasive maneuvers, our work has the anytime capability to provide both a Feasible Time-to-React and an evasive trajectory for further execution.
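The binary search idea can be sketched as follows. This is not the authors' implementation: it assumes the original trajectory is discretized into time steps, that a hypothetical callback `try_repair(k)` returns an evasive trajectory when branching off at step `k` is feasible (and `None` otherwise), and that feasibility is monotone, meaning that if branching at step `k` works, branching at any earlier step also works. Under those assumptions, the latest feasible branch step plays the role of the Feasible Time-to-React.

```python
from typing import Callable, Optional, Sequence, Tuple

Trajectory = Sequence[float]  # placeholder type for a repaired trajectory


def latest_feasible_branch(
    num_steps: int,
    try_repair: Callable[[int], Optional[Trajectory]],
) -> Tuple[Optional[int], Optional[Trajectory]]:
    """Binary-search the latest step at which a repair is still feasible.

    Returns (step, trajectory), or (None, None) if no step is feasible.
    The best result found so far is always kept, so the loop could be
    interrupted early and still hand back a valid repair.
    """
    lo, hi = 0, num_steps - 1
    best_step: Optional[int] = None
    best_traj: Optional[Trajectory] = None
    while lo <= hi:
        mid = (lo + hi) // 2
        candidate = try_repair(mid)
        if candidate is not None:
            # Feasible: remember it and try to branch off even later.
            best_step, best_traj = mid, candidate
            lo = mid + 1
        else:
            # Infeasible: the branch point must be earlier.
            hi = mid - 1
    return best_step, best_traj


# Toy feasibility model (hypothetical): repairs succeed up to step 6.
def toy_repair(step: int) -> Optional[Trajectory]:
    return [float(step)] if step <= 6 else None


step, trajectory = latest_feasible_branch(10, toy_repair)
print(step, trajectory)
```

Because each iteration halves the search interval, only about log2(num_steps) repair attempts are needed, which matters when each attempt involves a trajectory optimization.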

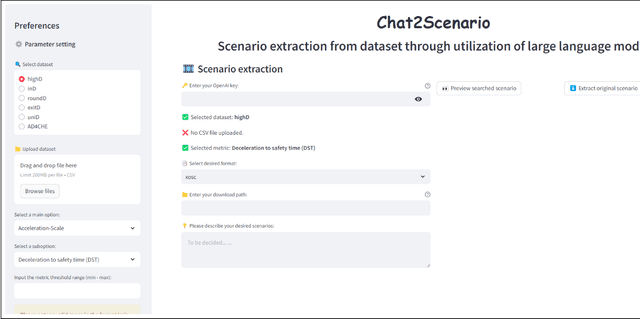

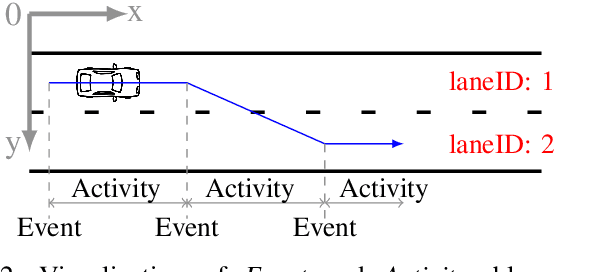

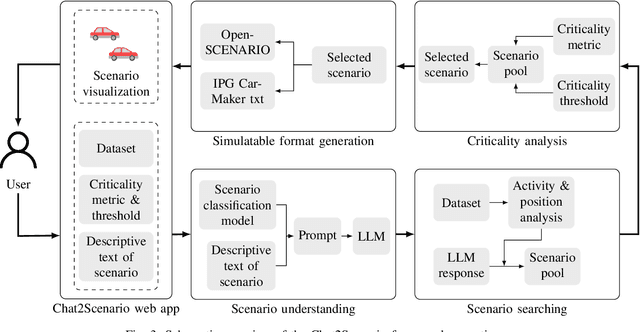

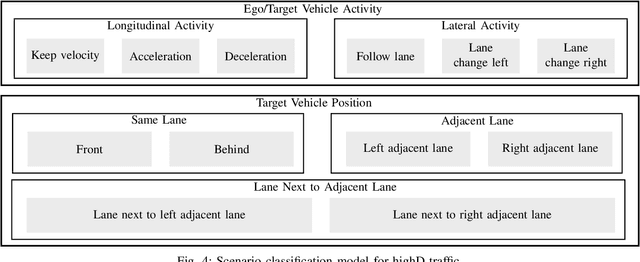

Chat2Scenario: Scenario Extraction From Dataset Through Utilization of Large Language Model

Apr 26, 2024

Abstract:The advent of Large Language Models (LLMs) provides new insights for validating Automated Driving Systems (ADS). This work presents a novel approach to extracting scenarios from naturalistic driving datasets. The proposed framework, Chat2Scenario, leverages the advanced Natural Language Processing (NLP) capabilities of LLMs to understand and identify different driving scenarios. Given descriptive texts of driving conditions and specified criticality metric thresholds, the framework efficiently searches for the desired scenarios and converts them into ASAM OpenSCENARIO and IPG CarMaker text files. This methodology streamlines the scenario extraction process and enhances efficiency. Simulations are executed to validate the efficiency of the approach. The framework is provided as a user-friendly web app and is accessible via the following link: https://github.com/ftgTUGraz/Chat2Scenario.

From Denoising Training to Test-Time Adaptation: Enhancing Domain Generalization for Medical Image Segmentation

Nov 03, 2023

Abstract:In medical image segmentation, domain generalization poses a significant challenge due to domain shifts caused by variations in data acquisition devices and other factors. These shifts are particularly pronounced in the most common scenario, which involves only single-source domain data due to privacy concerns. To address this, we draw inspiration from the self-supervised learning paradigm that effectively discourages overfitting to the source domain. We propose the Denoising Y-Net (DeY-Net), a novel approach incorporating an auxiliary denoising decoder into the basic U-Net architecture. The auxiliary decoder aims to perform denoising training, augmenting the domain-invariant representation that facilitates domain generalization. Furthermore, this paradigm provides the potential to utilize unlabeled data. Building upon denoising training, we propose Denoising Test Time Adaptation (DeTTA) that further: (i) adapts the model to the target domain in a sample-wise manner, and (ii) adapts to the noise-corrupted input. Extensive experiments conducted on widely-adopted liver segmentation benchmarks demonstrate significant domain generalization improvements over our baseline and state-of-the-art results compared to other methods. Code is available at https://github.com/WenRuxue/DeTTA.

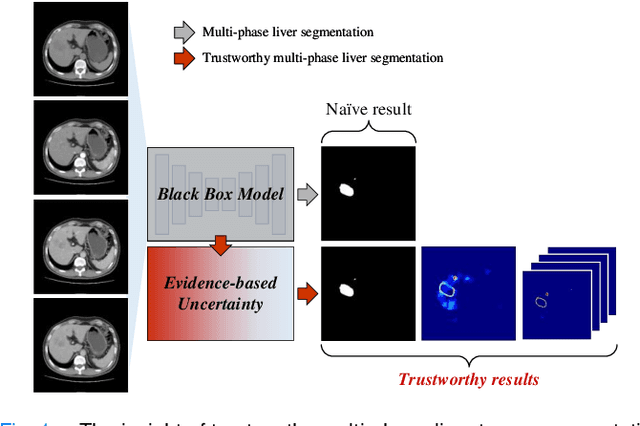

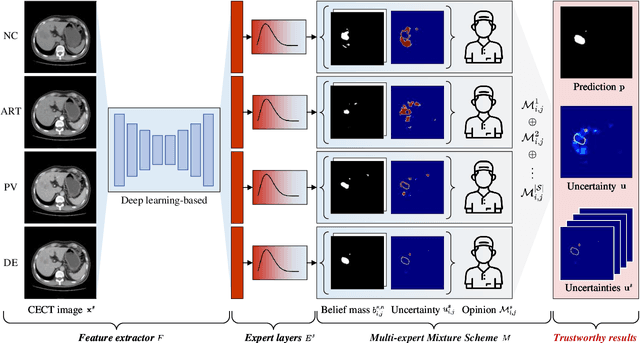

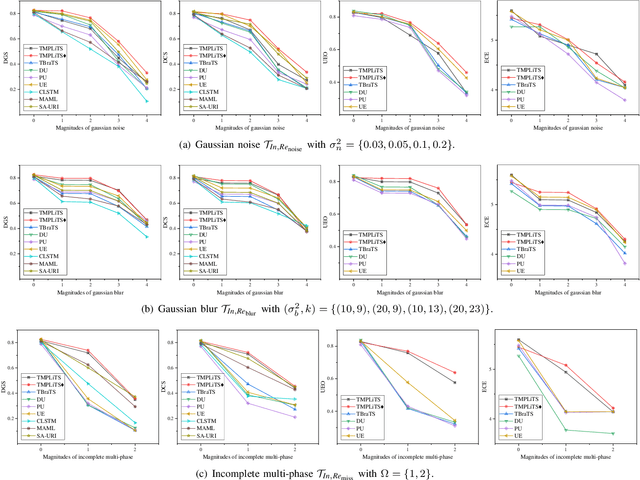

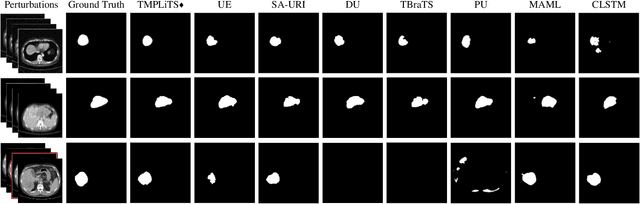

Trustworthy Multi-phase Liver Tumor Segmentation via Evidence-based Uncertainty

May 09, 2023

Abstract:Multi-phase liver contrast-enhanced computed tomography (CECT) images convey complementary multi-phase information for liver tumor segmentation (LiTS), which is crucial for the clinical diagnosis of liver cancer. However, existing multi-phase liver tumor segmentation (MPLiTS) methods suffer from redundancy and weak interpretability, which makes them implicitly unreliable in clinical applications. In this paper, we propose a novel trustworthy multi-phase liver tumor segmentation (TMPLiTS) method, a unified framework that jointly conducts segmentation and uncertainty estimation. The trustworthy results can help clinicians make a reliable diagnosis. Specifically, Dempster-Shafer Evidence Theory (DST) is introduced to parameterize the segmentation and uncertainty as evidence following a Dirichlet distribution. The reliability of segmentation results among multi-phase CECT images is quantified explicitly. Meanwhile, a multi-expert mixture scheme (MEMS) is proposed to fuse the multi-phase evidence, with the effect of the fusion procedure guaranteed by theoretical analysis. Experimental results demonstrate the superiority of TMPLiTS over state-of-the-art methods. The robustness of TMPLiTS is also verified: reliable performance is maintained under perturbations.
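To illustrate how evidence can be turned into a segmentation belief plus an explicit uncertainty, the sketch below uses the common subjective-logic parameterization from evidential deep learning: Dirichlet parameters α_k = e_k + 1, Dirichlet strength S = Σ α_k, belief b_k = e_k / S, and uncertainty u = K / S, so that the beliefs and the uncertainty sum to 1. The abstract does not spell out the exact formulation used in TMPLiTS, so this should be read as an assumed, generic version; the function name `dirichlet_opinion` and the sample evidence values are hypothetical.

```python
from typing import List, Tuple


def dirichlet_opinion(evidence: List[float]) -> Tuple[List[float], float]:
    """Map non-negative per-class evidence to (beliefs, uncertainty)."""
    if not evidence or any(e < 0 for e in evidence):
        raise ValueError("evidence must be a non-empty list of non-negative values")
    num_classes = len(evidence)
    alpha = [e + 1.0 for e in evidence]      # Dirichlet parameters
    strength = sum(alpha)                    # Dirichlet strength S
    belief = [e / strength for e in evidence]
    uncertainty = num_classes / strength     # shrinks as total evidence grows
    return belief, uncertainty


# Hypothetical per-pixel evidence for (tumor, background).
belief, uncertainty = dirichlet_opinion([9.0, 1.0])
print(belief, uncertainty)

# With no evidence at all, the prediction is fully uncertain.
print(dirichlet_opinion([0.0, 0.0]))
```

In this formulation a pixel with little total evidence receives high uncertainty regardless of which class is favored, which is what allows unreliable regions to be flagged explicitly.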
