Yihui Ren

AutoSizer: Automatic Sizing of Analog and Mixed-Signal Circuits via Large Language Model (LLM) Agents

Feb 02, 2026

Abstract: The design of Analog and Mixed-Signal (AMS) integrated circuits remains heavily reliant on expert knowledge, with transistor sizing a major bottleneck due to nonlinear behavior, high-dimensional design spaces, and strict performance constraints. Existing Electronic Design Automation (EDA) methods typically frame sizing as static black-box optimization, resulting in inefficient and less robust solutions. Although Large Language Models (LLMs) exhibit strong reasoning abilities, they are not suited for precise numerical optimization in AMS sizing. To address this gap, we propose AutoSizer, a reflective LLM-driven meta-optimization framework that unifies circuit understanding, adaptive search-space construction, and optimization orchestration in a closed loop. It employs a two-loop optimization framework, with an inner loop for circuit sizing and an outer loop that analyzes optimization dynamics and constraints to iteratively refine the search space from simulation feedback. We further introduce AMS-SizingBench, an open benchmark comprising 24 diverse AMS circuits in SKY130 CMOS technology, designed to evaluate adaptive optimization policies under realistic simulator-based constraints. AutoSizer experimentally achieves higher solution quality, faster convergence, and higher success rate across varying circuit difficulties, outperforming both traditional optimization methods and existing LLM-based agents.
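The two-loop structure described in this abstract can be illustrated with a toy sketch. This is not AutoSizer's implementation: the objective `toy_cost`, the random-search inner loop, and the shrink-around-best rule in `refine_bounds` are hypothetical stand-ins chosen for brevity. In the paper, the inner loop runs circuit simulations and the outer loop is driven by an LLM agent.

```python
import random


def toy_cost(params):
    # Hypothetical stand-in for a circuit simulator: lower is better.
    w, l = params
    return (w - 3.0) ** 2 + (l - 0.5) ** 2


def inner_loop(bounds, n_samples, rng):
    """Inner loop: random search for the best point inside the current bounds."""
    best_point, best_cost = None, float("inf")
    for _ in range(n_samples):
        point = tuple(rng.uniform(lo, hi) for lo, hi in bounds)
        cost = toy_cost(point)
        if cost < best_cost:
            best_point, best_cost = point, cost
    return best_point, best_cost


def refine_bounds(bounds, best_point, shrink=0.5):
    """Outer-loop step: shrink each range around the best point, clipped to the old range."""
    new_bounds = []
    for (lo, hi), x in zip(bounds, best_point):
        half = (hi - lo) * shrink / 2.0
        new_bounds.append((max(lo, x - half), min(hi, x + half)))
    return new_bounds


def two_loop_search(bounds, outer_iters=5, n_samples=50, seed=0):
    """Alternate inner search and search-space refinement for a fixed number of rounds."""
    rng = random.Random(seed)
    history = []
    best_point, best_cost = None, float("inf")
    for _ in range(outer_iters):
        point, cost = inner_loop(bounds, n_samples, rng)
        if cost < best_cost:
            best_point, best_cost = point, cost
        history.append(best_cost)  # best cost seen so far after each outer round
        bounds = refine_bounds(bounds, best_point)
    return best_point, best_cost, bounds, history
```

Calling `two_loop_search([(0.0, 10.0), (0.0, 2.0)])` returns the best point found, its cost, the final narrowed bounds, and the best-so-far cost after each outer round. The fixed shrink factor is the simplest possible refinement policy; an adaptive policy would decide from the optimization history whether to shrink, shift, or widen the ranges.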

Parameter Inference and Uncertainty Quantification with Diffusion Models: Extending CDI to 2D Spatial Conditioning

Jan 23, 2026

Abstract: Uncertainty quantification is critical in scientific inverse problems to distinguish identifiable parameters from those that remain ambiguous given available measurements. The Conditional Diffusion Model-based Inverse Problem Solver (CDI) has previously demonstrated effective probabilistic inference for one-dimensional temporal signals, but its applicability to higher-dimensional spatial data remains unexplored. We extend CDI to two-dimensional spatial conditioning, enabling probabilistic parameter inference directly from spatial observations. We validate this extension on convergent beam electron diffraction (CBED) parameter inference, a challenging multi-parameter inverse problem in materials characterization where sample geometry, electronic structure, and thermal properties must be extracted from 2D diffraction patterns. Using simulated CBED data with ground-truth parameters, we demonstrate that CDI produces well-calibrated posterior distributions that accurately reflect measurement constraints: tight distributions for well-determined quantities and appropriately broad distributions for ambiguous parameters. In contrast, standard regression methods, while appearing accurate on aggregate metrics, mask this underlying uncertainty by predicting training set means for poorly constrained parameters. Our results confirm that CDI successfully extends from temporal to spatial domains, providing the genuine uncertainty information required for robust scientific inference.

LeJOT: An Intelligent Job Cost Orchestration Solution for Databricks Platform

Dec 20, 2025

Abstract: With the rapid advancements in big data technologies, the Databricks platform has become a cornerstone for enterprises and research institutions, offering high computational efficiency and a robust ecosystem. However, managing the escalating operational costs associated with job execution remains a critical challenge. Existing solutions rely on static configurations or reactive adjustments, which fail to adapt to the dynamic nature of workloads. To address this, we introduce LeJOT, an intelligent job cost orchestration framework that leverages machine learning for execution time prediction and a solver-based optimization model for real-time resource allocation. Unlike conventional scheduling techniques, LeJOT proactively predicts workload demands, dynamically allocates computing resources, and minimizes costs while ensuring performance requirements are met. Experimental results on real-world Databricks workloads demonstrate that LeJOT achieves an average 20% reduction in cloud computing costs within a minute-level scheduling timeframe, outperforming traditional static allocation strategies. Our approach provides a scalable and adaptive solution for cost-efficient job scheduling in Data Lakehouse environments.

CircuitSense: A Hierarchical Circuit System Benchmark Bridging Visual Comprehension and Symbolic Reasoning in Engineering Design Process

Sep 26, 2025

Abstract: Engineering design operates through hierarchical abstraction from system specifications to component implementations, requiring visual understanding coupled with mathematical reasoning at each level. While Multi-modal Large Language Models (MLLMs) excel at natural image tasks, their ability to extract mathematical models from technical diagrams remains unexplored. We present CircuitSense, a comprehensive benchmark evaluating circuit understanding across this hierarchy through 8,006+ problems spanning component-level schematics to system-level block diagrams. Our benchmark uniquely examines the complete engineering workflow: Perception, Analysis, and Design, with a particular emphasis on the critical but underexplored capability of deriving symbolic equations from visual inputs. We introduce a hierarchical synthetic generation pipeline consisting of a grid-based schematic generator and a block diagram generator with auto-derived symbolic equation labels. Comprehensive evaluation of six state-of-the-art MLLMs, including both closed-source and open-source models, reveals fundamental limitations in visual-to-mathematical reasoning. Closed-source models achieve over 85% accuracy on perception tasks involving component recognition and topology identification, yet their performance on symbolic derivation and analytical reasoning falls below 19%, exposing a critical gap between visual parsing and symbolic reasoning. Models with stronger symbolic reasoning capabilities consistently achieve higher design task accuracy, confirming the fundamental role of mathematical understanding in circuit synthesis and establishing symbolic reasoning as the key metric for engineering competence.

Towards an Introspective Dynamic Model of Globally Distributed Computing Infrastructures

Jun 24, 2025

Abstract: Large-scale scientific collaborations like ATLAS, Belle II, CMS, DUNE, and others involve hundreds of research institutes and thousands of researchers spread across the globe. These experiments generate petabytes of data, with volumes soon expected to reach exabytes. Consequently, there is a growing need for computation, including structured data processing from raw data to consumer-ready derived data, extensive Monte Carlo simulation campaigns, and a wide range of end-user analysis. To manage these computational and storage demands, centralized workflow and data management systems are implemented. However, decisions regarding data placement and payload allocation are often made disjointly and via heuristic means. A significant obstacle in adopting more effective heuristic or AI-driven solutions is the absence of a quick and reliable introspective dynamic model to evaluate and refine alternative approaches. In this study, we aim to develop such an interactive system using real-world data. By examining job execution records from the PanDA workflow management system, we have pinpointed key performance indicators such as queuing time, error rate, and the extent of remote data access. The dataset includes five months of activity. Additionally, we are creating a generative AI model to simulate time series of payloads, which incorporate visible features like category, event count, and submitting group, as well as hidden features like the total computational load, derived from existing PanDA records and computing site capabilities. These hidden features, which are not visible to job allocators, whether heuristic or AI-driven, influence factors such as queuing times and data movement.

EvRT-DETR: The Surprising Effectiveness of DETR-based Detection for Event Cameras

Dec 03, 2024

Abstract: Event-based cameras (EBCs) have emerged as a bio-inspired alternative to traditional cameras, offering advantages in power efficiency, temporal resolution, and high dynamic range. However, the development of image analysis methods for EBCs is challenging due to the sparse and asynchronous nature of the data. This work addresses the problem of object detection for EBCs. Current approaches to EBC object detection focus on constructing complex data representations and rely on specialized architectures. Here, we demonstrate that the combination of a Real-Time DEtection TRansformer, or RT-DETR, a state-of-the-art natural image detector, with a simple image-like representation of the EBC data achieves remarkable performance, surpassing current state-of-the-art results. Specifically, we show that a properly trained RT-DETR model on the EBC data achieves performance comparable to the most advanced EBC object detection methods. Next, we propose a low-rank adaptation (LoRA)-inspired way to augment the RT-DETR model to handle temporal dynamics of the data. The designed EvRT-DETR model outperforms the current, most advanced results on standard benchmark datasets Gen1 (mAP +2.3) and Gen4 (mAP +1.4) while only using standard modules from natural image and video analysis. These results demonstrate that effective EBC object detection can be achieved through careful adaptation of mainstream object detection architectures without requiring specialized architectural engineering. The code is available at: https://github.com/realtime-intelligence/evrt-detr
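To make the phrase "simple image-like representation" concrete, the sketch below accumulates events into a two-channel count image, one channel per polarity. This is an assumption for illustration only: the abstract does not specify the representation used, and the function name `events_to_histogram` and the `(x, y, t, polarity)` tuple layout are hypothetical.

```python
def events_to_histogram(events, height, width):
    """Accumulate events into a 2-channel (negative, positive polarity) count image.

    `events` is an iterable of (x, y, t, polarity) tuples with polarity in {0, 1}.
    Timestamps are ignored here; a real pipeline would usually bin them in time.
    Events outside the sensor area are dropped.
    """
    image = [[[0] * width for _ in range(height)] for _ in range(2)]
    for x, y, _t, polarity in events:
        if 0 <= x < width and 0 <= y < height:
            image[polarity][y][x] += 1
    return image


# Four toy events; the last one falls outside a 3x2 sensor and is ignored.
events = [(1, 0, 10, 1), (1, 0, 12, 1), (2, 1, 15, 0), (9, 9, 20, 1)]
image = events_to_histogram(events, height=2, width=3)
```

The result is indexed as `image[polarity][y][x]`, which matches the channel-first layout that image detectors typically expect.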

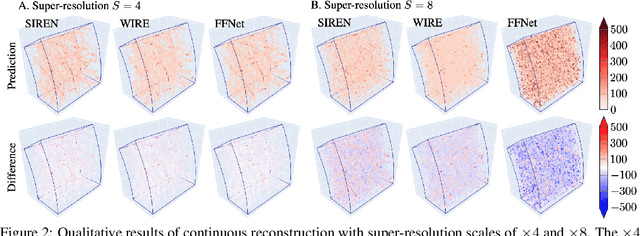

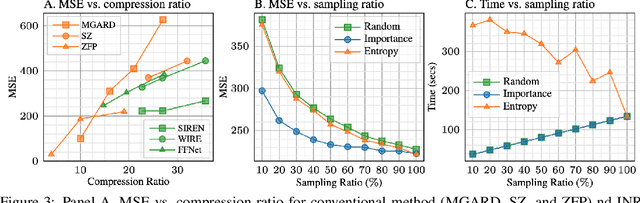

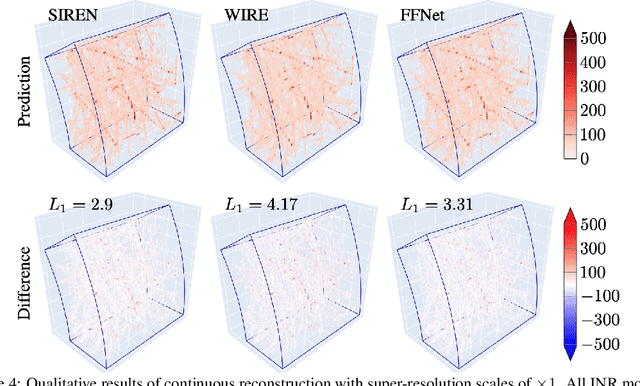

Efficient Compression of Sparse Accelerator Data Using Implicit Neural Representations and Importance Sampling

Dec 02, 2024

Abstract: High-energy, large-scale particle colliders in nuclear and high-energy physics generate data at extraordinary rates, reaching up to 1 terabyte and several petabytes per second, respectively. The development of real-time, high-throughput data compression algorithms capable of reducing this data to manageable sizes for permanent storage is of paramount importance. A unique characteristic of the tracking detector data is the extreme sparsity of particle trajectories in space, with an occupancy rate ranging from approximately $10^{-6}$ to 10%. Furthermore, for downstream tasks, a continuous representation of this data is often more useful than a voxel-based, discrete representation due to the inherently continuous nature of the signals involved. To address these challenges, we propose a novel approach using implicit neural representations for data learning and compression. We also introduce an importance sampling technique to accelerate the network training process. Our method is competitive with traditional compression algorithms, such as MGARD, SZ, and ZFP, while offering significant speed-ups and maintaining negligible accuracy loss through our importance sampling strategy.

Variable Rate Neural Compression for Sparse Detector Data

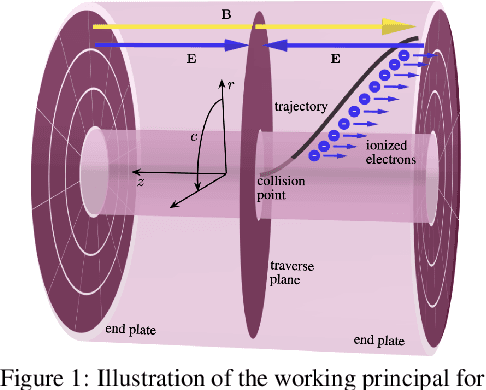

Nov 18, 2024

Abstract: High-energy large-scale particle colliders generate data at extraordinary rates. Developing real-time high-throughput data compression algorithms to reduce data volume and meet the bandwidth requirement for storage has become increasingly critical. Deep learning is a promising technology that can address this challenging topic. At the newly constructed sPHENIX experiment at the Relativistic Heavy Ion Collider, a Time Projection Chamber (TPC) serves as the main tracking detector, which records three-dimensional particle trajectories in the volume of a gas-filled cylinder. In terms of occupancy, the resulting data flow can be very sparse, reaching $10^{-3}$ for proton-proton collisions. Such sparsity presents a challenge to conventional learning-free lossy compression algorithms, such as SZ, ZFP, and MGARD. In contrast, emerging deep learning-based models, particularly those utilizing convolutional neural networks for compression, have outperformed these conventional methods in terms of compression ratios and reconstruction accuracy. However, research on the efficacy of these deep learning models in handling sparse datasets, like those produced in particle colliders, remains limited. Furthermore, most deep learning models do not adapt their processing speeds to data sparsity, which affects efficiency. To address this issue, we propose a novel approach for TPC data compression via key-point identification facilitated by sparse convolution. Our proposed algorithm, BCAE-VS, achieves a 75% improvement in reconstruction accuracy with a 10% increase in compression ratio over the previous state-of-the-art model. Additionally, BCAE-VS manages to achieve these results with a model size over two orders of magnitude smaller. Lastly, we have experimentally verified that as sparsity increases, so does the model's throughput.

Mitigating Parameter Degeneracy using Joint Conditional Diffusion Model for WECC Composite Load Model in Power Systems

Nov 15, 2024

Abstract: Data-driven modeling for dynamic systems has gained widespread attention in recent years. Its inverse formulation, parameter estimation, aims to infer the inherent model parameters from observations. However, parameter degeneracy, where different combinations of parameters yield the same observable output, poses a critical barrier to accurately and uniquely identifying model parameters. In the context of the WECC composite load model (CLM) in power systems, utility practitioners have observed that CLM parameters carefully selected for one fault event may not perform satisfactorily in another fault. Here, we introduce a joint conditional diffusion model-based inverse problem solver (JCDI) that incorporates a joint conditioning architecture with simultaneous inputs of multi-event observations to improve parameter generalizability. Simulation studies on the WECC CLM show that the proposed JCDI effectively reduces uncertainties of degenerate parameters, decreasing the parameter estimation error by 42.1% compared to a single-event learning scheme. This enables the model to achieve high accuracy in predicting power trajectories under different fault events, including electronic load tripping and motor stalling, outperforming standard deep reinforcement learning and supervised learning approaches. We anticipate this work will contribute to mitigating parameter degeneracy in system dynamics, providing a general parameter estimation framework across various scientific domains.
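Parameter degeneracy, and why observing several events at once can reduce it, can be shown with a toy example. Nothing here comes from the paper: there is no diffusion model and no composite load model, and the two observables `event_one` and `event_two` are hypothetical functions chosen so that the arithmetic is easy to check by hand.

```python
from itertools import product


def event_one(a, b):
    # Toy observable: only the product of the two parameters is visible.
    return a * b


def event_two(a, b):
    # A second toy observable that depends on the parameters differently.
    return a - b


def consistent(candidates, observations):
    """Keep candidates whose outputs match every (model, observed value) pair."""
    return [p for p in candidates
            if all(model(*p) == y for model, y in observations)]


true_params = (2, 3)
grid = list(product(range(1, 7), repeat=2))  # all integer pairs in 1..6

# One event: every pair with a * b == 6 explains the data equally well.
single = consistent(grid, [(event_one, event_one(*true_params))])

# Two events observed jointly: only the true pair remains.
joint = consistent(grid, [(event_one, event_one(*true_params)),
                          (event_two, event_two(*true_params))])
```

With a single event, `single` holds four candidates, `(1, 6)`, `(2, 3)`, `(3, 2)` and `(6, 1)`, while `joint` holds only `(2, 3)`. A probabilistic solver would express the same effect as a posterior that narrows when conditioned on both events.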

Automated and Holistic Co-design of Neural Networks and ASICs for Enabling In-Pixel Intelligence

Jul 18, 2024

Abstract: Extreme edge-AI systems, such as those in readout ASICs for radiation detection, must operate under stringent hardware constraints such as micron-level dimensions, sub-milliwatt power, and nanosecond-scale speed while providing clear accuracy advantages over traditional architectures. Finding ideal solutions means identifying optimal AI and ASIC design choices from a design space that has explosively expanded during the merger of these domains, creating non-trivial couplings which together act upon a small set of solutions as constraints tighten. It is impractical, if not impossible, to manually determine ideal choices among possibilities that easily exceed billions even in small-size problems. Existing methods to bridge this gap have leveraged theoretical understanding of hardware to f architecture search. However, the assumptions made in computing such theoretical metrics are too idealized to provide sufficient guidance during the difficult search for a practical implementation. Meanwhile, theoretical estimates for many other crucial metrics (like delay) do not even exist and are similarly variable, dependent on parameters of the process design kit (PDK). To address these challenges, we present a study that employs intelligent search using multi-objective Bayesian optimization, integrating both neural network search and ASIC synthesis in the loop. This approach provides reliable feedback on the collective impact of all cross-domain design choices. We showcase the effectiveness of our approach by finding several Pareto-optimal design choices for effective and efficient neural networks that perform real-time feature extraction from input pulses within the individual pixels of a readout ASIC.
