Yaoyu Zhang

Adaptive Preconditioners Trigger Loss Spikes in Adam

Jun 05, 2025

Abstract: Loss spikes emerge commonly during training across neural networks of varying architectures and scales when using the Adam optimizer. In this work, we investigate the underlying mechanism responsible for Adam spikes. While previous explanations attribute these phenomena to the lower-loss-as-sharper characteristics of the loss landscape, our analysis reveals that Adam's adaptive preconditioners themselves can trigger spikes. Specifically, we identify a critical regime where squared gradients become substantially smaller than the second-order moment estimates, causing the latter to undergo a $\beta_2$-exponential decay and to respond sluggishly to current gradient information. This mechanism can push the maximum eigenvalue of the preconditioned Hessian beyond the classical stability threshold $2/\eta$ for a sustained period, inducing instability. This instability further leads to an alignment between the gradient and the maximum eigendirection, and a loss spike occurs precisely when the gradient-directional curvature exceeds $2/\eta$. We verify this mechanism through extensive experiments on fully connected networks, convolutional networks, and Transformer architectures.
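The decay regime described in this abstract can be illustrated with a one-dimensional toy. The sketch below is not the authors' code: it assumes a scalar quadratic with fixed Hessian, ignores momentum and bias correction, and holds the squared gradient constant. The function names and the parameter values in the usage line are hypothetical.

```python
import math


def second_moment_history(v0, beta2, steps, grad_sq=0.0):
    """Iterate v_t = beta2 * v_{t-1} + (1 - beta2) * g_t^2 with a constant g_t^2."""
    v = v0
    history = [v]
    for _ in range(steps):
        v = beta2 * v + (1.0 - beta2) * grad_sq
        history.append(v)
    return history


def preconditioned_curvature(hessian, v, eps=1e-8):
    """Curvature of a 1-D quadratic after Adam-style scaling by 1 / (sqrt(v) + eps)."""
    return hessian / (math.sqrt(v) + eps)


def first_unstable_step(hessian, v0, beta2, lr, steps, grad_sq=0.0):
    """Return the first step at which the scaled curvature exceeds 2 / lr, else None."""
    threshold = 2.0 / lr
    history = second_moment_history(v0, beta2, steps, grad_sq)
    for t, v in enumerate(history):
        if preconditioned_curvature(hessian, v) > threshold:
            return t
    return None


if __name__ == "__main__":
    # Illustrative values only: with a vanishing gradient, v_t shrinks like beta2**t,
    # so the scaled curvature grows until it crosses the 2 / lr threshold.
    print(first_unstable_step(hessian=1.0, v0=1.0, beta2=0.9, lr=0.1, steps=200))
```

When the squared gradient is much smaller than `v0`, the estimate shrinks geometrically at rate `beta2`, so the scaled curvature grows even though the Hessian itself never changes. The abstract's statement concerns the maximum eigenvalue of the full preconditioned Hessian; this scalar version only mirrors that idea.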

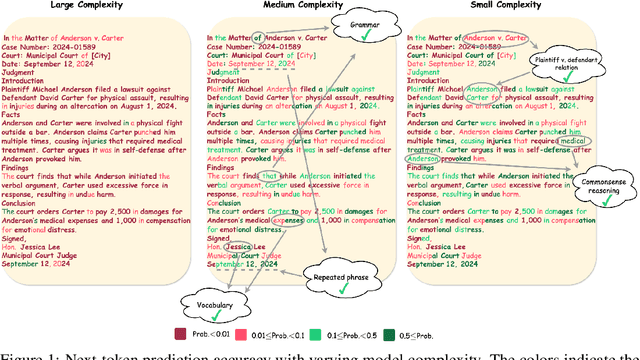

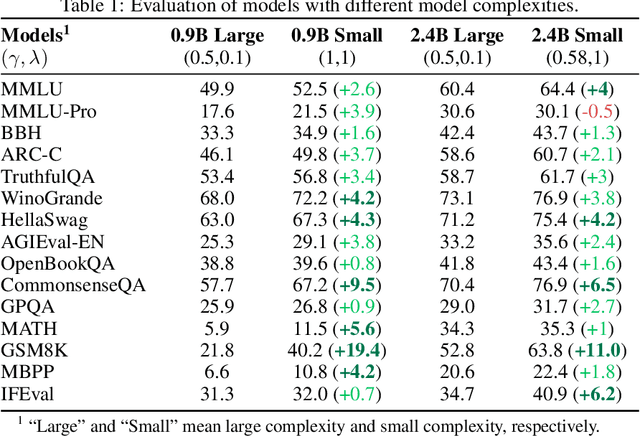

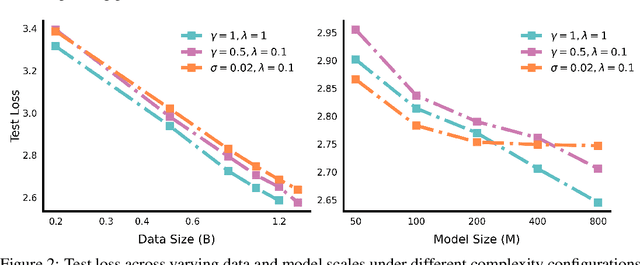

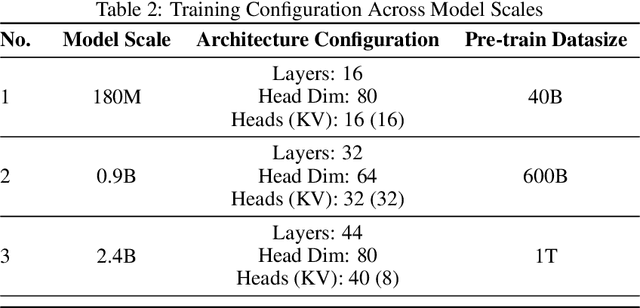

Scalable Complexity Control Facilitates Reasoning Ability of LLMs

May 29, 2025

Abstract: The reasoning ability of large language models (LLMs) has been rapidly advancing in recent years, attracting interest in more fundamental approaches that can reliably enhance their generalizability. This work demonstrates that model complexity control, conveniently implementable by adjusting the initialization rate and weight decay coefficient, improves the scaling law of LLMs consistently over varying model sizes and data sizes. This gain is further illustrated by comparing the benchmark performance of 2.4B models pretrained on 1T tokens with different complexity hyperparameters. Instead of fixing the initialization std, we found that a constant initialization rate (the exponent of std) enables the scaling law to descend faster in both model and data sizes. These results indicate that complexity control is a promising direction for the continual advancement of LLMs.
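To make the two knobs named in this abstract concrete, here is a minimal sketch. It assumes, as an illustration rather than the paper's stated parameterization, that the initialization std is `fan_in ** (-gamma)` with `gamma` playing the role of the initialization rate, and that weight decay is applied in decoupled form. All function names are hypothetical.

```python
import random


def init_std(fan_in, gamma):
    """Assumed rule: std = fan_in ** (-gamma), where gamma is the initialization rate."""
    return fan_in ** (-gamma)


def init_matrix(fan_in, fan_out, gamma, seed=0):
    """Sample a fan_in x fan_out weight matrix from N(0, std^2) using the rule above."""
    rng = random.Random(seed)
    std = init_std(fan_in, gamma)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)] for _ in range(fan_in)]


def decoupled_decay(weights, lr, weight_decay):
    """One decoupled weight-decay step: w <- w * (1 - lr * weight_decay)."""
    factor = 1.0 - lr * weight_decay
    return [w * factor for w in weights]


if __name__ == "__main__":
    # With gamma held constant, the std changes as the layer width changes.
    for width in (256, 1024, 4096):
        print(width, init_std(width, gamma=0.5))
```

Under this assumed rule, holding `gamma` fixed makes the std shrink as width grows, whereas fixing the std would make the effective exponent drift with model size.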

Uncovering Critical Sets of Deep Neural Networks via Sample-Independent Critical Lifting

May 19, 2025

Abstract: This paper investigates the sample dependence of critical points for neural networks. We introduce a sample-independent critical lifting operator that associates a parameter of one network with a set of parameters of another, thus defining sample-dependent and sample-independent lifted critical points. We then show by example that previously studied critical embeddings do not capture all sample-independent lifted critical points. Finally, we demonstrate the existence of sample-dependent lifted critical points for sufficiently large sample sizes and prove that saddles appear among them.

Embedding principle of homogeneous neural network for classification problem

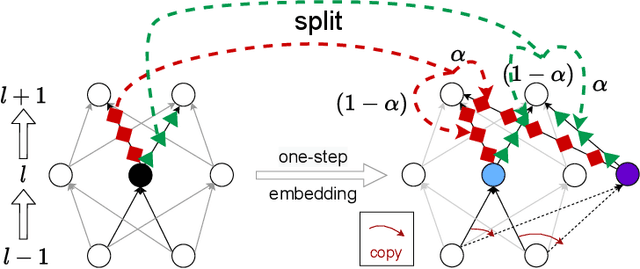

May 18, 2025

Abstract: Understanding the convergence points and optimization landscape of neural networks is crucial, particularly for homogeneous networks where Karush-Kuhn-Tucker (KKT) points of the associated maximum-margin problem often characterize solutions. This paper investigates the relationship between such KKT points across networks of different widths generated via neuron splitting. We introduce and formalize the KKT point embedding principle, establishing that KKT points of a homogeneous network's max-margin problem ($P_{\Phi}$) can be embedded into the KKT points of a larger network's problem ($P_{\tilde{\Phi}}$) via specific linear isometric transformations corresponding to neuron splitting. We rigorously prove this principle holds for neuron splitting in both two-layer and deep homogeneous networks. Furthermore, we connect this static embedding to the dynamics of gradient flow training with smooth losses. We demonstrate that trajectories initiated from appropriately mapped points remain mapped throughout training and that the resulting $\omega$-limit sets of directions are correspondingly mapped ($T(L(\theta(0))) = L(\boldsymbol{\eta}(0))$), thereby preserving the alignment with KKT directions dynamically when directional convergence occurs. Our findings offer insights into the effects of network width, parameter redundancy, and the structural connections between solutions found via optimization in homogeneous networks of varying sizes.

An overview of condensation phenomenon in deep learning

Apr 13, 2025

Abstract: In this paper, we provide an overview of a common phenomenon, condensation, observed during the nonlinear training of neural networks: neurons in the same layer tend to condense into groups with similar outputs. Empirical observations suggest that the number of condensed clusters of neurons in the same layer typically increases monotonically as training progresses. Small weight initialization or Dropout optimization can facilitate this condensation process. We also examine the underlying mechanisms of condensation from the perspectives of training dynamics and the structure of the loss landscape. The condensation phenomenon offers valuable insights into the generalization abilities of neural networks and correlates with stronger reasoning abilities in transformer-based language models.
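One simple way to count condensed groups is to cluster neurons whose output vectors over a batch point in nearly the same direction. The sketch below assumes cosine similarity with a fixed threshold and a greedy grouping pass. These choices, and the function names, are illustrative assumptions and are not taken from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def condensed_groups(neuron_vectors, threshold=0.99):
    """Greedily group neuron indices whose vectors align with a group's first member."""
    groups = []
    for i, vec in enumerate(neuron_vectors):
        for group in groups:
            representative = neuron_vectors[group[0]]
            if cosine(vec, representative) >= threshold:
                group.append(i)
                break
        else:
            # No existing group matched, so this neuron starts a new one.
            groups.append([i])
    return groups


if __name__ == "__main__":
    # Each inner list holds one neuron's outputs on a small batch (made-up numbers).
    outputs = [[1.0, 0.0], [2.0, 0.0], [0.0, 1.0], [0.0, 3.0]]
    print(condensed_groups(outputs))
```

Tracking the number of groups returned across training checkpoints gives a rough view of how the cluster count evolves. The result depends on the threshold and on the order in which neurons are visited.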

Complexity Control Facilitates Reasoning-Based Compositional Generalization in Transformers

Jan 15, 2025

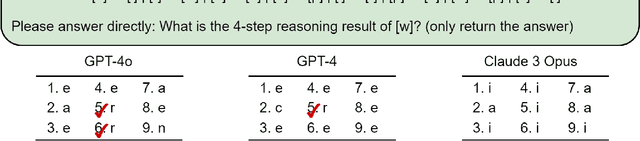

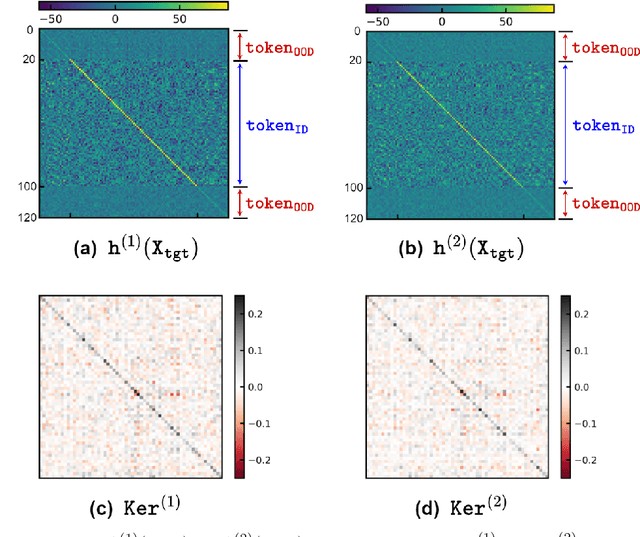

Abstract: Transformers have demonstrated impressive capabilities across various tasks, yet their performance on compositional problems remains a subject of debate. In this study, we investigate the internal mechanisms underlying Transformers' behavior in compositional tasks. We find that complexity control strategies significantly influence whether the model learns primitive-level rules that generalize out-of-distribution (reasoning-based solutions) or relies solely on memorized mappings (memory-based solutions). By applying masking strategies to the model's information circuits and employing multiple complexity metrics, we reveal distinct internal working mechanisms associated with different solution types. Further analysis reveals that reasoning-based solutions exhibit a lower complexity bias, which aligns with the well-studied neuron condensation phenomenon. This lower complexity bias is hypothesized to be the key factor enabling these solutions to learn reasoning rules. We validate these conclusions across multiple real-world datasets, including image generation and natural language processing tasks, confirming the broad applicability of our findings.

Local Linear Recovery Guarantee of Deep Neural Networks at Overparameterization

Jun 26, 2024

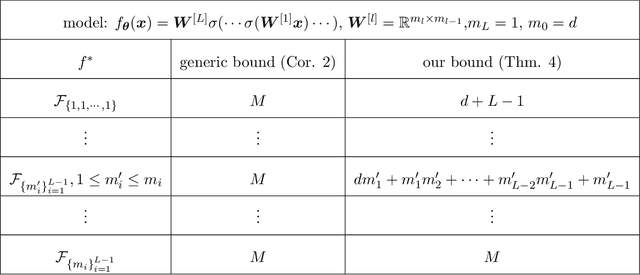

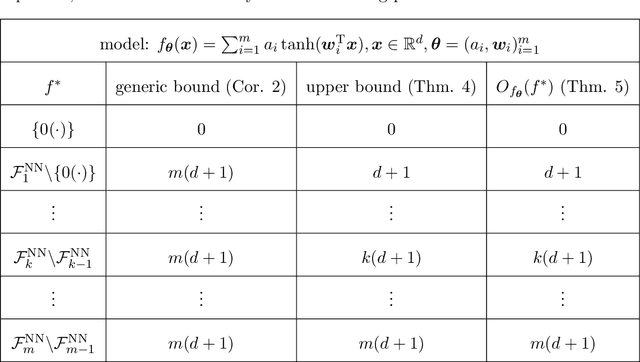

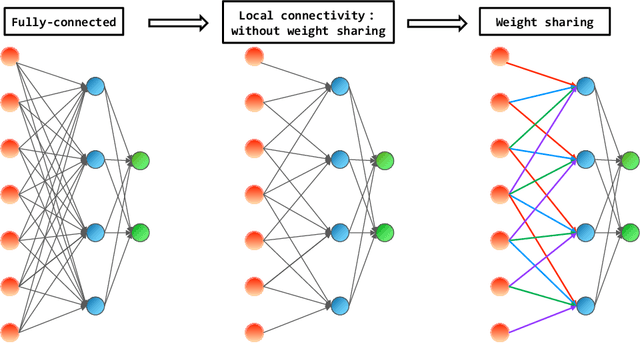

Abstract: Determining whether deep neural network (DNN) models can reliably recover target functions at overparameterization is a critical yet complex issue in the theory of deep learning. To advance understanding in this area, we introduce a concept we term "local linear recovery" (LLR), a weaker form of target function recovery that renders the problem more amenable to theoretical analysis. In the sense of LLR, we prove that functions expressible by narrower DNNs are guaranteed to be recoverable from fewer samples than model parameters. Specifically, we establish upper limits on the optimistic sample sizes, defined as the smallest sample size necessary to guarantee LLR, for functions in the space of a given DNN. Furthermore, we prove that these upper bounds are achieved in the case of two-layer tanh neural networks. Our research lays a solid groundwork for future investigations into the recovery capabilities of DNNs in overparameterized scenarios.

Geometry of Critical Sets and Existence of Saddle Branches for Two-layer Neural Networks

May 26, 2024

Abstract: This paper presents a comprehensive analysis of critical point sets in two-layer neural networks. To study such complex entities, we introduce the critical embedding operator and critical reduction operator as our tools. Given a critical point, we use these operators to uncover the whole underlying critical set representing the same output function, which exhibits a hierarchical structure. Furthermore, we prove the existence of saddle branches for any critical set whose output function can be represented by a narrower network. Our results provide a solid foundation for the further study of optimization and training behavior of neural networks.

Towards Understanding How Transformer Perform Multi-step Reasoning with Matching Operation

May 24, 2024

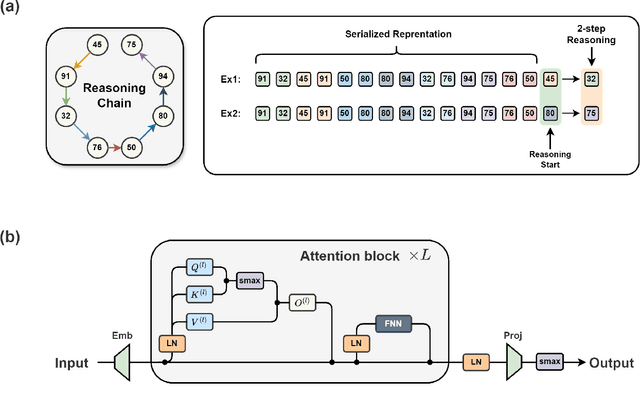

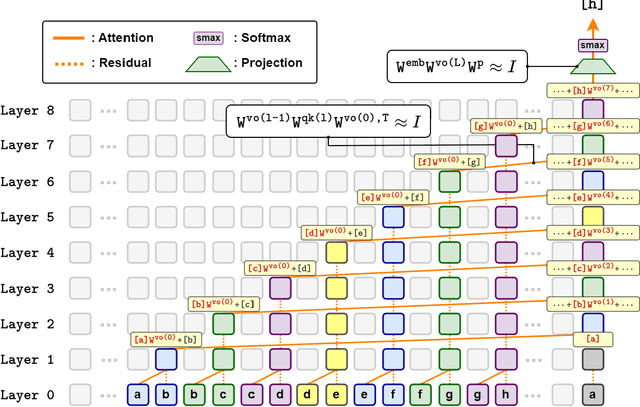

Abstract: Large language models have consistently struggled with complex reasoning tasks, such as mathematical problem-solving. Investigating the internal reasoning mechanisms of these models can help us design better model architectures and training strategies, ultimately enhancing their reasoning capabilities. In this study, we examine the matching mechanism employed by Transformers for multi-step reasoning on a constructed dataset. We investigate factors that influence the model's matching mechanism and discover that small initialization and post-LayerNorm can facilitate the formation of the matching mechanism, thereby enhancing the model's reasoning ability. Moreover, we propose a method to improve the model's reasoning capability by adding orthogonal noise. Finally, we investigate the parallel reasoning mechanism of Transformers and propose a conjecture on the upper bound of the model's reasoning ability based on this phenomenon. These insights contribute to a deeper understanding of the reasoning processes in large language models and guide the design of more effective reasoning architectures and training strategies.

A rationale from frequency perspective for grokking in training neural network

May 24, 2024

Abstract: Grokking is the phenomenon where neural networks (NNs) initially fit the training data and later generalize to the test data during training. In this paper, we empirically provide a frequency perspective to explain the emergence of this phenomenon in NNs. The core insight is that the networks initially learn the less salient frequency components present in the test data. We observe this phenomenon across both synthetic and real datasets, offering a novel viewpoint for elucidating the grokking phenomenon by characterizing it through the lens of frequency dynamics during the training process. Our empirical frequency-based analysis sheds new light on understanding the grokking phenomenon and its underlying mechanisms.
