Xuhua Ren

POSE: Phased One-Step Adversarial Equilibrium for Video Diffusion Models

Aug 28, 2025

Abstract: The field of video diffusion generation faces critical bottlenecks in sampling efficiency, especially for large-scale models and long sequences. Existing video acceleration methods adopt image-based techniques but suffer from fundamental limitations: they neither model the temporal coherence of video frames nor provide single-step distillation for large-scale video models. To bridge this gap, we propose POSE (Phased One-Step Equilibrium), a distillation framework that reduces the sampling steps of large-scale video diffusion models, enabling the generation of high-quality videos in a single step. POSE employs a carefully designed two-phase process to distill video models: (i) stability priming, a warm-up mechanism to stabilize adversarial distillation that adapts the high-quality trajectory of the one-step generator from high to low signal-to-noise ratio regimes, optimizing the video quality of single-step mappings near the endpoints of flow trajectories; and (ii) unified adversarial equilibrium, a flexible self-adversarial distillation mechanism that promotes stable single-step adversarial training towards a Nash equilibrium within the Gaussian noise space, generating realistic single-step videos close to real videos. For conditional video generation, we propose (iii) conditional adversarial consistency, a method to improve both semantic consistency and frame consistency between conditional frames and generated frames. Comprehensive experiments demonstrate that POSE outperforms other acceleration methods on VBench-I2V by an average of 7.15% in semantic alignment, temporal coherence, and frame quality, reducing the latency of the pre-trained model by 100$\times$, from 1000 seconds to 10 seconds, while maintaining competitive performance.

Hunyuan-Game: Industrial-grade Intelligent Game Creation Model

May 20, 2025

Abstract: Intelligent game creation represents a transformative advancement in game development, utilizing generative artificial intelligence to dynamically generate and enhance game content. Despite notable progress in generative models, the comprehensive synthesis of high-quality game assets, including both images and videos, remains a challenging frontier. To create high-fidelity game content that simultaneously aligns with player preferences and significantly boosts designer efficiency, we present Hunyuan-Game, an innovative project designed to revolutionize intelligent game production. Hunyuan-Game encompasses two primary branches: image generation and video generation. The image generation component is built upon a vast dataset comprising billions of game images, leading to the development of a group of customized image generation models tailored for game scenarios: (1) General Text-to-Image Generation. (2) Game Visual Effects Generation, involving text-to-effect and reference image-based game visual effect generation. (3) Transparent Image Generation for characters, scenes, and game visual effects. (4) Game Character Generation based on sketches, black-and-white images, and white models. The video generation component is built upon a comprehensive dataset of millions of game and anime videos, leading to the development of five core algorithmic models, each targeting critical pain points in game development and having robust adaptation to diverse game video scenarios: (1) Image-to-Video Generation. (2) 360 A/T Pose Avatar Video Synthesis. (3) Dynamic Illustration Generation. (4) Generative Video Super-Resolution. (5) Interactive Game Video Generation. These image and video generation models not only exhibit high-level aesthetic expression but also deeply integrate domain-specific knowledge, establishing a systematic understanding of diverse game and anime art styles.

Open-Vocabulary Scene Text Recognition via Pseudo-Image Labeling and Margin Loss

Mar 12, 2024

Abstract: Scene text recognition is an important and challenging task in computer vision. However, most prior works focus on recognizing pre-defined words, while real-world applications contain many out-of-vocabulary (OOV) words. In this paper, we propose a novel open-vocabulary text recognition framework, Pseudo-OCR, to recognize OOV words. The key challenge in this task is the lack of OOV training data. To solve this problem, we first propose a pseudo label generation module that leverages character detection and image inpainting to produce substantial pseudo OOV training data from real-world images. Unlike previous synthetic data, our pseudo OOV data contains real characters and backgrounds to simulate real-world applications. Secondly, to reduce noise in the pseudo data, we present a semantic checking mechanism to filter semantically meaningful data. Thirdly, we introduce a quality-aware margin loss to boost the training with pseudo data. Our loss includes a margin-based part to enhance the classification ability, and a quality-aware part to penalize low-quality samples in both real and pseudo data. Extensive experiments demonstrate that our approach outperforms the state of the art on eight datasets and ranks first in the ICDAR2022 challenge.

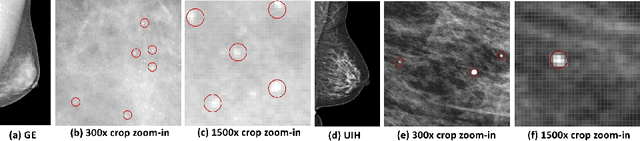

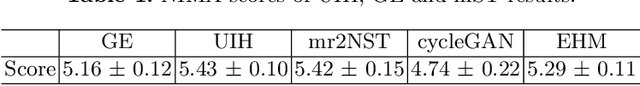

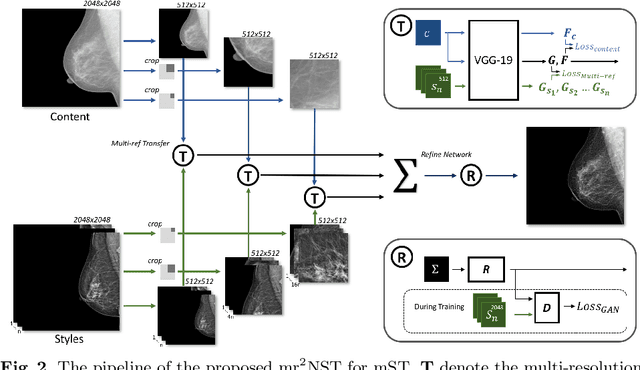

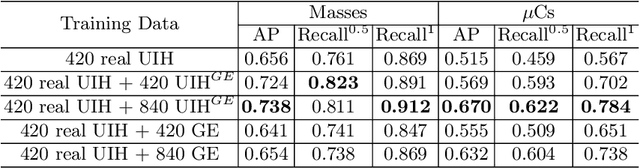

mr2NST: Multi-Resolution and Multi-Reference Neural Style Transfer for Mammography

May 25, 2020

Abstract: Computer-aided diagnosis with deep learning techniques has been shown to be helpful for mammography diagnosis in many clinical studies. However, the image styles of different vendors are very distinctive, and there may exist a domain gap among different vendors that could potentially compromise the universal applicability of one deep learning model. In this study, we explicitly address the style variety issue with the proposed multi-resolution and multi-reference neural style transfer (mr2NST) network. The mr2NST can normalize the styles from different vendors to the same style baseline at very high resolution. We illustrate that the image quality of the transferred images is comparable to the quality of original images of the target domain (vendor) in terms of NIMA scores. Meanwhile, the mr2NST results are also shown to be helpful for lesion detection in mammograms.

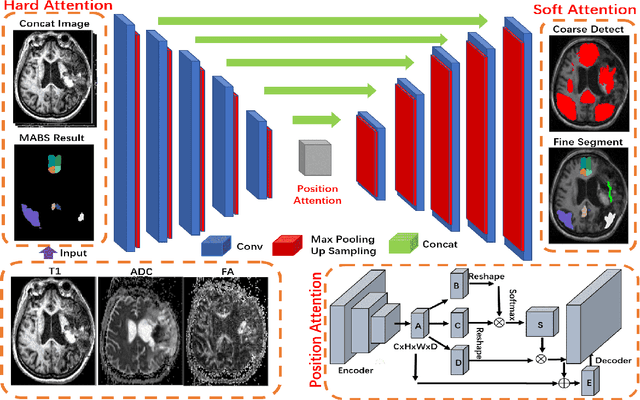

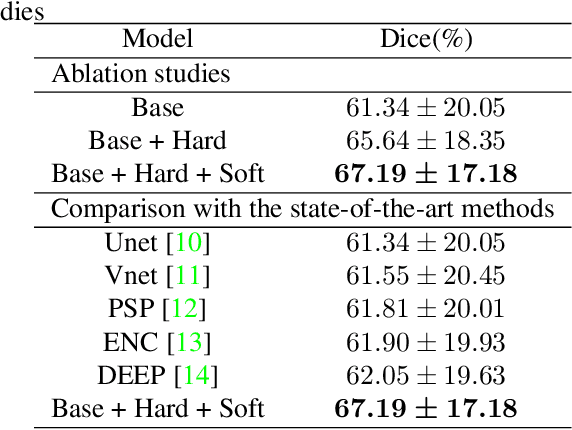

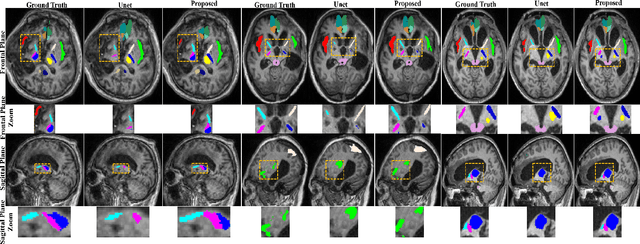

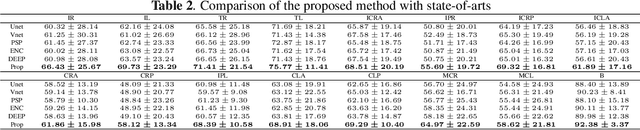

Robust Brain Magnetic Resonance Image Segmentation for Hydrocephalus Patients: Hard and Soft Attention

Jan 12, 2020

Abstract: Brain magnetic resonance (MR) segmentation for hydrocephalus patients is considered a challenging task. Encoding the variation of the brain anatomical structures from different individuals cannot be easily achieved. The task becomes even more difficult when the image data from hydrocephalus patients are considered, which often have large deformations and differ significantly from the normal subjects. Here, we propose a novel strategy with hard and soft attention modules to solve the segmentation problems for hydrocephalus MR images. Our main contributions are three-fold: 1) the hard-attention module generates a coarse segmentation map using a multi-atlas-based method and the VoxelMorph tool, which guides the subsequent segmentation process and improves its robustness; 2) the soft-attention module incorporates position attention to capture precise context information, which further improves the segmentation accuracy; 3) we validate our method by segmenting the insula, thalamus and many other regions of interest (ROIs) that are critical to quantify brain MR images of hydrocephalus patients in real clinical scenarios. The proposed method achieves much improved robustness and accuracy when segmenting all 17 consciousness-related ROIs with high variations for different subjects. To the best of our knowledge, this is the first work to employ deep learning for solving the brain segmentation problems of hydrocephalus patients.

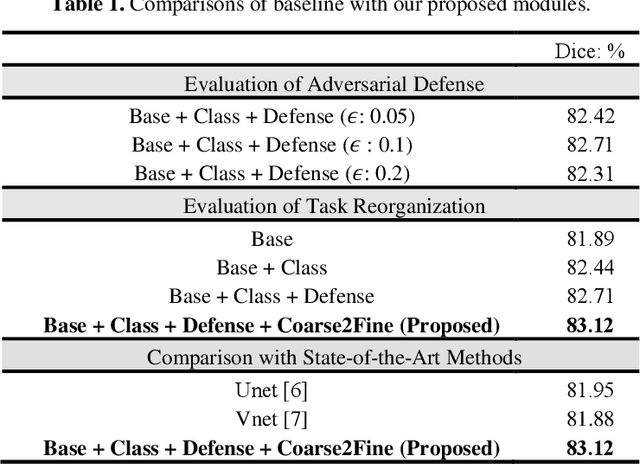

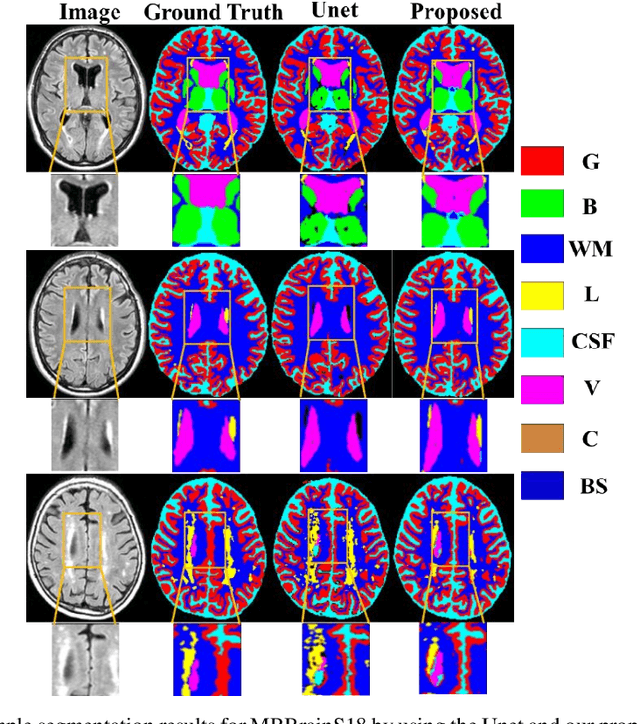

Brain MR Image Segmentation in Small Dataset with Adversarial Defense and Task Reorganization

Jun 25, 2019

Abstract: Medical image segmentation is challenging, especially when dealing with small datasets of 3D MR images. Encoding the variation of brain anatomical structures from individual subjects cannot be easily achieved, which is further challenged by only a limited number of well-labeled subjects for training. In this study, we aim to address the issue of brain MR image segmentation in small datasets. First, concerning the limited number of training images, we adopt adversarial defense to augment the training data and therefore increase the robustness of the network. Second, inspired by the prior knowledge of neural anatomies, we reorganize the segmentation tasks of different regions into several groups in a hierarchical way. Third, the task reorganization extends to the semantic level, as we incorporate an additional object-level classification task to contribute high-order visual features toward the pixel-level segmentation task. In experiments, we validate our method by segmenting gray matter, white matter, and several major regions on a challenge dataset. The proposed method, with only seven subjects for training, can achieve a Dice score of 84.46% on the onsite test set.
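For readers unfamiliar with the metric reported above, the following is a minimal sketch of the standard Dice coefficient for a single binary mask. It is not the challenge's evaluation code: the function name `dice_score`, the flat-list mask representation, and the convention for two empty masks are assumptions made for illustration, and the abstract does not state how per-region scores were aggregated.

```python
def dice_score(pred, target):
    """Dice coefficient 2|A∩B| / (|A| + |B|) for two binary masks.

    Masks are flat sequences of 0/1 values (hypothetical representation;
    real 3D MR volumes would be flattened first).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of elements")
    # Overlap: voxels labeled 1 in both masks.
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


pred = [1, 1, 0, 0, 1]
target = [1, 0, 0, 1, 1]
print(f"Dice: {dice_score(pred, target):.4f}")  # 2 overlapping voxels, 3 + 3 labeled
```

A score of 1.0 means the predicted and reference masks coincide exactly, and 0.0 means they do not overlap at all.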

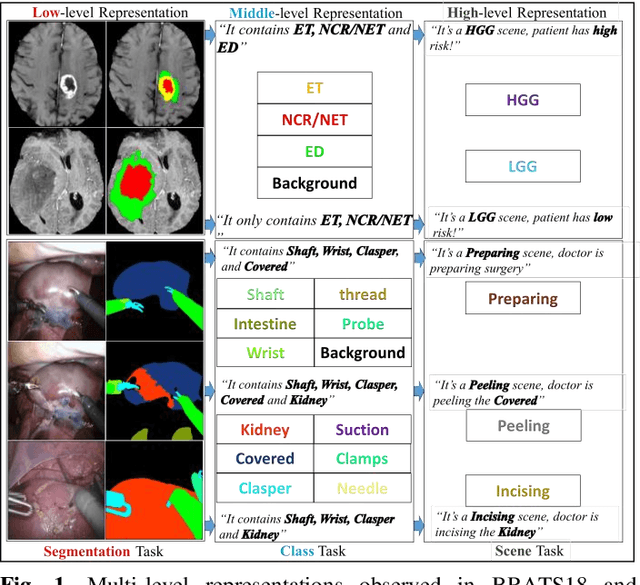

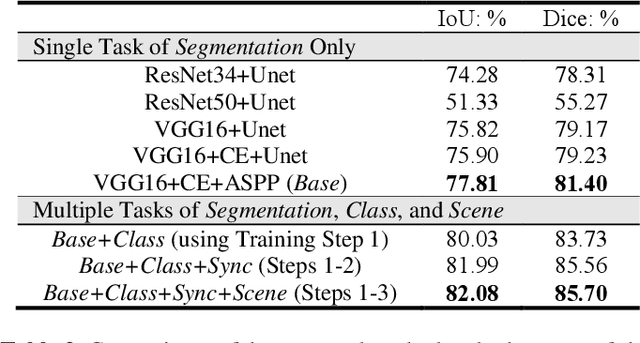

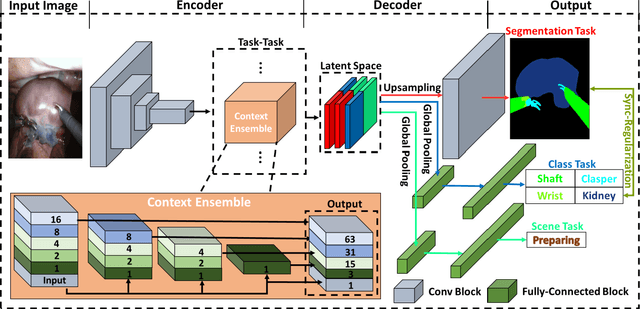

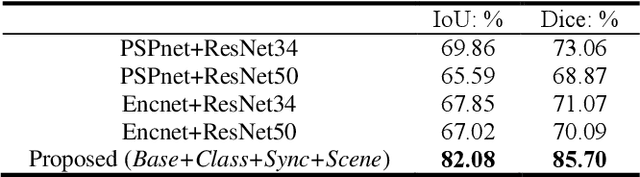

Task Decomposition and Synchronization for Semantic Biomedical Image Segmentation

May 21, 2019

Abstract: Semantic segmentation is essential to biomedical image analysis. Many recent works mainly focus on integrating the Fully Convolutional Network (FCN) architecture with sophisticated convolution implementation and deep supervision. In this paper, we propose to decompose the single segmentation task into three subsequent sub-tasks: (1) pixel-wise image segmentation, (2) prediction of the class labels of the objects within the image, and (3) classification of the scene to which the image belongs. While these three sub-tasks are trained to optimize their individual loss functions of different perceptual levels, we propose to let them interact through the task-task context ensemble. Moreover, we propose a novel sync-regularization to penalize the deviation between the outputs of the pixel-wise segmentation and the class prediction tasks. These effective regularizations help the FCN utilize context information comprehensively and attain accurate semantic segmentation, even though the number of images for training may be limited in many biomedical applications. We have successfully applied our framework to three diverse 2D/3D medical image datasets, including Robotic Scene Segmentation Challenge 18 (ROBOT18), Brain Tumor Segmentation Challenge 18 (BRATS18), and Retinal Fundus Glaucoma Challenge (REFUGE18). We have achieved top-tier performance in all three challenges.
