Xinyu Mao

AiReview: An Open Platform for Accelerating Systematic Reviews with LLMs

Apr 05, 2025

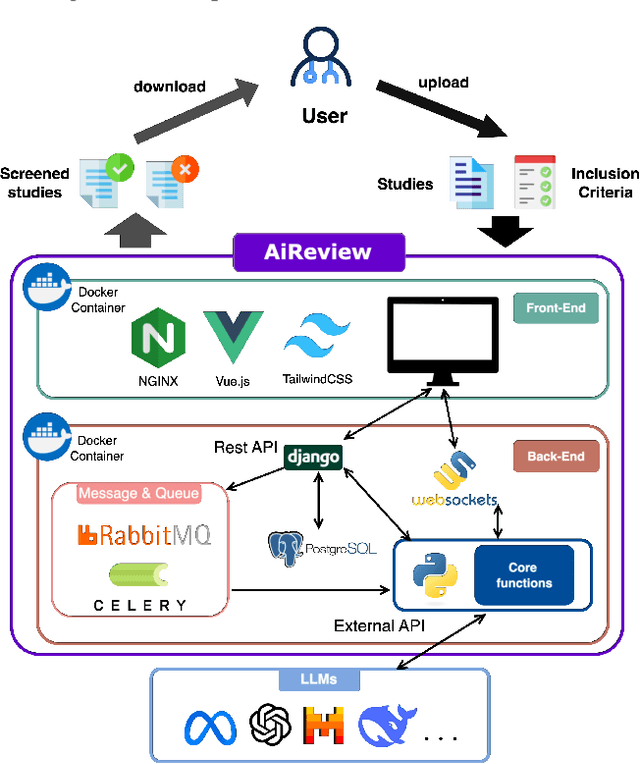

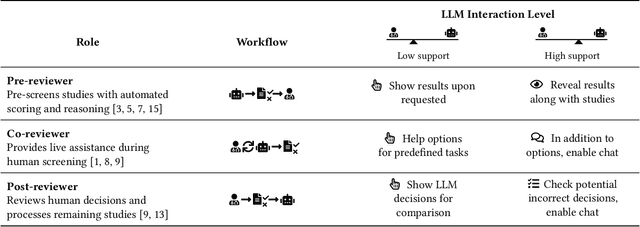

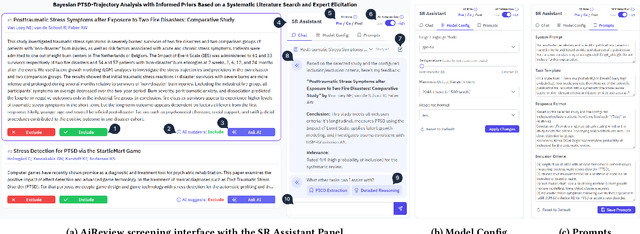

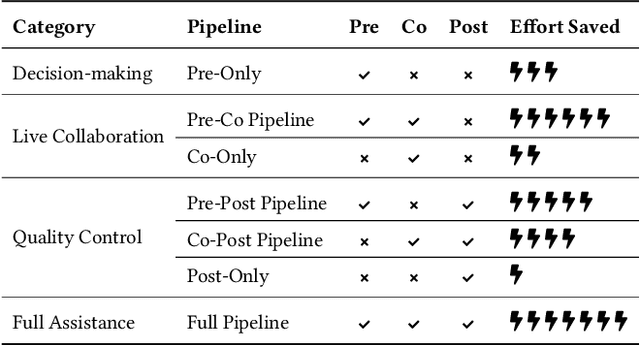

Abstract: Systematic reviews are fundamental to evidence-based medicine. Creating one is time-consuming and labour-intensive, mainly due to the need to screen, or assess, many studies for inclusion in the review. Several tools have been developed to streamline this process, mostly relying on traditional machine learning methods. Large language models (LLMs) have shown potential in further accelerating the screening process. However, no tool currently allows end users to directly leverage LLMs for screening or facilitates systematic and transparent usage of LLM-assisted screening methods. This paper introduces (i) an extensible framework for applying LLMs to systematic review tasks, particularly title and abstract screening, and (ii) a web-based interface for LLM-assisted screening. Together, these elements form AiReview, a novel platform for LLM-assisted systematic review creation. AiReview is the first of its kind to bridge the gap between cutting-edge LLM-assisted screening methods and those that create medical systematic reviews. The tool is available at https://aireview.ielab.io. The source code is also open sourced at https://github.com/ielab/ai-review.

DenseReviewer: A Screening Prioritisation Tool for Systematic Review based on Dense Retrieval

Feb 05, 2025

Abstract: Screening is a time-consuming and labour-intensive yet required task for medical systematic reviews, as tens of thousands of studies often need to be screened. Prioritising relevant studies to be screened allows downstream systematic review creation tasks to start earlier and save time. In previous work, we developed a dense retrieval method to prioritise relevant studies with reviewer feedback during the title and abstract screening stage. Our method outperforms previous active learning methods in both effectiveness and efficiency. In this demo, we extend this prior work by creating (1) a web-based screening tool that enables end-users to screen studies exploiting state-of-the-art methods and (2) a Python library that integrates models and feedback mechanisms and allows researchers to develop and demonstrate new active learning methods. We describe the tool's design and showcase how it can aid screening. The tool is available at https://densereviewer.ielab.io. The source code is also open sourced at https://github.com/ielab/densereviewer.

Procedural Content Generation via Generative Artificial Intelligence

Jul 12, 2024

Abstract: Attempts to use machine learning in procedural content generation (PCG) have been made in the past. In this survey paper, we investigate how generative artificial intelligence (AI), which saw a significant increase in interest in the mid-2010s, is being used for PCG. We review applications of generative AI for the creation of various types of content, including terrains, items, and even storylines. While generative AI is effective for PCG, one significant issue it faces is that building high-performance generative AI requires vast amounts of training data. Because content is generally highly customized, domain-specific training data is scarce, and straightforward approaches to generative AI models may not work well. For PCG research to advance further, issues related to limited training data must be overcome. Thus, we also give special consideration to research that addresses the challenges posed by limited training data.

Dense Retrieval with Continuous Explicit Feedback for Systematic Review Screening Prioritisation

Jun 30, 2024

Abstract: The goal of screening prioritisation in systematic reviews is to identify relevant documents with high recall and rank them in early positions for review. This saves reviewing effort if paired with a stopping criterion, and speeds up review completion if performed alongside downstream tasks. Recent studies have shown that neural models have good potential on this task, but their time-consuming fine-tuning and inference discourage their widespread use for screening prioritisation. In this paper, we propose an alternative approach that still relies on neural models, but leverages dense representations and relevance feedback to enhance screening prioritisation, without the need for costly model fine-tuning and inference. This method exploits continuous relevance feedback from reviewers during document screening to efficiently update the dense query representation, which is then applied to rank the remaining documents to be screened. We evaluate this approach across the CLEF TAR datasets for this task. Results suggest that the investigated dense query-driven approach is more efficient than directly using neural models and shows promising effectiveness compared to previous methods developed on the considered datasets. Our code is available at https://github.com/ielab/dense-screening-feedback.
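The feedback loop described in this abstract (screen a document, update the dense query representation, re-rank what remains) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and is not taken from the paper or its repository: the update rule is a generic Rocchio-style update, the function names `cosine`, `update_query`, and `screen` are hypothetical, the weights `alpha`, `beta`, and `gamma` are arbitrary, and the toy two-dimensional vectors stand in for embeddings that a dense encoder would produce.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def update_query(query, doc, relevant, alpha=1.0, beta=0.5, gamma=0.25):
    """Rocchio-style update (an assumed rule, not the paper's):
    move the query toward a relevant document, away from a non-relevant one."""
    weight = beta if relevant else -gamma
    return [alpha * q + weight * d for q, d in zip(query, doc)]


def screen(query, docs, judge):
    """Repeatedly show the top-ranked unscreened document to the reviewer,
    then fold the judgement back into the query before re-ranking."""
    remaining = set(range(len(docs)))
    order = []
    while remaining:
        # Highest cosine similarity first; ties broken by document index.
        top = min(remaining, key=lambda i: (-cosine(query, docs[i]), i))
        remaining.remove(top)
        order.append(top)
        query = update_query(query, docs[top], judge(top))
    return order


# Toy collection: documents 2 and 3 are the relevant ones.
docs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
relevant_ids = {2, 3}
order = screen([1.0, 0.9], docs, lambda i: i in relevant_ids)
print(order)
```

In this toy run, the relevant document at index 2 is screened before the non-relevant document at index 0, whereas a static ranking by the initial query alone would place it last. No model is fine-tuned at any point; only the query vector changes, which is the efficiency argument the abstract makes.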

A Reproducibility Study of Goldilocks: Just-Right Tuning of BERT for TAR

Jan 16, 2024

Abstract: Screening documents is a tedious and time-consuming aspect of high-recall retrieval tasks, such as compiling a systematic literature review, where the goal is to identify all relevant documents for a topic. To help streamline this process, many Technology-Assisted Review (TAR) methods leverage active learning techniques to reduce the number of documents requiring review. BERT-based models have shown high effectiveness in text classification, leading to interest in their potential use in TAR workflows. In this paper, we investigate recent work that examined the impact of further pre-training epochs on the effectiveness and efficiency of a BERT-based active learning pipeline. We first report that we could replicate the original experiments on two specific TAR datasets, confirming some of the findings: importantly, that further pre-training is critical to high effectiveness, but requires attention in terms of selecting the correct training epoch. We then investigate the generalisability of the pipeline on a different TAR task, that of medical systematic reviews. In this context, we show that there is no need for further pre-training if a domain-specific BERT backbone is used within the active learning pipeline. This finding provides practical implications for using the studied active learning pipeline within domain-specific TAR tasks.

On the Power of SVD in the Stochastic Block Model

Sep 27, 2023

Abstract: A popular heuristic method for improving clustering results is to apply dimensionality reduction before running clustering algorithms. It has been observed that spectral-based dimensionality reduction tools, such as PCA or SVD, improve the performance of clustering algorithms in many applications. This phenomenon indicates that spectral methods not only serve as dimensionality reduction tools, but also contribute to the clustering procedure in some sense. It is an interesting question to understand the behavior of spectral steps in clustering problems. As an initial step in this direction, this paper studies the power of the vanilla-SVD algorithm in the stochastic block model (SBM). We show that, in the symmetric setting, the vanilla-SVD algorithm recovers all clusters correctly. This result answers an open question posed by Van Vu (Combinatorics Probability and Computing, 2018) in the symmetric setting.

SLPT: Selective Labeling Meets Prompt Tuning on Label-Limited Lesion Segmentation

Aug 09, 2023

Abstract: Medical image analysis using deep learning is often challenged by limited labeled data and high annotation costs. Fine-tuning the entire network in label-limited scenarios can lead to overfitting and suboptimal performance. Recently, prompt tuning has emerged as a more promising technique that introduces a few additional tunable parameters as prompts to a task-agnostic pre-trained model, and updates only these parameters using supervision from limited labeled data while keeping the pre-trained model unchanged. However, previous work has overlooked the importance of selective labeling in downstream tasks, which aims to select the most valuable downstream samples for annotation to achieve the best performance with minimum annotation cost. To address this, we propose a framework that combines selective labeling with prompt tuning (SLPT) to boost performance with limited labels. Specifically, we introduce a feature-aware prompt updater to guide prompt tuning and a TandEm Selective LAbeling (TESLA) strategy. TESLA includes unsupervised diversity selection and supervised selection using prompt-based uncertainty. In addition, we propose a diversified visual prompt tuning strategy to provide multi-prompt-based discrepant predictions for TESLA. We evaluate our method on liver tumor segmentation and achieve state-of-the-art performance, outperforming traditional fine-tuning with only 6% of tunable parameters, and achieving 94% of full-data performance by labeling only 5% of the data.

Style Transfer Enabled Sim2Real Framework for Efficient Learning of Robotic Ultrasound Image Analysis Using Simulated Data

May 16, 2023

Abstract: Robotic ultrasound (US) systems have shown great potential to make US examinations easier and more accurate. Recently, various machine learning techniques have been proposed to realize automatic US image interpretation for robotic US acquisition tasks. However, obtaining large amounts of real US imaging data for training is usually expensive or even unfeasible in some clinical applications. An alternative is to build a simulator to generate synthetic US data for training, but the differences between simulated and real US images may result in poor model performance. This work presents a Sim2Real framework to efficiently learn robotic US image analysis tasks based only on simulated data for real-world deployment. A style transfer module is proposed based on unsupervised contrastive learning and used as a preprocessing step to convert the real US images into the simulation style. Thereafter, a task-relevant model is designed to combine CNNs with vision transformers to generate the task-dependent prediction with improved generalization ability. We demonstrate the effectiveness of our method in an image regression task to predict the probe position based on US images in robotic transesophageal echocardiography (TEE). Our results show that using only simulated US data and a small amount of unlabelled real data for training, our method can achieve comparable performance to semi-supervised and fully supervised learning methods. Moreover, the effectiveness of our previously proposed CT-based US image simulation method is also indirectly confirmed.

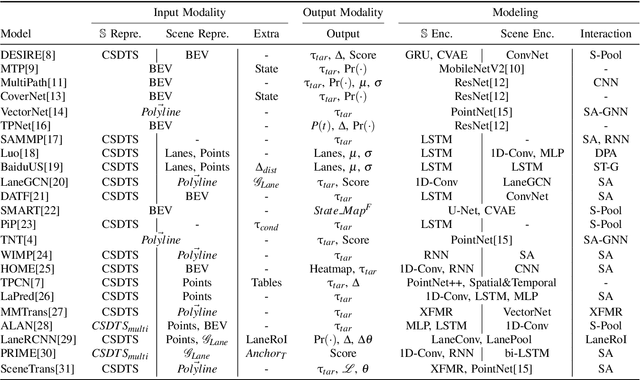

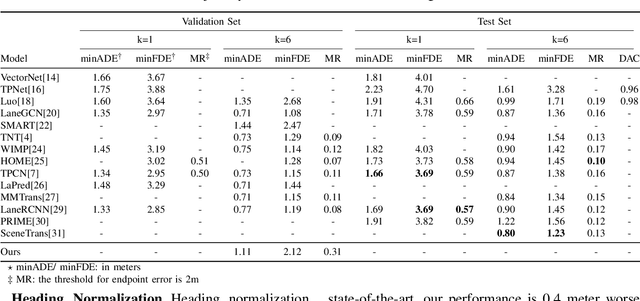

A Survey on Deep-Learning Approaches for Vehicle Trajectory Prediction in Autonomous Driving

Oct 29, 2021

Abstract: With the rapid development of machine learning, autonomous driving has become a hot topic, creating urgent demand for more intelligent perception and planning systems. Self-driving cars can avoid traffic crashes when the future trajectories of surrounding vehicles are predicted precisely. In this work, we review and categorize existing learning-based trajectory forecasting methods from the perspectives of representation, modeling, and learning. Moreover, we make our implementation of Target-driveN Trajectory Prediction publicly available at https://github.com/Henry1iu/TNT-Trajectory-Predition and demonstrate its outstanding performance, as the original code has not been released. We hope this work offers insight to researchers seeking to improve trajectory prediction performance by building on our results.
