Teerapong Leelanupab

Beyond GeneGPT: A Multi-Agent Architecture with Open-Source LLMs for Enhanced Genomic Question Answering

Nov 19, 2025

Abstract: Genomic question answering often requires complex reasoning and integration across diverse biomedical sources. GeneGPT addressed this challenge by combining domain-specific APIs with OpenAI's code-davinci-002 large language model to enable natural language interaction with genomic databases. However, its reliance on a proprietary model limits scalability, increases operational costs, and raises concerns about data privacy and generalization. In this work, we revisit and reproduce GeneGPT in a pilot study using open-source models, including Llama 3.1, Qwen2.5, and Qwen2.5 Coder, within a monolithic architecture; this allows us to identify the limitations of this approach. Building on this foundation, we then develop OpenBioLLM, a modular multi-agent framework that extends GeneGPT by introducing agent specialization for tool routing, query generation, and response validation. This enables coordinated reasoning and role-based task execution. OpenBioLLM matches or outperforms GeneGPT on over 90% of the benchmark tasks, achieving average scores of 0.849 on Gene-Turing and 0.830 on GeneHop, while using smaller open-source models without additional fine-tuning or tool-specific pretraining. OpenBioLLM's modular multi-agent design reduces latency by 40-50% across benchmark tasks, significantly improving efficiency without compromising model capability. The results of our comprehensive evaluation highlight the potential of open-source multi-agent systems for genomic question answering. Code and resources are available at https://github.com/ielab/OpenBioLLM.
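The three roles named in the abstract (tool routing, query generation, response validation) can be pictured as a small pipeline. The sketch below is only an illustration of that role separation and is not taken from the OpenBioLLM code base: the `Pipeline` class, the tool names `lookup` and `alignment`, and all four stub functions are hypothetical, and plain Python functions stand in for what would be LLM-backed agents and real database calls.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Pipeline:
    """Hypothetical role-based pipeline: route -> generate -> execute -> validate."""
    route: Callable[[str], str]            # question -> tool name
    generate: Callable[[str, str], str]    # (tool, question) -> query string
    execute: Callable[[str, str], str]     # (tool, query) -> raw response
    validate: Callable[[str, str], bool]   # (question, response) -> accepted?
    max_attempts: int = 2

    def answer(self, question: str) -> Optional[str]:
        tool = self.route(question)
        # Regenerate the query until the validator accepts a response
        # or the attempt budget is used up.
        for _ in range(self.max_attempts):
            query = self.generate(tool, question)
            response = self.execute(tool, query)
            if self.validate(question, response):
                return response
        return None


def route(question: str) -> str:
    # Assumption: a keyword rule standing in for an LLM routing agent.
    return "alignment" if "sequence" in question.lower() else "lookup"


def generate(tool: str, question: str) -> str:
    # Assumption: a trivial query format, not a real API request.
    return f"{tool}:{question.strip().rstrip('?')}"


def execute(tool: str, query: str) -> str:
    # Stub backend; a real system would call a genomic database here.
    return f"result for {query}"


def validate(question: str, response: str) -> bool:
    # Assumption: a format check standing in for an LLM validation agent.
    return response.startswith("result for")


pipeline = Pipeline(route, generate, execute, validate)
print(pipeline.answer("Which gene contains this sequence?"))
```

Keeping each role behind its own callable means any one of them can be swapped (for example, a different routing model) without touching the others, which is the kind of modularity the abstract describes.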

AiReview: An Open Platform for Accelerating Systematic Reviews with LLMs

Apr 05, 2025

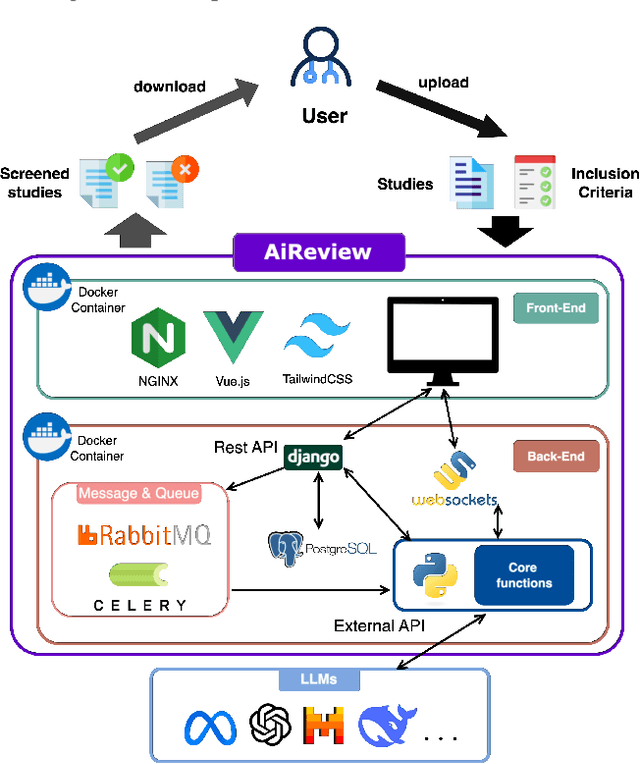

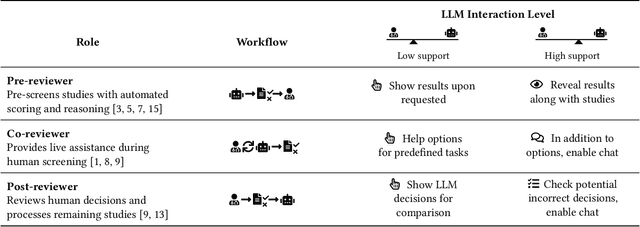

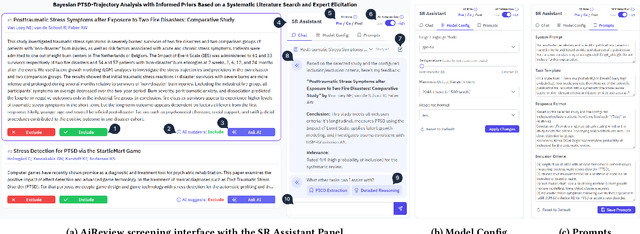

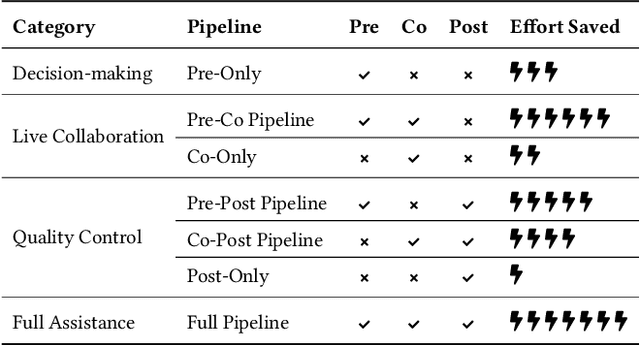

Abstract: Systematic reviews are fundamental to evidence-based medicine. Creating one is time-consuming and labour-intensive, mainly due to the need to screen, or assess, many studies for inclusion in the review. Several tools have been developed to streamline this process, mostly relying on traditional machine learning methods. Large language models (LLMs) have shown potential in further accelerating the screening process. However, no tool currently allows end users to directly leverage LLMs for screening or facilitates systematic and transparent usage of LLM-assisted screening methods. This paper introduces (i) an extensible framework for applying LLMs to systematic review tasks, particularly title and abstract screening, and (ii) a web-based interface for LLM-assisted screening. Together, these elements form AiReview, a novel platform for LLM-assisted systematic review creation. AiReview is the first of its kind to bridge the gap between cutting-edge LLM-assisted screening methods and those who create medical systematic reviews. The tool is available at https://aireview.ielab.io. The source code is also open-sourced at https://github.com/ielab/ai-review.

DenseReviewer: A Screening Prioritisation Tool for Systematic Review based on Dense Retrieval

Feb 05, 2025

Abstract: Screening is a time-consuming and labour-intensive yet required task for medical systematic reviews, as tens of thousands of studies often need to be screened. Prioritising relevant studies to be screened allows downstream systematic review creation tasks to start earlier and save time. In previous work, we developed a dense retrieval method to prioritise relevant studies with reviewer feedback during the title and abstract screening stage. Our method outperforms previous active learning methods in both effectiveness and efficiency. In this demo, we extend this prior work by creating (1) a web-based screening tool that enables end-users to screen studies exploiting state-of-the-art methods and (2) a Python library that integrates models and feedback mechanisms and allows researchers to develop and demonstrate new active learning methods. We describe the tool's design and showcase how it can aid screening. The tool is available at https://densereviewer.ielab.io. The source code is also open-sourced at https://github.com/ielab/densereviewer.
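To make the idea of prioritising studies with reviewer feedback concrete, here is a minimal sketch that re-ranks unscreened studies after each judgment. It uses a classic Rocchio-style query update over dense vectors as a stand-in; the abstract does not specify the update rule, so this should not be read as the DenseReviewer method. The function names, the weights `alpha`, `beta`, `gamma`, and the toy two-dimensional vectors are all assumptions made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def update_query(query, relevant, irrelevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Rocchio-style update: move the query toward the centroid of studies
    judged relevant and away from the centroid of those judged irrelevant."""
    def centroid(vectors):
        if not vectors:
            return [0.0] * len(query)
        return [sum(col) / len(vectors) for col in zip(*vectors)]

    pos, neg = centroid(relevant), centroid(irrelevant)
    return [alpha * q + beta * p - gamma * n for q, p, n in zip(query, pos, neg)]


def rank_unscreened(query, studies, screened_ids):
    """Return ids of studies not yet screened, most similar to the query first."""
    candidates = [(sid, vec) for sid, vec in studies.items() if sid not in screened_ids]
    candidates.sort(key=lambda item: cosine(query, item[1]), reverse=True)
    return [sid for sid, _ in candidates]


# Toy study embeddings (hypothetical); real ones would come from a dense encoder.
studies = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.9, 0.1], "d": [0.1, 0.9]}
query = [0.5, 0.5]

# The reviewer marks "a" as relevant and "b" as irrelevant.
query = update_query(query, relevant=[studies["a"]], irrelevant=[studies["b"]])
ranking = rank_unscreened(query, studies, screened_ids={"a", "b"})
print(ranking)
```

After the feedback step the query leans toward study "a", so the remaining study closest to it is shown to the reviewer first.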

Embark on DenseQuest: A System for Selecting the Best Dense Retriever for a Custom Collection

Jul 09, 2024

Abstract: In this demo we present a web-based application for selecting an effective pre-trained dense retriever to use on a private collection. Our system, DenseQuest, provides unsupervised selection and ranking capabilities to predict the best dense retriever among a pool of available dense retrievers, tailored to an uploaded target collection. DenseQuest implements a number of existing approaches, including a recent, highly effective method powered by Large Language Models (LLMs), which requires neither queries nor relevance judgments. The system is designed to be intuitive and easy to use for information retrieval engineers and researchers who need to identify a general-purpose dense retrieval model to encode or search a new private target collection. Our demonstration illustrates the conceptual architecture and the different use-case scenarios of the system, which is implemented on the cloud to enable universal access and use. DenseQuest is available at https://densequest.ielab.io.
