Xingyu Zhu

Spider-Sense: Intrinsic Risk Sensing for Efficient Agent Defense with Hierarchical Adaptive Screening

Feb 05, 2026

Abstract:As large language models (LLMs) evolve into autonomous agents, their real-world applicability has expanded significantly, accompanied by new security challenges. Most existing agent defense mechanisms adopt a mandatory checking paradigm, in which security validation is forcibly triggered at predefined stages of the agent lifecycle. In this work, we argue that effective agent security should be intrinsic and selective rather than architecturally decoupled and mandatory. We propose Spider-Sense, an event-driven defense framework based on Intrinsic Risk Sensing (IRS), which allows agents to maintain latent vigilance and trigger defenses only upon risk perception. Once triggered, Spider-Sense invokes a hierarchical defense mechanism that trades off efficiency and precision: it resolves known patterns via lightweight similarity matching while escalating ambiguous cases to deep internal reasoning, thereby eliminating reliance on external models. To facilitate rigorous evaluation, we introduce S$^2$Bench, a lifecycle-aware benchmark featuring realistic tool execution and multi-stage attacks. Extensive experiments demonstrate that Spider-Sense achieves competitive or superior defense performance, attaining the lowest Attack Success Rate (ASR) and False Positive Rate (FPR), with only a marginal latency overhead of 8.3%.
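The two-tier screening described in this abstract (cheap similarity matching first, escalation of ambiguous cases) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the cosine-similarity measure, the two thresholds `hi` and `lo`, and the `deep_check` callback are all assumptions made for illustration.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sqrt(sum(x * x for x in a))
    nb = sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def screen(event_vec, known_attacks, deep_check, hi=0.9, lo=0.5):
    """Hypothetical two-tier screen returning "block" or "allow".

    Tier 1: compare the event embedding against known attack patterns.
    Tier 2: only ambiguous scores (between lo and hi) reach deep_check,
    a caller-supplied stand-in for the slower reasoning step.
    """
    best = max((cosine(event_vec, k) for k in known_attacks), default=0.0)
    if best >= hi:   # close match to a known pattern: decide cheaply
        return "block"
    if best < lo:    # nothing similar on record: decide cheaply
        return "allow"
    return "block" if deep_check(event_vec) else "allow"
```

In this sketch the expensive check runs only for scores that fall between the two thresholds, which is the efficiency/precision trade-off the abstract refers to; the threshold values themselves are arbitrary.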

Contextual Drag: How Errors in the Context Affect LLM Reasoning

Feb 04, 2026

Abstract:Central to many self-improvement pipelines for large language models (LLMs) is the assumption that models can improve by reflecting on past mistakes. We study a phenomenon termed contextual drag: the presence of failed attempts in the context biases subsequent generations toward structurally similar errors. Across evaluations of 11 proprietary and open-weight models on 8 reasoning tasks, contextual drag induces 10-20% performance drops, and iterative self-refinement in models with severe contextual drag can collapse into self-deterioration. Structural analysis using tree edit distance reveals that subsequent reasoning trajectories inherit structurally similar error patterns from the context. We demonstrate that neither external feedback nor successful self-verification suffices to eliminate this effect. While mitigation strategies such as fallback-behavior fine-tuning and context denoising yield partial improvements, they fail to fully restore baseline performance, positioning contextual drag as a persistent failure mode in current reasoning architectures.

On the Power of Context-Enhanced Learning in LLMs

Mar 03, 2025

Abstract:We formalize a new concept for LLMs, context-enhanced learning. It involves standard gradient-based learning on text except that the context is enhanced with additional data on which no auto-regressive gradients are computed. This setting is a gradient-based analog of usual in-context learning (ICL) and appears in some recent works. Using a multi-step reasoning task, we prove in a simplified setting that context-enhanced learning can be exponentially more sample-efficient than standard learning when the model is capable of ICL. At a mechanistic level, we find that the benefit of context-enhancement arises from a more accurate gradient learning signal. We also experimentally demonstrate that it appears hard to detect or recover learning materials that were used in the context during training. This may have implications for data security as well as copyright.
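One common way to realize "no auto-regressive gradients on the context" is to mask the context positions out of the training loss. The sketch below assumes that reading; `masked_nll`, `token_probs`, and `is_context` are hypothetical names, and the probabilities stand in for whatever a model assigns to each correct next token.

```python
from math import log


def masked_nll(token_probs, is_context):
    """Mean negative log-likelihood over non-context tokens only.

    token_probs[i] is the probability assigned to the correct i-th token;
    is_context[i] is True for tokens of the added context, which are
    excluded from the loss and therefore contribute no loss term.
    """
    losses = [-log(p) for p, ctx in zip(token_probs, is_context) if not ctx]
    return sum(losses) / len(losses) if losses else 0.0
```

The model still conditions on the context tokens when predicting the later ones; they are simply not prediction targets themselves.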

DreaMark: Rooting Watermark in Score Distillation Sampling Generated Neural Radiance Fields

Dec 18, 2024

Abstract:Recent advancements in text-to-3D generation can generate neural radiance fields (NeRFs) with score distillation sampling, enabling 3D asset creation without real-world data capture. With the rapid advancement in NeRF generation quality, protecting the copyright of the generated NeRF has become increasingly important. While prior works can watermark NeRFs in a post-generation way, they suffer from two vulnerabilities. First, a delay lies between NeRF generation and watermarking because the secret message is embedded into the NeRF model post-generation through fine-tuning. Second, generating a non-watermarked NeRF as an intermediate creates a potential vulnerability for theft. To address both issues, we propose Dreamark to embed a secret message by backdooring the NeRF during NeRF generation. In detail, we first pre-train a watermark decoder. Then, Dreamark generates backdoored NeRFs in such a way that the target secret message can be verified by the pre-trained watermark decoder on an arbitrary trigger viewport. We evaluate the generation quality and watermark robustness against image- and model-level attacks. Extensive experiments show that the watermarking process does not degrade the generation quality, and the watermark achieves over 90% accuracy under both image-level attacks (e.g., Gaussian noise) and model-level attacks (e.g., pruning attack).

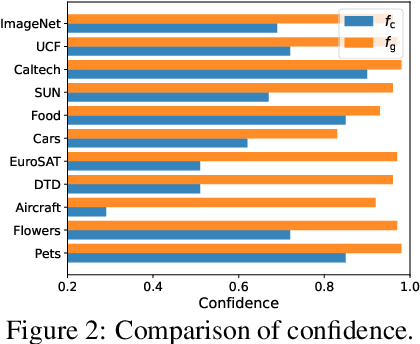

Enhancing Zero-Shot Vision Models by Label-Free Prompt Distribution Learning and Bias Correcting

Oct 25, 2024

Abstract:Vision-language models, such as CLIP, have shown impressive generalization capacities when using appropriate text descriptions. While optimizing prompts on downstream labeled data has proven effective in improving performance, these methods entail labor costs for annotations and are limited by their quality. Additionally, since CLIP is pre-trained on highly imbalanced Web-scale data, it suffers from inherent label bias that leads to suboptimal performance. To tackle the above challenges, we propose a label-Free prompt distribution learning and bias correction framework, dubbed **Frolic**, which boosts zero-shot performance without the need for labeled data. Specifically, Frolic learns distributions over prompt prototypes to capture diverse visual representations and adaptively fuses these with the original CLIP through confidence matching. This fused model is further enhanced by correcting label bias via a label-free logit adjustment. Notably, our method is not only training-free but also circumvents the necessity for hyper-parameter tuning. Extensive experimental results across 16 datasets demonstrate the efficacy of our approach, particularly outperforming the state-of-the-art by an average of $2.6\%$ on 10 datasets with CLIP ViT-B/16 and achieving an average margin of $1.5\%$ on ImageNet and its five distribution shifts with CLIP ViT-B/16. Code is available at https://github.com/zhuhsingyuu/Frolic.
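To give a feel for what a label-free logit adjustment can look like, the sketch below estimates a class prior from the model's own predictions on unlabeled inputs and subtracts its logarithm from each logit. This is a simplified stand-in, not the estimator used in Frolic; the function names and the mean-of-softmax prior are assumptions.

```python
from math import exp, log


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    e = [exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def debias(logits_batch):
    """Subtract the log of an estimated class prior from every logit.

    The prior is the average predicted probability per class over the
    unlabeled batch (an assumption made for this sketch), so classes the
    model over-predicts are penalized without using any labels.
    """
    probs = [softmax(z) for z in logits_batch]
    n, k = len(probs), len(probs[0])
    prior = [sum(p[c] for p in probs) / n for c in range(k)]
    return [[z[c] - log(prior[c]) for c in range(k)] for z in logits_batch]
```

On a batch where one class dominates the predictions, the adjustment shifts every sample's logits toward the under-predicted classes by the same per-class offset.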

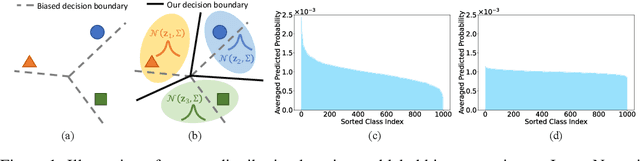

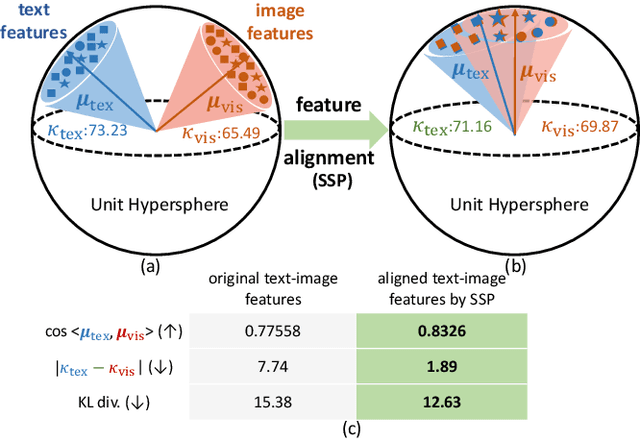

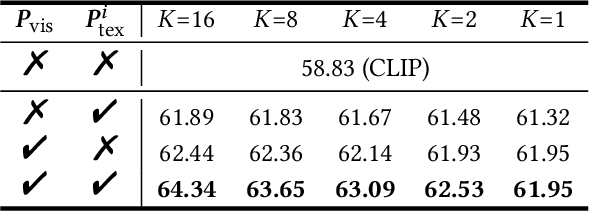

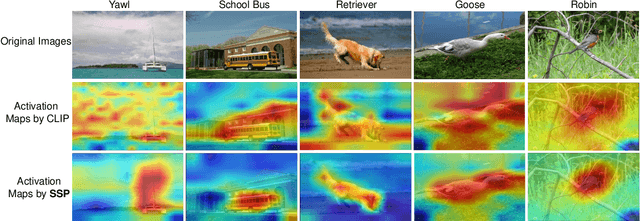

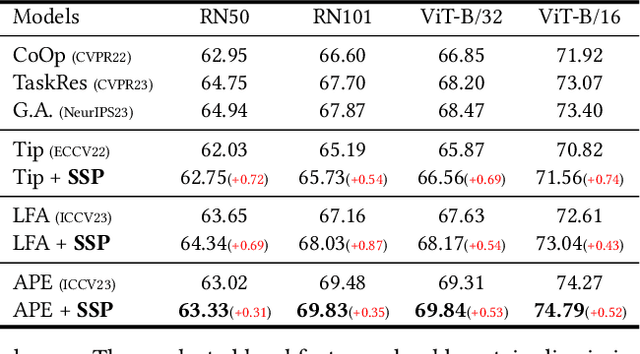

Selective Vision-Language Subspace Projection for Few-shot CLIP

Jul 26, 2024

Abstract:Vision-language models such as CLIP are capable of mapping data from different modalities into a unified feature space, enabling zero/few-shot inference by measuring the similarity of given images and texts. However, most existing methods overlook modality gaps in CLIP's encoded features, which manifest as text and image features lying far apart from each other, resulting in limited classification performance. To tackle this issue, we introduce a method called Selective Vision-Language Subspace Projection (SSP), which incorporates local image features and utilizes them as a bridge to enhance the alignment between image-text pairs. Specifically, our SSP framework comprises two parallel modules: a vision projector and a language projector. Both projectors utilize local image features to span the respective subspaces for images and texts, thereby projecting the image and text features into their respective subspaces to achieve alignment. Moreover, our approach entails only training-free matrix calculations and can be seamlessly integrated into advanced CLIP-based few-shot learning frameworks. Extensive experiments on 11 datasets have demonstrated SSP's superior text-image alignment capabilities, outperforming the state-of-the-art alignment methods. The code is available at https://github.com/zhuhsingyuu/SSP
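The core linear-algebra step mentioned here, projecting a feature onto the subspace spanned by a set of other features, can be sketched in a few lines. This shows generic orthogonal projection via Gram-Schmidt only; how SSP selects local features and builds its projectors is not reproduced, and `project` and its arguments are hypothetical names.

```python
def project(v, spanning_vectors, eps=1e-12):
    """Orthogonal projection of v onto span(spanning_vectors).

    An orthonormal basis is built with Gram-Schmidt; vectors that are
    (numerically) linear combinations of earlier ones are skipped.
    """
    ortho = []
    for b in spanning_vectors:
        w = list(b)
        for q in ortho:
            c = sum(x * y for x, y in zip(w, q))
            w = [x - c * y for x, y in zip(w, q)]
        norm = sum(x * x for x in w) ** 0.5
        if norm > eps:
            ortho.append([x / norm for x in w])
    out = [0.0] * len(v)
    for q in ortho:
        c = sum(x * y for x, y in zip(v, q))
        out = [o + c * y for o, y in zip(out, q)]
    return out
```

In the setting of the abstract, `spanning_vectors` would play the role of local image features and `v` the image or text feature being aligned; that mapping is an interpretation, not a statement of the released code.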

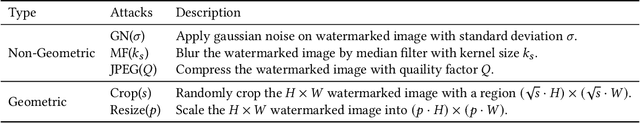

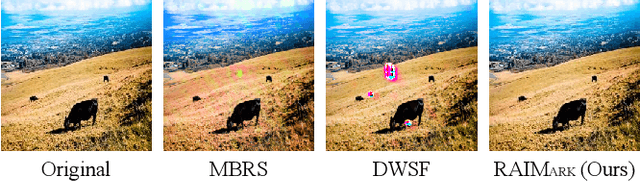

Achieving Resolution-Agnostic DNN-based Image Watermarking: A Novel Perspective of Implicit Neural Representation

May 14, 2024

Abstract:DNN-based watermarking methods are rapidly developing and delivering impressive performances. Recent advances achieve resolution-agnostic image watermarking by reducing the variant resolution watermarking problem to a fixed resolution watermarking problem. However, such a reduction process can potentially introduce artifacts and low robustness. To address this issue, we propose the first, to the best of our knowledge, Resolution-Agnostic Image WaterMarking (RAIMark) framework by watermarking the implicit neural representation (INR) of an image. Unlike previous methods, our method does not rely on the previous reduction process; it directly watermarks the continuous signal instead of image pixels, thus achieving resolution-agnostic watermarking. Specifically, given an arbitrary-resolution image, we fit an INR for the target image. As a continuous signal, such an INR can be sampled to obtain images with variant resolutions. Then, we quickly fine-tune the fitted INR to get a watermarked INR conditioned on a binary secret message. A pre-trained watermark decoder extracts the hidden message from any sampled images with arbitrary resolutions. By directly watermarking the INR, we achieve resolution-agnostic watermarking with increased robustness. Extensive experiments show that our method outperforms previous methods with significant improvements, raising bit accuracy by 7% to 29% on average. Notably, we observe that previous methods are vulnerable to at least one watermarking attack (e.g. JPEG, crop, resize), while ours is robust against all watermarking attacks.
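The claim that an INR "can be sampled to obtain images with variant resolutions" follows from treating the image as a function of continuous coordinates. The sketch below makes that concrete with a toy closed-form function standing in for a fitted network; `toy_inr` and `sample` are hypothetical names and no watermarking is performed.

```python
def toy_inr(u, v):
    """Stand-in for a fitted INR: maps coordinates in [0, 1]^2 to an intensity."""
    return 0.5 * u + 0.5 * v


def sample(inr, height, width):
    """Evaluate the continuous signal at pixel centers of a height x width grid."""
    return [
        [inr((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
        for y in range(height)
    ]


# The same signal rendered at two resolutions, with no resizing step in between.
small = sample(toy_inr, 2, 2)
large = sample(toy_inr, 8, 8)
```

Because every resolution is read off the same underlying function, anything embedded in that function is present in each sampled image, which is the intuition behind watermarking the INR rather than a fixed pixel grid.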

Boosting Few-Shot Learning via Attentive Feature Regularization

Mar 23, 2024

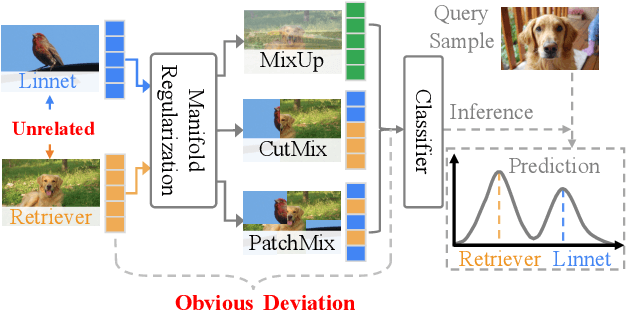

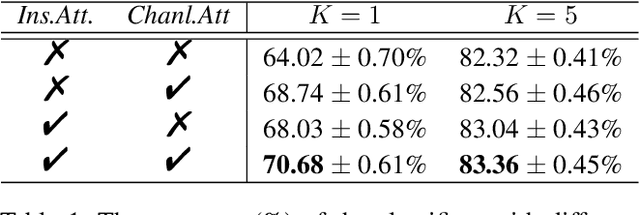

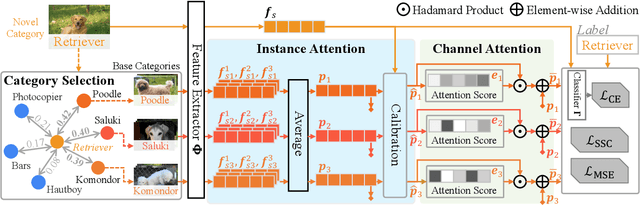

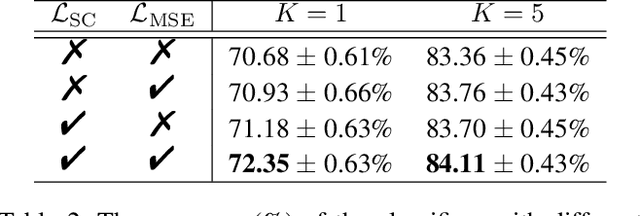

Abstract:Few-shot learning (FSL) based on manifold regularization aims to improve the recognition capacity of novel objects with limited training samples by mixing two samples from different categories with a blending factor. However, this mixing operation weakens the feature representation due to the linear interpolation and the overlooking of the importance of specific channels. To solve these issues, this paper proposes attentive feature regularization (AFR) which aims to improve the feature representativeness and discriminability. In our approach, we first calculate the relations between different categories of semantic labels to pick out the related features used for regularization. Then, we design two attention-based calculations at both the instance and channel levels. These calculations enable the regularization procedure to focus on two crucial aspects: the feature complementarity through adaptive interpolation in related categories and the emphasis on specific feature channels. Finally, we combine these regularization strategies to significantly improve the classifier performance. Empirical studies on several popular FSL benchmarks demonstrate the effectiveness of AFR, which improves the recognition accuracy of novel categories without the need to retrain any feature extractor, especially in the 1-shot setting. Furthermore, the proposed AFR can seamlessly integrate into other FSL methods to improve classification performance.

Towards Function Space Mesh Watermarking: Protecting the Copyright of Signed Distance Fields

Nov 18, 2023

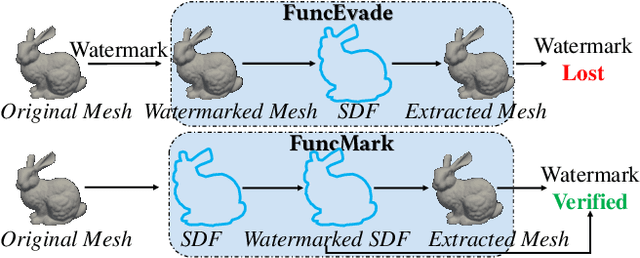

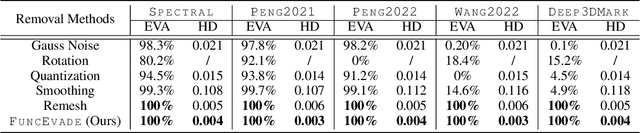

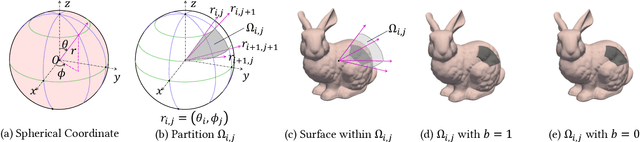

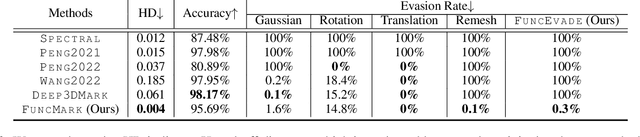

Abstract:The signed distance field (SDF) represents 3D geometries in continuous function space. Due to its continuous nature, explicit 3D models (e.g., meshes) can be extracted from it at arbitrary resolution, which means losing the SDF is equivalent to losing the mesh. Recent research has shown meshes can also be extracted from SDF-enhanced neural radiance fields (NeRF). This raises the alarm that the original mesh can be extracted from any implicit neural representation with SDF enhancement, which makes protecting the SDF's intellectual property an urgent issue. This paper proposes FuncMark, a robust and invisible watermarking method to protect the copyright of signed distance fields by leveraging analytic on-surface deformations to embed binary watermark messages. Such deformation can survive isosurfacing and thus be inherited by the extracted meshes for further watermark message decoding. Our method can recover the message with high-resolution meshes extracted from SDFs and detect the watermark even when mesh vertices are extremely sparse. Furthermore, our method is robust even when various distortions (including remeshing) are encountered. Extensive experiments demonstrate that FuncMark significantly outperforms state-of-the-art approaches and the message is still detectable even when only 50 vertex samples are given.

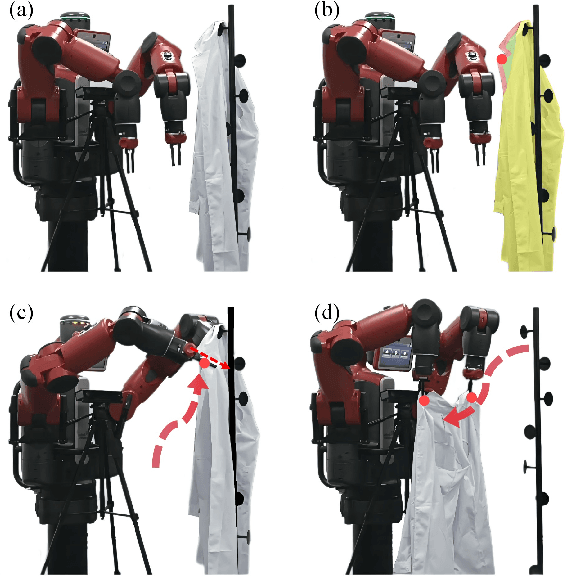

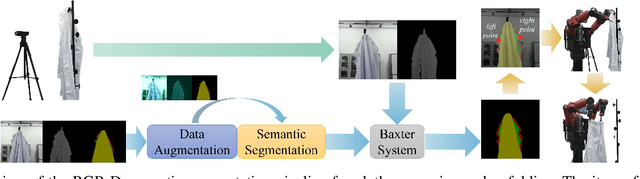

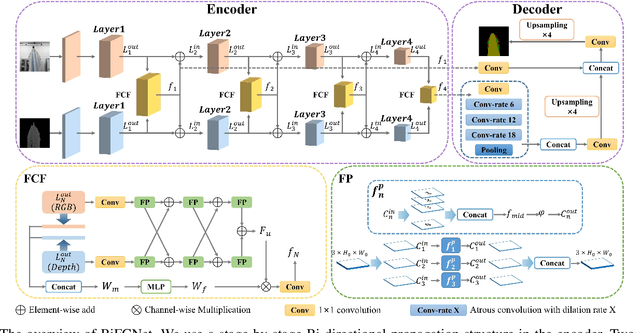

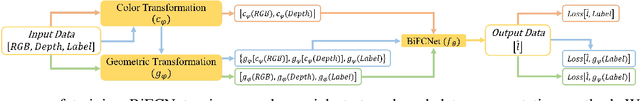

Clothes Grasping and Unfolding Based on RGB-D Semantic Segmentation

May 08, 2023

Abstract:Clothes grasping and unfolding is a core step in robotic-assisted dressing. Most existing works leverage depth images of clothes to train a deep learning-based model to recognize suitable grasping points. These methods often utilize physics engines to synthesize depth images to reduce the cost of real labeled data collection. However, the natural domain gap between synthetic and real images often leads to poor performance of these methods on real data. Furthermore, these approaches often struggle in scenarios where grasping points are occluded by the clothing item itself. To address the above challenges, we propose a novel Bi-directional Fractal Cross Fusion Network (BiFCNet) for semantic segmentation, enabling recognition of graspable regions in order to provide more possibilities for grasping. Instead of using depth images only, we also utilize RGB images with rich color features as input to our network, in which the Fractal Cross Fusion (FCF) module fuses RGB and depth data by considering global complex features based on fractal geometry. To reduce the cost of real data collection, we further propose a data augmentation method based on an adversarial strategy, in which the color and geometric transformations simultaneously process RGB and depth data while maintaining the label correspondence. Finally, we present a pipeline for clothes grasping and unfolding from the perspective of semantic segmentation, through the addition of a strategy for grasp point selection from segmentation regions based on clothing flatness measures, while taking into account the grasping direction. We evaluate our BiFCNet on the public dataset NYUDv2 and obtain performance comparable to current state-of-the-art models. We also deploy our model on a Baxter robot, running extensive grasping and unfolding experiments as part of our ablation studies, achieving an 84% success rate.