Wenxiang Cong

Diffusion Prior Regularized Iterative Reconstruction for Low-dose CT

Oct 10, 2023

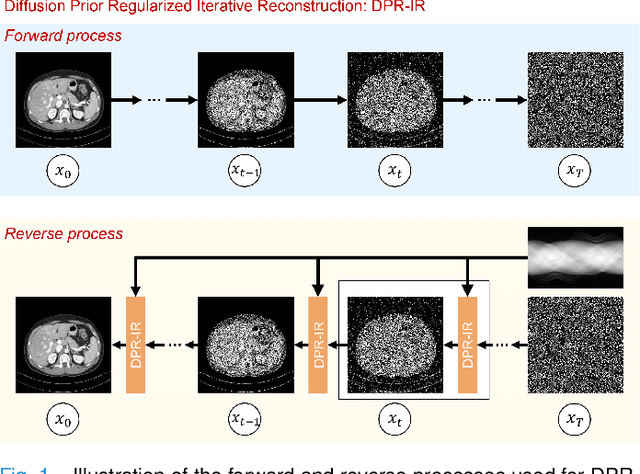

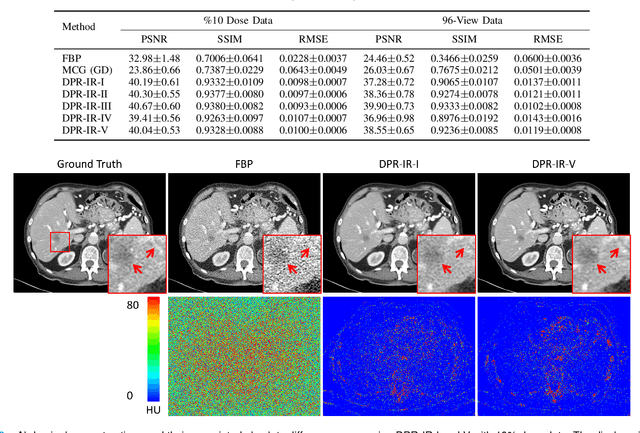

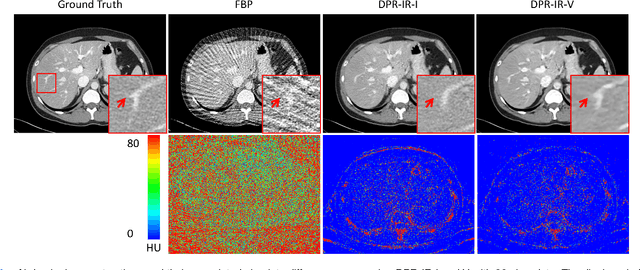

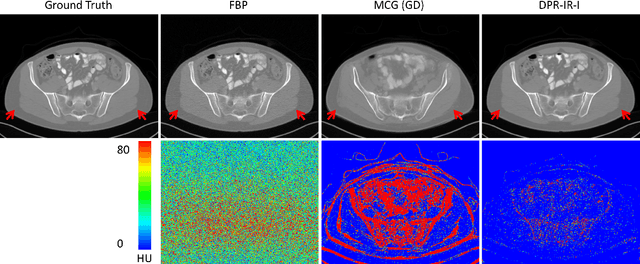

Abstract: Computed tomography (CT) involves a patient's exposure to ionizing radiation. To reduce the radiation dose, we can either lower the X-ray photon count or down-sample projection views. However, either approach often compromises image quality. To address this challenge, we introduce an iterative reconstruction algorithm regularized by a diffusion prior. Drawing on the strong generative capability of the denoising diffusion probabilistic model (DDPM), we merge it with a reconstruction procedure that prioritizes data fidelity. This fusion capitalizes on the merits of both techniques, delivering exceptional reconstruction results in an unsupervised framework. To further improve the efficiency of the reconstruction process, we incorporate the Nesterov momentum acceleration technique, which enables high-quality diffusion sampling in fewer steps. As demonstrated in our experiments, our method offers a potential pathway to high-definition CT image reconstruction with minimized radiation.
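To make the role of Nesterov momentum in a data-fidelity step concrete, here is a minimal sketch, not the authors' implementation. It assumes a plain least-squares fidelity term 0.5·||Ax − b||² with a tiny dense matrix `A` standing in for the CT system matrix, uses the common FISTA-style momentum schedule, and omits the diffusion prior entirely. The function name, step size, and iteration count are hypothetical.

```python
def matvec(A, x):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def transpose(A):
    """Transpose a dense matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]


def nesterov_least_squares(A, b, step, n_iter=500):
    """Minimize 0.5 * ||A x - b||^2 by gradient descent with Nesterov momentum.

    Assumption: `step` is at most 1 / L, where L is the largest eigenvalue
    of A^T A. The momentum schedule is the FISTA-style one.
    """
    At = transpose(A)
    x = [0.0] * len(A[0])   # current iterate
    y = list(x)             # extrapolated (look-ahead) point
    t = 1.0                 # momentum parameter
    for _ in range(n_iter):
        # Gradient of the fidelity term, evaluated at the look-ahead point.
        residual = [p - q for p, q in zip(matvec(A, y), b)]
        grad = matvec(At, residual)
        x_next = [yi - step * gi for yi, gi in zip(y, grad)]
        # Update the momentum weight and extrapolate along the last move.
        t_next = (1.0 + (1.0 + 4.0 * t * t) ** 0.5) / 2.0
        beta = (t - 1.0) / t_next
        y = [xn + beta * (xn - xo) for xn, xo in zip(x_next, x)]
        x, t = x_next, t_next
    return x


# Toy usage: three "measurements" of a two-pixel "image".
A = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [2.0, -2.0, -1.0]
estimate = nesterov_least_squares(A, b, step=0.18)
```

In a regularized scheme such as the one described in the abstract, an update of this kind would alternate with a prior step; how the two are interleaved is not specified here and is left out of the sketch.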

Sub-volume-based Denoising Diffusion Probabilistic Model for Cone-beam CT Reconstruction from Incomplete Data

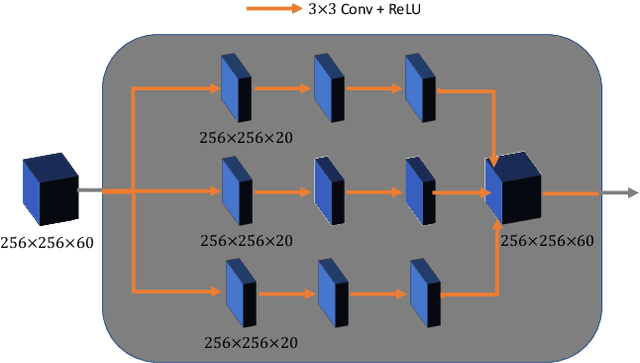

Mar 25, 2023

Abstract: Deep learning (DL) has emerged as a new approach in the field of computed tomography (CT) with many applications. A primary example is CT reconstruction from incomplete data, such as sparse-view image reconstruction. However, applying DL to sparse-view cone-beam CT (CBCT) remains challenging. Many models learn the mapping from sparse-view CT images to the ground truth but often fail to achieve satisfactory performance. Incorporating sinogram data and performing dual-domain reconstruction improves image quality and suppresses artifacts, but a straightforward 3D implementation requires storing an entire 3D sinogram in memory along with the many parameters of dual-domain networks. This remains a major challenge, limiting further research, development and applications. In this paper, we propose a sub-volume-based 3D denoising diffusion probabilistic model (DDPM) for CBCT image reconstruction from down-sampled data. Our DDPM network, trained on data cubes extracted from paired fully sampled sinograms and down-sampled sinograms, is employed to inpaint down-sampled sinograms. Our method divides the entire sinogram into overlapping cubes and processes them in parallel on multiple GPUs, successfully overcoming the memory limitation. Experimental results demonstrate that our approach effectively suppresses few-view artifacts while preserving textural details faithfully.

Patch-Based Denoising Diffusion Probabilistic Model for Sparse-View CT Reconstruction

Nov 18, 2022

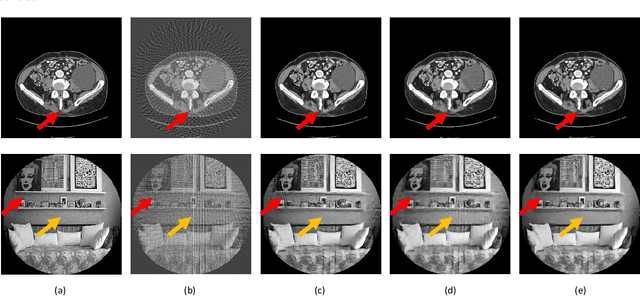

Abstract: Sparse-view computed tomography (CT) can greatly reduce radiation dose but suffers from severe image artifacts. Recently, deep learning-based methods for sparse-view CT reconstruction have attracted major attention. However, neural networks often have a limited ability to remove the artifacts when they only work in the image domain. Deep learning-based sinogram processing can achieve better anti-artifact performance, but it inevitably requires feature maps of the whole image in video memory, which makes handling large-scale or three-dimensional (3D) images rather challenging. In this paper, we propose a patch-based denoising diffusion probabilistic model (DDPM) for sparse-view CT reconstruction. A DDPM network based on patches extracted from fully sampled projection data is trained and then used to inpaint down-sampled projection data. The network does not require paired fully sampled and down-sampled data, enabling unsupervised learning. Since the data processing is patch-based, the deep learning workflow can be distributed in parallel, overcoming the memory problem of large-scale data. Our experiments show that the proposed method can effectively suppress few-view artifacts while faithfully preserving textural details.
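The patch-based workflow can be illustrated with a small sketch of overlapping patch extraction and reassembly. This is an illustration under stated assumptions rather than the paper's code: the 2D data are a plain list of rows, overlapping regions are blended by simple averaging, and the function names, patch size, and stride are hypothetical. The per-patch network that would process each block is omitted.

```python
def patch_starts(length, patch, stride):
    """Start indices of overlapping windows that cover [0, length).

    The last window is shifted back so that it ends exactly at `length`.
    """
    if patch > length:
        raise ValueError("patch size must not exceed the array length")
    starts = list(range(0, length - patch + 1, stride))
    if starts[-1] != length - patch:
        starts.append(length - patch)
    return starts


def extract_patches(image, patch, stride):
    """Return a list of ((row, col), block) pairs of square patches."""
    rows = patch_starts(len(image), patch, stride)
    cols = patch_starts(len(image[0]), patch, stride)
    return [((r, c), [row[c:c + patch] for row in image[r:r + patch]])
            for r in rows for c in cols]


def reassemble(patches, height, width):
    """Place patches back and average the values where they overlap."""
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for (r, c), block in patches:
        for i, block_row in enumerate(block):
            for j, value in enumerate(block_row):
                total[r + i][c + j] += value
                count[r + i][c + j] += 1
    return [[t / n for t, n in zip(t_row, n_row)]
            for t_row, n_row in zip(total, count)]


# Toy usage: a 5 x 7 "sinogram" split into 3 x 3 patches with stride 2.
sinogram = [[10 * r + c for c in range(7)] for r in range(5)]
patches = extract_patches(sinogram, patch=3, stride=2)
restored = reassemble(patches, height=5, width=7)
```

Because each patch carries its own position, the patches can be processed independently (for example on separate devices) before reassembly, which is the property the abstract relies on to sidestep the memory limit.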

Phase function estimation from a diffuse optical image via deep learning

Nov 16, 2021

Abstract: The phase function is a key element of a light propagation model for Monte Carlo (MC) simulation, which is usually fitted with an analytic function with associated parameters. In recent years, machine learning methods were reported to estimate the parameters of the phase function of a particular form such as the Henyey-Greenstein phase function but, to our knowledge, no studies have been performed to determine the form of the phase function. Here we design a convolutional neural network to estimate the phase function from a diffuse optical image without any explicit assumption on the form of the phase function. Specifically, we use a Gaussian mixture model as an example to represent the phase function generally and learn the model parameters accurately. The Gaussian mixture model is selected because it provides the analytic expression of phase function to facilitate deflection angle sampling in MC simulation, and does not significantly increase the number of free parameters. Our proposed method is validated on MC-simulated reflectance images of typical biological tissues using the Henyey-Greenstein phase function with different anisotropy factors. The effects of field of view (FOV) and spatial resolution on the errors are analyzed to optimize the estimation method. The mean squared error of the phase function is 0.01 and the relative error of the anisotropy factor is 3.28%.
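For readers unfamiliar with the Henyey-Greenstein phase function used in the validation, the following sketch shows its standard form, the usual inverse-CDF rule for sampling the deflection angle in MC simulation, and a numerical check that the mean cosine equals the anisotropy factor g. It covers only the textbook Henyey-Greenstein case, not the Gaussian mixture model or the network from the paper; the function names and the normalization over cos θ in [-1, 1] are choices made for this illustration.

```python
def hg_phase(cos_theta, g):
    """Henyey-Greenstein phase function of cos(theta).

    Normalized so that its integral over cos(theta) in [-1, 1] equals 1.
    """
    return (1.0 - g * g) / (2.0 * (1.0 + g * g - 2.0 * g * cos_theta) ** 1.5)


def sample_cos_theta(g, xi):
    """Map a uniform number xi in [0, 1] to cos(theta) by inverting the CDF."""
    if abs(g) < 1e-6:
        return 2.0 * xi - 1.0          # isotropic scattering
    frac = (1.0 - g * g) / (1.0 - g + 2.0 * g * xi)
    return (1.0 + g * g - frac * frac) / (2.0 * g)


def mean_cosine(g, n=20000):
    """Midpoint-rule estimate of the mean of cos(theta) under hg_phase."""
    h = 2.0 / n
    total = 0.0
    for k in range(n):
        mu = -1.0 + (k + 0.5) * h
        total += mu * hg_phase(mu, g) * h
    return total


# Toy usage: the mean cosine should be close to the anisotropy factor g.
g = 0.8
estimated_g = mean_cosine(g)
```

The closed-form sampling rule is what makes an analytic phase function convenient in MC simulation, which is the same reason the abstract gives for choosing a Gaussian mixture model.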

Deep Interactive Denoiser for X-Ray Computed Tomography

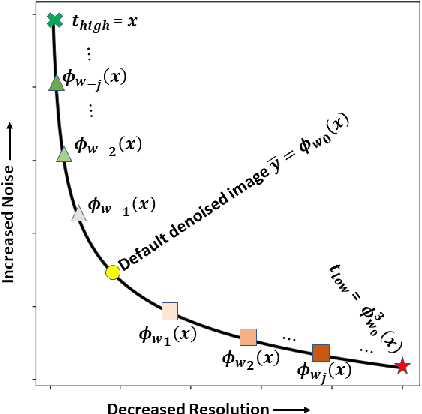

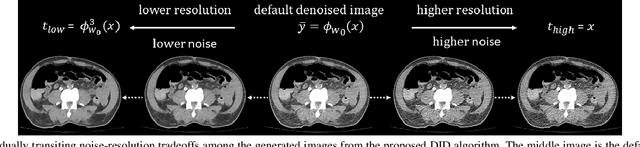

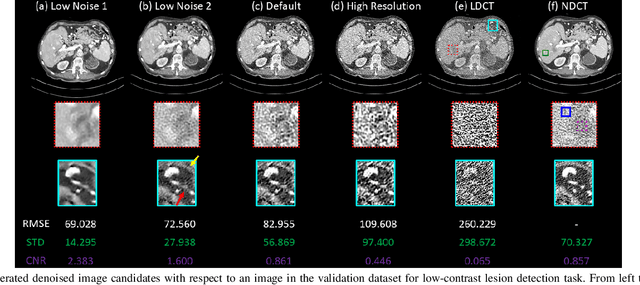

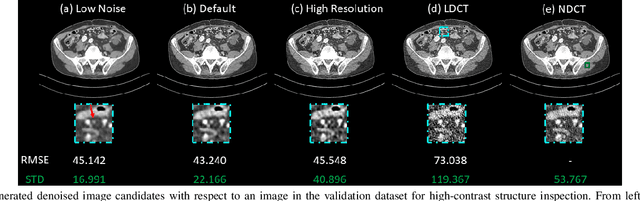

Dec 06, 2020

Abstract: Low-dose computed tomography (LDCT) is desirable for both diagnostic imaging and image-guided interventions. Denoisers are widely used to improve the quality of LDCT. Deep learning (DL)-based denoisers have shown state-of-the-art performance and are becoming one of the mainstream methods. However, there exist two challenges regarding the DL-based denoisers: 1) a trained model typically does not generate different image candidates with different noise-resolution tradeoffs, which are sometimes needed for different clinical tasks; 2) the model generalizability might be an issue when the noise level in the testing images is different from that in the training dataset. To address these two challenges, in this work, we introduce a lightweight optimization process at the testing phase on top of any existing DL-based denoiser to generate multiple image candidates with different noise-resolution tradeoffs suitable for different clinical tasks in real time. Consequently, our method allows users to interact with the denoiser to efficiently review various image candidates and quickly pick the desired one, and is therefore termed the deep interactive denoiser (DID). Experimental results demonstrate that DID can deliver multiple image candidates with different noise-resolution tradeoffs, and shows great generalizability across various network architectures, as well as training and testing datasets with various noise levels.

Stabilizing Deep Tomographic Reconstruction Networks

Aug 19, 2020

Abstract: Tomographic image reconstruction with deep learning is an emerging field of applied artificial intelligence, but a recent study reveals that deep reconstruction networks, such as the well-known AUTOMAP, are unstable for computed tomography (CT) and magnetic resonance imaging (MRI). Specifically, three kinds of instabilities were identified: (1) strong output artefacts from tiny perturbations, (2) poor detection of small features, and (3) decreased performance with increased input data. These instabilities are believed to stem from a lack of kernel awareness and are nontrivial to overcome, but compressed sensing (CS) reconstruction was reported to be stable due to its kernel awareness. Since deep reconstruction may potentially become the main driving force to achieve better image quality, stabilizing deep reconstruction networks is an urgent challenge. Here we propose an Analytic, Compressive, Iterative Deep (ACID) network to fundamentally address this challenge. Instead of only using deep learning or compressed sensing, ACID consists of four modules including deep reconstruction, CS, analytic mapping, and iterative refinement. In our experiments, ACID eliminated all three kinds of instabilities and significantly improved image quality relative to the methods in the aforementioned PNAS study. ACID is only an example of integrating diverse algorithmic ingredients, but it has clearly demonstrated that data-driven reconstruction can be stabilized to outperform reconstruction using CS alone. The power of ACID comes from a unique combination of a deep reconstruction network trained on big data, CS via advanced optimization, and iterative refinement that stabilizes the whole workflow. We anticipate that this integrative closed-loop data-driven approach will add great value to clinical and other applications.

Low-dimensional Manifold Constrained Disentanglement Network for Metal Artifact Reduction

Jul 08, 2020

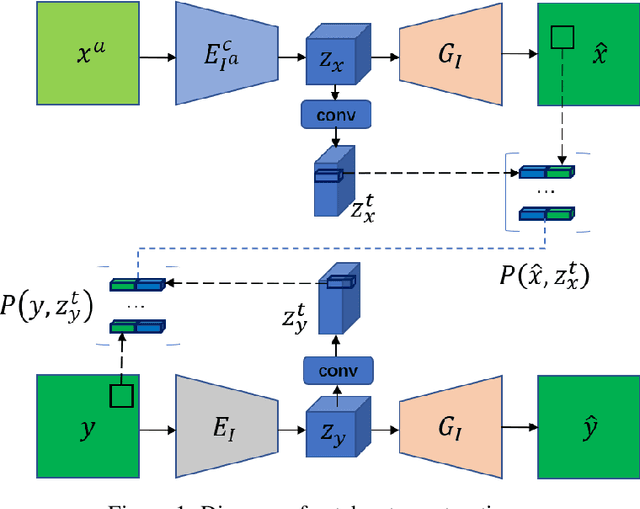

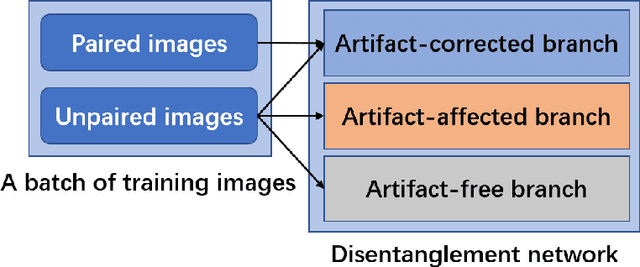

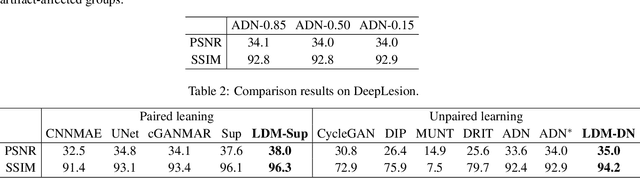

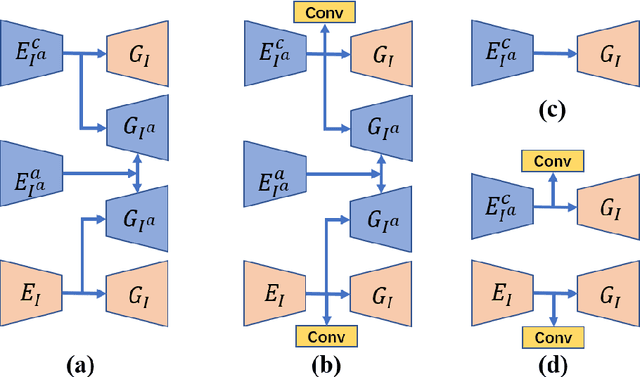

Abstract: Deep neural network based methods have achieved promising results for CT metal artifact reduction (MAR), most of which use many synthesized paired images for training. As synthesized metal artifacts in CT images may not accurately reflect the clinical counterparts, an artifact disentanglement network (ADN) was proposed that uses unpaired clinical images directly, producing promising results on clinical datasets. However, without sufficient supervision, it is difficult for ADN to recover structural details of artifact-affected CT images based on adversarial losses only. To overcome these problems, here we propose a low-dimensional manifold (LDM) constrained disentanglement network (DN), leveraging the image characteristic that the patch manifold is generally low-dimensional. Specifically, we design an LDM-DN learning algorithm to empower the disentanglement network through optimizing the synergistic network loss functions while constraining the recovered images to be on a low-dimensional patch manifold. Moreover, learning from both paired and unpaired data, an efficient hybrid optimization scheme is proposed to further improve the MAR performance on clinical datasets. Extensive experiments demonstrate that the proposed LDM-DN approach can consistently improve the MAR performance in paired and/or unpaired learning settings, outperforming competing methods on synthesized and clinical datasets.

Deep Efficient End-to-end Reconstruction (DEER) Network for Low-dose Few-view Breast CT from Projection Data

Dec 16, 2019

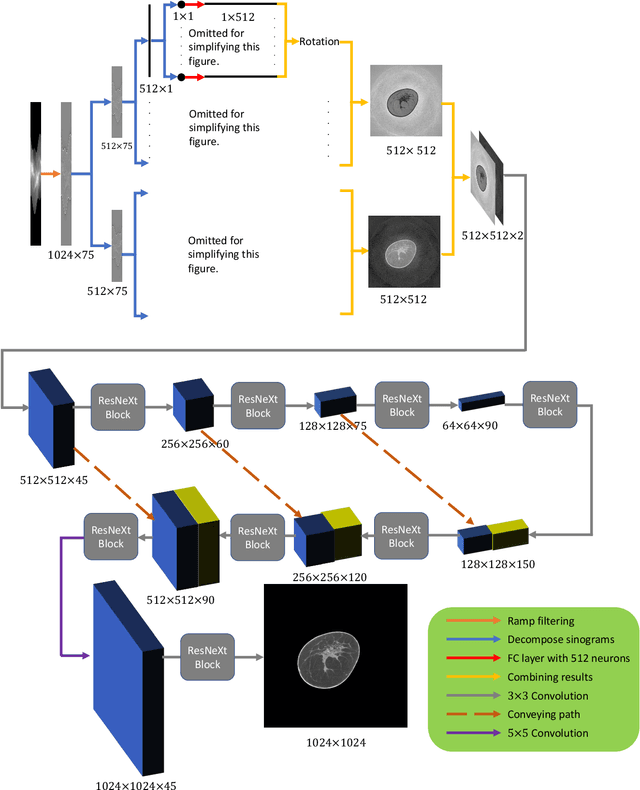

Abstract: Breast CT provides image volumes with isotropic resolution in high contrast, enabling detection of calcification (down to a few hundred microns in size) and subtle density differences. Since the breast is sensitive to x-ray radiation, dose reduction of breast CT is an important topic, and for this purpose low-dose few-view scanning is a main approach. In this article, we propose a Deep Efficient End-to-end Reconstruction (DEER) network for low-dose few-view breast CT. The major merits of our network include high dose efficiency, excellent image quality, and low model complexity. By design, the proposed network can learn the reconstruction process with as few as O(N) parameters, where N is the size of an image to be reconstructed, which represents orders of magnitude improvements relative to the state-of-the-art deep-learning-based reconstruction methods that map projection data to tomographic images directly. As a result, our method does not require expensive GPUs to train and run. Also, validated on a cone-beam breast CT dataset prepared by Koning Corporation on a commercial scanner, our method demonstrates competitive performance relative to the state-of-the-art reconstruction networks in terms of image quality.

Deep-learning-based Breast CT for Radiation Dose Reduction

Sep 25, 2019

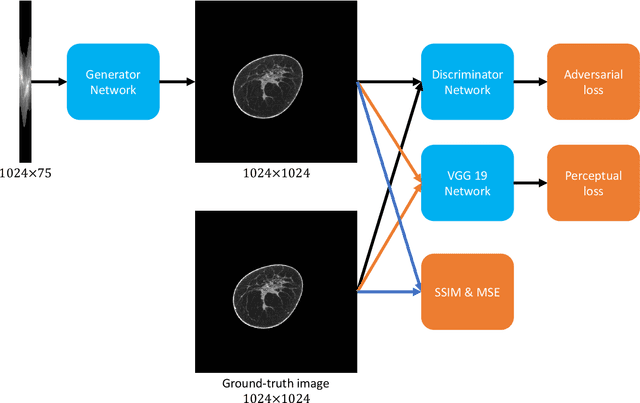

Abstract: Cone-beam breast computed tomography (CT) provides true 3D breast images with isotropic resolution and high-contrast information, detecting calcifications as small as a few hundred microns and revealing subtle tissue differences. However, the breast is highly sensitive to x-ray radiation, and it is critically important for healthcare to reduce radiation dose. Few-view cone-beam CT only uses a fraction of the x-ray projection data acquired by standard cone-beam breast CT, enabling significant reduction of the radiation dose. However, insufficient sampling data would cause severe streak artifacts in CT images reconstructed using conventional methods. In this study, we propose a deep-learning-based method to establish a residual neural network model for the image reconstruction, which is applied to few-view breast CT to produce high-quality breast CT images. We evaluate the deep-learning-based image reconstruction using one third and one quarter of the x-ray projection views of standard cone-beam breast CT, respectively. Based on a clinical breast imaging dataset, we perform supervised learning to train the neural network from few-view CT images to corresponding full-view CT images. Experimental results show that the deep-learning-based image reconstruction method allows few-view breast CT to achieve a radiation dose <6 mGy per cone-beam CT scan, which is a threshold set by the FDA for mammographic screening.

Dual Network Architecture for Few-view CT -- Trained on ImageNet Data and Transferred for Medical Imaging

Jul 17, 2019

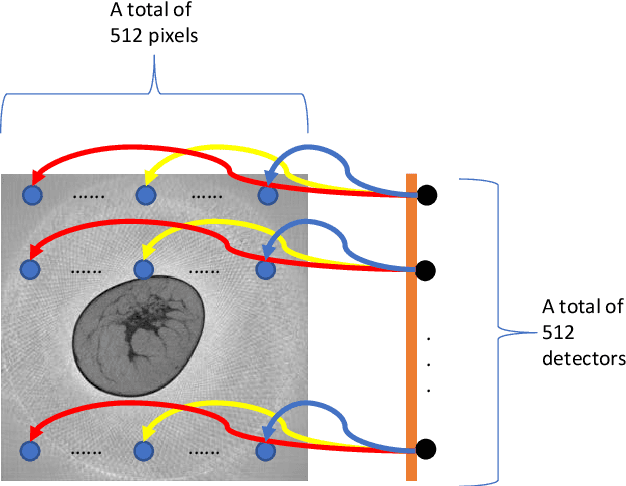

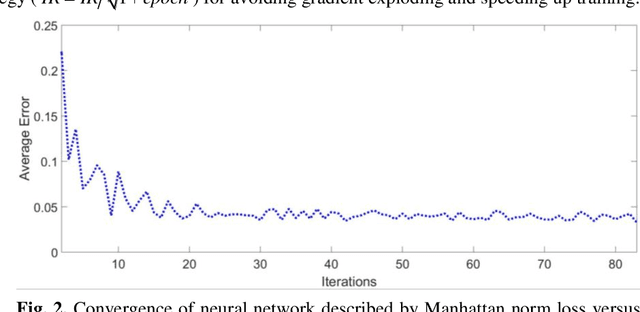

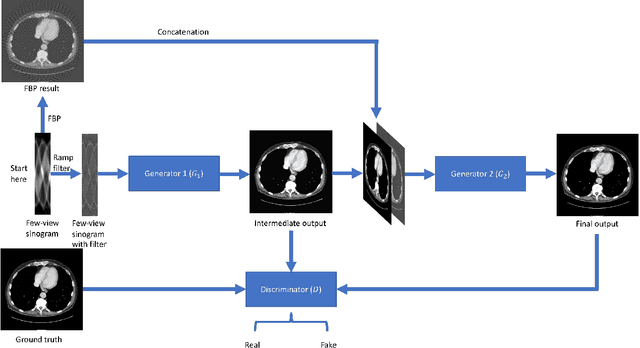

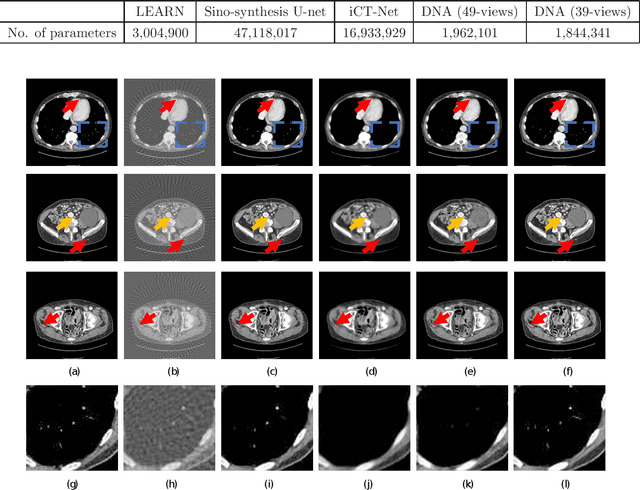

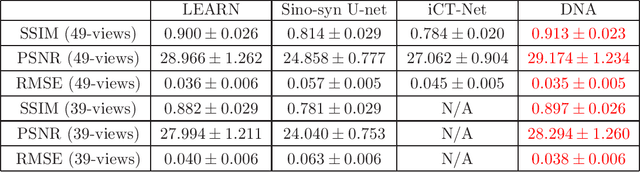

Abstract: X-ray computed tomography (CT) reconstructs cross-sectional images from projection data. However, ionizing X-ray radiation associated with CT scanning might induce cancer and genetic damage and raises public concerns, and the reduction of radiation dose has attracted major attention. Few-view CT image reconstruction is an important topic to reduce the radiation dose. Recently, data-driven algorithms have shown great potential to solve the few-view CT problem. In this paper, we develop a dual network architecture (DNA) for reconstructing images directly from sinograms. In the proposed DNA method, a point-based fully-connected layer learns the backprojection process, requiring significantly less memory than the prior art, with O(C*N*N_c) parameters, where N and N_c denote the dimension of reconstructed images and the number of projections, respectively. C is an adjustable parameter that can be set as low as 1. Our experimental results demonstrate that DNA produces performance competitive with the other state-of-the-art methods. Interestingly, natural images can be used to pre-train DNA to avoid overfitting when the amount of real patient images is limited.
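To give a feel for the O(C*N*N_c) scaling, the following back-of-the-envelope sketch compares it with a naive dense layer that connects every sinogram sample to every image pixel. The dense baseline, the number of detector bins per view, and the example sizes are assumptions made for illustration only; they are not figures from the paper, and the constant hidden in the O-notation is taken to be 1.

```python
def dense_fc_params(n, n_views, n_detectors):
    """Weights in a hypothetical dense layer: every sinogram sample
    (n_views * n_detectors) connects to every pixel of an n x n image."""
    return (n * n) * (n_views * n_detectors)


def point_based_params(n, n_views, c=1):
    """Parameter count following the C * N * N_c scaling quoted in the abstract."""
    return c * n * n_views


# Hypothetical sizes: 512 x 512 image, 75 views, 512 detector bins per view.
n, n_views, n_detectors = 512, 75, 512
dense = dense_fc_params(n, n_views, n_detectors)
point = point_based_params(n, n_views, c=1)
ratio = dense // point
```

Under these assumed sizes the two counts differ by a factor of N times the number of detector bins, which is consistent with the abstract's statement that the point-based layer needs significantly less memory.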
