Biling Wang

Medical Artificial Intelligence and Automation Laboratory and Department of Radiation Oncology, UT Southwestern Medical Center, Dallas TX 75235, USA

AI-Assisted Decision-Making for Clinical Assessment of Auto-Segmented Contour Quality

May 01, 2025

Abstract:Purpose: This study presents a Deep Learning (DL)-based quality assessment (QA) approach for evaluating auto-generated contours (auto-contours) in radiotherapy, with emphasis on Online Adaptive Radiotherapy (OART). Leveraging Bayesian Ordinal Classification (BOC) and calibrated uncertainty thresholds, the method enables confident QA predictions without relying on ground truth contours or extensive manual labeling. Methods: We developed a BOC model to classify auto-contour quality and quantify prediction uncertainty. A calibration step was used to optimize uncertainty thresholds that meet clinical accuracy needs. The method was validated under three data scenarios: no manual labels, limited labels, and extensive labels. For rectum contours in prostate cancer, we applied geometric surrogate labels when manual labels were absent, transfer learning when limited, and direct supervision when ample labels were available. Results: The BOC model delivered robust performance across all scenarios. Fine-tuning with just 30 manual labels and calibrating with 34 subjects yielded over 90% accuracy on test data. Using the calibrated threshold, over 93% of the auto-contours' qualities were accurately predicted in over 98% of cases, reducing unnecessary manual reviews and highlighting cases needing correction. Conclusion: The proposed QA model enhances contouring efficiency in OART by reducing manual workload and enabling fast, informed clinical decisions. Through uncertainty quantification, it ensures safer, more reliable radiotherapy workflows.
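The abstract does not say how the uncertainty threshold is calibrated. The following is a minimal sketch of one plausible approach, not the authors' procedure. It picks the largest threshold at which predictions at or below that uncertainty still reach a target accuracy on a calibration set. The function name `calibrate_threshold` and the toy numbers are hypothetical.

```python
def calibrate_threshold(uncertainties, correct, target_accuracy):
    """Return the largest uncertainty threshold t such that calibration cases
    with uncertainty <= t reach target_accuracy, or None if none qualifies.

    Assumption: lower uncertainty means a more trustworthy prediction.
    """
    pairs = sorted(zip(uncertainties, correct))
    best = None
    n_correct = 0
    for i, (u, ok) in enumerate(pairs, start=1):
        n_correct += int(ok)
        # Evaluate only at the last case of a group of tied uncertainties,
        # so the threshold includes or excludes the whole group.
        if i < len(pairs) and pairs[i][0] == u:
            continue
        if n_correct / i >= target_accuracy:
            best = u
    return best


# Toy calibration set (hypothetical values, not data from the study).
toy_uncertainty = [0.05, 0.10, 0.20, 0.40, 0.60]
toy_correct = [True, True, True, False, True]
threshold = calibrate_threshold(toy_uncertainty, toy_correct, target_accuracy=0.9)
```

In a workflow of this kind, cases above the threshold would be routed to manual review, while cases at or below it would be accepted with the predicted quality grade.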

Performance Deterioration of Deep Learning Models after Clinical Deployment: A Case Study with Auto-segmentation for Definitive Prostate Cancer Radiotherapy

Oct 11, 2022

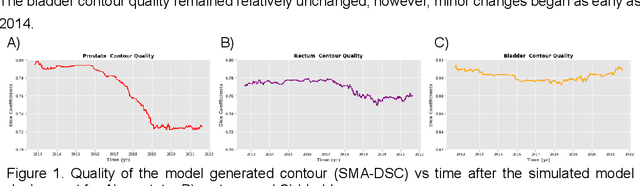

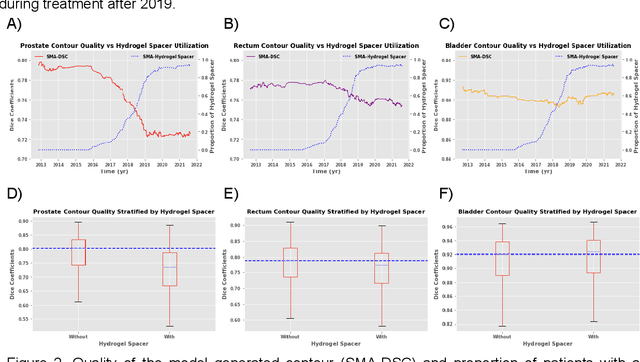

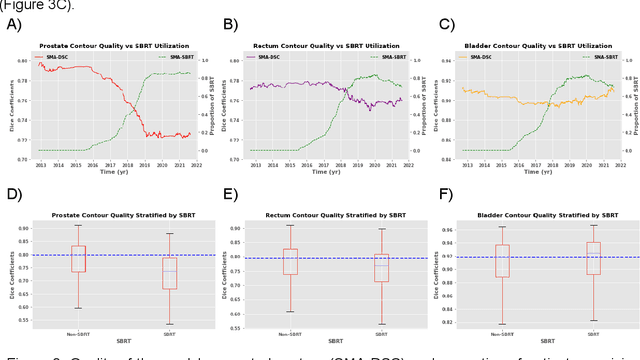

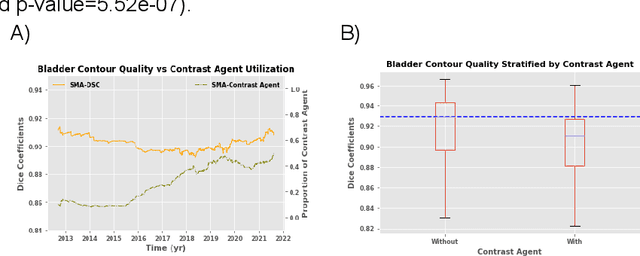

Abstract:In the past decade, deep learning (DL)-based artificial intelligence (AI) has witnessed unprecedented success and has led to much excitement in medicine. However, many successful models have not been implemented in the clinic, predominantly due to concerns regarding the lack of interpretability and generalizability in both spatial and temporal domains. In this work, we used a DL-based auto-segmentation model for intact prostate patients to observe any temporal performance changes and then correlate them to possible explanatory variables. We retrospectively simulated the clinical implementation of our DL model to investigate temporal performance trends. Our cohort included 912 patients with prostate cancer treated with definitive radiotherapy from January 2006 to August 2021 at the University of Texas Southwestern Medical Center (UTSW). We trained a U-Net-based DL auto-segmentation model on the data collected before 2012 and tested it on data collected from 2012 to 2021 to simulate the clinical deployment of the trained model starting in 2012. We visualized the trends using a simple moving average curve and used ANOVA and t-tests to investigate the impact of various clinical factors. The prostate and rectum contour quality decreased rapidly after 2016-2017. Stereotactic body radiotherapy (SBRT) and hydrogel spacer use were significantly associated with prostate contour quality (p=5.6e-12 and 0.002, respectively). SBRT and physicians' styles were significantly associated with the rectum contour quality (p=0.0005 and 0.02, respectively). Only the presence of contrast within the bladder significantly affected the bladder contour quality (p=1.6e-7). We showed that DL model performance decreased over time in concordance with changes in clinical practice patterns and changes in clinical personnel.
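The simple moving average mentioned in the abstract can be sketched as follows. This is a generic illustration only. The window length, the per-patient quality metric, and the function name `simple_moving_average` are assumptions, since the abstract does not specify them.

```python
def simple_moving_average(values, window):
    """Return the trailing simple moving average of `values`.

    The output has len(values) - window + 1 entries, and each entry is the
    mean of `window` consecutive values (for example, per-patient contour
    quality scores ordered by treatment date).
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    averages = []
    running_sum = 0.0
    for i, value in enumerate(values):
        running_sum += value
        if i >= window:
            # Drop the value that just left the window.
            running_sum -= values[i - window]
        if i >= window - 1:
            averages.append(running_sum / window)
    return averages


# Hypothetical scores ordered by date, smoothed with a window of 3.
smoothed = simple_moving_average([1, 2, 3, 4, 5], window=3)
```

A wider window smooths out patient-to-patient variation at the cost of reacting more slowly to changes in practice.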

Uncertainty estimations methods for a deep learning model to aid in clinical decision-making -- a clinician's perspective

Oct 02, 2022

Abstract:Prediction uncertainty estimation has clinical significance as it can potentially quantify prediction reliability. Clinicians may trust 'blackbox' models more if robust reliability information is available, which may lead to more models being adopted into clinical practice. There are several deep learning-inspired uncertainty estimation techniques, but few are implemented on medical datasets -- fewer still on single institutional datasets/models. We sought to compare dropout variational inference (DO), test-time augmentation (TTA), conformal predictions, and single deterministic methods for estimating uncertainty using our model trained to predict feeding tube placement for 271 head and neck cancer patients treated with radiation. We compared the area under the curve (AUC), sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) trends for each method at various cutoffs that sought to stratify patients into 'certain' and 'uncertain' cohorts. These cutoffs were obtained by calculating the percentile "uncertainty" within the validation cohort and applied to the testing cohort. Broadly, the AUC, sensitivity, and NPV increased as the predictions were more 'certain' -- i.e., lower uncertainty estimates. However, when a majority vote (implementing 2/3 criteria: DO, TTA, conformal predictions) or a stricter approach (3/3 criteria) was used, AUC, sensitivity, and NPV improved without a notable loss in specificity or PPV. Especially for smaller, single institutional datasets, it may be important to evaluate multiple estimation techniques before incorporating a model into clinical practice.
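The percentile cutoffs and the 2/3 or 3/3 voting rule described in the abstract could look roughly like the sketch below. It assumes a nearest-rank percentile and that a patient counts as 'certain' under a method when the uncertainty is at or below that method's cutoff. The function names, the dictionary keys, and all numbers are hypothetical.

```python
import math


def percentile_cutoff(validation_uncertainty, percentile):
    """Nearest-rank percentile of the validation-cohort uncertainties."""
    ordered = sorted(validation_uncertainty)
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    return ordered[rank - 1]


def is_certain(uncertainty_by_method, cutoffs, min_votes):
    """Label a test patient 'certain' if at least `min_votes` methods report
    an uncertainty at or below their validation-derived cutoff."""
    votes = sum(uncertainty_by_method[m] <= cutoffs[m] for m in cutoffs)
    return votes >= min_votes


# Hypothetical validation uncertainties, reused for all three methods here.
validation = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
cutoffs = {m: percentile_cutoff(validation, 50) for m in ("DO", "TTA", "conformal")}

patient = {"DO": 0.2, "TTA": 0.7, "conformal": 0.4}
majority = is_certain(patient, cutoffs, min_votes=2)  # 2/3 criteria
strict = is_certain(patient, cutoffs, min_votes=3)    # 3/3 criteria
```

Deriving the cutoffs on the validation cohort and applying them unchanged to the test cohort keeps the stratification from being tuned on test data.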

Region Specific Optimization (RSO)-based Deep Interactive Registration

Mar 08, 2022

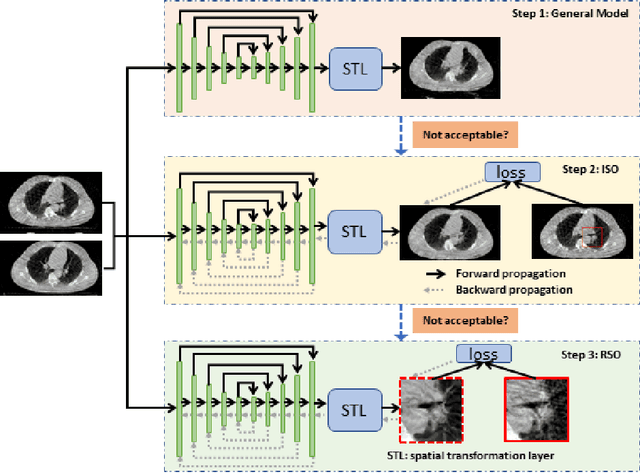

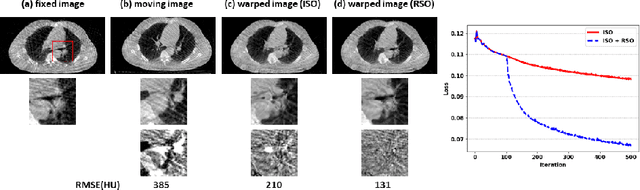

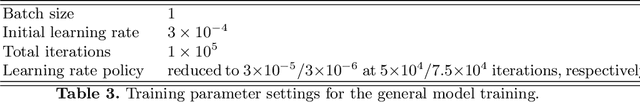

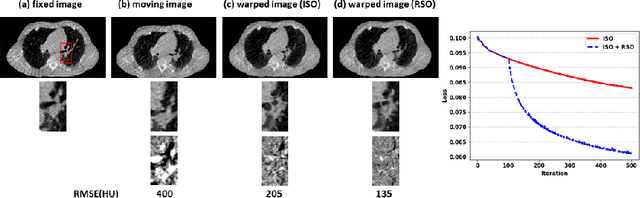

Abstract:Medical image registration is a fundamental and vital task that affects the efficacy of many downstream clinical tasks. Deep learning (DL)-based deformable image registration (DIR) methods have been investigated, showing state-of-the-art performance. A test time optimization (TTO) technique was proposed to further improve the DL models' performance. Despite the substantial accuracy improvement with this TTO technique, some regions still exhibited large registration errors even after many TTO iterations. To mitigate this challenge, we first identified the reason why the TTO technique was slow, or even failed, to improve those regions' registration results. We then proposed a two-level TTO technique, i.e., image-specific optimization (ISO) and region-specific optimization (RSO), where the region can be interactively indicated by the clinician while reviewing the registration result. For both efficiency and accuracy, we further envisioned a three-step DL-based image registration workflow. Experimental results showed that our proposed method outperformed the conventional method qualitatively and quantitatively.

Deep High-Resolution Network for Low Dose X-ray CT Denoising

Feb 01, 2021

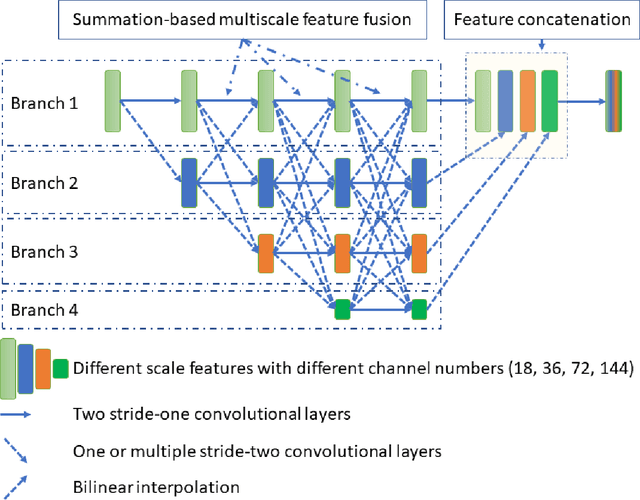

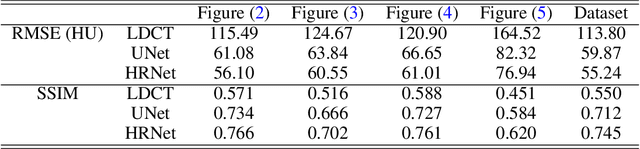

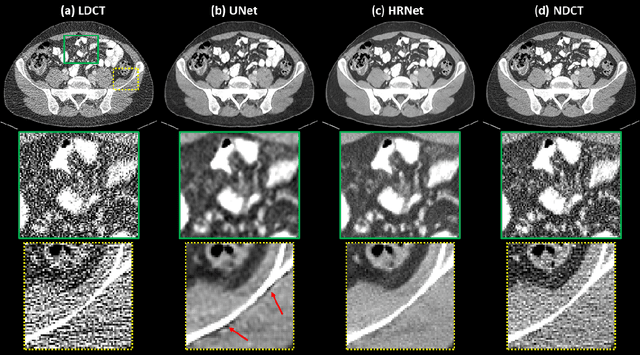

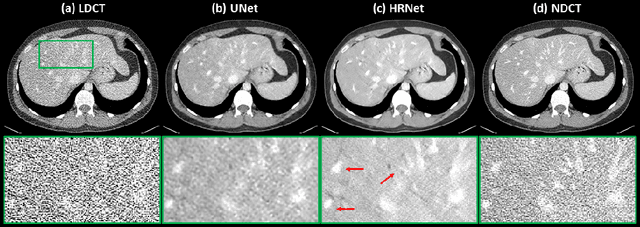

Abstract:Low Dose Computed Tomography (LDCT) is clinically desirable due to the reduced radiation to patients. However, the quality of LDCT images is often sub-optimal because of the inevitable strong quantum noise. Inspired by their unprecedented success in computer vision, deep learning (DL)-based techniques have been used for LDCT denoising. Despite the promising noise removal ability of DL models, the resolution of DL-denoised images has been observed to be compromised, decreasing their clinical value. To relieve this problem, in this work we developed a more effective denoiser by introducing a high-resolution network (HRNet). Since HRNet consists of multiple branches of subnetworks that extract multiscale features which are later fused together, the quality of the generated features can be substantially enhanced, leading to improved denoising performance. Experimental results demonstrated that the introduced HRNet-based denoiser outperforms the benchmarked UNet-based denoiser in image resolution preservation while offering comparable, if not better, noise suppression. Quantitative metrics in terms of root-mean-squared error (RMSE)/structural similarity index (SSIM) showed that the HRNet-based denoiser can improve the values from 113.80/0.550 (LDCT) to 55.24/0.745 (HRNet), in comparison to 59.87/0.712 for the UNet-based denoiser.

Deep Dose Plugin Towards Real-time Monte Carlo Dose Calculation Through a Deep Learning based Denoising Algorithm

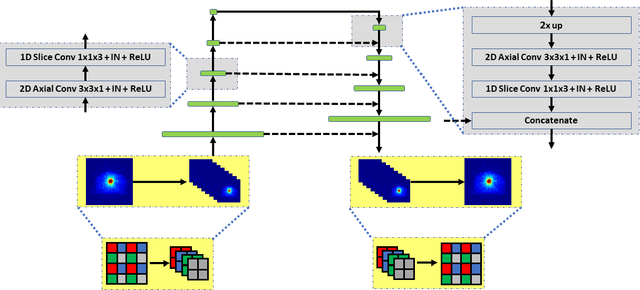

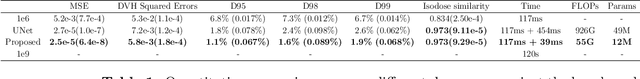

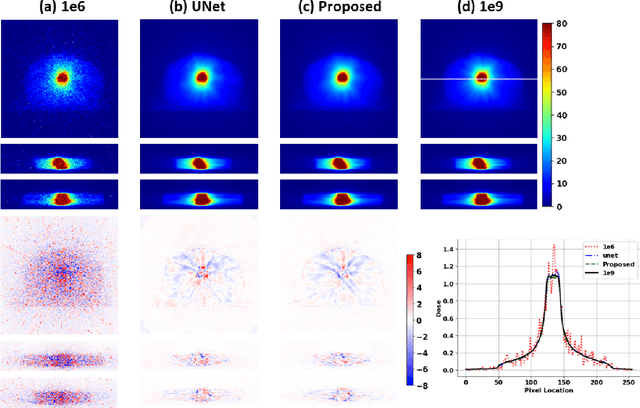

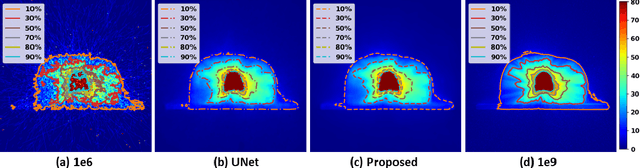

Dec 23, 2020

Abstract:Monte Carlo (MC) simulation is considered the gold standard method for radiotherapy dose calculation. However, achieving high precision requires a large number of simulation histories, which is time consuming. The use of computer graphics processing units (GPUs) has greatly accelerated MC simulation and allows dose calculation within a few minutes for a typical radiotherapy treatment plan. However, some clinical applications demand real time efficiency for MC dose calculation. To tackle this problem, we have developed a real time, deep learning based dose denoiser that can be plugged into a current GPU based MC dose engine to enable real time MC dose calculation. We used two different acceleration strategies to achieve this goal: 1) we applied voxel unshuffle and voxel shuffle operators to decrease the input and output sizes without any information loss, and 2) we decoupled the 3D volumetric convolution into a 2D axial convolution and a 1D slice convolution. In addition, we used a weakly supervised learning framework to train the network, which greatly reduces the size of the required training dataset and thus enables fast fine tuning based adaptation of the trained model to different radiation beams. Experimental results show that the proposed denoiser can run in as little as 39 ms, which is around 11.6 times faster than the baseline model. As a result, the whole MC dose calculation pipeline can be finished within 0.15 seconds, including both GPU MC dose calculation and deep learning based denoising, achieving the real time efficiency needed for some radiotherapy applications, such as online adaptive radiotherapy.
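The voxel unshuffle and voxel shuffle operators named in the abstract can be illustrated as a 3D analogue of pixel unshuffle/shuffle. The NumPy sketch below is an assumption about their general form, not the authors' implementation. It moves each r×r×r spatial block into the channel axis, so the spatial size shrinks by r per axis while every value is kept, and the inverse restores the input exactly. The function names and the channel-first `(C, D, H, W)` layout are hypothetical choices.

```python
import numpy as np


def voxel_unshuffle(volume, r):
    """Rearrange (C, D, H, W) into (C * r**3, D // r, H // r, W // r)."""
    c, d, h, w = volume.shape
    if d % r or h % r or w % r:
        raise ValueError("spatial dimensions must be divisible by r")
    # Split each spatial axis into (coarse index, offset within block).
    x = volume.reshape(c, d // r, r, h // r, r, w // r, r)
    # Move the three within-block offsets next to the channel axis.
    x = x.transpose(0, 2, 4, 6, 1, 3, 5)
    return x.reshape(c * r**3, d // r, h // r, w // r)


def voxel_shuffle(volume, r):
    """Inverse of voxel_unshuffle: (C * r**3, D, H, W) -> (C, D*r, H*r, W*r)."""
    cr, d, h, w = volume.shape
    if cr % r**3:
        raise ValueError("channel count must be divisible by r**3")
    c = cr // r**3
    x = volume.reshape(c, r, r, r, d, h, w)
    # Interleave each block offset back with its coarse spatial index.
    x = x.transpose(0, 4, 1, 5, 2, 6, 3)
    return x.reshape(c, d * r, h * r, w * r)


# Round trip on a small hypothetical volume: nothing is lost.
dose = np.arange(2 * 4 * 4 * 4).reshape(2, 4, 4, 4)
packed = voxel_unshuffle(dose, 2)
restored = voxel_shuffle(packed, 2)
```

Because the rearrangement is a pure permutation of values, a network can run on the smaller spatial grid, which is the stated motivation for using the operators, without discarding any input information.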

Deep Interactive Denoiser for X-Ray Computed Tomography

Dec 06, 2020

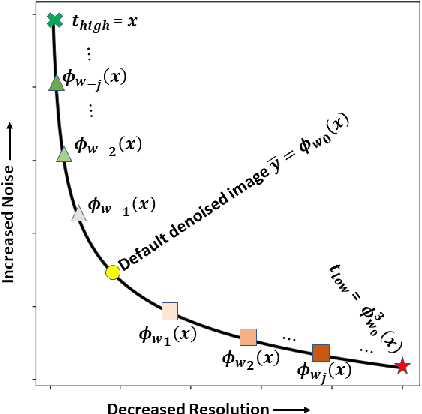

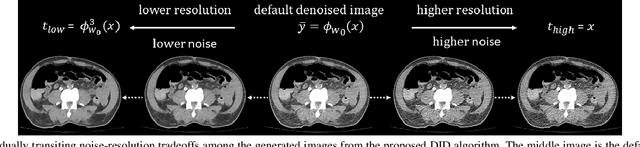

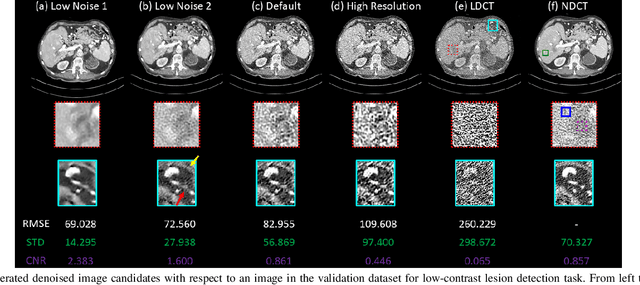

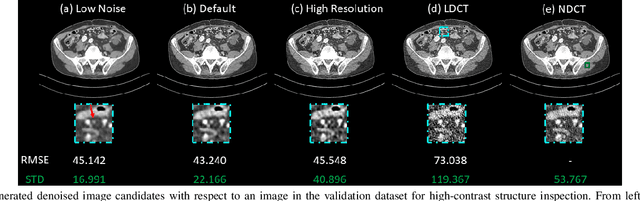

Abstract:Low dose computed tomography (LDCT) is desirable for both diagnostic imaging and image guided interventions. Denoisers are widely used to improve the quality of LDCT. Deep learning (DL)-based denoisers have shown state-of-the-art performance and are becoming one of the mainstream methods. However, there exist two challenges regarding the DL-based denoisers: 1) a trained model typically does not generate different image candidates with different noise-resolution tradeoffs, which are sometimes needed for different clinical tasks; 2) the model generalizability might be an issue when the noise level in the testing images is different from that in the training dataset. To address these two challenges, in this work, we introduce a lightweight optimization process at the testing phase on top of any existing DL-based denoiser to generate multiple image candidates with different noise-resolution tradeoffs suitable for different clinical tasks in real-time. Consequently, our method allows users to interact with the denoiser to efficiently review various image candidates and quickly pick the desired one, and is therefore termed the deep interactive denoiser (DID). Experimental results demonstrated that DID can deliver multiple image candidates with different noise-resolution tradeoffs, and shows great generalizability regarding various network architectures, as well as training and testing datasets with various noise levels.

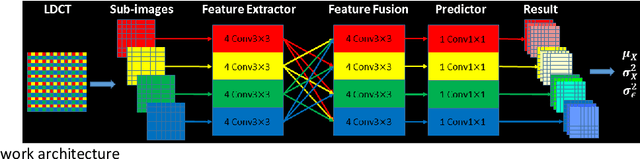

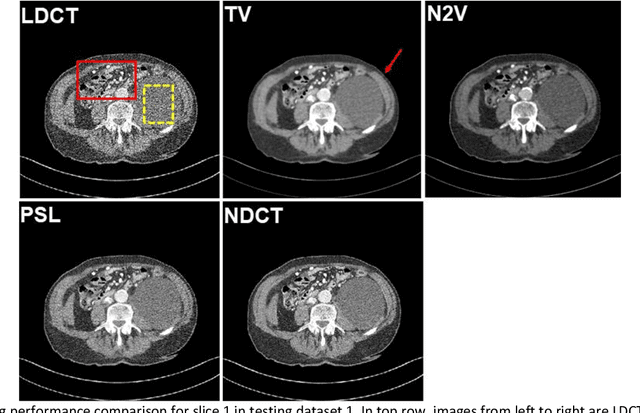

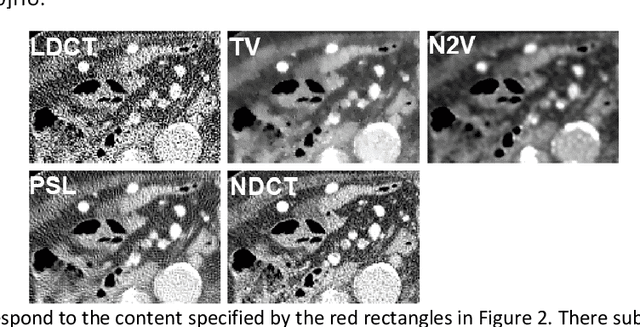

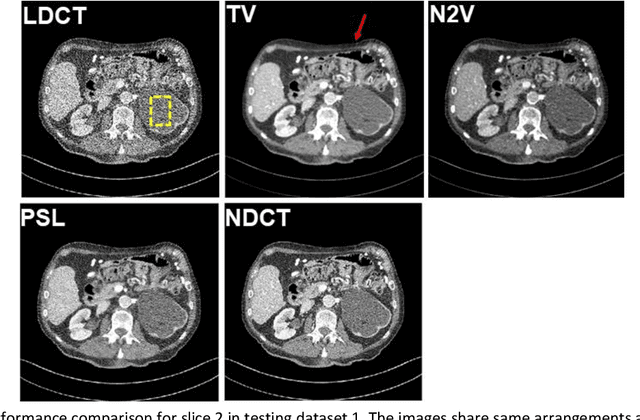

Probabilistic self-learning framework for Low-dose CT Denoising

May 30, 2020

Abstract:Despite the indispensable role of X-ray computed tomography (CT) in diagnostic medicine, the associated ionizing radiation is still a major concern considering that it may cause genetic and cancerous diseases. Decreasing the exposure can reduce the dose and hence the radiation-related risk, but will also induce higher quantum noise. Supervised deep learning can be used to train a neural network to denoise the low-dose CT (LDCT). However, its success requires massive pixel-wise paired LDCT and normal-dose CT (NDCT) images, which are rarely available in real practice. To alleviate this problem, in this paper, a shift-invariant property based neural network was devised to learn the inherent pixel correlations and also the noise distribution by only using the LDCT images, forming our probabilistic self-learning framework. Experimental results demonstrated that the proposed method outperformed the competitors, producing an enhanced LDCT image with an image style similar to that of routine NDCT, which is highly preferable in clinical practice.
