David Sher

Medical Artificial Intelligence and Automation Laboratory and Department of Radiation Oncology, UT Southwestern Medical Center, Dallas TX 75235, USA

Uncertainty estimations methods for a deep learning model to aid in clinical decision-making -- a clinician's perspective

Oct 02, 2022

Abstract: Prediction uncertainty estimation has clinical significance as it can potentially quantify prediction reliability. Clinicians may trust 'blackbox' models more if robust reliability information is available, which may lead to more models being adopted into clinical practice. There are several deep learning-inspired uncertainty estimation techniques, but few are implemented on medical datasets -- fewer on single institutional datasets/models. We sought to compare dropout variational inference (DO), test-time augmentation (TTA), conformal predictions, and single deterministic methods for estimating uncertainty using our model trained to predict feeding tube placement for 271 head and neck cancer patients treated with radiation. We compared the area under the curve (AUC), sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) trends for each method at various cutoffs that sought to stratify patients into 'certain' and 'uncertain' cohorts. These cutoffs were obtained by calculating the percentile "uncertainty" within the validation cohort and applied to the testing cohort. Broadly, the AUC, sensitivity, and NPV increased as the predictions were more 'certain' -- i.e., lower uncertainty estimates. However, when a majority vote (implementing 2/3 criteria: DO, TTA, conformal predictions) or a stricter approach (3/3 criteria) was used, AUC, sensitivity, and NPV improved without a notable loss in specificity or PPV. Especially for smaller, single institutional datasets, it may be important to evaluate multiple estimation techniques before incorporating a model into clinical practice.
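The cutoff-and-vote procedure described in this abstract can be illustrated with a minimal sketch. It assumes each method yields one scalar uncertainty score per patient. The function names, the 50th-percentile cutoff, and all numbers are hypothetical and are not taken from the paper.

```python
import numpy as np

def certainty_mask(val_uncertainty, test_uncertainty, percentile):
    """Flag test predictions as 'certain' when their uncertainty is at or
    below a percentile cutoff computed on the validation cohort."""
    cutoff = np.percentile(val_uncertainty, percentile)
    return np.asarray(test_uncertainty) <= cutoff

def vote(masks, min_votes):
    """Combine per-method masks: a prediction is 'certain' when at least
    min_votes methods agree that it is."""
    return np.sum(np.stack(masks), axis=0) >= min_votes

# Hypothetical uncertainty scores (validation, test) for three methods.
scores = {
    "DO":        ([0.1, 0.2, 0.3, 0.4, 0.5], [0.05, 0.35, 0.20, 0.60]),
    "TTA":       ([0.1, 0.2, 0.3, 0.4, 0.5], [0.10, 0.20, 0.50, 0.70]),
    "conformal": ([0.1, 0.2, 0.3, 0.4, 0.5], [0.25, 0.10, 0.25, 0.90]),
}

masks = [certainty_mask(val, test, percentile=50) for val, test in scores.values()]
majority = vote(masks, min_votes=2)  # 2/3 criteria
strict = vote(masks, min_votes=3)    # 3/3 criteria
```

Metrics such as AUC or sensitivity would then be computed separately on the patients selected by `majority` or `strict`.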

Towards reliable head and neck cancers locoregional recurrence prediction using delta-radiomics and learning with rejection option

Aug 30, 2022

Abstract: A reliable locoregional recurrence (LRR) prediction model is important for the personalized management of head and neck cancer (HNC) patients. This work aims to develop a delta-radiomics feature-based multi-classifier, multi-objective, and multi-modality (Delta-mCOM) model for post-treatment HNC LRR prediction and to adopt a learning with rejection option (LRO) strategy to boost the prediction reliability by rejecting samples with high prediction uncertainties. In this retrospective study, we collected PET/CT images and clinical data from 224 HNC patients. We calculated the differences between radiomics features extracted from PET/CT images acquired before and after radiotherapy as the input features. Using clinical parameters and PET and CT radiomics features, we built and optimized three separate single-modality models. We used multiple classifiers for model construction and employed sensitivity and specificity simultaneously as the training objectives. For testing samples, we fused the output probabilities from all these single-modality models to obtain the final output probabilities of the Delta-mCOM model. In the LRO strategy, we estimated the epistemic and aleatoric uncertainties when predicting with the Delta-mCOM model and identified patients associated with predictions of higher reliability. Predictions with higher epistemic uncertainty or higher aleatoric uncertainty than given thresholds were deemed unreliable, and they were rejected before providing a final prediction. Different thresholds corresponding to different low-reliability prediction rejection ratios were applied. The inclusion of the delta-radiomics features improved the accuracy of HNC LRR prediction, and the proposed Delta-mCOM model can give more reliable predictions by rejecting predictions for samples of high uncertainty using the LRO strategy.

Registration-Guided Deep Learning Image Segmentation for Cone Beam CT-based Online Adaptive Radiotherapy

Aug 19, 2021

Abstract: Adaptive radiotherapy (ART), especially online ART, effectively accounts for positioning errors and anatomical changes. One key component of online ART is accurately and efficiently delineating organs at risk (OARs) and targets on online images, such as CBCT, to meet the online demands of plan evaluation and adaptation. Deep learning (DL)-based automatic segmentation has achieved great success in segmenting planning CT, but its applications to CBCT yielded inferior results due to the low image quality and limited available contour labels for training. To overcome these obstacles to online CBCT segmentation, we propose a registration-guided DL (RgDL) segmentation framework that integrates image registration algorithms and DL segmentation models. The registration algorithm generates initial contours, which are used as guidance by the DL model to obtain accurate final segmentations. We had two implementations of the proposed framework--Rig-RgDL (Rig for rigid body) and Def-RgDL (Def for deformable)--with rigid body (RB) registration or deformable image registration (DIR) as the registration algorithm, respectively, and U-Net as the DL model architecture. The two implementations of the RgDL framework were trained and evaluated on seven OARs in an institutional clinical Head and Neck (HN) dataset. Compared to the baseline approaches using the registration or the DL alone, RgDL achieved more accurate segmentation, as measured by higher mean Dice similarity coefficients (DSC) and other distance-based metrics. Rig-RgDL achieved a DSC of 84.5% on seven OARs on average, higher than RB or DL alone by 4.5% and 4.7%. The DSC of Def-RgDL is 86.5%, higher than DIR or DL alone by 2.4% and 6.7%. The inference time taken by the DL model to generate final segmentations of seven OARs is less than one second in RgDL. The resulting segmentation accuracy and efficiency show the promise of applying the RgDL framework for online ART.
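For readers unfamiliar with the Dice similarity coefficient reported above, the following is a minimal sketch of the standard definition, DSC = 2|A ∩ B| / (|A| + |B|), applied to two binary masks. The function name and the toy masks are illustrative assumptions and are unrelated to the paper's data or code.

```python
import numpy as np

def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks of equal shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        # Both masks are empty; treat them as a perfect match by convention.
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / total

# Toy 2x3 masks: each has three foreground pixels, two of which overlap.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
dsc = dice_coefficient(predicted, reference)  # 2 * 2 / (3 + 3)
```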

Three-Dimensional Radiotherapy Dose Prediction on Head and Neck Cancer Patients with a Hierarchically Densely Connected U-net Deep Learning Architecture

May 25, 2018

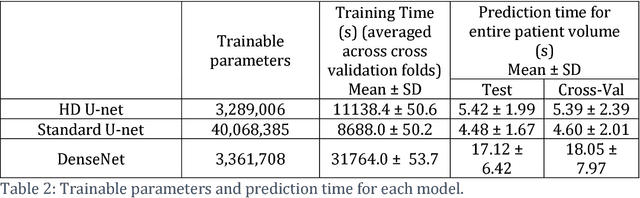

Abstract: The treatment planning process for patients with head and neck (H&N) cancer is regarded as one of the most complicated due to the large target volume, multiple prescription dose levels, and many radiation-sensitive critical structures near the target. Treatment planning for this site requires a high level of human expertise and a tremendous amount of effort to produce personalized high quality plans, taking as long as a week, which deteriorates the chances of tumor control and patient survival. To solve this problem, we propose to investigate a deep learning-based dose prediction model, Hierarchically Densely Connected U-net, based on two highly popular network architectures: U-net and DenseNet. We find that this new architecture is able to accurately and efficiently predict the dose distribution, outperforming the other two models, the Standard U-net and DenseNet, in homogeneity, dose conformity, and dose coverage on the test data. On average, our proposed model is capable of predicting the OAR max dose within 6.3% and mean dose within 5.1% of the prescription dose on the test data. The other models, the Standard U-net and DenseNet, performed worse, having an OAR max dose prediction error of 8.2% and 9.3%, respectively, and mean dose prediction error of 6.4% and 6.8%, respectively. In addition, our proposed model used 12 times fewer trainable parameters than the Standard U-net, and predicted the patient dose 4 times faster than DenseNet.

Developing and Analyzing Boundary Detection Operators Using Probabilistic Models

Mar 27, 2013

Abstract: Most feature detectors such as edge detectors or circle finders are statistical, in the sense that they decide at each point in an image about the presence of a feature. This paper describes the use of Bayesian feature detectors.

Appropriate and Inappropriate Estimation Techniques

Mar 27, 2013

Abstract: Mode (also called MAP) estimation, mean estimation and median estimation are examined here to determine when they can be safely used to derive (posterior) cost minimizing estimates. (These are all Bayes procedures, using the mode, mean, or median of the posterior distribution.) It is found that modal estimation only returns cost minimizing estimates when the cost function is 0-1. If the cost function is a function of distance then mean estimation only returns cost minimizing estimates when the cost function is squared distance from the true value and median estimation only returns cost minimizing estimates when the cost function is the distance from the true value. Results are presented on the goodness of modal estimation with non 0-1 cost functions.
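The correspondence between cost functions and estimators stated above can be checked numerically. The sketch below is not from the paper: it assumes a small hypothetical discrete posterior and searches a grid of candidate estimates for the one with the lowest expected cost under each of the three cost functions.

```python
# Hypothetical discrete posterior over the true value (probabilities sum to 1).
values = [0.0, 1.0, 2.0, 3.0, 10.0]
probs = [0.35, 0.10, 0.25, 0.20, 0.10]

# Cost functions: cost(estimate, true_value).
costs = {
    "zero_one": lambda est, true: 0.0 if est == true else 1.0,
    "squared": lambda est, true: (est - true) ** 2,
    "absolute": lambda est, true: abs(est - true),
}

def expected_cost(cost, estimate):
    """Posterior expected cost of reporting `estimate`."""
    return sum(p * cost(estimate, v) for v, p in zip(values, probs))

# Candidate estimates on a grid from 0.0 to 10.0 in steps of 0.1.
candidates = [i / 10 for i in range(101)]

# For each cost function, pick the candidate with the lowest expected cost.
best = {
    name: min(candidates, key=lambda est: expected_cost(cost, est))
    for name, cost in costs.items()
}

# Reference statistics of the posterior, for comparison with `best`.
mode = values[probs.index(max(probs))]
mean = sum(p * v for v, p in zip(values, probs))
```

For this posterior the mode is 0, the median is 2, and the mean is 2.2, so `best` recovers the mode under 0-1 cost, the mean under squared distance, and the median under absolute distance.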

Towards a Normative Theory of Scientific Evidence

Mar 27, 2013

Abstract: A scientific reasoning system makes decisions using objective evidence in the form of independent experimental trials, propositional axioms, and constraints on the probabilities of events. As a first step towards this goal, we propose a system that derives probability intervals from objective evidence in those forms. Our reasoning system can manage uncertainty about data and rules in a rule based expert system. We expect that our system will be particularly applicable to diagnosis and analysis in domains with a wealth of experimental evidence such as medicine. We discuss limitations of this solution and propose future directions for this research. This work can be considered a generalization of Nilsson's "probabilistic logic" [Nil86] to intervals and experimental observations.