Xun Jia

New Insights into Automatic Treatment Planning for Cancer Radiotherapy Using Explainable Artificial Intelligence

Aug 19, 2025

Abstract:Objective: This study aims to uncover the opaque decision-making process of an artificial intelligence (AI) agent for automatic treatment planning. Approach: We examined a previously developed AI agent based on the Actor-Critic with Experience Replay (ACER) network, which automatically tunes treatment planning parameters (TPPs) for inverse planning in prostate cancer intensity-modulated radiotherapy. We selected multiple checkpoint ACER agents from different stages of training and applied an explainable AI (EXAI) method to analyze the attribution from dose-volume histogram (DVH) inputs to TPP-tuning decisions. We then assessed each agent's planning efficacy and efficiency and evaluated their policy and final TPP tuning spaces. Combining these analyses, we systematically examined how ACER agents generated high-quality treatment plans in response to different DVH inputs. Results: Attribution analysis revealed that ACER agents progressively learned to identify dose-violation regions from DVH inputs and promote appropriate TPP-tuning actions to mitigate them. Organ-wise similarities between DVH attributions and dose-violation reductions ranged from 0.25 to 0.5 across tested agents. Agents with stronger attribution-violation similarity required fewer tuning steps (~12-13 vs. 22), exhibited a more concentrated TPP-tuning space with lower entropy (~0.3 vs. 0.6), converged on adjusting only a few TPPs, and showed smaller discrepancies between practical and theoretical tuning steps. Taken together, these findings indicate that high-performing ACER agents can effectively identify dose violations from DVH inputs and employ a global tuning strategy to achieve high-quality treatment planning, much like skilled human planners. Significance: Better interpretability of the agent's decision-making process may enhance clinician trust and inspire new strategies for automatic treatment planning.
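To make the "lower entropy" comparison concrete, here is a minimal sketch of how the concentration of a tuning-action distribution could be scored. The abstract does not state the log base or any normalization, so the division by log(n) below is an assumption, and the action frequencies are hypothetical rather than taken from the study.

```python
import math

def normalized_entropy(probs):
    """Shannon entropy of a discrete distribution, scaled to [0, 1]."""
    total = sum(probs)
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    p = [x / total for x in probs]
    # Terms with zero probability contribute nothing to the entropy.
    h = sum(-x * math.log(x) for x in p if x > 0)
    # Assumed normalization: divide by log(n) so a uniform distribution scores 1.0.
    return h / math.log(len(p)) if len(p) > 1 else 0.0

# Hypothetical action frequencies over four tunable parameters.
concentrated = [0.94, 0.02, 0.02, 0.02]
spread = [0.25, 0.25, 0.25, 0.25]
```

An agent that adjusts only a few parameters yields a distribution like `concentrated`, which scores well below the uniform `spread` case.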

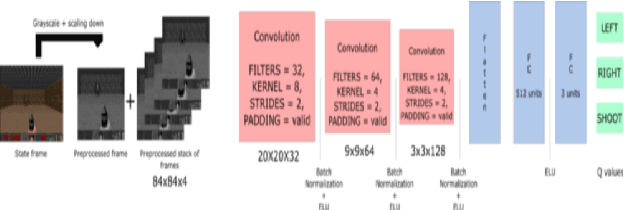

Using reinforcement learning to design an AI assistant for a satisfying co-op experience

May 07, 2021

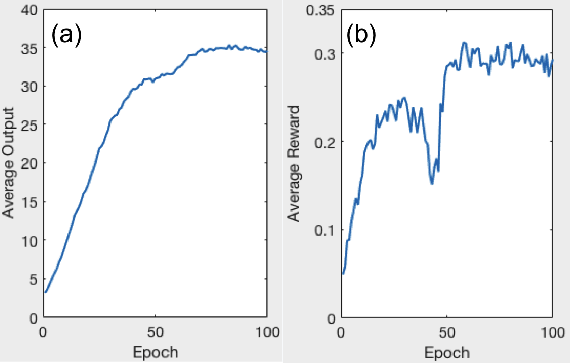

Abstract:In this project, we designed an intelligent assistant player for the single-player game Space Invaders with the aim of providing a satisfying co-op experience. The agent behaviour was designed using reinforcement learning techniques and evaluated based on several criteria. We validate the hypothesis that an AI-driven computer player can provide a satisfying co-op experience.

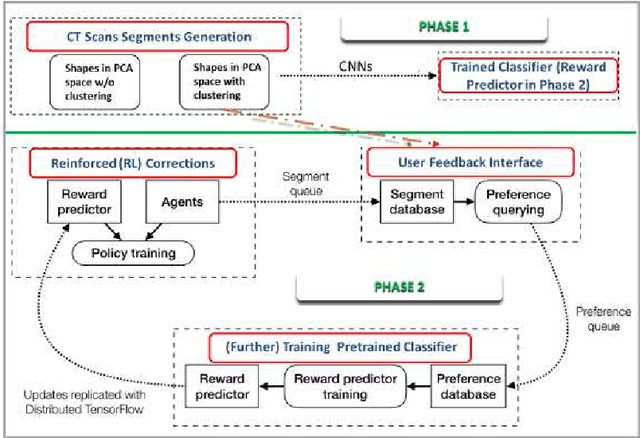

Image Synthesis for Data Augmentation in Medical CT using Deep Reinforcement Learning

Mar 22, 2021

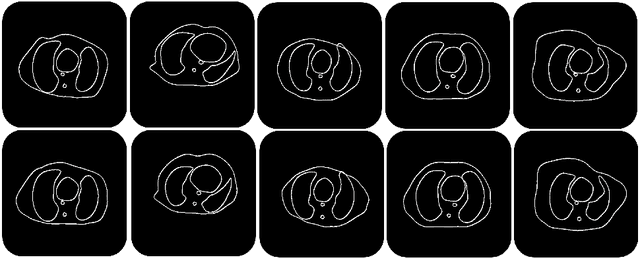

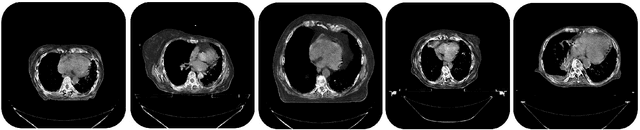

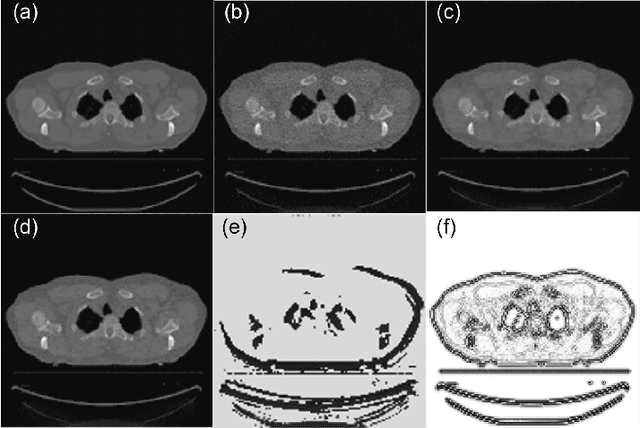

Abstract:Deep learning has shown great promise for CT image reconstruction, in particular to enable low-dose imaging and integrated diagnostics. These merits, however, stand at great odds with the low availability of the diverse image data needed to train these neural networks. We propose to overcome this bottleneck via a deep reinforcement learning (DRL) approach that is integrated with a style-transfer (ST) methodology, where the DRL generates the anatomical shapes and the ST synthesizes the texture detail. We show that our method bears high promise for generating novel and anatomically accurate high-resolution CT images in large and diverse quantities. Our approach is specifically designed to work even with small image datasets, which is desirable given the small amount of image data many researchers have available to them.

Noise Entangled GAN For Low-Dose CT Simulation

Feb 18, 2021

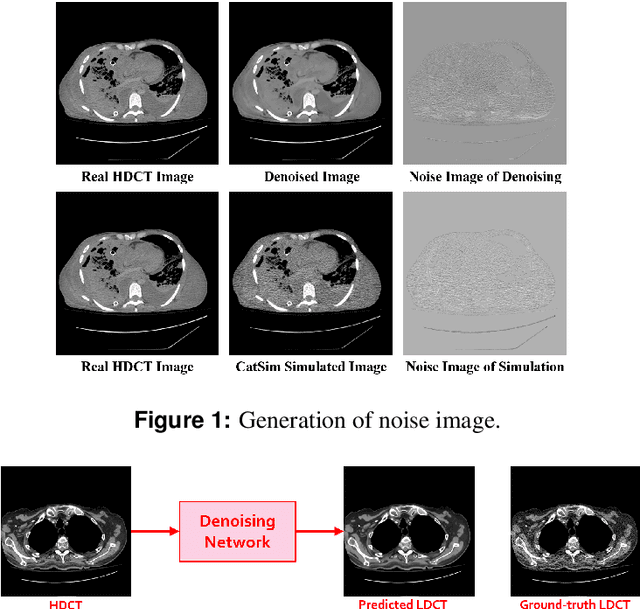

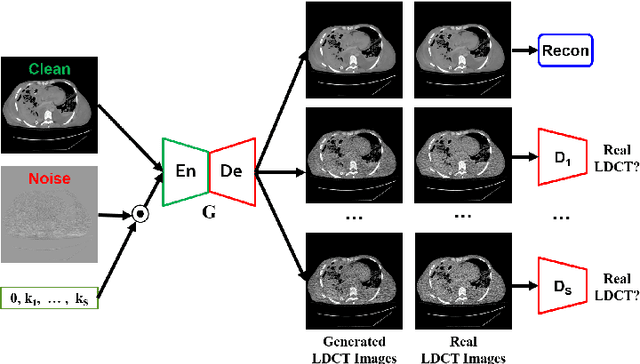

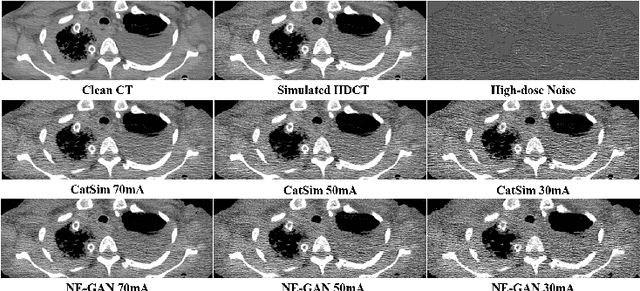

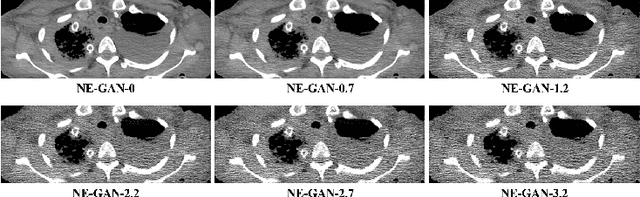

Abstract:We propose a Noise Entangled GAN (NE-GAN) for simulating low-dose computed tomography (CT) images from a higher-dose CT image. First, we present two schemes to generate a clean CT image and a noise image from the high-dose CT image. Then, given these generated images, an NE-GAN is proposed to simulate different levels of low-dose CT images, where the level of generated noise can be continuously controlled by a noise factor. NE-GAN consists of a generator and a set of discriminators, and the number of discriminators is determined by the number of noise levels during training. Compared with traditional methods based on projection data, which are usually unavailable in real applications, NE-GAN can directly learn from real and/or simulated CT images and may create low-dose CT images quickly without the need for raw data or other proprietary CT scanner information. The experimental results show that the proposed method has the potential to simulate realistic low-dose CT images.

Incorporating human and learned domain knowledge into training deep neural networks: A differentiable dose volume histogram and adversarial inspired framework for generating Pareto optimal dose distributions in radiation therapy

Aug 16, 2019

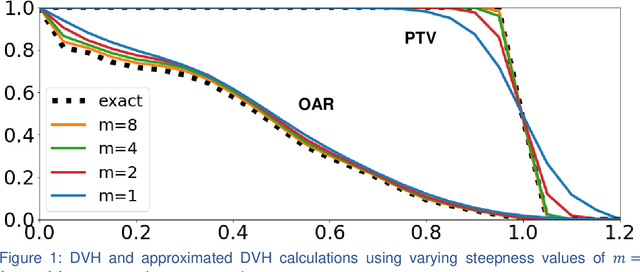

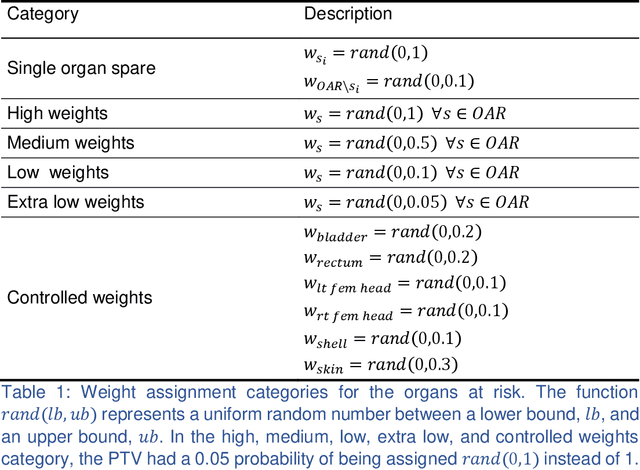

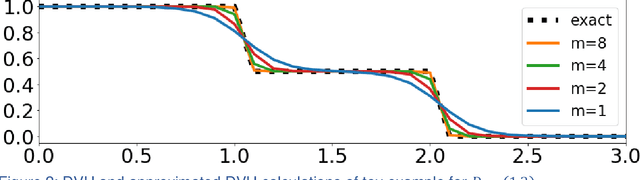

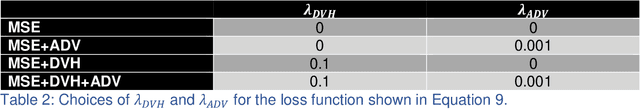

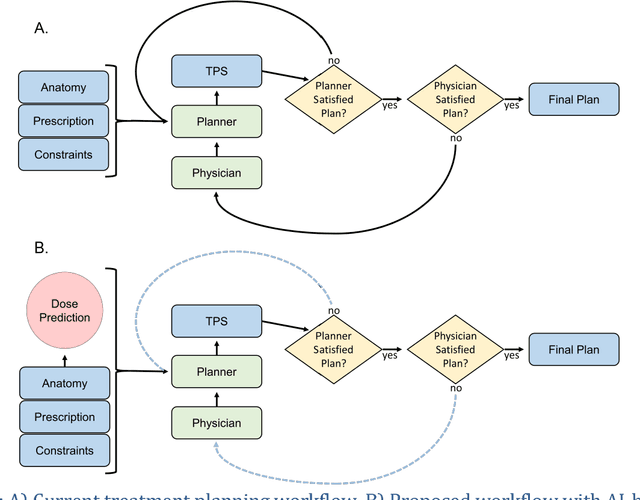

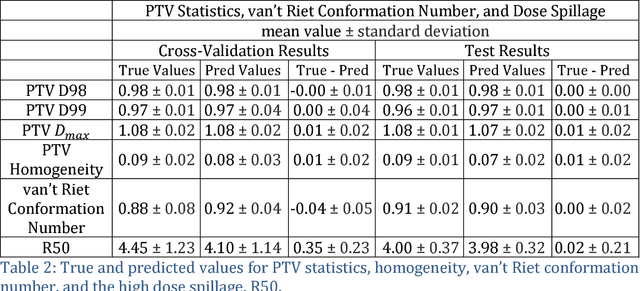

Abstract:We propose a novel domain-specific loss, which is a differentiable loss function based on the dose volume histogram, and combine it with an adversarial loss for the training of deep neural networks to generate Pareto optimal dose distributions. The mean squared error (MSE) loss, dose volume histogram (DVH) loss, and adversarial (ADV) loss were used to train 4 instances of the neural network model: 1) MSE, 2) MSE+ADV, 3) MSE+DVH, and 4) MSE+DVH+ADV. Data for 70 prostate patients were acquired, and the dose influence arrays were calculated for each patient. 1200 Pareto surface plans per patient were generated by pseudo-randomizing the tradeoff weights (84,000 plans total). We divided the data into 54 training, 6 validation, and 10 testing patients. Each model was trained for 100,000 iterations, with a batch size of 2. The prediction time of each model is 0.052 seconds. Quantitatively, the MSE+DVH+ADV model had the lowest prediction error of 0.038 (conformation), 0.026 (homogeneity), 0.298 (R50), 1.65% (D95), 2.14% (D98), 2.43% (D99). The MSE model had the worst prediction error of 0.134 (conformation), 0.041 (homogeneity), 0.520 (R50), 3.91% (D95), 4.33% (D98), 4.60% (D99). For both the mean dose PTV error and the max dose PTV, Body, Bladder, and Rectum errors, the MSE+DVH+ADV model outperformed all other models. All models' predictions have an average mean and max dose error of less than 2.8% and 4.2%, respectively. Expert human domain-specific knowledge can be the largest driver of the performance improvement, and adversarial learning can be used to further capture nuanced features. The real-time prediction capabilities allow a physician to quickly navigate the tradeoff space and produce a dose distribution as a tangible endpoint for the dosimetrist to use for planning. This can considerably reduce the treatment planning time, allowing clinicians to focus their efforts on challenging cases.
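As a rough illustration of what a differentiable DVH-based loss can look like, the sketch below smooths the hard "dose at or above threshold" step with a sigmoid, which keeps the curve smooth with respect to each voxel dose. The sigmoid smoothing, the `beta` sharpness parameter, and the function names are assumptions made for illustration; the abstract does not specify the exact formulation used in the paper.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def soft_dvh(doses, thresholds, beta=0.5):
    """Smoothed fraction of voxels receiving at least each threshold dose."""
    n = len(doses)
    return [sum(sigmoid((d - t) / beta) for d in doses) / n for t in thresholds]

def dvh_loss(pred_doses, true_doses, thresholds, beta=0.5):
    """Mean squared difference between the two smoothed DVH curves."""
    p = soft_dvh(pred_doses, thresholds, beta)
    q = soft_dvh(true_doses, thresholds, beta)
    return sum((a - b) ** 2 for a, b in zip(p, q)) / len(thresholds)
```

In a training setup the same arithmetic would be written with an automatic-differentiation framework so that gradients flow from the loss back to the predicted voxel doses; the plain-Python version here only shows the computation.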

Three-Dimensional Radiotherapy Dose Prediction on Head and Neck Cancer Patients with a Hierarchically Densely Connected U-net Deep Learning Architecture

May 25, 2018

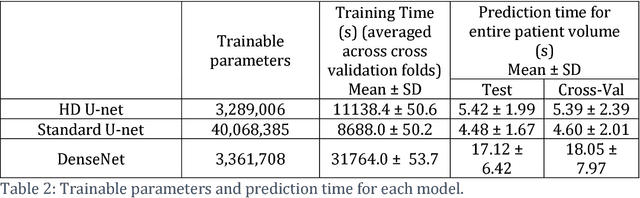

Abstract:The treatment planning process for patients with head and neck (H&N) cancer is regarded as one of the most complicated due to the large target volume, multiple prescription dose levels, and many radiation-sensitive critical structures near the target. Treatment planning for this site requires a high level of human expertise and a tremendous amount of effort to produce personalized high-quality plans, taking as long as a week, which deteriorates the chances of tumor control and patient survival. To solve this problem, we propose to investigate a deep learning-based dose prediction model, Hierarchically Densely Connected U-net, based on two highly popular network architectures: U-net and DenseNet. We find that this new architecture is able to accurately and efficiently predict the dose distribution, outperforming the other two models, the Standard U-net and DenseNet, in homogeneity, dose conformity, and dose coverage on the test data. On average, our proposed model is capable of predicting the OAR max dose within 6.3% and mean dose within 5.1% of the prescription dose on the test data. The other models, the Standard U-net and DenseNet, performed worse, having an OAR max dose prediction error of 8.2% and 9.3%, respectively, and mean dose prediction error of 6.4% and 6.8%, respectively. In addition, our proposed model used 12 times fewer trainable parameters than the Standard U-net, and predicted the patient dose 4 times faster than DenseNet.

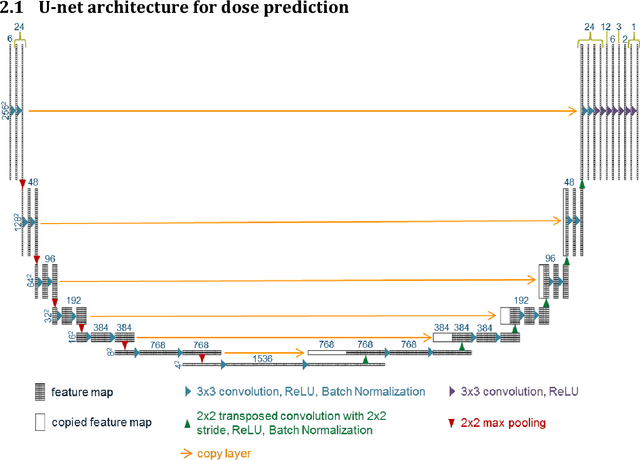

Dose Prediction with U-net: A Feasibility Study for Predicting Dose Distributions from Contours using Deep Learning on Prostate IMRT Patients

May 23, 2018

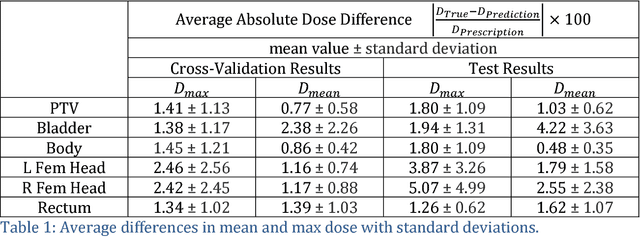

Abstract:With the advancement of treatment modalities in radiation therapy for cancer patients, outcomes have improved, but at the cost of increased treatment plan complexity and planning time. The accurate prediction of dose distributions would alleviate this issue by guiding clinical plan optimization to save time and maintain high-quality plans. We have modified a convolutional deep network model, U-net (originally designed for segmentation purposes), for predicting dose from patient image contours. We show that, as an example, we are able to accurately predict the dose of intensity-modulated radiation therapy (IMRT) for prostate cancer patients, where the average Dice similarity coefficient is 0.91 when comparing the predicted vs. true isodose volumes between 0% and 100% of the prescription dose. The average value of the absolute differences in [max, mean] dose is found to be under 5% of the prescription dose; specifically, for each structure it is [1.80%, 1.03%](PTV), [1.94%, 4.22%](Bladder), [1.80%, 0.48%](Body), [3.87%, 1.79%](L Femoral Head), [5.07%, 2.55%](R Femoral Head), and [1.26%, 1.62%](Rectum) of the prescription dose. We thus managed to map a desired radiation dose distribution from a patient's PTV and OAR contours. As an additional advantage, relatively little data was used in the techniques and models described in this paper.
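The Dice similarity coefficient quoted above compares two volumes as twice their overlap divided by the sum of their sizes. The following minimal sketch applies it to isodose volumes obtained by thresholding a dose array; the per-voxel doses, the 40-unit level, and the empty-volume convention are hypothetical choices for illustration, not values from the paper.

```python
def dice(a, b):
    """Dice similarity coefficient between two sets of voxel indices."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # assumed convention: two empty volumes agree perfectly
    return 2.0 * len(a & b) / (len(a) + len(b))

def isodose_volume(doses, level):
    """Indices of voxels receiving at least `level` (same units as `doses`)."""
    return {i for i, d in enumerate(doses) if d >= level}

pred = [78.0, 60.0, 41.0, 12.0, 0.0]  # hypothetical predicted dose per voxel
true = [80.0, 55.0, 39.0, 15.0, 0.0]  # hypothetical reference dose per voxel
score = dice(isodose_volume(pred, 40.0), isodose_volume(true, 40.0))
```

Averaging such a score over a sweep of isodose levels from 0% to 100% of the prescription dose would give a single summary number comparable in spirit to the one reported.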

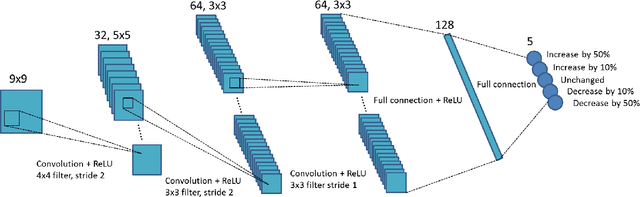

Intelligent Parameter Tuning in Optimization-based Iterative CT Reconstruction via Deep Reinforcement Learning

Nov 01, 2017

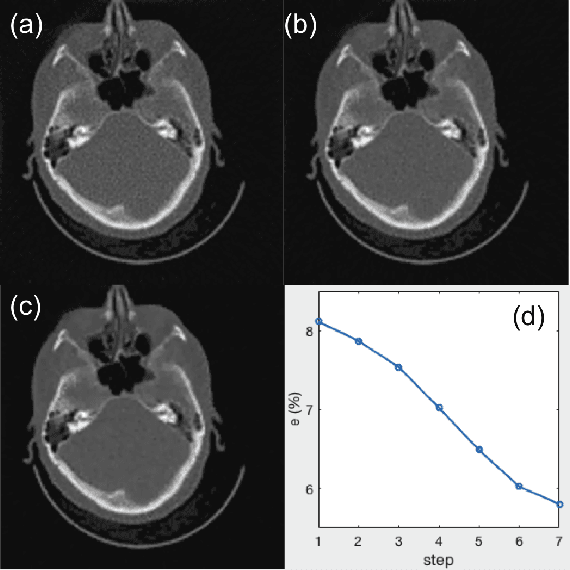

Abstract:A number of image-processing problems can be formulated as optimization problems. The objective function typically contains several terms specifically designed for different purposes. Parameters in front of these terms are used to control the relative weights among them. It is of critical importance to tune these parameters, as the quality of the solution depends on their values. Tuning parameters is a relatively straightforward task for a human, as one can intelligently determine the direction of parameter adjustment based on the solution quality. Yet manual parameter tuning is not only tedious in many cases, but becomes impractical when a number of parameters exist in a problem. Aiming at solving this problem, this paper proposes an approach that employs deep reinforcement learning to train a system that can automatically adjust parameters in a human-like manner. We demonstrate our idea in an example problem of optimization-based iterative CT reconstruction with a pixel-wise total-variation regularization term. We set up a parameter tuning policy network (PTPN), which maps a CT image patch to an output that specifies the direction and amplitude by which the parameter at the patch center is adjusted. We train the PTPN via an end-to-end reinforcement learning procedure. We demonstrate that under the guidance of the trained PTPN for parameter tuning at each pixel, reconstructed CT images attain quality similar to or better than that of images reconstructed with manually tuned parameters.
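For orientation, a reconstruction problem of the kind described is often written in a form like the one below, with a data-fidelity term plus a total-variation term whose weight varies per pixel. This is a generic sketch and not necessarily the paper's exact objective: the symbols $A$ (projection operator), $b$ (measured data), $x$ (image), and $\lambda_i$ (weight at pixel $i$) are notation assumed here.

```latex
\hat{x} \;=\; \arg\min_{x}\; \frac{1}{2}\,\lVert A x - b \rVert_2^2 \;+\; \sum_{i} \lambda_i \,\bigl\lvert (\nabla x)_i \bigr\rvert
```

In this reading, the policy network's task is to decide, for each pixel $i$, whether to raise or lower $\lambda_i$ and by how much, based on the image patch centered at that pixel.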
