Xuejun Gu

Leveraging Global Binary Masks for Structure Segmentation in Medical Images

May 13, 2022

Abstract: Deep learning (DL) models for medical image segmentation are highly sensitive to intensity variations in input images and generalize poorly because they rely primarily on pixel intensity information for inference. Acquiring sufficient training data is another challenge that limits the models' applications. We proposed to leverage the consistency of organs' anatomical shape and position information in medical images. We introduced a framework that leverages recurring anatomical patterns through global binary masks for organ segmentation. Two scenarios were studied: 1) Global binary masks were the model's (i.e., U-Net's) only input, forcing it to encode exclusively organs' position and shape information for segmentation/localization. 2) Global binary masks were incorporated as an additional channel, providing position/shape clues to mitigate training data scarcity. Two datasets of brain and heart CT images with their ground truth were split into (26:10:10) and (12:3:5) for training, validation, and test, respectively. Training exclusively on global binary masks led to Dice scores of 0.77 (0.06) and 0.85 (0.04), with average Euclidean distances of 3.12 (1.43) mm and 2.5 (0.93) mm relative to the center of mass of the ground truth for the brain and heart structures, respectively. The outcomes indicate that a surprising degree of position and shape information is encoded through global binary masks. Incorporating global binary masks led to significantly higher accuracy relative to the model trained on only CT images in small subsets of training data; the performance improved by 4.3-125.3% and 1.3-48.1% for 1-8 training cases of the brain and heart datasets, respectively. The findings imply the advantages of utilizing global binary masks for building generalizable models and compensating for training data scarcity.
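The two evaluation metrics named in this abstract, the Dice score and the Euclidean distance between centers of mass, can be illustrated with a minimal sketch. The helper names, the toy 4x4 masks, and the `spacing` parameter are assumptions made for illustration and are not taken from the paper; the authors' actual evaluation code may differ.

```python
import math

def dice(a, b):
    """Dice coefficient of two same-shaped 2D binary masks (lists of 0/1 rows)."""
    inter = sum(x & y for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    total = sum(map(sum, a)) + sum(map(sum, b))
    # Two empty masks are treated as a perfect match (a convention, not from the paper).
    return 2.0 * inter / total if total else 1.0

def center_of_mass(mask, spacing=(1.0, 1.0)):
    """Centroid (row, col) of the foreground pixels, scaled by pixel spacing in mm.

    Assumes the mask has at least one foreground pixel.
    """
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    n = len(pts)
    return (sum(r for r, _ in pts) / n * spacing[0],
            sum(c for _, c in pts) / n * spacing[1])

def centroid_distance(a, b, spacing=(1.0, 1.0)):
    """Euclidean distance between the centers of mass of two masks."""
    return math.dist(center_of_mass(a, spacing), center_of_mass(b, spacing))

# Hypothetical toy masks: the prediction is the ground truth shifted one column right.
truth = [[0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
pred = [[0, 0, 0, 0],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 0, 0]]

print(dice(truth, pred))               # overlap of 2 pixels out of 4 + 4
print(centroid_distance(truth, pred))  # one-pixel shift at 1 mm spacing
```

In this toy case half of the foreground overlaps, so the Dice score is 0.5 while the centroid distance is 1 mm, which shows why the abstract reports both an overlap and a localization measure.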

Registration-Guided Deep Learning Image Segmentation for Cone Beam CT-based Online Adaptive Radiotherapy

Aug 19, 2021

Abstract: Adaptive radiotherapy (ART), especially online ART, effectively accounts for positioning errors and anatomical changes. One key component of online ART is accurately and efficiently delineating organs at risk (OARs) and targets on online images, such as CBCT, to meet the online demands of plan evaluation and adaptation. Deep learning (DL)-based automatic segmentation has gained great success in segmenting planning CT, but its applications to CBCT yielded inferior results due to the low image quality and limited available contour labels for training. To overcome these obstacles to online CBCT segmentation, we propose a registration-guided DL (RgDL) segmentation framework that integrates image registration algorithms and DL segmentation models. The registration algorithm generates initial contours, which are used as guidance by the DL model to obtain accurate final segmentations. We implemented the proposed framework in two ways, Rig-RgDL (Rig for rigid body) and Def-RgDL (Def for deformable), using rigid body (RB) registration or deformable image registration (DIR), respectively, as the registration algorithm and U-Net as the DL model architecture. The two implementations of the RgDL framework were trained and evaluated on seven OARs in an institutional clinical Head and Neck (HN) dataset. Compared to the baseline approaches using the registration or the DL alone, RgDL achieved more accurate segmentation, as measured by higher mean Dice similarity coefficients (DSC) and other distance-based metrics. Rig-RgDL achieved a DSC of 84.5% on seven OARs on average, higher than RB or DL alone by 4.5% and 4.7%. The DSC of Def-RgDL is 86.5%, higher than DIR or DL alone by 2.4% and 6.7%. The inference time taken by the DL model to generate final segmentations of seven OARs is less than one second in RgDL. The resulting segmentation accuracy and efficiency show the promise of applying the RgDL framework for online ART.

Saliency-Guided Deep Learning Network for Automatic Tumor Bed Volume Delineation in Post-operative Breast Irradiation

May 06, 2021

Abstract: Efficient, reliable and reproducible target volume delineation is a key step in the effective planning of breast radiotherapy. However, post-operative breast target delineation is challenging as the contrast between the tumor bed volume (TBV) and normal breast tissue is relatively low in CT images. In this study, we propose to mimic the marker-guidance procedure in manual target delineation. We developed a saliency-based deep learning segmentation (SDL-Seg) algorithm for accurate TBV segmentation in post-operative breast irradiation. The SDL-Seg algorithm incorporates saliency information in the form of markers' location cues into a U-Net model. The design forces the model to encode the location-related features, which underscores regions with high saliency levels and suppresses low saliency regions. The saliency maps were generated by identifying markers on CT images. Markers' locations were then converted to probability maps using a distance transformation coupled with a Gaussian filter. Subsequently, the CT images and the corresponding saliency maps formed a multi-channel input for the SDL-Seg network. Our in-house dataset comprised 145 prone CT images from 29 post-operative breast cancer patients, who received a 5-fraction partial breast irradiation (PBI) regimen on GammaPod. The performance of the proposed method was compared against a basic U-Net. Our model achieved mean values of 76.4%, 6.76 mm, and 1.9 mm for DSC, HD95, and ASD, respectively, on the test set, with a computation time below 11 seconds per CT volume. SDL-Seg showed superior performance relative to the basic U-Net for all the evaluation metrics while preserving low computation cost. The findings demonstrate that SDL-Seg is a promising approach for improving the efficiency and accuracy of the online treatment planning procedure of PBI, such as GammaPod-based PBI.
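The idea of turning marker locations into a saliency map and pairing it with the CT image as a second input channel can be sketched roughly as follows. This is a simplification under stated assumptions: it applies a Gaussian falloff directly to the distance from the nearest marker rather than the distance transformation plus Gaussian filter the abstract mentions, and the function name, the `sigma` value, and the toy 5x5 slice are all hypothetical.

```python
import math

def saliency_map(shape, markers, sigma=2.0):
    """Return a 2D map in (0, 1] that peaks at marker positions.

    Each pixel gets exp(-d^2 / (2 sigma^2)), where d is the Euclidean
    distance (in pixels) to the nearest marker. This is an assumed stand-in
    for the paper's distance transformation coupled with a Gaussian filter.
    """
    rows, cols = shape
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            d = min(math.hypot(r - mr, c - mc) for mr, mc in markers)
            row.append(math.exp(-d * d / (2.0 * sigma * sigma)))
        out.append(row)
    return out

# Hypothetical 5x5 CT slice (all zeros here) with one marker at its center.
ct_slice = [[0.0] * 5 for _ in range(5)]
sal = saliency_map((5, 5), markers=[(2, 2)], sigma=1.0)

# Stack the image and its saliency map as a two-channel network input.
two_channel_input = [ct_slice, sal]

for row in sal:
    print(" ".join(f"{v:.2f}" for v in row))
```

The map equals 1 at the marker and decays smoothly with distance, so a network receiving it as an extra channel gets a soft location cue rather than a hard mask.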

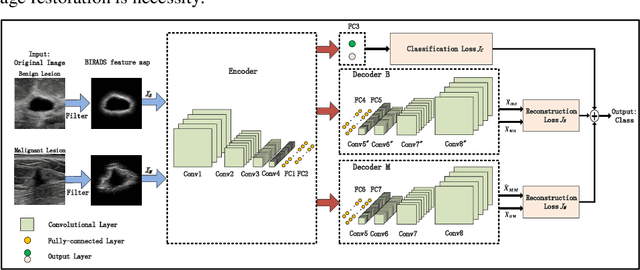

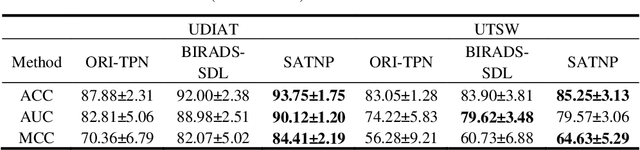

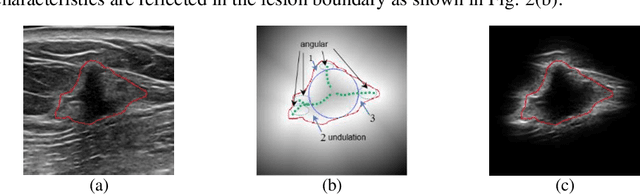

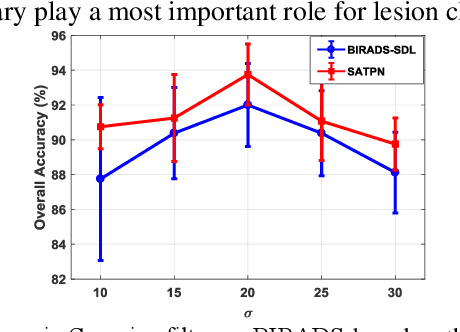

Breast Ultrasound Computer-Aided Diagnosis Using Structure-Aware Triplet Path Networks

Aug 09, 2019

Abstract: Breast ultrasound (US) is an effective imaging modality for breast cancer detection and diagnosis. The structural characteristics of breast lesions play an important role in Computer-Aided Diagnosis (CAD). In this paper, a novel structure-aware triplet path network (SATPN) was designed to integrate classification and two image reconstruction tasks to achieve accurate diagnosis on US images with a small training dataset. Specifically, we enhance clinically-approved breast lesion structure characteristics by converting original breast US images to BIRADS-oriented feature maps (BFMs) with a distance-transformation coupled Gaussian filter. Then, the converted BFMs were used as the inputs of SATPN, which combined a lesion classification task with two unsupervised stacked convolutional Auto-Encoder (SCAE) networks for benign and malignant image reconstruction tasks, independently. We trained the SATPN with an alternative learning strategy by balancing image reconstruction error and classification label prediction error. At the test stage, the lesion label was determined by weighted voting with reconstruction error and label prediction error. We compared the performance of the SATPN with TPN using original images as input and our previously developed semi-supervised deep learning methods using BFMs as inputs. Experimental results on two breast US datasets showed that SATPN ranked the best among the three networks, with classification accuracy around 93.5%. These findings indicated that SATPN is promising for effective breast US lesion CAD using small datasets.
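The test-stage decision described in this abstract combines a classifier output with the reconstruction errors of the benign and malignant autoencoders. The abstract does not give the voting formula, so the sketch below is only one plausible form: the normalization of the errors, the weight `w`, the 0.5 threshold, and all names are assumptions for illustration.

```python
def weighted_vote(p_malignant, err_benign, err_malignant, w=0.5):
    """Combine classifier probability and reconstruction errors into one score.

    p_malignant   : classifier's predicted probability of malignancy.
    err_benign    : reconstruction error from the benign autoencoder.
    err_malignant : reconstruction error from the malignant autoencoder.
    w             : hypothetical weight on the classifier vote (0..1).

    A lesion that the malignant autoencoder reconstructs better (lower error)
    is treated as evidence for malignancy. This formula is an assumption,
    not the rule used in the paper.
    """
    recon_vote = err_benign / (err_benign + err_malignant)
    score = w * p_malignant + (1.0 - w) * recon_vote
    label = "malignant" if score >= 0.5 else "benign"
    return score, label

# Hypothetical case: the classifier leans benign (0.4), but the malignant
# autoencoder reconstructs the image three times better than the benign one.
score, label = weighted_vote(p_malignant=0.4, err_benign=3.0, err_malignant=1.0)
print(score, label)
```

Here the reconstruction evidence outweighs the classifier's slight lean toward benign, which illustrates how a second source of evidence can change the final label.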

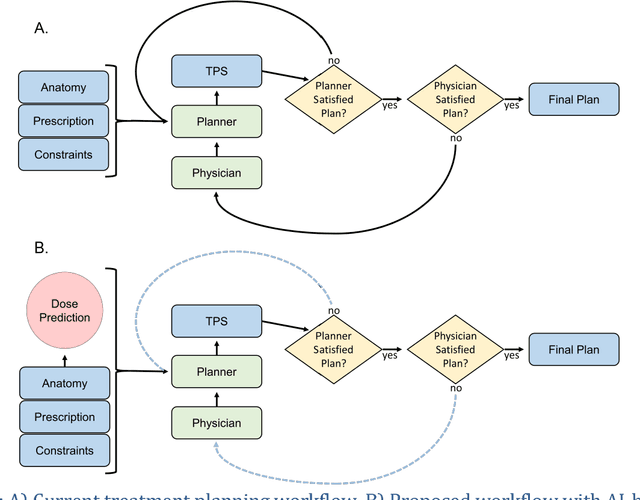

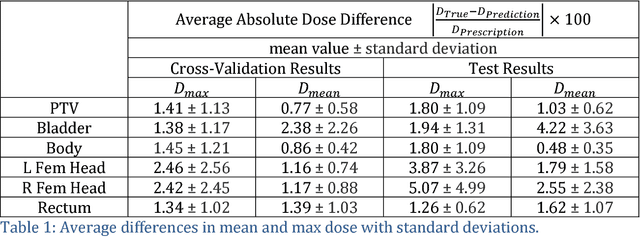

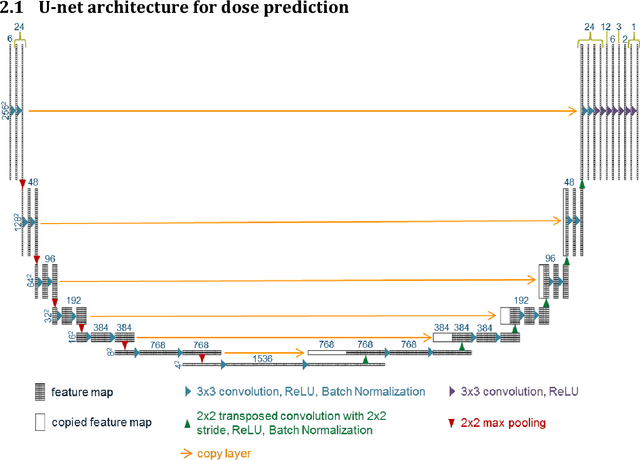

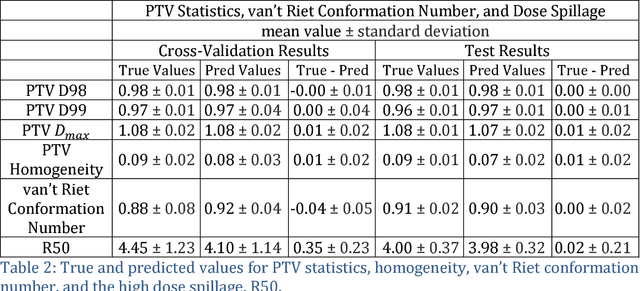

Dose Prediction with U-net: A Feasibility Study for Predicting Dose Distributions from Contours using Deep Learning on Prostate IMRT Patients

May 23, 2018

Abstract: With the advancement of treatment modalities in radiation therapy for cancer patients, outcomes have improved, but at the cost of increased treatment plan complexity and planning time. The accurate prediction of dose distributions would alleviate this issue by guiding clinical plan optimization to save time and maintain high quality plans. We have modified a convolutional deep network model, U-net (originally designed for segmentation purposes), for predicting dose from patient image contours. We show that, as an example, we are able to accurately predict the dose of intensity-modulated radiation therapy (IMRT) for prostate cancer patients, where the average Dice similarity coefficient is 0.91 when comparing the predicted vs. true isodose volumes between 0% and 100% of the prescription dose. The average value of the absolute differences in [max, mean] dose is found to be under 5% of the prescription dose; specifically, for each structure it is [1.80%, 1.03%] (PTV), [1.94%, 4.22%] (Bladder), [1.80%, 0.48%] (Body), [3.87%, 1.79%] (L Femoral Head), [5.07%, 2.55%] (R Femoral Head), and [1.26%, 1.62%] (Rectum) of the prescription dose. We thus managed to map a desired radiation dose distribution from a patient's PTV and OAR contours. As an additional advantage, relatively little data was used in the techniques and models described in this paper.
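Comparing predicted and true isodose volumes with a Dice coefficient can be sketched as below. The sketch assumes an isodose volume at a given level is the set of voxels receiving at least that percentage of the prescription dose, and that scores are averaged over a list of levels; the function name, the four-voxel dose arrays, and the chosen levels are hypothetical, not values from the paper.

```python
def mean_isodose_dice(pred, true, prescription, levels):
    """Average Dice between predicted and true isodose volumes.

    pred, true   : flat lists of voxel doses (same length, same units).
    prescription : prescription dose in those units.
    levels       : isodose levels as percentages of the prescription dose.

    Assumption: the isodose volume at level L is every voxel with
    dose >= L% of the prescription dose.
    """
    scores = []
    for pct in levels:
        threshold = prescription * pct / 100.0
        p = [d >= threshold for d in pred]
        t = [d >= threshold for d in true]
        inter = sum(a and b for a, b in zip(p, t))
        total = sum(p) + sum(t)
        # Two empty volumes count as a perfect match (a convention).
        scores.append(2.0 * inter / total if total else 1.0)
    return sum(scores) / len(scores)

# Hypothetical four-voxel example with a prescription dose of 100 units.
true_dose = [10.0, 50.0, 90.0, 100.0]
pred_dose = [10.0, 40.0, 95.0, 100.0]
result = mean_isodose_dice(pred_dose, true_dose, prescription=100.0,
                           levels=[0, 50, 100])
print(result)
```

In this toy case the 0% and 100% volumes agree exactly and only the 50% volume differs (Dice 0.8), so the mean reflects where along the dose range the prediction deviates.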

Soft-NeuroAdapt: A 3-DOF Neuro-Adaptive Patient Pose Correction System For Frameless and Maskless Cancer Radiotherapy

Sep 22, 2017

Abstract: Precise patient positioning is fundamental to successful removal of malignant tumors during treatment of head and neck cancers. Errors in patient positioning have been known to damage critical organs and cause complications. To better address issues of patient positioning and motion, we introduce a 3-DOF neuro-adaptive soft robot, called Soft-NeuroAdapt, to correct deviations along 3 axes. The robot consists of inflatable air bladders that adaptively control head deviations from target while ensuring patient safety and comfort. The neuro-adaptive controller combines a state feedback component, a feedforward regulator, and a neural network that ensures correct adaptation. States are measured by a 3D vision system. We validate Soft-NeuroAdapt on a 3D printed head-and-neck dummy, and demonstrate that the controller provides adaptive actuation that compensates for intrafractional deviations in patient positioning.

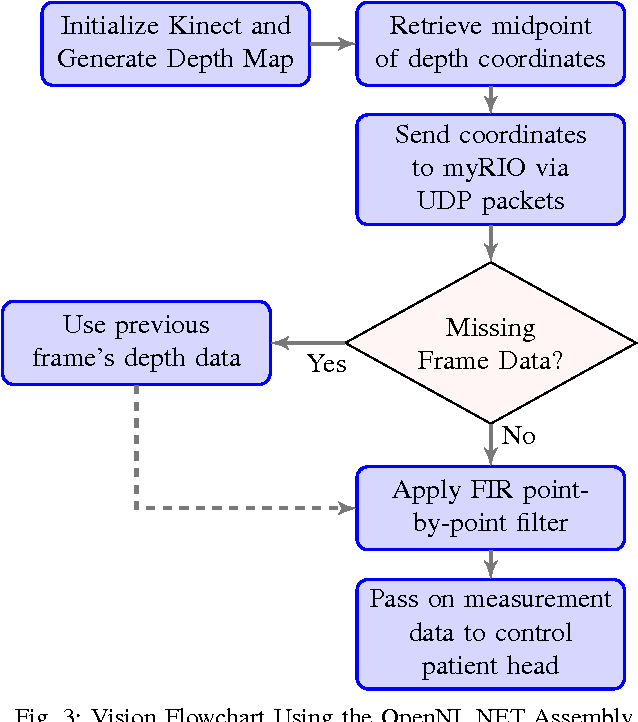

Vision-based Control of a Soft Robot for Maskless Head and Neck Cancer Radiotherapy

Oct 05, 2016

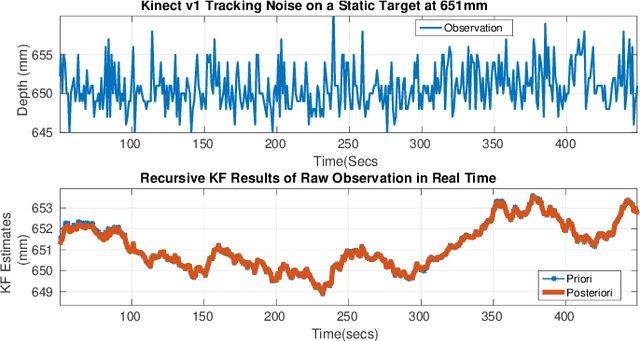

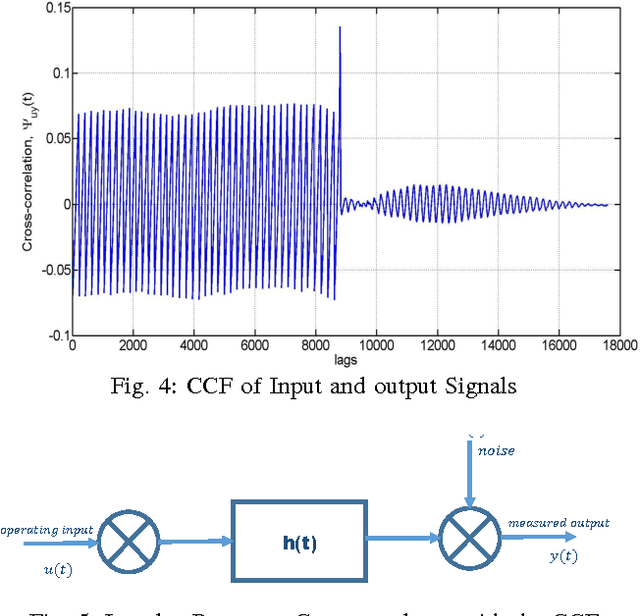

Abstract: This work presents an ongoing investigation of the control of a pneumatic soft-robot actuator addressing accurate patient positioning systems in maskless head and neck cancer radiotherapy. We employ two RGB-D sensors in a sensor fusion scheme to better estimate a patient's head pitch motion. A system identification prediction error model is used to obtain a linear time-invariant state space model. We then use the model to design a linear quadratic Gaussian feedback controller to manipulate the patient head position based on sensed head pitch motion. Experiments demonstrate the success of our approach.
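A linear quadratic Gaussian (LQG) controller pairs a linear quadratic regulator with a Kalman filter that estimates the state from measurements. The sketch below shows this structure for a scalar discrete-time system x[k+1] = a x[k] + b u[k], y[k] = c x[k]. The values of a, b, c and the cost and noise weights are hypothetical placeholders, not the identified head-pitch model from the paper, and the simulation omits noise so that it is deterministic.

```python
def riccati_gain(a, b, q, r, iters=200):
    """Iterate the scalar discrete-time Riccati equation to steady state.

    Returns a*b*P / (r + b^2 * P). With (b, q, r) as input gain, state cost,
    and control cost, this is the LQR feedback gain K. By duality, calling it
    with (c, w, v) as output gain, process noise variance, and measurement
    noise variance gives the predictor-form Kalman gain L.
    """
    p = q
    for _ in range(iters):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    return a * b * p / (r + b * b * p)

# Hypothetical scalar model and weights (assumptions for illustration only).
a, b, c = 1.0, 1.0, 1.0
K = riccati_gain(a, b, q=1.0, r=1.0)  # regulator gain
L = riccati_gain(a, c, q=1.0, r=1.0)  # estimator gain (w = v = 1)

# Noise-free closed-loop simulation: the true state starts at 1, the estimate at 0.
x, x_hat = 1.0, 0.0
for _ in range(50):
    u = -K * x_hat                                    # control acts on the estimate
    y = c * x                                         # measurement
    x_hat = a * x_hat + b * u + L * (y - c * x_hat)   # predictor update
    x = a * x + b * u                                 # plant update

print(K, L, x)
```

For these placeholder values both gains converge to about 0.618, and the state is driven toward zero even though the controller only ever sees the estimate, which is the separation structure an LQG design relies on.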

Nonlinear Systems Identification Using Deep Dynamic Neural Networks

Oct 05, 2016

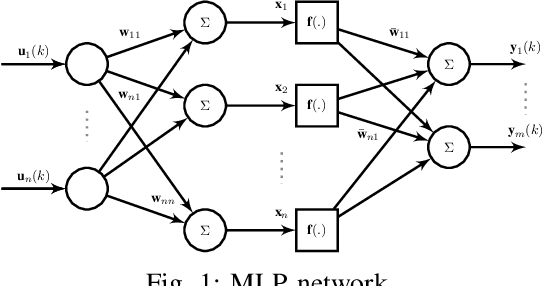

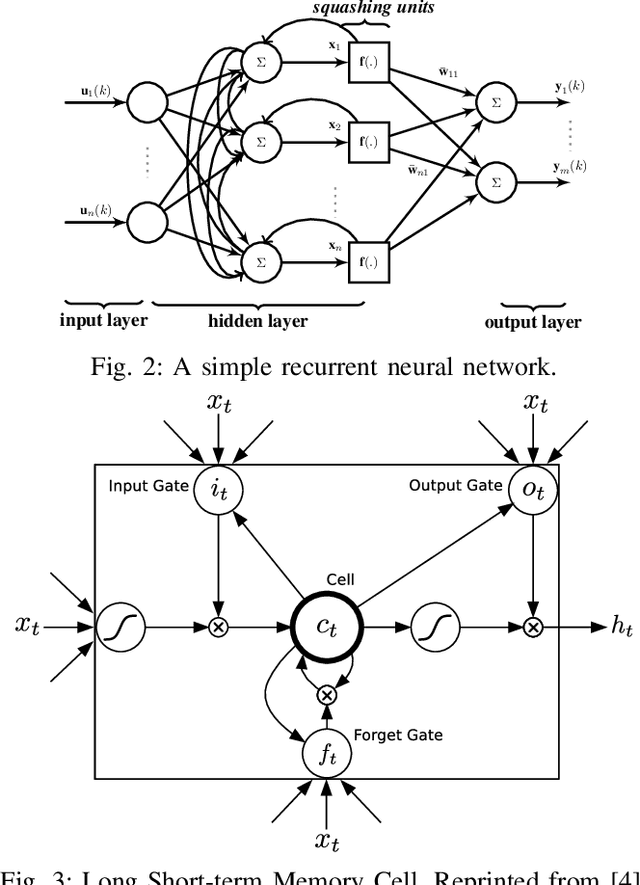

Abstract: Neural networks are known to be effective function approximators. Recently, deep neural networks have proven to be very effective in pattern recognition, classification tasks and human-level control to model highly nonlinear real-world systems. This paper investigates the effectiveness of deep neural networks in the modeling of dynamical systems with complex behavior. Three deep neural network structures are trained on sequential data, and we investigate the effectiveness of these networks in modeling associated characteristics of the underlying dynamical systems. We carry out similar evaluations on select publicly available system identification datasets. We demonstrate that deep neural networks are effective model estimators from input-output data.

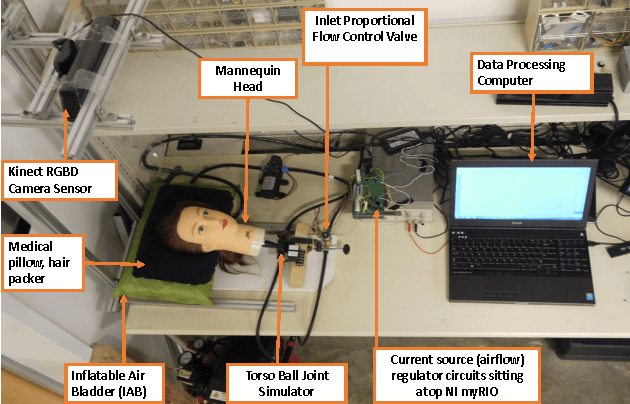

A Real-Time Soft Robotic Patient Positioning System for Maskless Head-and-Neck Cancer Radiotherapy: An Initial Investigation

Sep 19, 2015

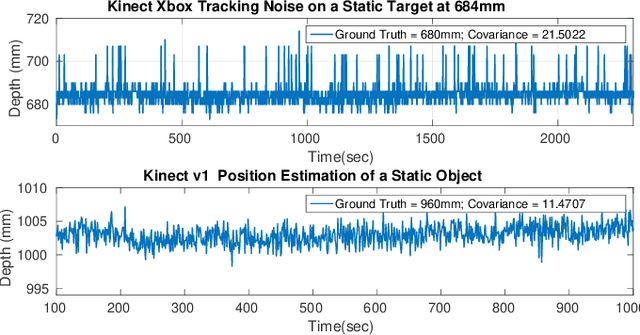

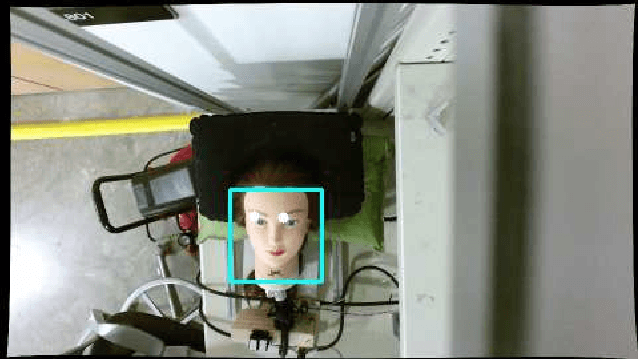

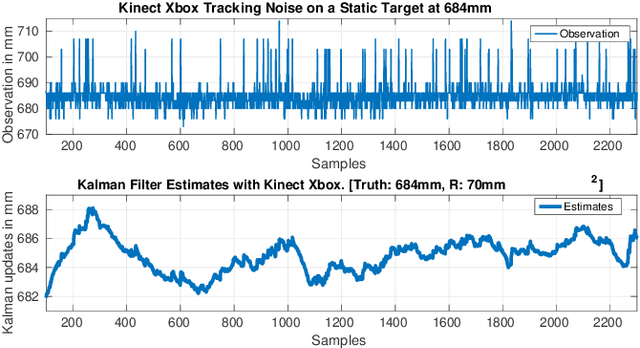

Abstract: We present an initial examination of a novel approach to accurately position a patient during head and neck intensity-modulated radiotherapy (IMRT). Position-based visual servoing of a radio-transparent soft robot is used to control the flexion/extension cranial motion of a manikin head. A Kinect RGB-D camera is used to measure head position, and the error between the sensed and desired position is used to control a pneumatic system which regulates pressure within an inflatable air bladder (IAB). Results show that the system is capable of controlling head motion to within 2 mm with respect to a reference trajectory. This establishes proof-of-concept that using multiple IABs and actuators can improve cancer treatment.
