Deep High-Resolution Network for Low Dose X-ray CT Denoising

Feb 01, 2021

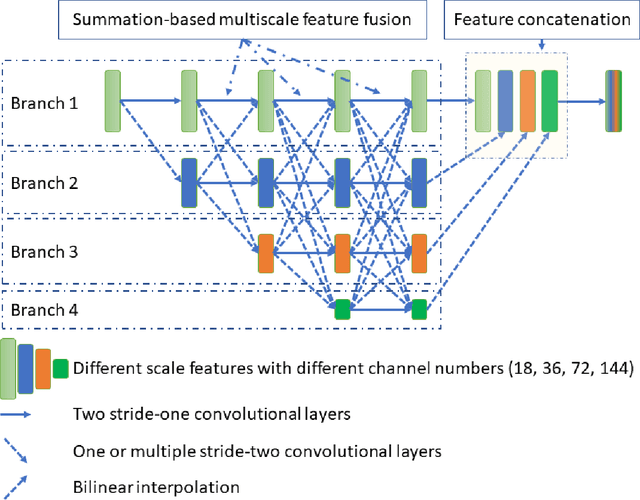

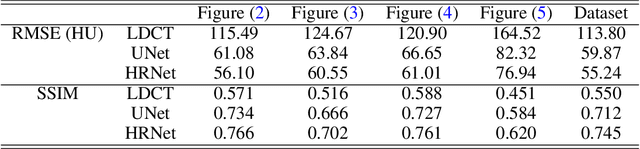

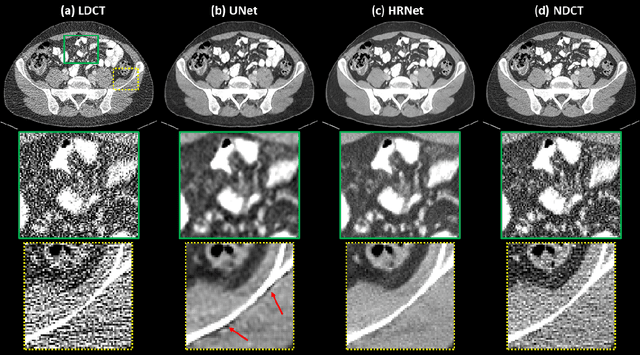

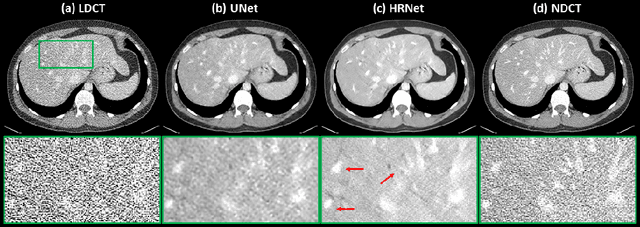

Low-dose computed tomography (LDCT) is clinically desirable because it reduces the radiation delivered to patients. However, the quality of LDCT images is often sub-optimal because of the inevitable strong quantum noise. Inspired by their unprecedented success in computer vision, deep learning (DL)-based techniques have been used for LDCT denoising. Despite the promising noise removal ability of DL models, the resolution of DL-denoised images has been observed to be compromised, which decreases their clinical value. To relieve this problem, in this work we developed a more effective denoiser by introducing a high-resolution network (HRNet). Since HRNet consists of multiple branches of subnetworks that extract multiscale features which are later fused together, the quality of the generated features can be substantially enhanced, leading to improved denoising performance. Experimental results demonstrated that the HRNet-based denoiser preserves image resolution better than the benchmarked UNet-based denoiser while achieving comparable, if not better, noise suppression. In terms of root-mean-squared error (RMSE) and structural similarity index (SSIM), the HRNet-based denoiser improves the values from 113.80/0.550 (LDCT) to 55.24/0.745, compared with 59.87/0.712 for the UNet-based denoiser.
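
To put the reported RMSE figures in perspective, the following is a minimal sketch in plain Python. The helper names `rmse` and `relative_reduction` are hypothetical and are not taken from the paper's code. The `rmse` function assumes images are supplied as flat sequences of pixel values, which may differ from how the authors computed the metric. Only the three RMSE values are taken from the abstract.

```python
import math


def rmse(reference, estimate):
    """Root-mean-squared error between two equally sized pixel sequences.

    Assumption: images are flattened to 1-D sequences of numbers.
    """
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same number of pixels")
    squared = [(r - e) ** 2 for r, e in zip(reference, estimate)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline, value):
    """Fraction by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline


# RMSE values as reported in the abstract.
reported_rmse = {"LDCT": 113.80, "UNet": 59.87, "HRNet": 55.24}

for name in ("UNet", "HRNet"):
    drop = relative_reduction(reported_rmse["LDCT"], reported_rmse[name])
    print(f"{name}: RMSE reduced by {drop:.1%} relative to LDCT")
```

Under these assumptions, the reported numbers correspond to an RMSE reduction of roughly 47.4% for the UNet-based denoiser and 51.5% for the HRNet-based denoiser, relative to the unprocessed LDCT images.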
