Vladimir Ivan

Online Multi-Contact Receding Horizon Planning via Value Function Approximation

Jun 12, 2023

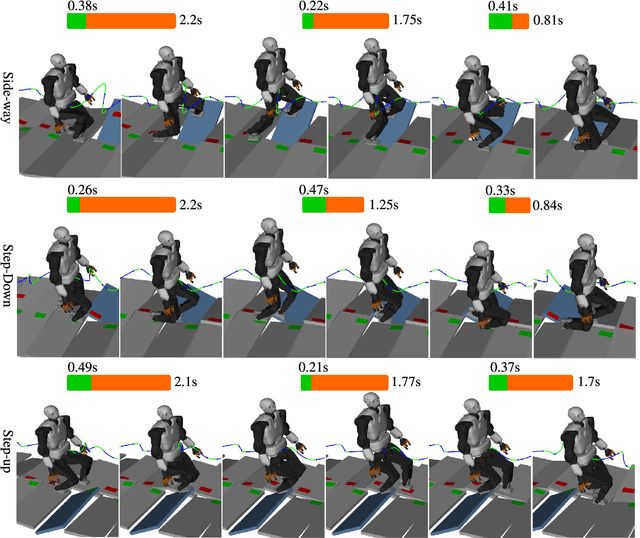

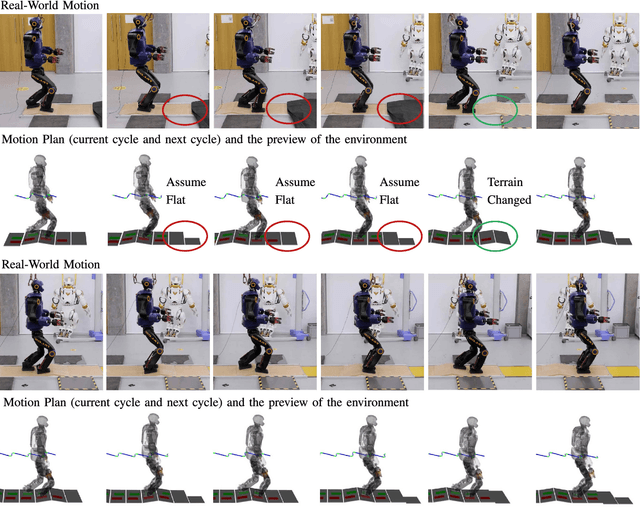

Abstract: Planning multi-contact motions in a receding horizon fashion requires a value function to guide the planning with respect to the future, e.g., building momentum to traverse large obstacles. Traditionally, the value function is approximated by computing trajectories in a prediction horizon (never executed) that foresees the future beyond the execution horizon. However, given the non-convex dynamics of multi-contact motions, this approach is computationally expensive. To enable online Receding Horizon Planning (RHP) of multi-contact motions, we find efficient approximations of the value function. Specifically, we propose a trajectory-based and a learning-based approach. In the former, namely RHP with Multiple Levels of Model Fidelity, we approximate the value function by computing the prediction horizon with a convex relaxed model. In the latter, namely Locally-Guided RHP, we learn an oracle to predict local objectives for locomotion tasks, and we use these local objectives to construct local value functions for guiding a short-horizon RHP. We evaluate both approaches in simulation by planning centroidal trajectories of a humanoid robot walking on moderate slopes, and on large slopes where the robot cannot maintain static balance. Our results show that locally-guided RHP achieves the best computation efficiency (95%-98.6% of cycles converge online). This computation advantage enables us to demonstrate online receding horizon planning of our real-world humanoid robot Talos walking in dynamic environments that change on-the-fly.

Topology-Based MPC for Automatic Footstep Placement and Contact Surface Selection

Mar 24, 2023

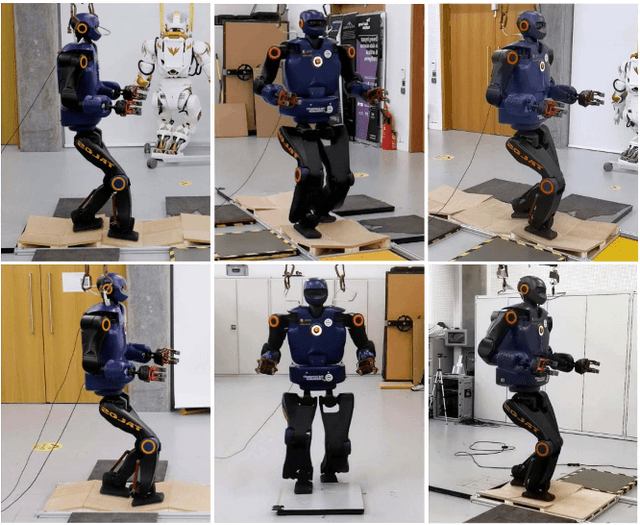

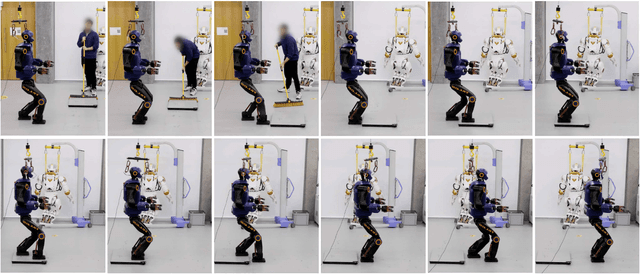

Abstract: State-of-the-art approaches to footstep planning assume reduced-order dynamics when solving the combinatorial problem of selecting contact surfaces in real time. However, in exchange for computational efficiency, these approaches ignore joint torque limits and limb dynamics. In this work, we address these limitations by presenting a topology-based approach that enables Model Predictive Control (MPC) to simultaneously plan full-body motions, torque commands, footstep placements, and contact surfaces in real time. To determine if a robot's foot is inside a contact surface, we borrow the winding number concept from topology. We then use this winding number and potential field to create a contact-surface penalty function. By using this penalty function, MPC can select a contact surface from all candidate surfaces in the vicinity and determine footstep placements within it. We demonstrate the benefits of our approach by showing the impact of considering full-body dynamics, which includes joint torque limits and limb dynamics, on the selection of footstep placements and contact surfaces. Furthermore, we validate the feasibility of deploying our topology-based approach in an MPC scheme and explore its potential capabilities through a series of experimental and simulation trials.

* 7 pages, 6 figures
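
As a rough illustration of the winding number idea mentioned in the abstract above, the sketch below tests whether a 2D point lies inside a polygon by summing the signed angles that each edge subtends at the point. This is only the textbook winding number test, not the paper's contact-surface penalty function (which also involves a potential field). The function names `winding_number` and `is_inside` and the planar polygon representation are assumptions made for this example.

```python
import math

def winding_number(point, polygon):
    """Number of times the closed polygon winds around the point.

    polygon is a list of (x, y) vertices in order; the last vertex is
    implicitly connected back to the first. The result is undefined when
    the point lies exactly on the boundary.
    """
    px, py = point
    total_angle = 0.0
    n = len(polygon)
    for i in range(n):
        # Vectors from the query point to the two endpoints of this edge.
        ax, ay = polygon[i][0] - px, polygon[i][1] - py
        bx, by = polygon[(i + 1) % n][0] - px, polygon[(i + 1) % n][1] - py
        # Signed angle between them: atan2(cross product, dot product).
        total_angle += math.atan2(ax * by - ay * bx, ax * bx + ay * by)
    return total_angle / (2.0 * math.pi)

def is_inside(point, polygon):
    """For a simple polygon the winding number is +1 or -1 inside, 0 outside."""
    return abs(winding_number(point, polygon)) > 0.5

# Hypothetical contact surface: a unit square.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
inside_centre = is_inside((0.5, 0.5), square)
inside_far = is_inside((2.0, 0.5), square)
```

Because the winding number is an integer away from the boundary, it gives a clear inside/outside signal; a smooth penalty suitable for a gradient-based solver would need additional terms beyond this test.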

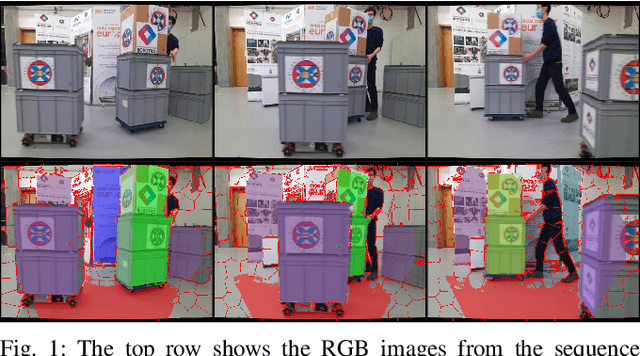

RGB-D-Inertial SLAM in Indoor Dynamic Environments with Long-term Large Occlusion

Mar 23, 2023

Abstract: This work presents a novel RGB-D-inertial dynamic SLAM method that can enable accurate localisation when the majority of the camera view is occluded by multiple dynamic objects over a long period of time. Most dynamic SLAM approaches either remove dynamic objects as outliers when they account for a minor proportion of the visual input, or detect dynamic objects using semantic segmentation before camera tracking. Therefore, dynamic objects that cause large occlusions are difficult to detect without prior information. The remaining visual information from the static background is also not enough to support localisation when large occlusion lasts for a long period. To overcome these problems, our framework presents a robust visual-inertial bundle adjustment that simultaneously tracks the camera, estimates cluster-wise dense segmentation of dynamic objects and maintains a static sparse map by combining dense and sparse features. The experimental results demonstrate that our method achieves promising localisation and object segmentation performance compared to other state-of-the-art methods in the scenario of long-term large occlusion.

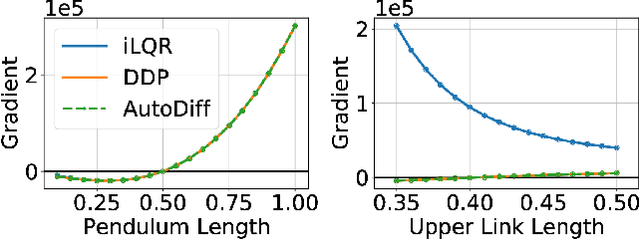

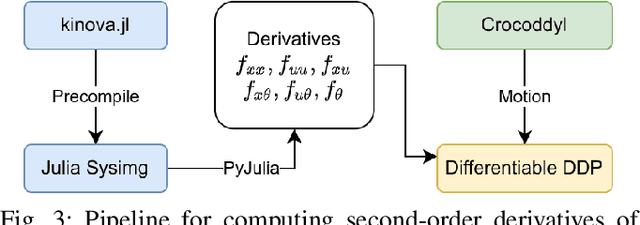

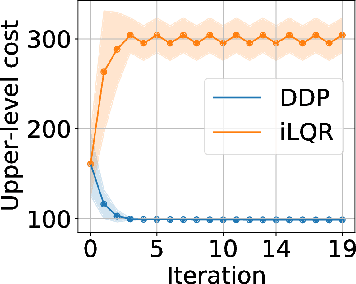

Differentiable Optimal Control via Differential Dynamic Programming

Sep 02, 2022

Abstract: Robot design optimization, imitation learning and system identification share a common problem that requires optimization over robot or task parameters at the same time as optimizing the robot motion. To solve these problems, we can use differentiable optimal control for which the gradients of the robot's motion with respect to the parameters are required. We propose a method to efficiently compute these gradients analytically via the differential dynamic programming (DDP) algorithm using sensitivity analysis (SA). We show that we must include second-order dynamics terms when computing the gradients. However, we do not need to include them when computing the motion. We validate our approach on the pendulum and double pendulum systems. Furthermore, we compare against using the derivatives of the iterative linear quadratic regulator (iLQR), which ignores these second-order terms everywhere, on a co-design task for the Kinova arm, where we optimize the link lengths of the robot for a target reaching task. We show that optimizing using iLQR gradients diverges as ignoring the second-order dynamics affects the computation of the derivatives. Instead, optimizing using DDP gradients converges to the same optimum for a range of initial designs, allowing our formulation to scale to complex systems.

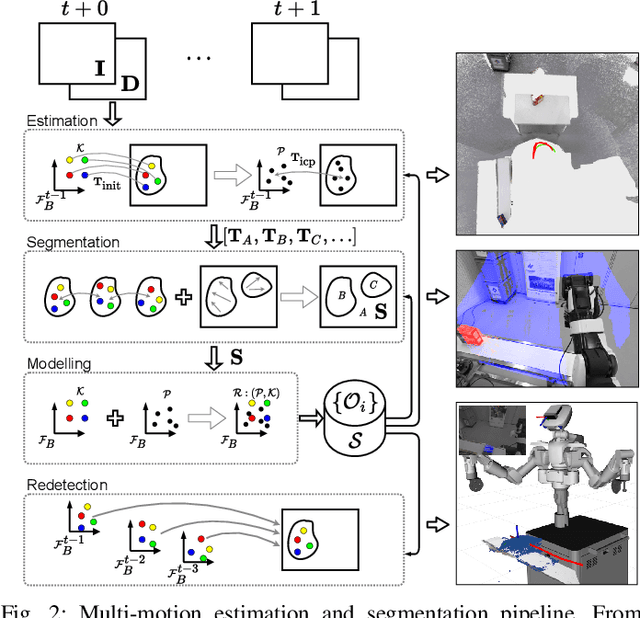

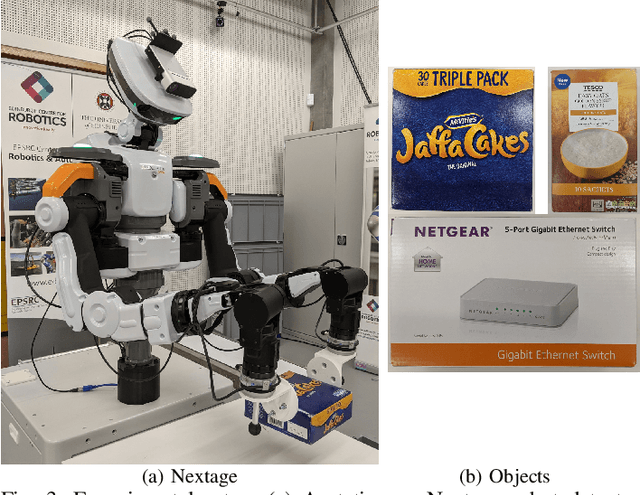

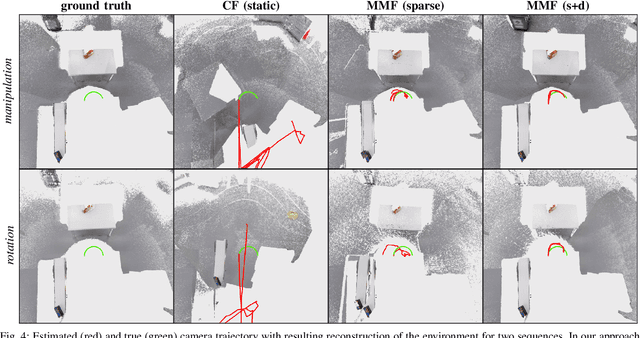

Sparse-Dense Motion Modelling and Tracking for Manipulation without Prior Object Models

Apr 25, 2022

Abstract: This work presents an approach for modelling and tracking previously unseen objects for robotic grasping tasks. Using the motion of objects in a scene, our approach segments rigid entities from the scene and continuously tracks them to create a dense and sparse model of the object and the environment. While the dense tracking enables interaction with these models, the sparse tracking makes this robust against fast movements and allows redetection of already modelled objects. The evaluation on a dual-arm grasping task demonstrates that our approach 1) enables a robot to detect new objects online without a prior model and to grasp these objects using only a simple parameterisable geometric representation, and 2) is much more robust than state-of-the-art methods.

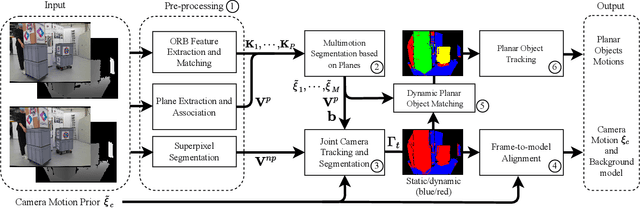

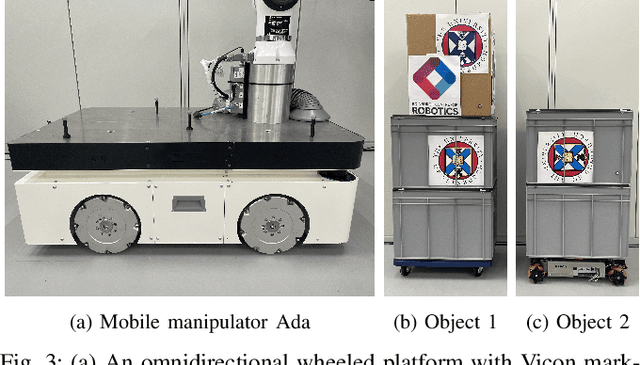

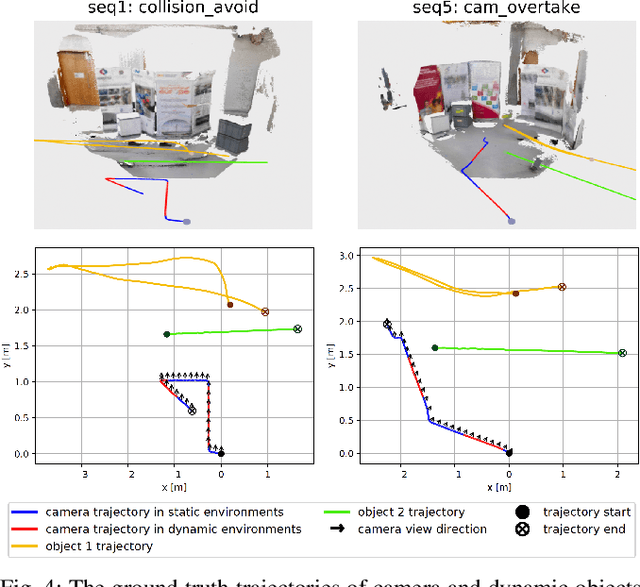

RGB-D SLAM in Indoor Planar Environments with Multiple Large Dynamic Objects

Mar 06, 2022

Abstract: This work presents a novel dense RGB-D SLAM approach for dynamic planar environments that enables simultaneous multi-object tracking, camera localisation and background reconstruction. Previous dynamic SLAM methods either rely on semantic segmentation to directly detect dynamic objects, or assume that dynamic objects occupy a smaller proportion of the camera view than the static background and can, therefore, be removed as outliers. Our approach, however, enables dense SLAM when the camera view is largely occluded by multiple dynamic objects with the aid of a camera motion prior. The dynamic planar objects are separated by their different rigid motions and tracked independently. The remaining dynamic non-planar areas are removed as outliers and not mapped into the background. The evaluation demonstrates that our approach outperforms the state-of-the-art methods in terms of localisation, mapping, dynamic segmentation and object tracking. We also demonstrate its robustness to large drift in the camera motion prior.

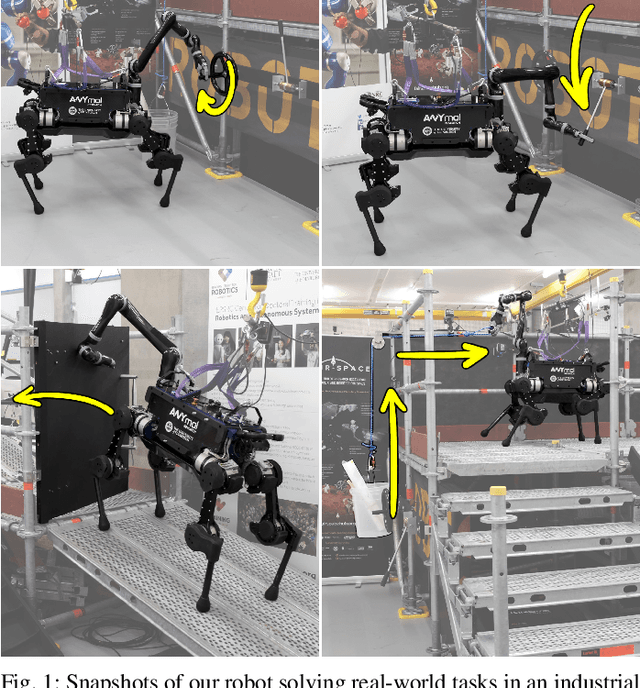

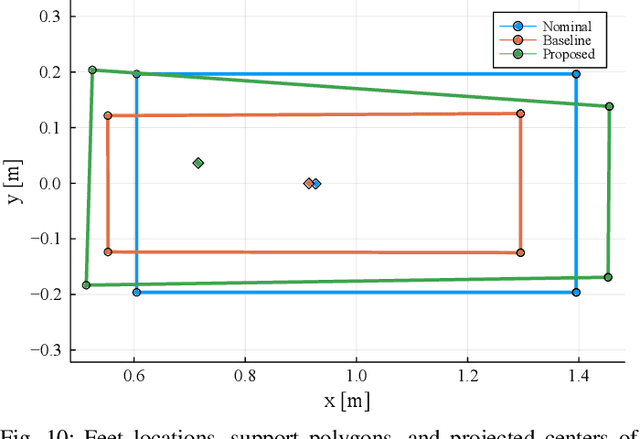

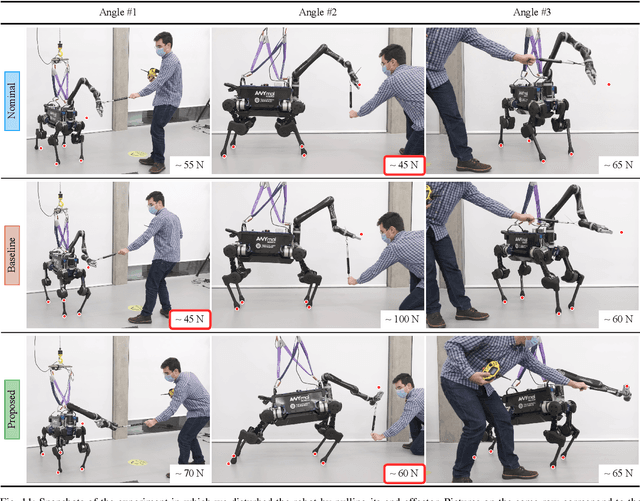

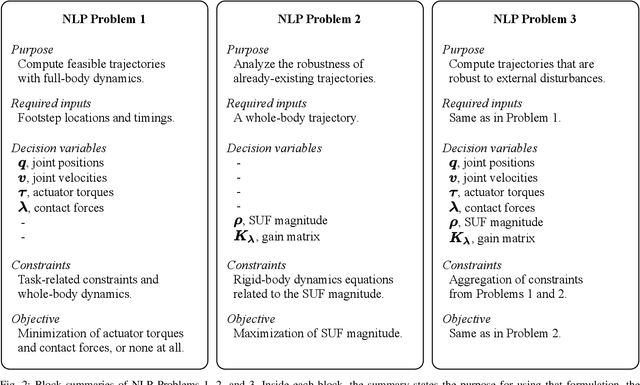

RoLoMa: Robust Loco-Manipulation for Quadruped Robots with Arms

Mar 02, 2022

Abstract: Deployment of robotic systems in the real world requires a certain level of robustness in order to deal with uncertainty factors, such as mismatches in the dynamics model, noise in sensor readings, and communication delays. Some approaches tackle these issues reactively at the control stage. However, regardless of the controller, online motion execution can only be as robust as the system capabilities allow at any given state. This is why it is important to have good motion plans to begin with, where robustness is considered proactively. To this end, we propose a metric (derived from first principles) for representing robustness against external disturbances. We then use this metric within our trajectory optimization framework for solving complex loco-manipulation tasks. Through our experiments, we show that trajectories generated using our approach can resist a greater range of forces originating from any possible direction. By using our method, we can compute trajectories that solve tasks as effectively as before, with the added benefit of being able to counteract stronger disturbances in worst-case scenarios.

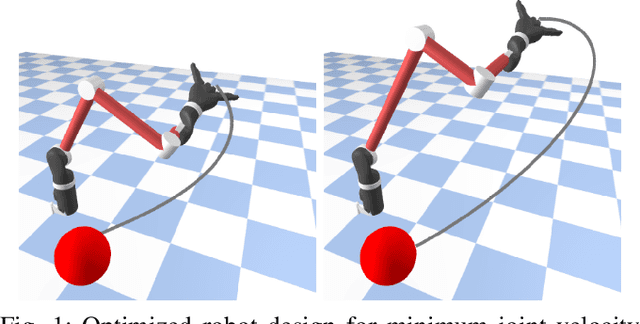

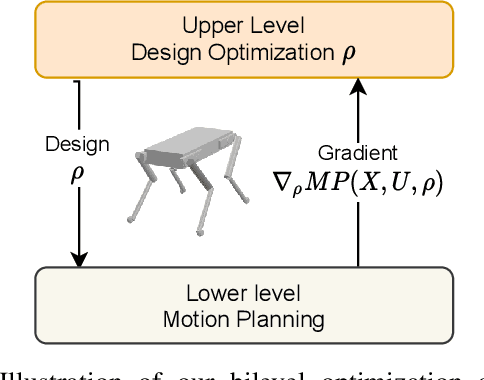

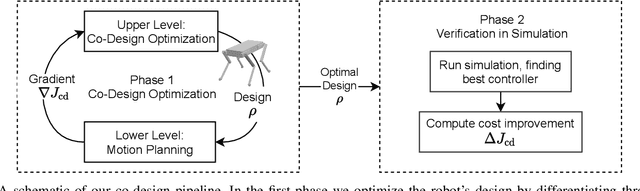

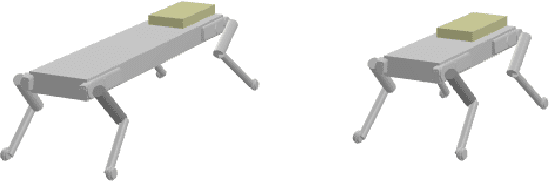

Co-Designing Robots by Differentiating Motion Solvers

Mar 08, 2021

Abstract: We present a novel algorithm for the computational co-design of legged robots and dynamic maneuvers. Current state-of-the-art approaches are based on random sampling or concurrent optimization. A few recently proposed methods explore the relationship between the gradient of the optimal motion and robot design. Inspired by these approaches, we propose a bilevel optimization approach that exploits the derivatives of the motion planning sub-problem (the inner level) without simplifying assumptions on its structure. Our approach can quickly optimize the robot's morphology while considering its full dynamics, joint limits and physical constraints such as friction cones. It has a faster convergence rate and greater scalability for larger design problems than state-of-the-art approaches based on sampling methods. It also allows us to handle constraints such as the actuation limits, which are important for co-designing dynamic maneuvers. We demonstrate these capabilities by studying jumping and trotting gaits under different design metrics and verify our results in a physics simulator. For these cases, our algorithm converges in less than a third of the number of iterations needed for sampling approaches, and the computation time scales linearly.

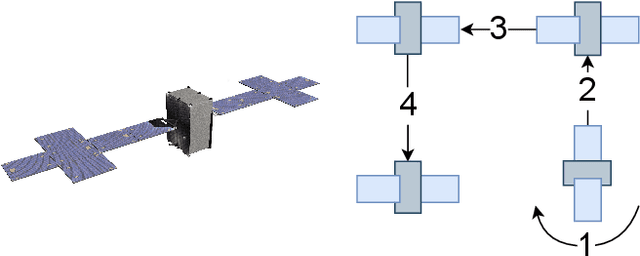

Sparsity-Inducing Optimal Control via Differential Dynamic Programming

Nov 14, 2020

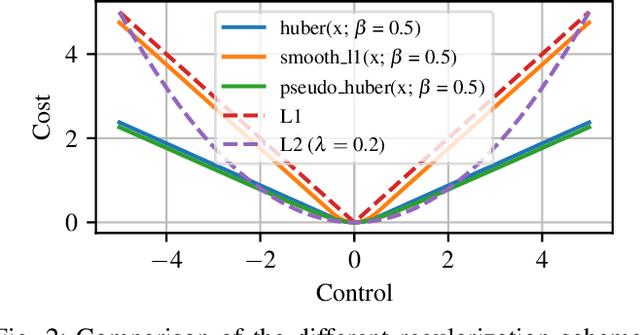

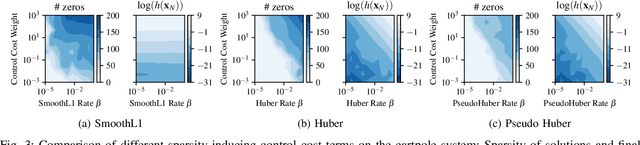

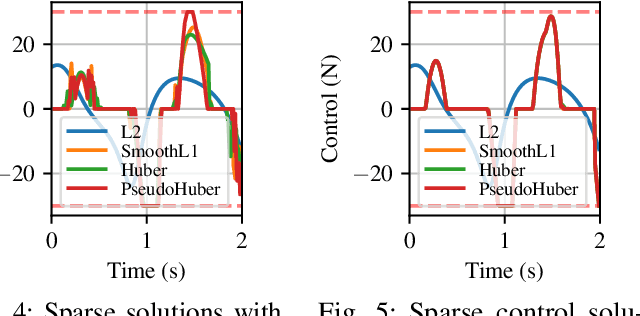

Abstract: Optimal control is a popular approach to synthesize highly dynamic motion. Commonly, L2 regularization is used on the control inputs in order to minimize energy used and to ensure smoothness of the control inputs. However, for some systems, such as satellites, the control needs to be applied in sparse bursts due to how the propulsion system operates. In this paper, we study approaches to induce sparsity in optimal control solutions, namely via smooth L1 and Huber regularization penalties. We apply these loss terms to state-of-the-art Differential Dynamic Programming (DDP)-based solvers to create a family of sparsity-inducing optimal control methods. We analyze and compare the effect of the different losses on inducing sparsity, their numerical conditioning, their impact on convergence, and discuss hyperparameter settings. We demonstrate our method in simulation and hardware experiments on canonical dynamics systems, control of satellites, and the NASA Valkyrie humanoid robot. We provide an implementation of our method and all examples for reproducibility on GitHub.
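
To make the contrast between the regularizers named in the abstract above concrete, here is a minimal sketch of scalar L2, Huber and smooth L1 penalties on a single control input. The abstract does not give the exact formulas used in the paper, so the forms below are common textbook choices and should be read as assumptions: the Huber loss with threshold `delta`, and `sqrt(u^2 + alpha^2) - alpha` as one smooth approximation of `|u|`. The function names and default parameter values are likewise hypothetical.

```python
import math

def l2_penalty(u):
    """Quadratic penalty: grows fast for large inputs, nearly flat around zero."""
    return 0.5 * u * u

def huber_penalty(u, delta=1.0):
    """Quadratic for |u| <= delta, linear beyond; continuous at the switch."""
    a = abs(u)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)

def smooth_l1_penalty(u, alpha=1e-2):
    """Differentiable approximation of |u| (assumed form); exact as alpha -> 0."""
    return math.sqrt(u * u + alpha * alpha) - alpha

# Compare how each penalty treats a small and a large control input.
small_u, large_u = 0.1, 3.0
costs = {
    "l2": (l2_penalty(small_u), l2_penalty(large_u)),
    "huber": (huber_penalty(small_u), huber_penalty(large_u)),
    "smooth_l1": (smooth_l1_penalty(small_u), smooth_l1_penalty(large_u)),
}
```

The L1-like penalties charge small inputs proportionally more than the quadratic one does, which pushes an optimizer to drive small controls to zero instead of spreading effort thinly over time; both variants stay differentiable at zero, which matters for derivative-based solvers such as DDP.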

A Passive Navigation Planning Algorithm for Collision-free Control of Mobile Robots

Nov 01, 2020

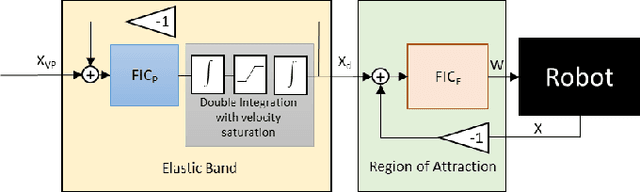

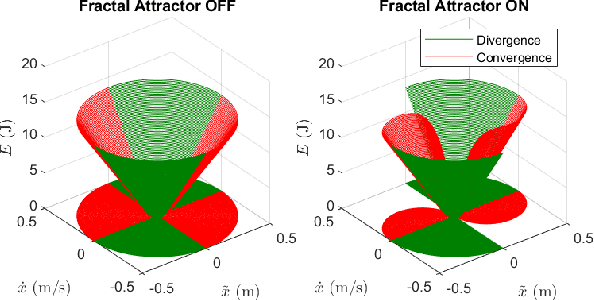

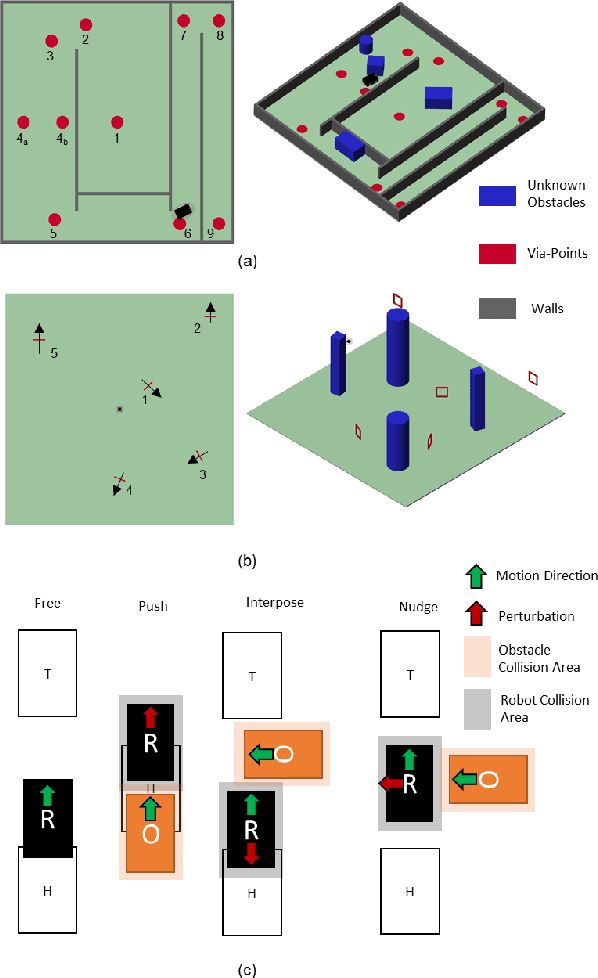

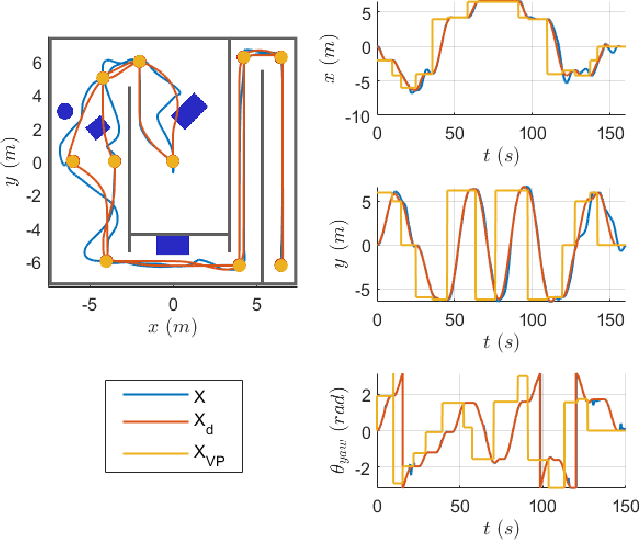

Abstract: Path planning and collision avoidance are challenging in complex and highly variable environments due to the limited horizon of events. In the literature, there are multiple model- and learning-based approaches that require significant computational resources to be effectively deployed and they may have limited generality. We propose a planning algorithm based on a globally stable passive controller that can plan smooth trajectories using limited computational resources in challenging environmental conditions. The architecture combines the recently proposed fractal impedance controller with elastic bands and regions of finite time invariance. As the method is based on an impedance controller, it can also be used directly as a force/torque controller. We validated our method in simulation to analyse the ability of interactive navigation in challenging concave domains via the issuing of via-points, and its robustness to low bandwidth feedback. A swarm simulation using 11 agents validated the scalability of the proposed method. We have performed hardware experiments on a holonomic wheeled platform validating smoothness and robustness of interaction with dynamic agents (i.e., humans and robots). The computational complexity of the proposed local planner enables deployment with low-power micro-controllers, lowering the energy consumption compared to other methods that rely upon numeric optimisation.
