Christian Rauch

Beyond Master and Apprentice: Grounding Foundation Models for Symbiotic Interactive Learning in a Shared Latent Space

Nov 07, 2025

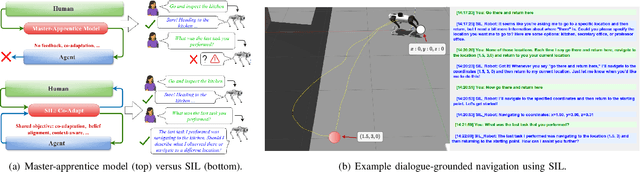

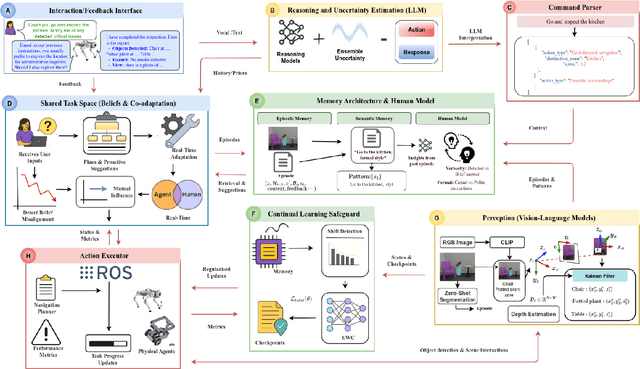

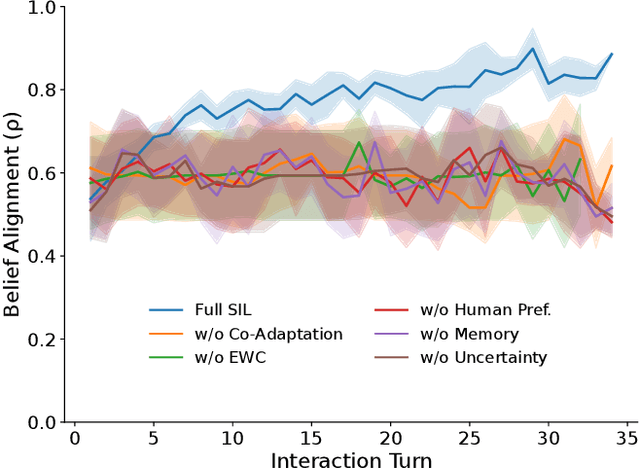

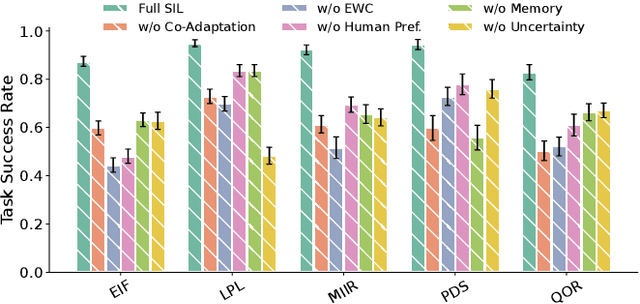

Abstract: Today's autonomous agents can understand free-form natural language instructions and execute long-horizon tasks in a manner akin to human-level reasoning. These capabilities are mostly driven by large-scale pre-trained foundation models (FMs). However, the approaches with which these models are grounded for human-robot interaction (HRI) perpetuate a master-apprentice model, where the apprentice (embodied agent) passively receives and executes the master's (human's) commands without reciprocal learning. This reactive interaction approach does not capture the co-adaptive dynamics inherent in everyday multi-turn human-human interactions. To address this, we propose a Symbiotic Interactive Learning (SIL) approach that enables both the master and the apprentice to co-adapt through mutual, bidirectional interactions. We formalise SIL as a co-adaptation process within a shared latent task space, where the agent and human maintain joint belief states that evolve based on interaction history. This enables the agent to move beyond reactive execution to proactive clarification, adaptive suggestions, and shared plan refinement. To realise these novel behaviours, we leverage pre-trained FMs for spatial perception and reasoning, alongside a lightweight latent encoder that grounds the models' outputs into task-specific representations. Furthermore, to ensure stability as the tasks evolve, we augment SIL with a memory architecture that prevents the forgetting of learned task-space representations. We validate SIL on both simulated and real-world embodied tasks, including instruction following, information retrieval, query-oriented reasoning, and interactive dialogues. Demos and resources are public at: https://linusnep.github.io/SIL/.

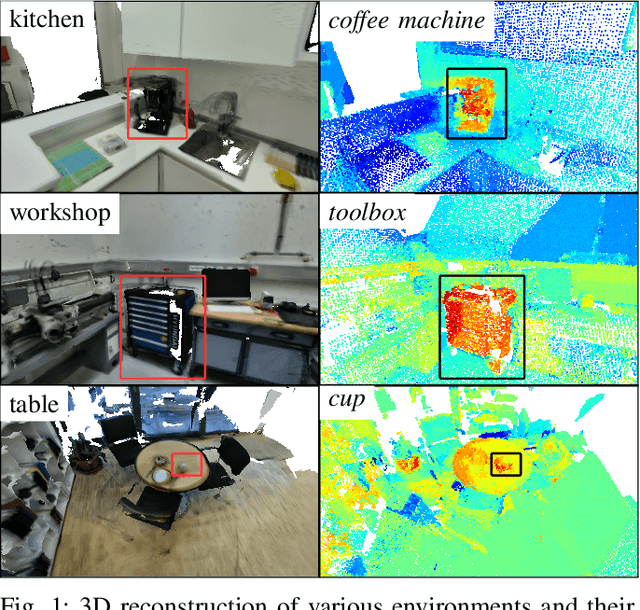

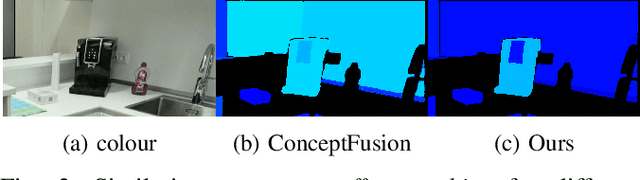

Real-Time 3D Vision-Language Embedding Mapping

Aug 08, 2025

Abstract: A metric-accurate semantic 3D representation is essential for many robotic tasks. This work proposes a simple, yet powerful, way to integrate the 2D embeddings of a Vision-Language Model into a metric-accurate 3D representation in real time. We combine a local embedding masking strategy, for a more distinct embedding distribution, with a confidence-weighted 3D integration for more reliable 3D embeddings. The resulting metric-accurate embedding representation is task-agnostic and can represent semantic concepts at a global multi-room level as well as at a local object level. This enables a variety of interactive robotic applications that require the localisation of objects-of-interest via natural language. We evaluate our approach on a variety of real-world sequences and demonstrate that these strategies achieve a more accurate object-of-interest localisation while improving the runtime performance in order to meet our real-time constraints. We further demonstrate the versatility of our approach in a variety of interactive handheld, mobile robotics and manipulation tasks, requiring only raw image data.
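The confidence-weighted integration mentioned in the abstract can be illustrated with a toy running weighted mean per voxel. This is only a sketch under stated assumptions, not the paper's implementation: the `EmbeddingMap` class, the voxel grid, the `voxel_size` default, and the cosine-similarity lookup are all hypothetical choices made for illustration, and the two-dimensional embeddings stand in for real Vision-Language Model features.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence, Tuple

Voxel = Tuple[int, int, int]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0.0 and nb > 0.0 else 0.0


@dataclass
class EmbeddingMap:
    """Hypothetical voxel map holding a confidence-weighted mean embedding."""
    voxel_size: float = 0.1  # metres; an assumed value, not from the paper
    embeddings: Dict[Voxel, List[float]] = field(default_factory=dict)
    weights: Dict[Voxel, float] = field(default_factory=dict)

    def key(self, point: Sequence[float]) -> Voxel:
        # Map a metric 3D point to its integer voxel index.
        x, y, z = (int(math.floor(c / self.voxel_size)) for c in point)
        return (x, y, z)

    def integrate(self, point: Sequence[float],
                  embedding: Sequence[float], confidence: float) -> None:
        # Running weighted mean: observations with higher confidence
        # pull the stored embedding more strongly towards themselves.
        if confidence <= 0.0:
            return
        k = self.key(point)
        w_old = self.weights.get(k, 0.0)
        old = self.embeddings.get(k, [0.0] * len(embedding))
        w_new = w_old + confidence
        self.embeddings[k] = [(w_old * o + confidence * e) / w_new
                              for o, e in zip(old, embedding)]
        self.weights[k] = w_new

    def locate(self, query: Sequence[float]) -> Optional[Voxel]:
        # Return the voxel whose embedding best matches the query embedding.
        if not self.embeddings:
            return None
        return max(self.embeddings,
                   key=lambda k: cosine(self.embeddings[k], query))


# Two observations fall into the same voxel, a third into another one.
emb_map = EmbeddingMap(voxel_size=0.1)
emb_map.integrate((0.05, 0.05, 0.05), [1.0, 0.0], confidence=3.0)
emb_map.integrate((0.05, 0.05, 0.05), [0.0, 1.0], confidence=1.0)
emb_map.integrate((1.05, 0.05, 0.05), [0.0, 1.0], confidence=2.0)
```

In this toy example the first voxel ends up closer to the high-confidence observation, so a query embedding aligned with it is localised there. A real system would obtain the query embedding from the text encoder of the Vision-Language Model.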

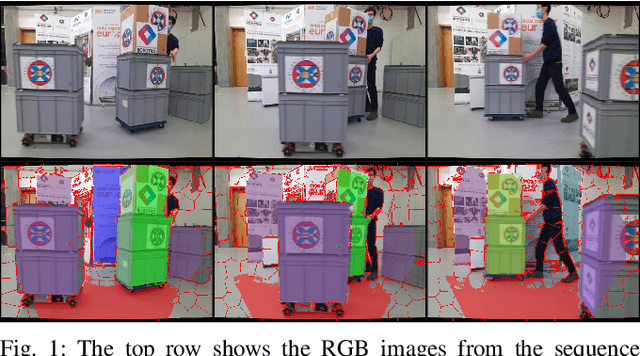

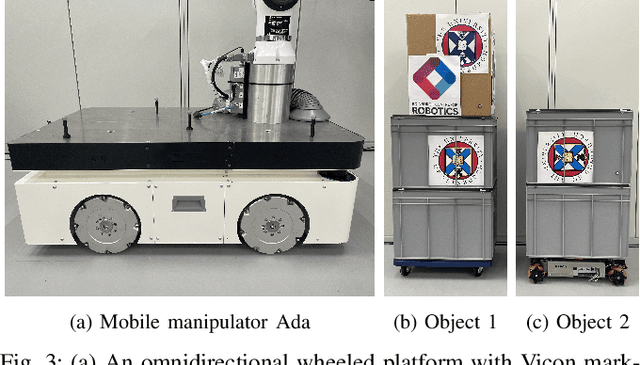

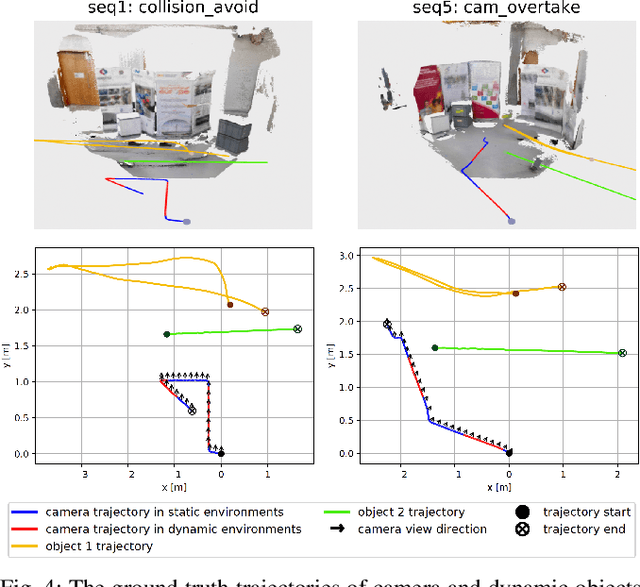

RGB-D-Inertial SLAM in Indoor Dynamic Environments with Long-term Large Occlusion

Mar 23, 2023

Abstract: This work presents a novel RGB-D-inertial dynamic SLAM method that can enable accurate localisation when the majority of the camera view is occluded by multiple dynamic objects over a long period of time. Most dynamic SLAM approaches either remove dynamic objects as outliers when they account for a minor proportion of the visual input, or detect dynamic objects using semantic segmentation before camera tracking. Therefore, dynamic objects that cause large occlusions are difficult to detect without prior information. The remaining visual information from the static background is also not enough to support localisation when a large occlusion lasts for a long period. To overcome these problems, our framework presents a robust visual-inertial bundle adjustment that simultaneously tracks the camera, estimates a cluster-wise dense segmentation of dynamic objects, and maintains a static sparse map by combining dense and sparse features. The experimental results demonstrate that our method achieves promising localisation and object segmentation performance compared to other state-of-the-art methods in the scenario of long-term large occlusion.

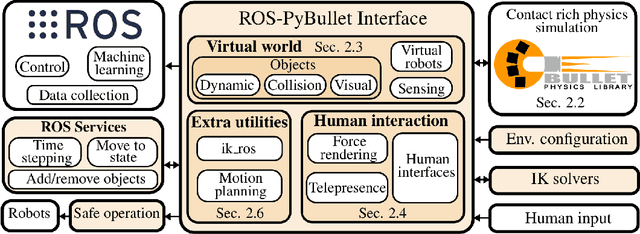

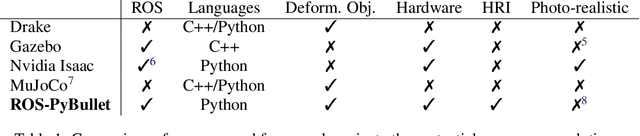

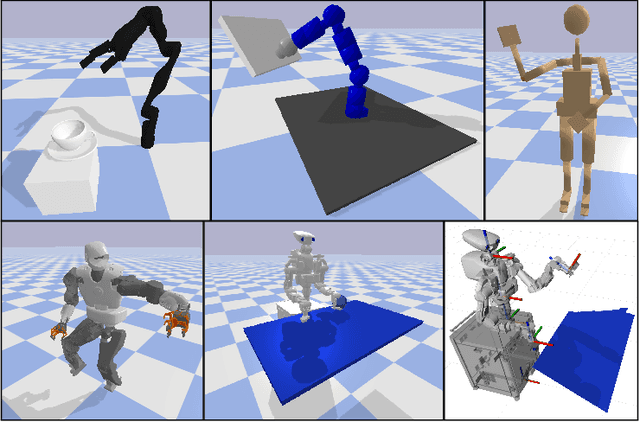

ROS-PyBullet Interface: A Framework for Reliable Contact Simulation and Human-Robot Interaction

Oct 13, 2022

Abstract: Reliable contact simulation plays a key role in the development of (semi-)autonomous robots, especially when dealing with contact-rich manipulation scenarios, an active robotics research topic. Besides simulation, components such as sensing, perception, data collection, robot hardware control, human interfaces, etc. are all key enablers towards applying machine learning algorithms or model-based approaches in real-world systems. However, there is a lack of software connecting reliable contact simulation with the larger robotics ecosystem (e.g. ROS, Orocos), for a more seamless application of novel approaches from the literature to existing robotic hardware. In this paper, we present the ROS-PyBullet Interface, a framework that provides a bridge between the reliable contact/impact simulator PyBullet and the Robot Operating System (ROS). Furthermore, we provide additional utilities for facilitating Human-Robot Interaction (HRI) in the simulated environment. We also present several use-cases that highlight the capabilities and usefulness of our framework. Please check our video, source code, and examples included in the supplementary material. Our full code base is open source and can be found at https://github.com/cmower/ros_pybullet_interface.

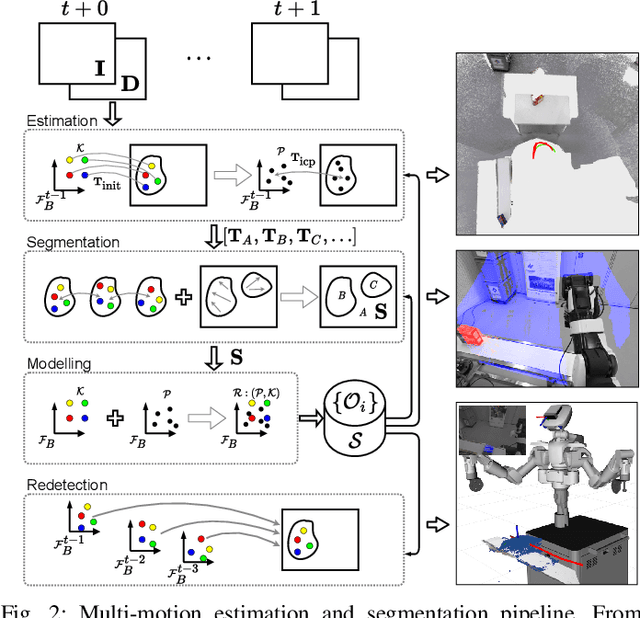

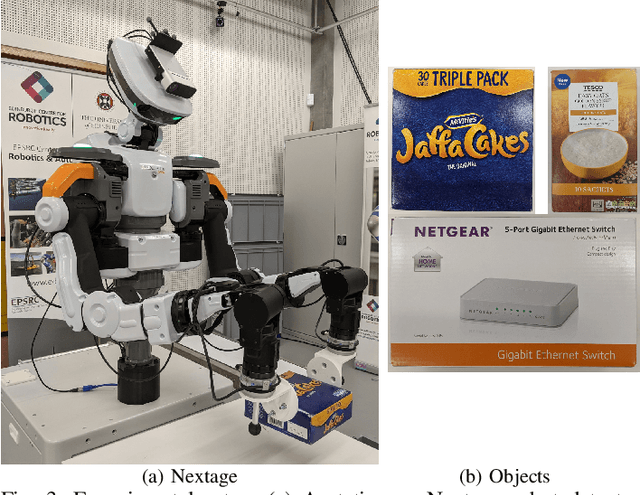

Sparse-Dense Motion Modelling and Tracking for Manipulation without Prior Object Models

Apr 25, 2022

Abstract: This work presents an approach for modelling and tracking previously unseen objects for robotic grasping tasks. Using the motion of objects in a scene, our approach segments rigid entities from the scene and continuously tracks them to create a dense and sparse model of the object and the environment. While the dense tracking enables interaction with these models, the sparse tracking makes this robust against fast movements and allows already modelled objects to be redetected. The evaluation on a dual-arm grasping task demonstrates that our approach 1) enables a robot to detect new objects online without a prior model and to grasp these objects using only a simple parameterisable geometric representation, and 2) is much more robust than state-of-the-art methods.

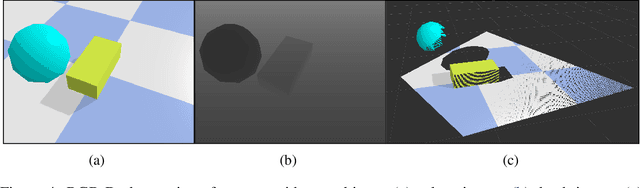

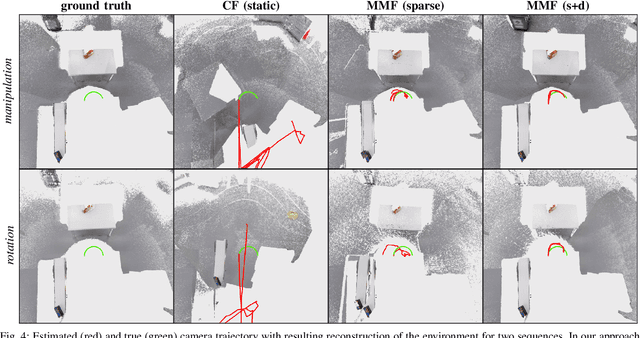

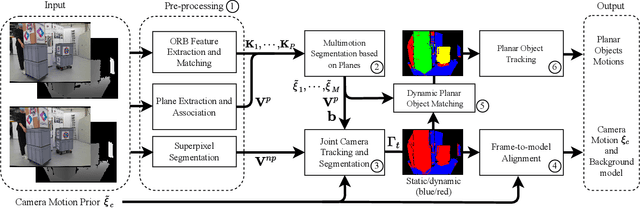

RGB-D SLAM in Indoor Planar Environments with Multiple Large Dynamic Objects

Mar 06, 2022

Abstract: This work presents a novel dense RGB-D SLAM approach for dynamic planar environments that enables simultaneous multi-object tracking, camera localisation and background reconstruction. Previous dynamic SLAM methods either rely on semantic segmentation to directly detect dynamic objects, or assume that dynamic objects occupy a smaller proportion of the camera view than the static background and can, therefore, be removed as outliers. Our approach, however, enables dense SLAM when the camera view is largely occluded by multiple dynamic objects, with the aid of a camera motion prior. The dynamic planar objects are separated by their different rigid motions and tracked independently. The remaining dynamic non-planar areas are removed as outliers and not mapped into the background. The evaluation demonstrates that our approach outperforms the state-of-the-art methods in terms of localisation, mapping, dynamic segmentation and object tracking. We also demonstrate its robustness to large drift in the camera motion prior.

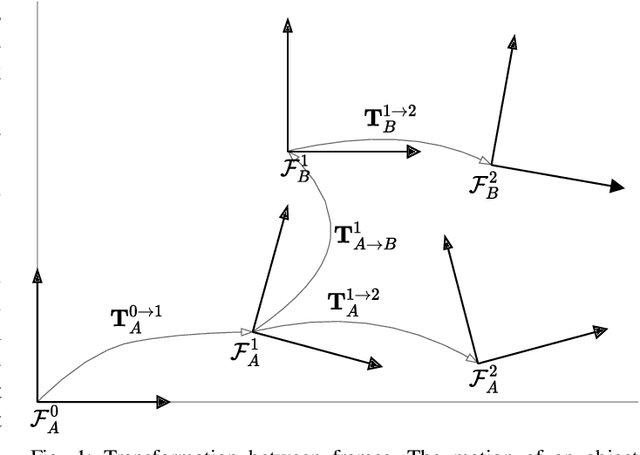

RigidFusion: Robot Localisation and Mapping in Environments with Large Dynamic Rigid Objects

Oct 21, 2020

Abstract: This work presents a novel approach to simultaneously track a robot with respect to multiple rigid entities, including the environment and additional dynamic objects in a scene. Previous approaches treat dynamic parts of a scene as outliers and are thus limited to a small amount of dynamics, or rely on prior information for all objects in the scene to enable robust camera tracking. Here, we propose to formulate localisation and object tracking as the same underlying problem and simultaneously track multiple rigid transformations, therefore enabling simultaneous localisation and object tracking for mobile manipulators in dynamic scenes. We evaluate our approach on multiple challenging dynamic scenes with large occlusions. The evaluation demonstrates that our approach achieves better scene segmentation and camera pose tracking in highly dynamic scenes without requiring knowledge of the dynamic objects' appearance.
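The idea of explaining a scene with several rigid transformations can be illustrated with a toy segmentation step: each tracked point is assigned to whichever candidate rigid motion best predicts its new position. This is a simplified 2D sketch, not RigidFusion itself, which works on dense RGB-D data in 3D and also estimates the motions. The function names, the `(theta, tx, ty)` motion encoding, and the `max_residual` threshold are assumptions introduced for illustration, and the motions are taken as given.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Motion = Tuple[float, float, float]  # (rotation angle, x shift, y shift)


def apply_rigid_2d(theta: float, tx: float, ty: float, p: Point) -> Point:
    """Rotate point p by theta (radians) and then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def segment_by_motion(prev_pts: Sequence[Point],
                      curr_pts: Sequence[Point],
                      motions: Sequence[Motion],
                      max_residual: float = 0.05) -> List[int]:
    """Label each point with the index of the best-fitting rigid motion.

    Points that no motion explains within max_residual get the label -1.
    """
    labels = []
    for p, q in zip(prev_pts, curr_pts):
        # Distance between the predicted and the observed new position.
        residuals = [math.dist(apply_rigid_2d(*m, p), q) for m in motions]
        best = min(range(len(motions)), key=residuals.__getitem__)
        labels.append(best if residuals[best] <= max_residual else -1)
    return labels


# Motion 0 is a static background, motion 1 an object shifted by 0.5 in x.
motions = [(0.0, 0.0, 0.0), (0.0, 0.5, 0.0)]
prev_pts = [(0.0, 0.0), (1.0, 0.0), (2.0, 2.0)]
curr_pts = [(0.0, 0.0), (1.5, 0.0), (5.0, 5.0)]
labels = segment_by_motion(prev_pts, curr_pts, motions)
```

Here the first point is explained by the static background, the second by the moving object, and the third by neither, so it is treated as an outlier.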
