Ugo Tanielian

3DGEN: A GAN-based approach for generating novel 3D models from image data

Dec 13, 2023

Abstract: Recent advances in text and image synthesis show great promise for the future of generative models in creative fields. However, a less explored area is 3D model generation, which has many potential applications in game design, video production, and physical product design. In this paper, we present 3DGEN, a model that leverages recent work on both Neural Radiance Fields for object reconstruction and GAN-based image generation. We show that the proposed architecture can generate plausible meshes for objects of the same category as the training images, and we compare the resulting meshes with state-of-the-art baselines, observing visible uplifts in generation quality.

AdBooster: Personalized Ad Creative Generation using Stable Diffusion Outpainting

Sep 08, 2023

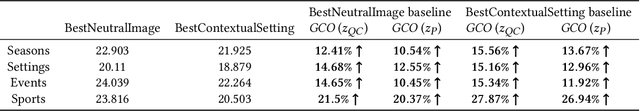

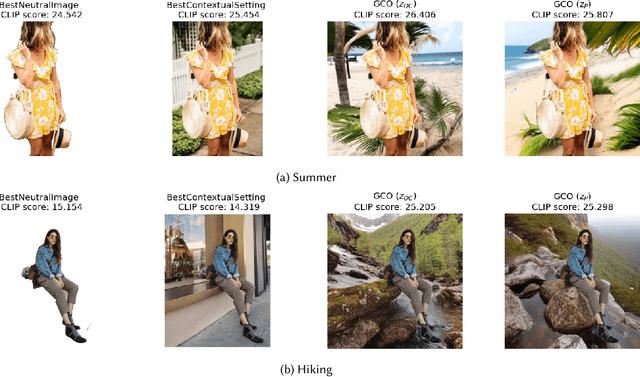

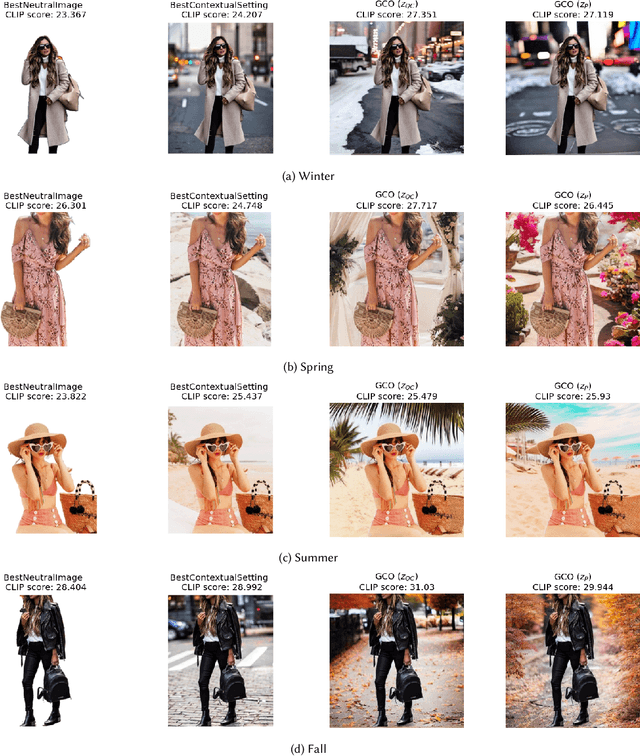

Abstract: In digital advertising, the selection of the optimal item (recommendation) and its best creative presentation (creative optimization) have traditionally been considered separate disciplines. However, both contribute significantly to user satisfaction, underpinning our assumption that user satisfaction relies on both an item's relevance and its presentation, particularly in the case of visual creatives. In response, we introduce the task of *Generative Creative Optimization (GCO)*, which proposes the use of generative models for creative generation that incorporate user interests, and *AdBooster*, a model for personalized ad creatives based on the Stable Diffusion outpainting architecture. This model uniquely incorporates user interests both during fine-tuning and at generation time. To further improve AdBooster's performance, we also introduce an automated data augmentation pipeline. Through our experiments on simulated data, we validate AdBooster's effectiveness in generating more relevant creatives than default product images, showing its potential to enhance user engagement.

Optimal precision for GANs

Jul 21, 2022

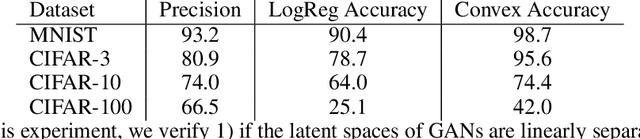

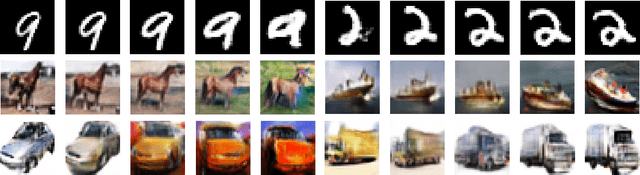

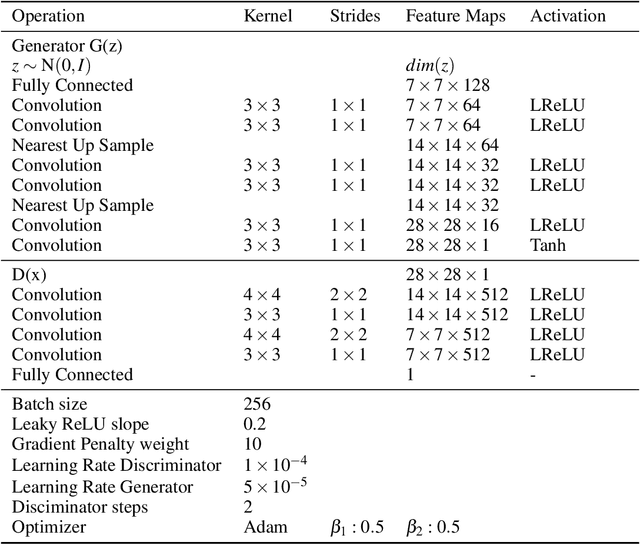

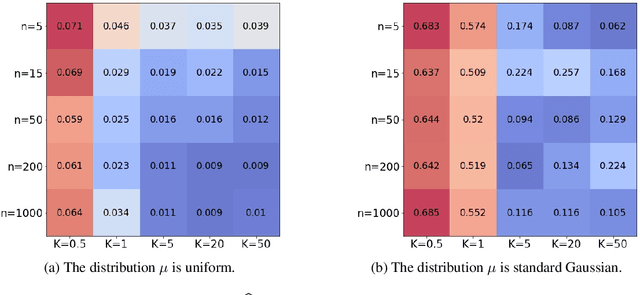

Abstract: When learning disconnected distributions, generative adversarial networks (GANs) are known to face model misspecification. Indeed, a continuous mapping from a unimodal latent distribution to a disconnected one is impossible, so GANs necessarily generate samples outside of the support of the target distribution. This raises a fundamental question: what is the latent space partition that minimizes the measure of these areas? Building on a recent result in geometric measure theory, we prove that an optimal GAN must structure its latent space as a 'simplicial cluster' - a Voronoi partition where cells are convex cones - when the dimension of the latent space is larger than the number of modes. In this configuration, each Voronoi cell maps to a distinct mode of the data. We derive both an upper and a lower bound on the optimal precision of GANs learning disconnected manifolds. Interestingly, these two bounds have the same order of decrease: $\sqrt{\log m}$, $m$ being the number of modes. Finally, we perform several experiments to exhibit the geometry of the latent space and experimentally show that GANs have a geometry with similar properties to the theoretical one.
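To make the idea of a Voronoi partition whose cells are convex cones concrete, here is a minimal sketch. It assumes the cell centres are the vertices of a regular simplex centred at the origin, embedded in a latent space of larger dimension; the names `simplex_directions` and `assign_cells` are hypothetical, and this is only one simple way to build such a partition, not necessarily the construction used in the paper.

```python
import numpy as np

def simplex_directions(m, d):
    """Unit vectors in R^d pointing to the m vertices of a regular simplex
    centred at the origin (assumes d >= m; extra coordinates are zero)."""
    centred = np.eye(m) - 1.0 / m              # rows: e_i minus the barycentre
    centred /= np.linalg.norm(centred, axis=1, keepdims=True)
    return np.pad(centred, ((0, 0), (0, d - m)))

def assign_cells(z, directions):
    """Voronoi cell index for each latent point (one point per row of z).
    For centres of equal norm, the nearest centre is the one with the
    largest inner product, so every cell is a convex cone."""
    return np.argmax(z @ directions.T, axis=1)

rng = np.random.default_rng(0)
m, d = 4, 6                                     # 4 modes, 6-dimensional latent space
directions = simplex_directions(m, d)
z = rng.standard_normal((1000, d))
cells = assign_cells(z, directions)
```

Because the assignment depends only on the direction of `z`, rescaling a latent point by any positive factor leaves its cell unchanged, which is the cone property mentioned in the abstract.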

Lessons from the AdKDD'21 Privacy-Preserving ML Challenge

Jan 31, 2022

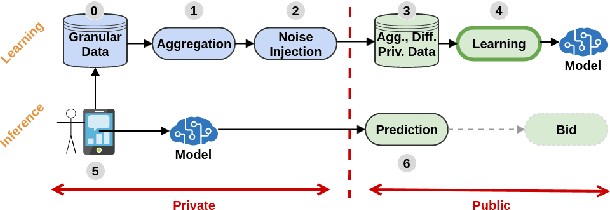

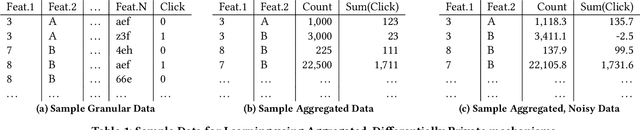

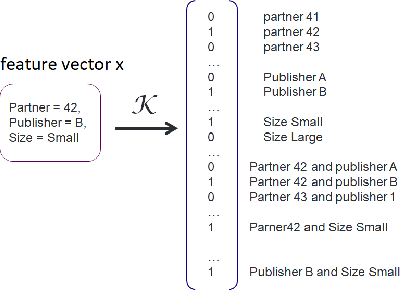

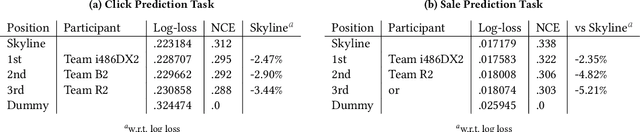

Abstract: Designing data sharing mechanisms that provide both performance and strong privacy guarantees is a hot topic for the online advertising industry. In particular, a prominent proposal discussed under the Improving Web Advertising Business Group at W3C only allows sharing advertising signals through aggregated, differentially private reports of past displays. To study this proposal extensively, an open Privacy-Preserving Machine Learning Challenge took place at AdKDD'21, a premier workshop on Advertising Science, with data provided by advertising company Criteo. In this paper, we describe the challenge tasks and the structure of the available datasets, report the challenge results, and enable its full reproducibility. A key finding is that learning models on large, aggregated data in the presence of a small set of unaggregated data points can be surprisingly efficient and cheap. We also run additional experiments to observe the sensitivity of winning methods to different parameters such as privacy budget or quantity of available privileged side information. We conclude that the industry needs either alternate designs for private data sharing or a breakthrough in learning with aggregated data only to keep ad relevance at a reasonable level.

Optimal 1-Wasserstein Distance for WGANs

Jan 08, 2022

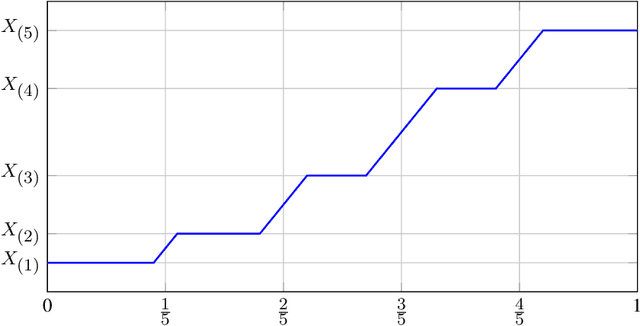

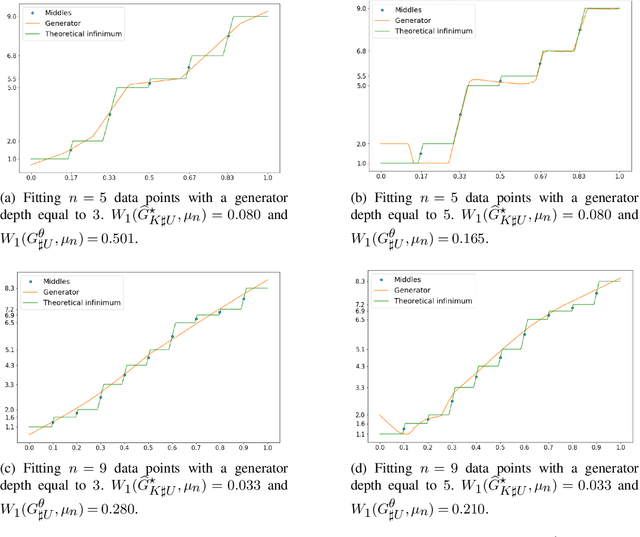

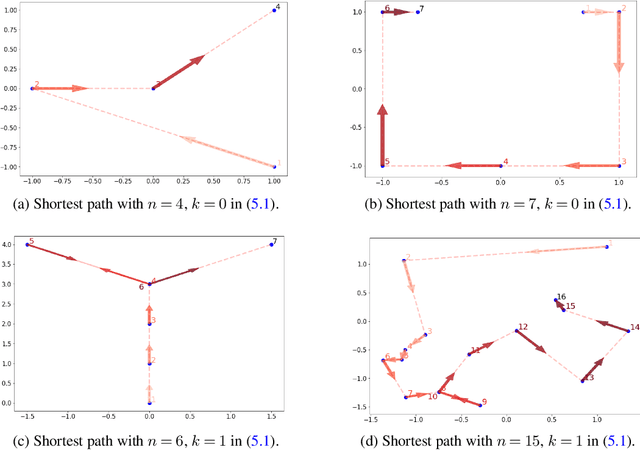

Abstract: The mathematical forces at work behind Generative Adversarial Networks raise challenging theoretical issues. Motivated by the important question of characterizing the geometrical properties of the generated distributions, we provide a thorough analysis of Wasserstein GANs (WGANs) in both the finite sample and asymptotic regimes. We study the specific case where the latent space is univariate and derive results valid regardless of the dimension of the output space. We show in particular that for a fixed sample size, the optimal WGANs are closely linked with connected paths minimizing the sum of the squared Euclidean distances between the sample points. We also highlight the fact that WGANs are able to approach (for the 1-Wasserstein distance) the target distribution as the sample size tends to infinity, at a given convergence rate and provided the family of generative Lipschitz functions grows appropriately. We derive in passing new results on optimal transport theory in the semi-discrete setting.
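As a small illustration of the 1-Wasserstein distance referred to above, the sketch below computes it between two empirical samples on the real line. It assumes one-dimensional data and equal sample sizes, in which case the optimal coupling simply matches sorted values; the function name `wasserstein1_1d` is hypothetical, and the paper itself treats output spaces of arbitrary dimension.

```python
import numpy as np

def wasserstein1_1d(x, y):
    """1-Wasserstein distance between two equal-size empirical samples on
    the real line: the mean absolute gap between sorted values."""
    x = np.sort(np.asarray(x, dtype=float))
    y = np.sort(np.asarray(y, dtype=float))
    if x.shape != y.shape:
        raise ValueError("this sketch assumes equal sample sizes")
    return float(np.mean(np.abs(x - y)))

shifted = wasserstein1_1d([0.0, 1.0], [1.0, 2.0])        # every point moves by 1
same = wasserstein1_1d([3.0, 1.0, 2.0], [1.0, 2.0, 3.0])  # same sample, reordered
```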

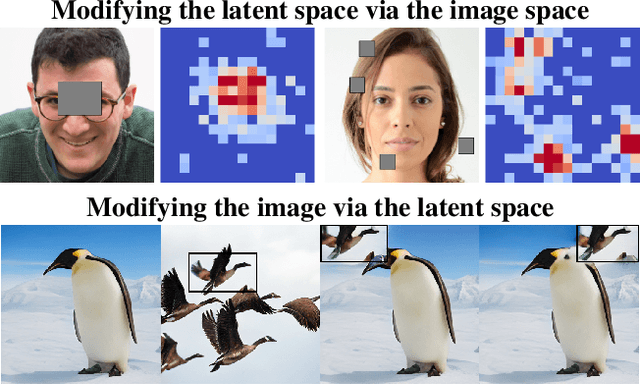

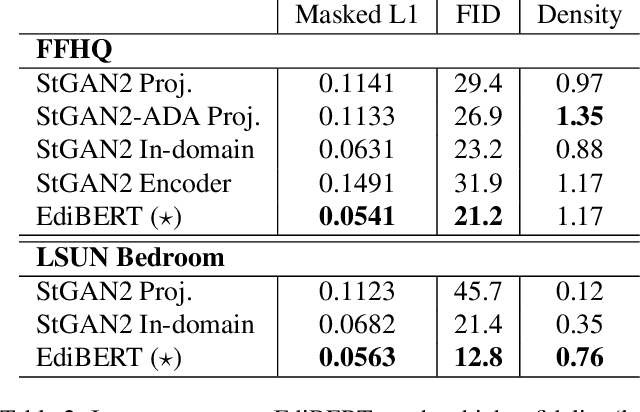

EdiBERT, a generative model for image editing

Nov 30, 2021

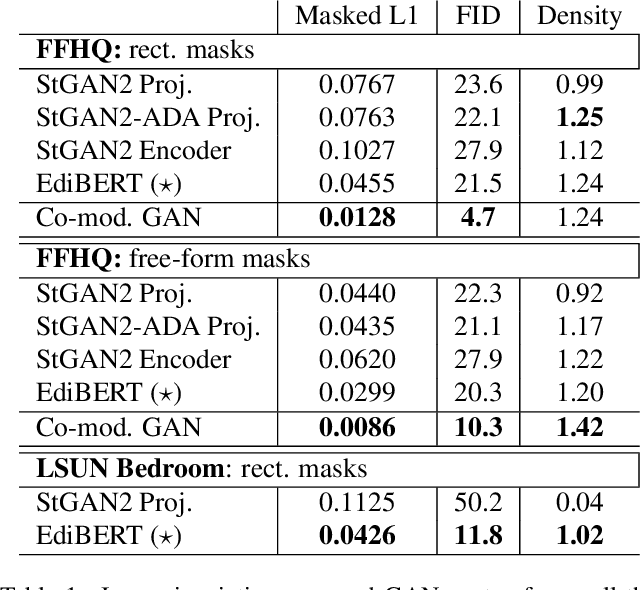

Abstract: Advances in computer vision are pushing the limits of image manipulation, with generative models sampling detailed images on various tasks. However, a specialized model is often developed and trained for each specific task, even though many image editing tasks share similarities. In denoising, inpainting, or image compositing, one always aims at generating a realistic image from a low-quality one. In this paper, we aim at making a step towards a unified approach for image editing. To do so, we propose EdiBERT, a bidirectional transformer trained in the discrete latent space built by a vector-quantized auto-encoder. We argue that such a bidirectional model is suited for image manipulation since any patch can be re-sampled conditionally on the whole image. Using this unique and straightforward training objective, we show that the resulting model matches state-of-the-art performance on a wide variety of tasks: image denoising, image completion, and image composition.

Latent reweighting, an almost free improvement for GANs

Oct 19, 2021

Abstract: Standard formulations of GANs, where a continuous function deforms a connected latent space, have been shown to be misspecified when fitting different classes of images. In particular, the generator will necessarily sample some low-quality images in between the classes. Rather than modifying the architecture, a line of work aims at improving the sampling quality from pre-trained generators at the expense of increased computational cost. Building on this, we introduce an additional network to predict latent importance weights and two associated sampling methods to avoid the poorest samples. This idea has several advantages: 1) it provides a way to inject disconnectedness into any GAN architecture, 2) since the rejection happens in the latent space, it avoids going through both the generator and the discriminator, saving computation time, 3) this importance-weight formulation provides a principled way to reduce the Wasserstein distance to the target distribution. We demonstrate the effectiveness of our method on several datasets, both synthetic and high-dimensional.
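Rejection in the latent space can be sketched as follows. This is a generic rejection sampler under stated assumptions, not the paper's exact procedure: `toy_weight` is a hypothetical stand-in for the learned importance-weight network, the latent prior is assumed to be a standard Gaussian, and `w_max` is assumed to be a known upper bound on the weights.

```python
import numpy as np

def latent_rejection_sample(weight_fn, n, dim, w_max, rng):
    """Draw n latent vectors, accepting each candidate z with probability
    weight_fn(z) / w_max. Loops forever if the weights are zero everywhere."""
    accepted, count = [], 0
    while count < n:
        z = rng.standard_normal((n, dim))            # candidates from the prior
        keep = rng.uniform(size=n) * w_max < weight_fn(z)
        accepted.append(z[keep])
        count += int(keep.sum())
    return np.concatenate(accepted)[:n]

def toy_weight(z):
    """Hypothetical weights: zero in a band around z[0] = 0, standing in
    for a low-quality region between two modes."""
    return (np.abs(z[:, 0]) > 0.5).astype(float)

rng = np.random.default_rng(0)
samples = latent_rejection_sample(toy_weight, n=500, dim=2, w_max=1.0, rng=rng)
```

Only the accepted latent vectors would then be passed through the generator, which is where the computational saving described in the abstract comes from.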

What Users Want? WARHOL: A Generative Model for Recommendation

Sep 02, 2021

Abstract: Current recommendation approaches help online merchants predict, for each visiting user, which subset of their existing products is the most relevant. However, besides being interested in matching users with existing products, merchants are also interested in understanding their users' underlying preferences. This could indeed help them produce or acquire better matching products in the future. We argue that existing recommendation models cannot directly be used to predict the optimal combination of features that will make new products better serve the needs of the target audience. To tackle this, we turn to generative models, which allow us to explicitly learn distributions over product feature combinations in both text and visual space. We develop WARHOL, a product generation and recommendation architecture that takes as input past user shopping activity and generates relevant textual and visual descriptions of novel products. We show that WARHOL can approach the performance of state-of-the-art recommendation models, while being able to generate entirely new products that are relevant to the given user profiles.

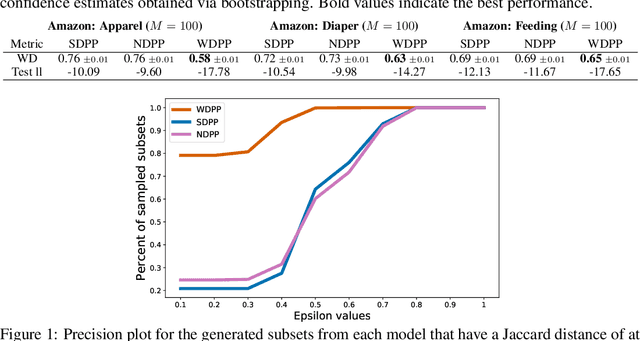

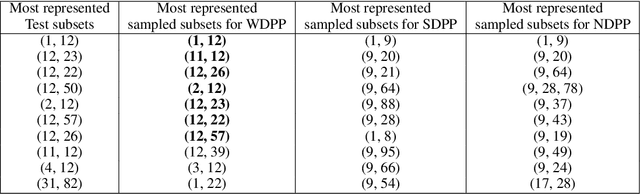

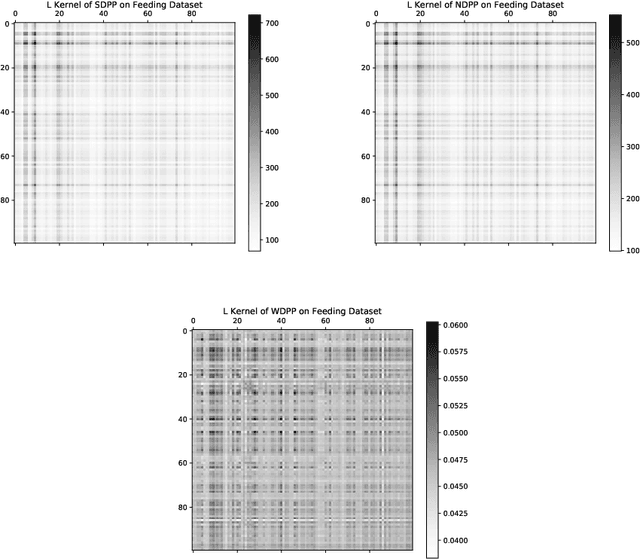

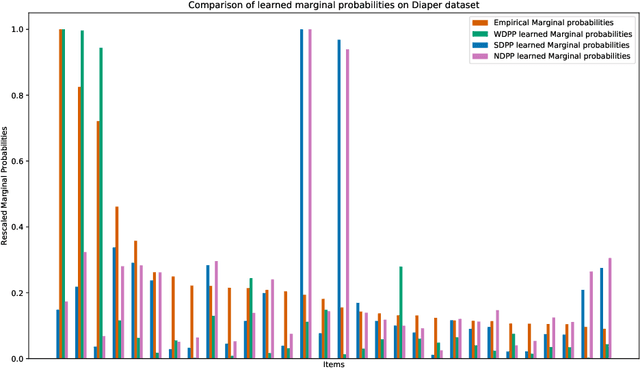

Wasserstein Learning of Determinantal Point Processes

Nov 19, 2020

Abstract: Determinantal point processes (DPPs) have received significant attention as an elegant probabilistic model for discrete subset selection. Most prior work on DPP learning focuses on maximum likelihood estimation (MLE). While efficient and scalable, MLE approaches do not leverage any subset similarity information and may fail to recover the true generative distribution of discrete data. In this work, by deriving a differentiable relaxation of a DPP sampling algorithm, we present a novel approach for learning DPPs that minimizes the Wasserstein distance between the model and data composed of observed subsets. Through an evaluation on a real-world dataset, we show that our Wasserstein learning approach provides significantly improved predictive performance on a generative task compared to DPPs trained using MLE.
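For readers unfamiliar with DPPs, the sketch below evaluates subset probabilities under the standard L-ensemble definition, P(Y) = det(L_Y) / det(L + I). It illustrates the model only, not the Wasserstein learning procedure of the paper; the kernel built from `features` is a made-up toy example and `dpp_subset_probability` is a hypothetical name.

```python
import itertools
import numpy as np

def dpp_subset_probability(L, subset):
    """Probability of `subset` under an L-ensemble DPP with kernel L."""
    idx = list(subset)
    # The determinant of the empty principal minor is 1 by convention.
    minor = np.linalg.det(L[np.ix_(idx, idx)]) if idx else 1.0
    return float(minor / np.linalg.det(L + np.eye(L.shape[0])))

# Toy kernel: items 0 and 1 are similar, items 0 and 2 are orthogonal.
features = np.array([[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]])
L = features @ features.T

all_subsets = [s for r in range(4) for s in itertools.combinations(range(3), r)]
total = sum(dpp_subset_probability(L, s) for s in all_subsets)
p_similar = dpp_subset_probability(L, (0, 1))
p_diverse = dpp_subset_probability(L, (0, 2))
```

The probabilities over all subsets sum to one, and the pair of dissimilar items is more likely than the pair of similar ones, which is the diversity-favouring behaviour that makes DPPs attractive for subset selection.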

Learning disconnected manifolds: a no GANs land

Jun 22, 2020

Abstract: Typical architectures of Generative Adversarial Networks make use of a unimodal latent distribution transformed by a continuous generator. Consequently, the modeled distribution always has connected support, which is cumbersome when learning a disconnected set of manifolds. We formalize this problem by establishing a no-free-lunch theorem for disconnected manifold learning, stating an upper bound on the precision of the targeted distribution. This is done by building on the necessary existence of a low-quality region where the generator continuously samples data between two disconnected modes. Finally, we derive a rejection sampling method based on the norm of the generator's Jacobian and show its efficiency on several generators including BigGAN.
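A rough sketch of rejection based on the Jacobian norm is given below. Several pieces are assumptions made for illustration: `toy_generator` is a hypothetical one-dimensional generator with two modes near -1 and +1, the Jacobian is approximated by central finite differences rather than automatic differentiation, and the quantile-based threshold `keep_fraction` is a choice made here, not a value from the paper.

```python
import numpy as np

def toy_generator(z):
    """Hypothetical generator: steep around z = 0, flat near its two modes."""
    return np.tanh(8.0 * z)

def jacobian_norm(generator, z, eps=1e-4):
    """Frobenius norm of the generator's Jacobian at each row of z,
    estimated with central finite differences."""
    n, d = z.shape
    squared = np.zeros(n)
    for j in range(d):
        step = np.zeros(d)
        step[j] = eps
        diff = (generator(z + step) - generator(z - step)) / (2.0 * eps)
        squared += np.sum(diff.reshape(n, -1) ** 2, axis=1)
    return np.sqrt(squared)

def reject_high_jacobian(generator, z, keep_fraction=0.8):
    """Keep only latent points whose Jacobian norm is below a quantile."""
    norms = jacobian_norm(generator, z)
    return z[norms <= np.quantile(norms, keep_fraction)]

rng = np.random.default_rng(0)
z = rng.standard_normal((1000, 1))
kept = reject_high_jacobian(toy_generator, z)
```

In this toy setting the discarded latent points are those close to zero, where the generator moves quickly from one mode to the other and therefore produces outputs between the modes.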
