Subhasis Chaudhuri

Revised Regularization for Efficient Continual Learning through Correlation-Based Parameter Update in Bayesian Neural Networks

Nov 21, 2024

Abstract:We propose a Bayesian neural network-based continual learning algorithm using Variational Inference, aiming to overcome several drawbacks of existing methods. Specifically, in continual learning scenarios, storing network parameters at each step to retain knowledge poses challenges. This is compounded by the crucial need to mitigate catastrophic forgetting, particularly given the limited access to past datasets, which complicates maintaining correspondence between network parameters and datasets across all sessions. Current methods using Variational Inference with KL divergence risk catastrophic forgetting during uncertain node updates and coupled disruptions in certain nodes. To address these challenges, we propose the following strategies. To reduce the storage of the dense layer parameters, we propose a parameter distribution learning method that significantly reduces the storage requirements. In the continual learning framework employing variational inference, our study introduces a regularization term that specifically targets the dynamics and population of the mean and variance of the parameters. This term aims to retain the benefits of KL divergence while addressing related challenges. To ensure proper correspondence between network parameters and the data, our method introduces an importance-weighted Evidence Lower Bound term to capture data and parameter correlations. This enables storage of common and distinctive parameter hyperspace bases. The proposed method partitions the parameter space into common and distinctive subspaces, with conditions for effective backward and forward knowledge transfer, elucidating the network-parameter dataset correspondence. The experimental results demonstrate the effectiveness of our method across diverse datasets and various combinations of sequential datasets, yielding superior performance compared to existing approaches.
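For context, the baseline that this abstract refers to (variational inference with a KL-divergence regularizer) is commonly written as below. This is a sketch of the standard mean-field Gaussian form only, not the revised regularizer the paper proposes; the symbols $q_t$, $\mu_{t,i}$, $\sigma_{t,i}$ and $\mathcal{D}_t$ are notation assumed here, not taken from the paper.

```latex
% Session-t objective (to be maximized): fit the new data D_t while staying
% close to the posterior q_{t-1} learned in the previous session.
\mathcal{L}_t(q_t) = \mathbb{E}_{\theta \sim q_t}\!\left[ \log p(\mathcal{D}_t \mid \theta) \right]
                   - \mathrm{KL}\!\left( q_t \,\|\, q_{t-1} \right)

% Closed form of the KL term for factorized Gaussians
% q_t = \prod_i \mathcal{N}(\mu_{t,i}, \sigma_{t,i}^2):
\mathrm{KL}\!\left( q_t \,\|\, q_{t-1} \right)
  = \sum_i \left[ \log \frac{\sigma_{t-1,i}}{\sigma_{t,i}}
  + \frac{\sigma_{t,i}^2 + (\mu_{t,i} - \mu_{t-1,i})^2}{2\,\sigma_{t-1,i}^2}
  - \frac{1}{2} \right]
```

The mean shift $(\mu_{t,i} - \mu_{t-1,i})^2$ is scaled by the previous variance, so parameters that were uncertain after the previous session (large $\sigma_{t-1,i}$) are penalized only weakly when they move.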

Efficient Curriculum based Continual Learning with Informative Subset Selection for Remote Sensing Scene Classification

Sep 03, 2023

Abstract: We tackle the problem of class incremental learning (CIL) in the realm of landcover classification from optical remote sensing (RS) images in this paper. The paradigm of CIL has recently gained much prominence given that data are generally obtained in a sequential manner for real-world phenomena. However, CIL has not yet been extensively considered in the domain of RS, even though satellites tend to discover new classes at different geographical locations over time. With this motivation, we propose a novel CIL framework inspired by the recent success of replay-memory based approaches and tackling two of their shortcomings. In order to reduce the effect of catastrophic forgetting of the old classes when a new stream arrives, we learn a curriculum of the new classes based on their similarity with the old classes. This is found to limit the degree of forgetting substantially. Next, while constructing the replay memory, instead of randomly selecting samples from the old streams, we propose a sample selection strategy which ensures the selection of highly confident samples so as to reduce the effects of noise. We observe a sharp improvement in the CIL performance with the proposed components. Experimental results on the benchmark NWPU-RESISC45, PatternNet, and EuroSAT datasets confirm that our method offers a better stability-plasticity trade-off than the literature.
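The confident-sample selection idea for the replay memory can be illustrated with a minimal sketch. The abstract does not say how confidence is measured, so this example assumes it is the classifier's predicted probability for the sample's own label; the function name `select_confident_exemplars` and its arguments are hypothetical.

```python
import numpy as np

def select_confident_exemplars(probs, labels, per_class):
    """For each class, keep the indices of the samples whose predicted
    probability for their own label is highest (assumed confidence measure)."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=int)  # class indices 0..C-1
    # Confidence of each sample = probability assigned to its true class.
    conf = probs[np.arange(len(labels)), labels]
    memory = {}
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        # Sort this class's samples by decreasing confidence.
        ranked = idx[np.argsort(-conf[idx], kind="stable")]
        memory[int(c)] = ranked[:per_class].tolist()
    return memory

probs = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8], [0.45, 0.55], [0.7, 0.3]]
labels = [0, 0, 1, 1, 0]
memory = select_confident_exemplars(probs, labels, per_class=2)
```

Here the low-confidence class-0 sample at index 1 is left out of the memory, in contrast to random selection, which could keep it.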

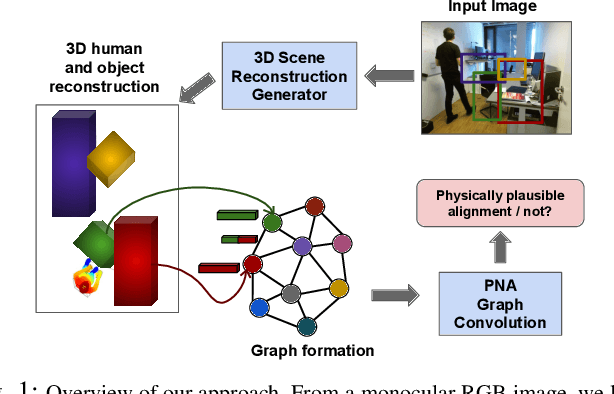

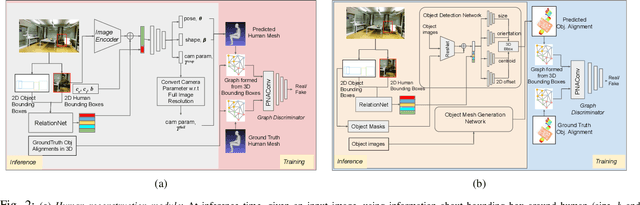

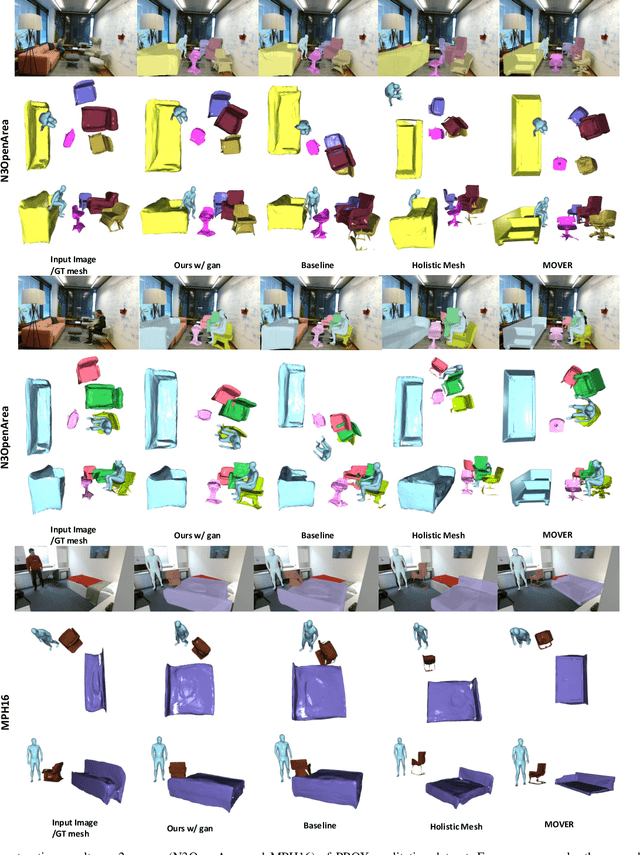

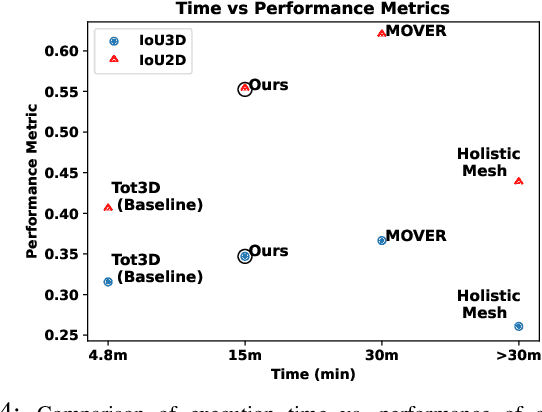

Physically Plausible 3D Human-Scene Reconstruction from Monocular RGB Image using an Adversarial Learning Approach

Jul 27, 2023

Abstract:Holistic 3D human-scene reconstruction is a crucial and emerging research area in robot perception. A key challenge in holistic 3D human-scene reconstruction is to generate a physically plausible 3D scene from a single monocular RGB image. The existing research mainly proposes optimization-based approaches for reconstructing the scene from a sequence of RGB frames with explicitly defined physical laws and constraints between different scene elements (humans and objects). However, it is hard to explicitly define and model every physical law in every scenario. This paper proposes using an implicit feature representation of the scene elements to distinguish a physically plausible alignment of humans and objects from an implausible one. We propose using a graph-based holistic representation with an encoded physical representation of the scene to analyze the human-object and object-object interactions within the scene. Using this graphical representation, we adversarially train our model to learn the feasible alignments of the scene elements from the training data itself without explicitly defining the laws and constraints between them. Unlike the existing inference-time optimization-based approaches, we use this adversarially trained model to produce a per-frame 3D reconstruction of the scene that abides by the physical laws and constraints. Our learning-based method achieves comparable 3D reconstruction quality to existing optimization-based holistic human-scene reconstruction methods and does not need inference time optimization. This makes it better suited when compared to existing methods, for potential use in robotic applications, such as robot navigation, etc.

MultiScale Probability Map guided Index Pooling with Attention-based learning for Road and Building Segmentation

Feb 18, 2023

Abstract: Efficient road and building footprint extraction from satellite images is important in many remote sensing applications. However, precise segmentation map extraction is quite challenging due to the diverse building structures camouflaged by trees, similar spectral responses between the roads and buildings, and occlusions by heterogeneous traffic over the roads. Existing convolutional neural network (CNN)-based methods focus on either enriched spatial semantics learning for the building extraction or the fine-grained road topology extraction. The profound semantic information loss due to the traditional pooling mechanisms in CNNs generates fragmented and disconnected road maps and poorly segmented boundaries for the densely spaced small buildings in complex surroundings. In this paper, we propose a novel attention-aware segmentation framework, Multi-Scale Supervised Dilated Multiple-Path Attention Network (MSSDMPA-Net), equipped with two new modules, Dynamic Attention Map Guided Index Pooling (DAMIP) and Dynamic Attention Map Guided Spatial and Channel Attention (DAMSCA), to precisely extract the building footprints and road maps from remotely sensed images. DAMIP mines the salient features by employing a novel index pooling mechanism to retain important geometric information. On the other hand, DAMSCA simultaneously extracts the multi-scale spatial and spectral features. Besides, using dilated convolution and multi-scale deep supervision in optimizing MSSDMPA-Net helps achieve stellar performance. Experimental results over multiple benchmark building and road extraction datasets establish MSSDMPA-Net as a state-of-the-art (SOTA) method for building and road extraction.

Prototypical quadruplet for few-shot class incremental learning

Nov 09, 2022

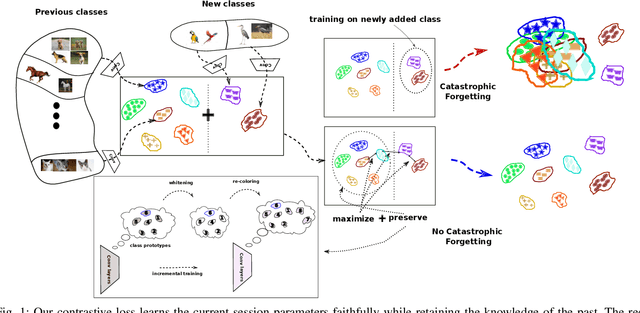

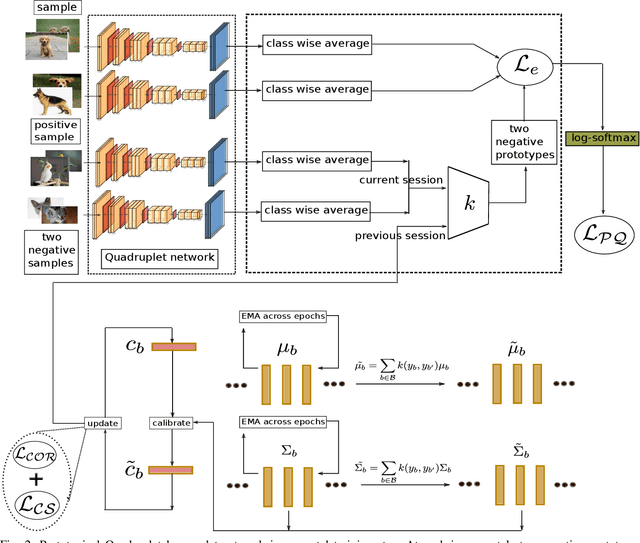

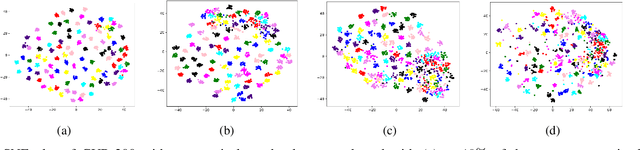

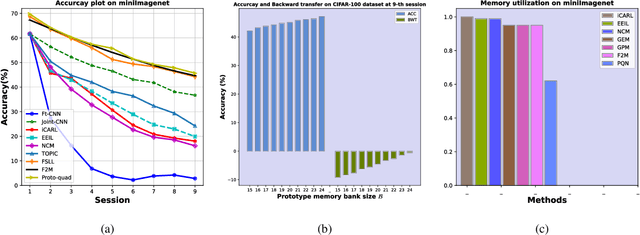

Abstract: Many modern computer vision algorithms suffer from two major bottlenecks: scarcity of data and learning new tasks incrementally. While training the model with new batches of data, the model loses its ability to classify the previous data judiciously, a phenomenon termed catastrophic forgetting. Conventional methods have tried to mitigate catastrophic forgetting of the previously learned data, while the training at the current session has been compromised. The state-of-the-art generative replay based approaches use complicated structures such as a generative adversarial network (GAN) to deal with catastrophic forgetting. Additionally, training a GAN with few samples may lead to instability. In this work, we present a novel method to deal with these two major hurdles. Our method identifies a better embedding space with an improved contrasting loss to make classification more robust. Moreover, our approach is able to retain previously acquired knowledge in the embedding space even when trained with new classes. We update previous session class prototypes while training in such a way that they are able to represent the true class mean. This is of prime importance as our classification rule is based on the nearest class mean classification strategy. We have demonstrated our results by showing that the embedding space remains intact after training the model with new classes. We showed that our method performed better than the existing state-of-the-art algorithms in terms of accuracy across different sessions.
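The nearest class mean rule mentioned in this abstract can be sketched generically as follows. This is a plain Euclidean version for illustration only; the paper's embedding network, quadruplet loss, and prototype-update procedure are not reproduced, and the function names `class_prototypes` and `ncm_predict` are hypothetical.

```python
import numpy as np

def class_prototypes(embeddings, labels):
    """Return (classes, prototypes): one mean embedding per class."""
    embeddings = np.asarray(embeddings, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    protos = np.stack([embeddings[labels == c].mean(axis=0) for c in classes])
    return classes, protos

def ncm_predict(queries, classes, protos):
    """Assign each query to the class whose prototype is nearest."""
    queries = np.asarray(queries, dtype=float)
    # Squared Euclidean distance between every query and every prototype.
    dists = ((queries[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return classes[dists.argmin(axis=1)]

support = [[0.0, 0.0], [0.0, 2.0], [10.0, 10.0], [10.0, 12.0]]
support_labels = [0, 0, 1, 1]
classes, protos = class_prototypes(support, support_labels)
preds = ncm_predict([[1.0, 1.0], [9.0, 9.0]], classes, protos)
```

Because prediction depends only on the stored prototypes, a prototype that drifts away from the true class mean after a new session directly degrades accuracy on old classes, which is why the abstract stresses keeping prototypes representative.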

FRIDA -- Generative Feature Replay for Incremental Domain Adaptation

Jan 11, 2022

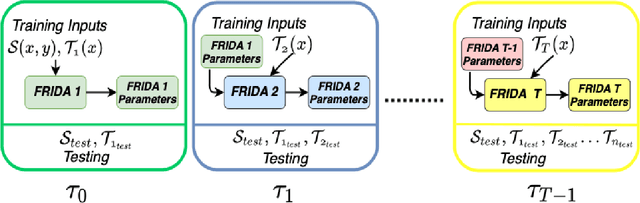

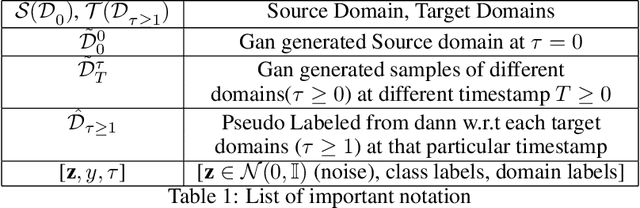

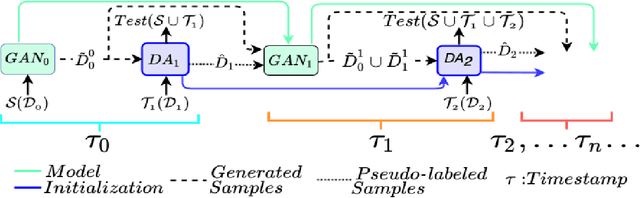

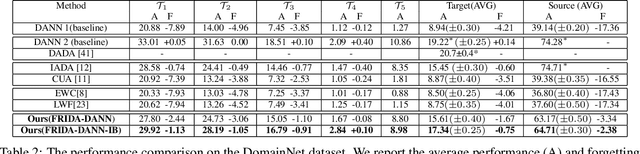

Abstract: We tackle the novel problem of incremental unsupervised domain adaptation (IDA) in this paper. We assume that a labeled source domain and different unlabeled target domains are incrementally observed, with the constraint that only data corresponding to the current domain are available at any given time. The goal is to preserve the accuracies for all the past domains while generalizing well for the current domain. The IDA setup suffers due to the abrupt differences among the domains and the unavailability of past data including the source domain. Inspired by the notion of generative feature replay, we propose a novel framework called Feature Replay based Incremental Domain Adaptation (FRIDA) which leverages a new incremental generative adversarial network (GAN) called domain-generic auxiliary classification GAN (DGAC-GAN) for producing domain-specific feature representations seamlessly. For domain alignment, we propose a simple extension of the popular domain adversarial neural network (DANN) called DANN-IB which encourages discriminative domain-invariant and task-relevant feature learning. Experimental results on Office-Home, Office-CalTech, and DomainNet datasets confirm that FRIDA maintains a better stability-plasticity trade-off than the literature.

Deep Learning based Novel View Synthesis

Jul 14, 2021

Abstract: Predicting novel views of a scene from real-world images has always been a challenging task. In this work, we propose a deep convolutional neural network (CNN) which learns to predict novel views of a scene from a given collection of images. In comparison to prior deep learning based approaches, which can handle only a fixed number of input images to predict a novel view, the proposed approach works with different numbers of input images. The proposed model explicitly performs feature extraction and matching from a given pair of input images and estimates, at each pixel, the probability distribution (pdf) over possible depth levels in the scene. This pdf is then used for estimating the novel view. The model estimates multiple predictions of the novel view, one estimate per input image pair, from the given image collection. The model also estimates an occlusion mask and combines multiple novel view estimates into a single optimal prediction. The finite number of depth levels used in the analysis may cause occasional blurriness in the estimated view. We mitigate this issue with a simple multi-resolution analysis which improves the quality of the estimates. We substantiate the performance on different datasets and show competitive performance.

Maximizing Conditional Entropy for Batch-Mode Active Learning of Perceptual Metrics

Mar 16, 2021

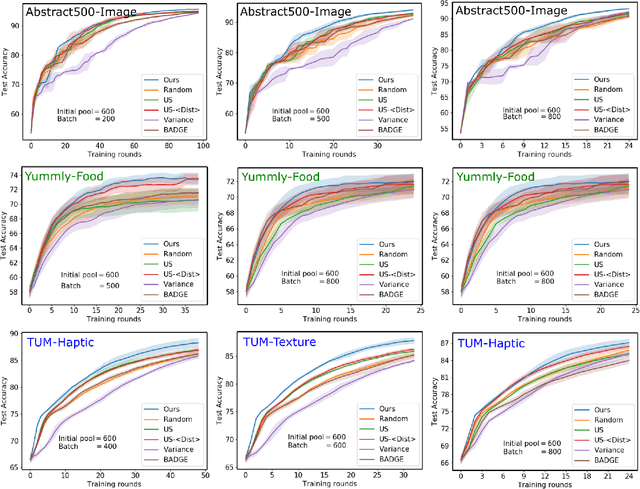

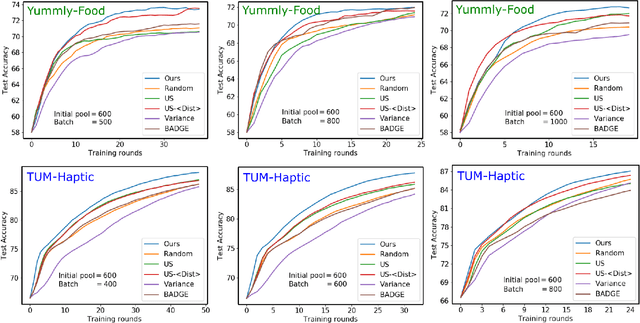

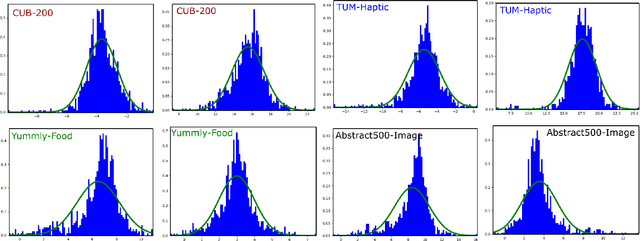

Abstract:Active metric learning is the problem of incrementally selecting batches of training data (typically, ordered triplets) to annotate, in order to progressively improve a learned model of a metric over some input domain as rapidly as possible. Standard approaches, which independently select each triplet in a batch, are susceptible to highly correlated batches with many redundant triplets and hence low overall utility. While there has been recent work on selecting decorrelated batches for metric learning \cite{kumari2020batch}, these methods rely on ad hoc heuristics to estimate the correlation between two triplets at a time. We present a novel approach for batch mode active metric learning using the Maximum Entropy Principle that seeks to collectively select batches with maximum joint entropy, which captures both the informativeness and the diversity of the triplets. The entropy is derived from the second-order statistics estimated by dropout. We take advantage of the monotonically increasing submodular entropy function to construct an efficient greedy algorithm based on Gram-Schmidt orthogonalization that is provably $\left( 1 - \frac{1}{e} \right)$-optimal. Our approach is the first batch-mode active metric learning method to define a unified score that balances informativeness and diversity for an entire batch of triplets. Experiments with several real-world datasets demonstrate that our algorithm is robust and consistently outperforms the state-of-the-art.
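The greedy batch construction can be illustrated with a small sketch. It assumes the candidates are modeled as jointly Gaussian with a known covariance matrix `K`, so that joint entropy is, up to constants, the log-determinant of the selected submatrix; the paper's dropout-based estimation of second-order statistics is not reproduced, and the function name `greedy_max_entropy` is hypothetical. Each step picks the candidate with the largest variance conditioned on those already chosen, which is a Gram-Schmidt (pivoted Cholesky) update.

```python
import numpy as np

def greedy_max_entropy(K, batch_size):
    """Greedily select indices that maximize the log-determinant of the
    chosen covariance submatrix (Gaussian joint entropy up to constants)."""
    K = np.asarray(K, dtype=float)
    n = K.shape[0]
    resid = np.diag(K).copy()            # variance conditioned on the selection
    basis = np.zeros((batch_size, n))    # rows of the partial Cholesky factor
    chosen = np.zeros(n, dtype=bool)
    selected = []
    for t in range(batch_size):
        scores = np.where(chosen, -np.inf, resid)
        j = int(np.argmax(scores))
        if scores[j] <= 1e-12:           # nothing informative left to add
            break
        # Gram-Schmidt step: remove the part of column j explained so far.
        row = (K[j] - basis[:t].T @ basis[:t, j]) / np.sqrt(resid[j])
        basis[t] = row
        resid = resid - row ** 2
        chosen[j] = True
        selected.append(j)
    return selected

# Candidates 0 and 1 are individually informative but highly correlated.
K = np.array([[2.0, 1.9, 0.0],
              [1.9, 2.0, 0.0],
              [0.0, 0.0, 1.0]])
batch = greedy_max_entropy(K, batch_size=2)
```

In this toy covariance, the second pick is candidate 2 rather than candidate 1: after candidate 0 is chosen, candidate 1 has little remaining variance, so the batch favors diversity over a redundant high-variance item.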

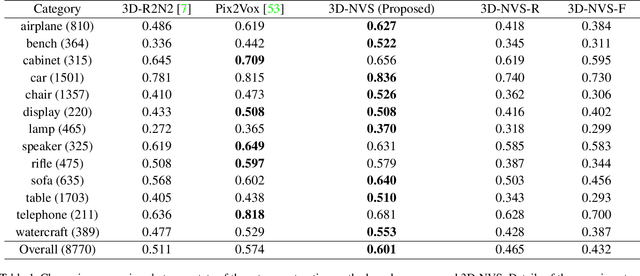

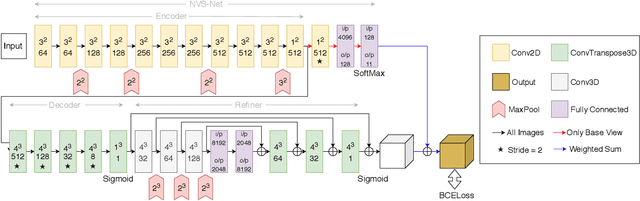

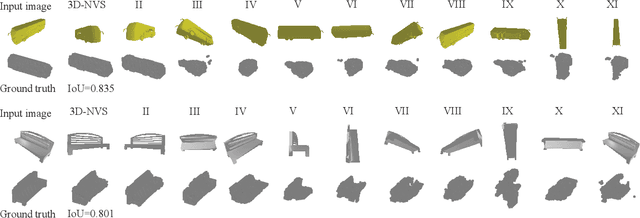

3D-NVS: A 3D Supervision Approach for Next View Selection

Dec 03, 2020

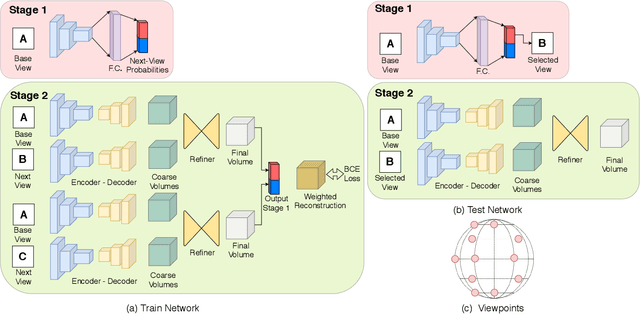

Abstract: We present a classification based approach for the next best view selection and show how we can plausibly obtain a supervisory signal for this task. The proposed approach is end-to-end trainable and aims to get the best possible 3D reconstruction quality with a pair of passively acquired 2D views. The proposed model consists of two stages: a classifier and a reconstructor network trained jointly via the indirect 3D supervision from ground truth voxels. While testing, the proposed method assumes no prior knowledge of the underlying 3D shape for selecting the next best view. We demonstrate the proposed method's effectiveness via detailed experiments on synthetic and real images and show how it provides better reconstruction quality than the existing state-of-the-art 3D reconstruction and next best view prediction techniques.

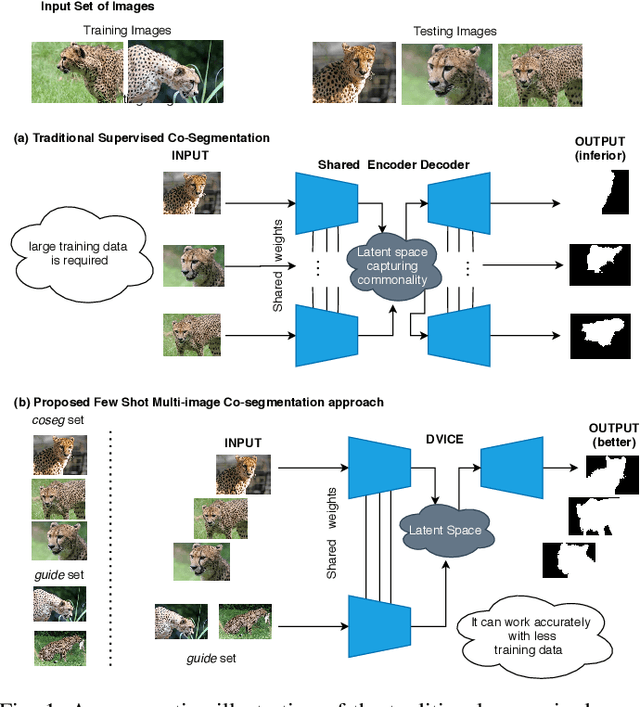

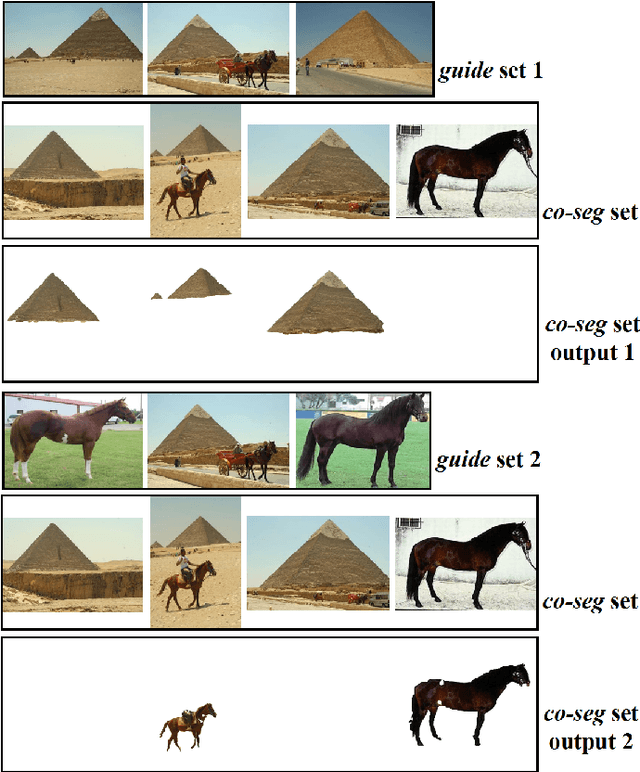

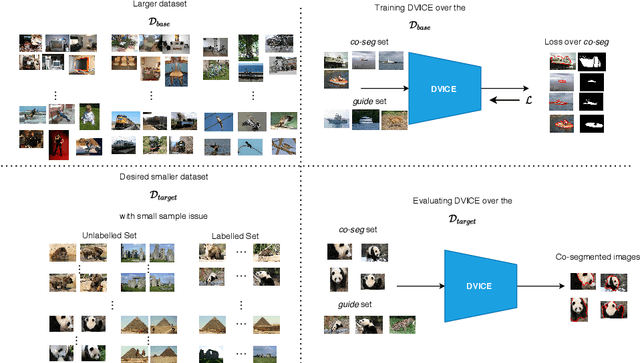

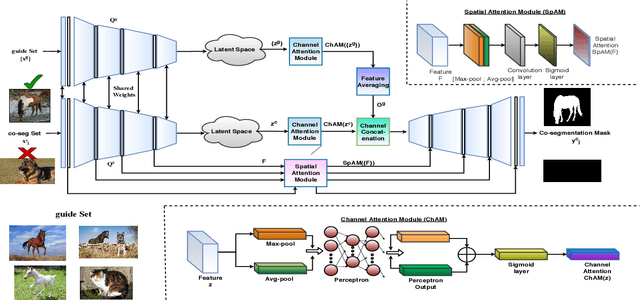

Directed Variational Cross-encoder Network for Few-shot Multi-image Co-segmentation

Oct 17, 2020

Abstract:In this paper, we propose a novel framework for multi-image co-segmentation using class agnostic meta-learning strategy by generalizing to new classes given only a small number of training samples for each new class. We have developed a novel encoder-decoder network termed as DVICE (Directed Variational Inference Cross Encoder), which learns a continuous embedding space to ensure better similarity learning. We employ a combination of the proposed DVICE network and a novel few-shot learning approach to tackle the small sample size problem encountered in co-segmentation with small datasets like iCoseg and MSRC. Furthermore, the proposed framework does not use any semantic class labels and is entirely class agnostic. Through exhaustive experimentation over multiple datasets using only a small volume of training data, we have demonstrated that our approach outperforms all existing state-of-the-art techniques.
