Gemma Roig

Goethe University Frankfurt

Temporal Slowness in Central Vision Drives Semantic Object Learning

Feb 04, 2026

Abstract:Humans acquire semantic object representations from egocentric visual streams with minimal supervision. Importantly, the visual system processes with high resolution only the center of its field of view and learns similar representations for visual inputs occurring close in time. This emphasizes slowly changing information around gaze locations. This study investigates the role of central vision and slowness learning in the formation of semantic object representations from human-like visual experience. We simulate five months of human-like visual experience using the Ego4D dataset and generate gaze coordinates with a state-of-the-art gaze prediction model. Using these predictions, we extract crops that mimic central vision and train a time-contrastive Self-Supervised Learning model on them. Our results show that combining temporal slowness and central vision improves the encoding of different semantic facets of object representations. Specifically, focusing on central vision strengthens the extraction of foreground object features, while considering temporal slowness, especially during fixational eye movements, allows the model to encode broader semantic information about objects. These findings provide new insights into the mechanisms by which humans may develop semantic object representations from natural visual experience.
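
To illustrate what "learning similar representations for inputs occurring close in time" can look like as an objective, here is a minimal NumPy sketch of an InfoNCE-style time-contrastive loss. It is an assumption for illustration, not the training objective reported in the paper: the function name `time_contrastive_loss`, the `temperature` value, and the choice of InfoNCE are all hypothetical. Each row of `anchors` is paired with the row of `positives` that was sampled close in time, and the other rows in the batch serve as negatives.

```python
import numpy as np


def time_contrastive_loss(anchors, positives, temperature=0.1):
    """InfoNCE-style loss; positives[i] is the crop seen close in time to anchors[i].

    Both inputs have shape (N, D). All other rows in the batch act as negatives.
    """
    a = np.asarray(anchors, dtype=float)
    p = np.asarray(positives, dtype=float)
    # Unit-normalize so the dot product is a cosine similarity.
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    p = p / np.linalg.norm(p, axis=1, keepdims=True)
    logits = a @ p.T / temperature  # (N, N) similarity matrix
    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # The diagonal holds the log-probability of the true temporal neighbor.
    return float(-np.mean(np.diag(log_prob)))
```

The loss is small when each anchor is most similar to its own temporal neighbor and large when the pairing is scrambled.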

The Representational Alignment between Humans and Language Models is implicitly driven by a Concreteness Effect

May 21, 2025

Abstract:The nouns of our language refer to either concrete entities (like a table) or abstract concepts (like justice or love), and cognitive psychology has established that concreteness influences how words are processed. Accordingly, understanding how concreteness is represented in our mind and brain is a central question in psychology, neuroscience, and computational linguistics. While the advent of powerful language models has allowed for quantitative inquiries into the nature of semantic representations, it remains largely underexplored how they represent concreteness. Here, we used behavioral judgments to estimate semantic distances implicitly used by humans, for a set of carefully selected abstract and concrete nouns. Using Representational Similarity Analysis, we find that the implicit representational space of participants and the semantic representations of language models are significantly aligned. We also find that both representational spaces are implicitly aligned to an explicit representation of concreteness, which was obtained from our participants using an additional concreteness rating task. Importantly, using ablation experiments, we demonstrate that the human-to-model alignment is substantially driven by concreteness, but not by other important word characteristics established in psycholinguistics. These results indicate that humans and language models converge on the concreteness dimension, but not on other dimensions.
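
As a rough illustration of Representational Similarity Analysis, the sketch below builds a representational dissimilarity matrix (RDM) for each system and rank-correlates their upper triangles. The Euclidean distance, the Spearman-style rank correlation, and the names `rdm` and `rsa_score` are assumptions chosen for illustration; the abstract does not state which distance or correlation the study used.

```python
import numpy as np


def rdm(features):
    """Pairwise Euclidean distances between item vectors (one item per row)."""
    x = np.asarray(features, dtype=float)
    diff = x[:, None, :] - x[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def _ranks(values):
    # Ordinal ranks; ties are broken by position (a simplification).
    order = np.argsort(values, kind="stable")
    ranks = np.empty(len(values), dtype=float)
    ranks[order] = np.arange(len(values))
    return ranks


def rsa_score(rdm_a, rdm_b):
    """Rank correlation between the upper triangles of two RDMs."""
    iu = np.triu_indices_from(rdm_a, k=1)  # skip the zero diagonal
    return float(np.corrcoef(_ranks(rdm_a[iu]), _ranks(rdm_b[iu]))[0, 1])
```

In a setting like the one described, one RDM would come from human behavioral judgments and the other from language-model embeddings of the same nouns.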

Human Gaze Boosts Object-Centered Representation Learning

Jan 06, 2025

Abstract:Recent self-supervised learning (SSL) models trained on human-like egocentric visual inputs substantially underperform on image recognition tasks compared to humans. These models train on raw, uniform visual inputs collected from head-mounted cameras. This differs from human vision, where the anatomical structure of the retina and visual cortex relatively amplifies central visual information, i.e., the information around the gaze location. This selective amplification in humans likely aids in forming object-centered visual representations. Here, we investigate whether focusing on central visual information boosts egocentric visual object learning. We simulate five months of egocentric visual experience using the large-scale Ego4D dataset and generate gaze locations with a human gaze prediction model. To account for the importance of central vision in humans, we crop the visual area around the gaze location. Finally, we train a time-based SSL model on these modified inputs. Our experiments demonstrate that focusing on central vision leads to better object-centered representations. Our analysis shows that the SSL model leverages the temporal dynamics of the gaze movements to build stronger visual representations. Overall, our work marks a significant step toward bio-inspired learning of visual representations.
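
The cropping step can be pictured with a small sketch. This is a hypothetical helper, not the authors' preprocessing code: the name `crop_around_gaze`, the square crop, and the choice to shift the window so it stays inside the frame (rather than padding) are all assumptions.

```python
import numpy as np


def crop_around_gaze(frame, gaze_xy, size):
    """Return a size x size crop centered on the gaze point.

    frame: array of shape (H, W) or (H, W, C).
    gaze_xy: (x, y) pixel coordinates of the predicted gaze location.
    The window is shifted, not padded, when the gaze is near a border.
    """
    h, w = frame.shape[:2]
    if size > h or size > w:
        raise ValueError("crop size exceeds frame size")
    x, y = gaze_xy
    left = int(round(x)) - size // 2
    top = int(round(y)) - size // 2
    # Clamp the window so it lies fully inside the frame.
    left = min(max(left, 0), w - size)
    top = min(max(top, 0), h - size)
    return frame[top:top + size, left:left + size]
```

Consecutive crops produced this way could then be fed to a time-based SSL objective as temporally adjacent views.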

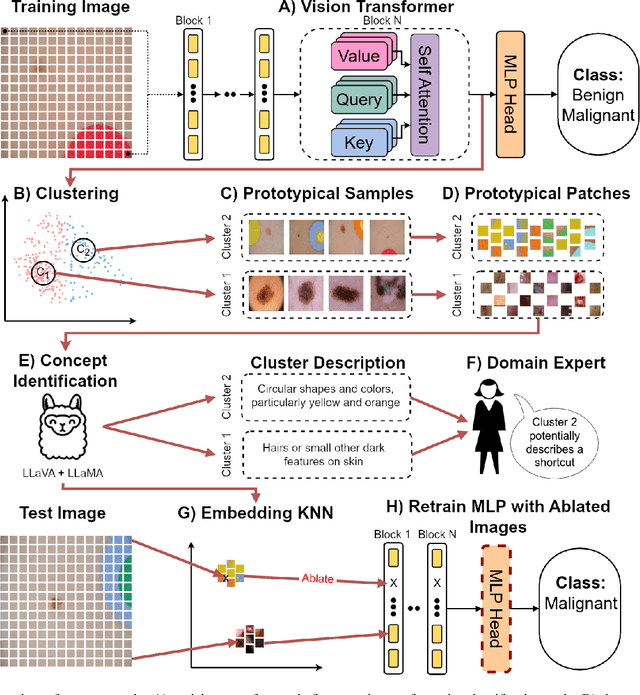

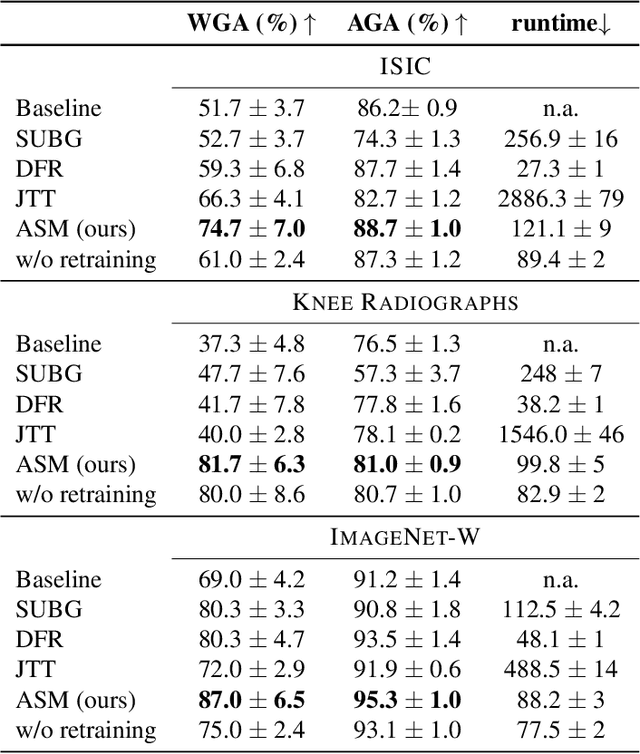

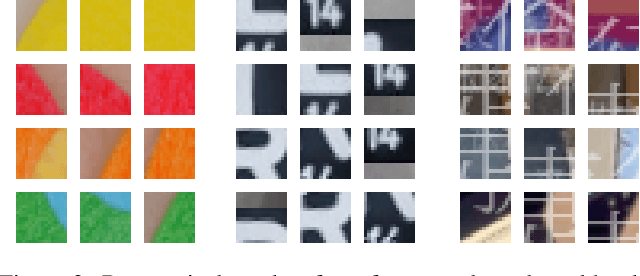

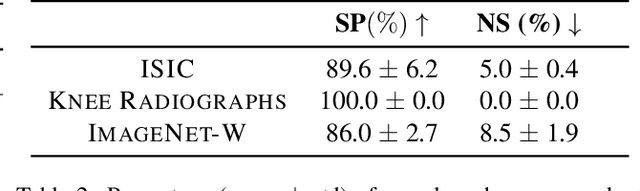

Efficient Unsupervised Shortcut Learning Detection and Mitigation in Transformers

Jan 01, 2025

Abstract:Shortcut learning, i.e., a model's reliance on undesired features not directly relevant to the task, is a major challenge that severely limits the applications of machine learning algorithms, particularly when deploying them to assist in making sensitive decisions, such as in medical diagnostics. In this work, we leverage recent advancements in machine learning to create an unsupervised framework that is capable of both detecting and mitigating shortcut learning in transformers. We validate our method on multiple datasets. Results demonstrate that our framework significantly improves both worst-group accuracy (samples misclassified due to shortcuts) and average accuracy, while minimizing human annotation effort. Moreover, we demonstrate that the detected shortcuts are meaningful and informative to human experts, and that our framework is computationally efficient, allowing it to be run on consumer hardware.
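
For readers unfamiliar with the two metrics named above, the following sketch computes per-group, worst-group, and average accuracy. It assumes that every sample carries a group identifier (for example, a combination of class label and shortcut presence); the grouping scheme and the function names are illustrative assumptions, not taken from the paper.

```python
import numpy as np


def group_accuracies(y_true, y_pred, groups):
    """Accuracy computed separately for each group identifier."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    groups = np.asarray(groups)
    correct = y_true == y_pred
    return {g.item(): float(correct[groups == g].mean()) for g in np.unique(groups)}


def worst_group_accuracy(y_true, y_pred, groups):
    """Lowest per-group accuracy; sensitive to groups hurt by shortcuts."""
    return min(group_accuracies(y_true, y_pred, groups).values())


def average_accuracy(y_true, y_pred):
    """Plain accuracy over all samples."""
    return float((np.asarray(y_true) == np.asarray(y_pred)).mean())
```

A model that relies on a shortcut can score well on average accuracy while the worst-group value stays low, which is why both numbers are usually reported together.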

On Explaining Knowledge Distillation: Measuring and Visualising the Knowledge Transfer Process

Dec 18, 2024

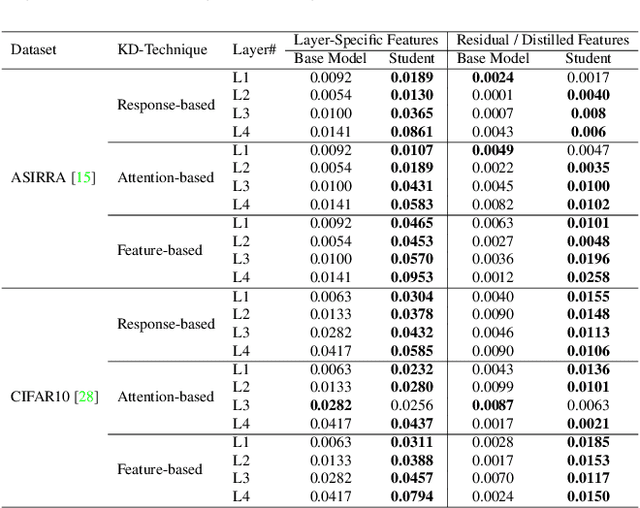

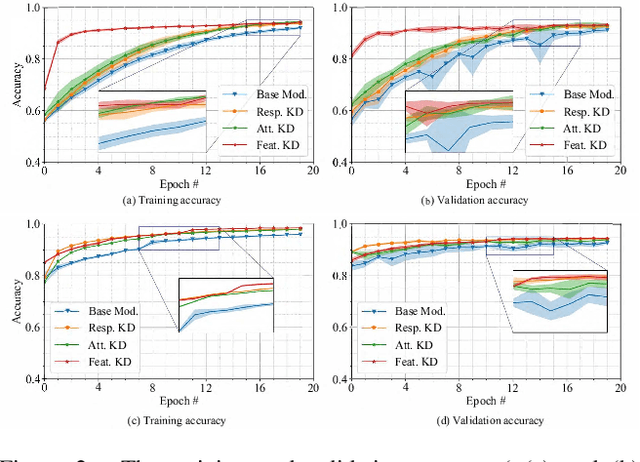

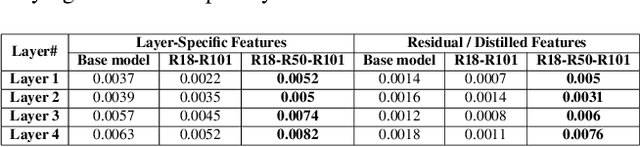

Abstract:Knowledge distillation (KD) remains challenging due to the opaque nature of the knowledge transfer process from a Teacher to a Student, making it difficult to address certain issues related to KD. To address this, we proposed UniCAM, a novel gradient-based visual explanation method, which effectively interprets the knowledge learned during KD. Our experimental results demonstrate that with the guidance of the Teacher's knowledge, the Student model becomes more efficient, learning more relevant features while discarding those that are not relevant. We refer to the features learned with the Teacher's guidance as distilled features and the features irrelevant to the task and ignored by the Student as residual features. Distilled features focus on key aspects of the input, such as textures and parts of objects. In contrast, residual features demonstrate more diffused attention, often targeting irrelevant areas, including the backgrounds of the target objects. In addition, we proposed two novel metrics: the feature similarity score (FSS) and the relevance score (RS), which quantify the relevance of the distilled knowledge. Experiments on the CIFAR10, ASIRRA, and Plant Disease datasets demonstrate that UniCAM and the two metrics offer valuable insights to explain the KD process.

Limited but consistent gains in adversarial robustness by co-training object recognition models with human EEG

Sep 05, 2024

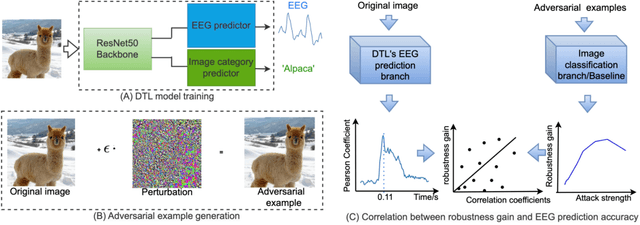

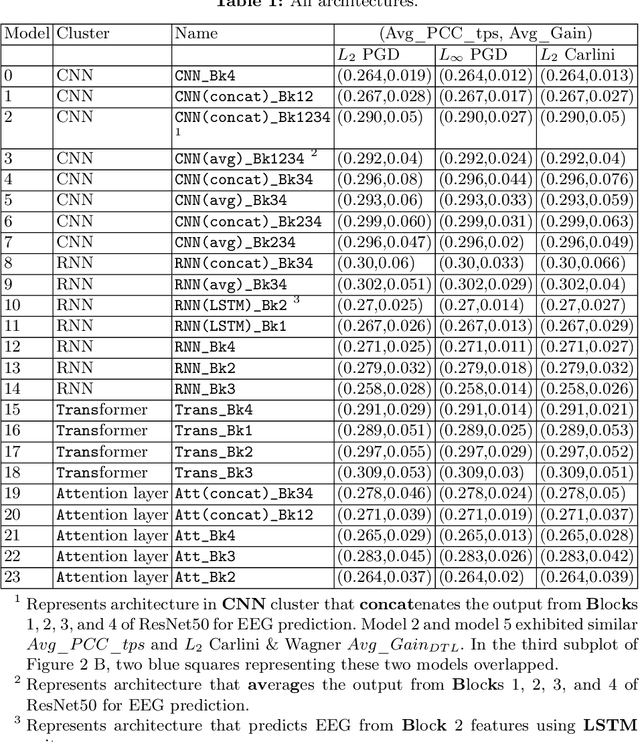

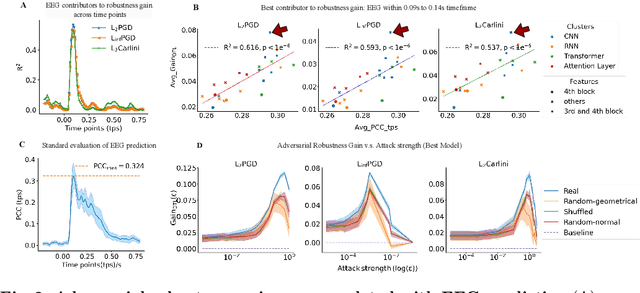

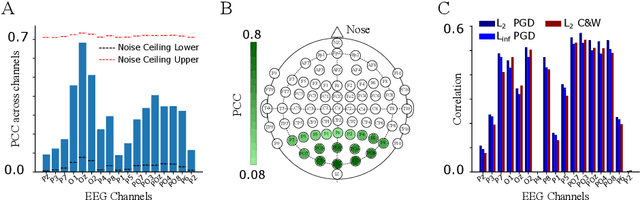

Abstract:In contrast to human vision, artificial neural networks (ANNs) remain relatively susceptible to adversarial attacks. To address this vulnerability, efforts have been made to transfer inductive bias from human brains to ANNs, often by training the ANN representations to match their biological counterparts. Previous works relied on brain data acquired in rodents or primates using invasive techniques, from specific regions of the brain, under non-natural conditions (anesthetized animals), and with stimulus datasets lacking diversity and naturalness. In this work, we explored whether aligning model representations to human EEG responses to a rich set of real-world images increases the robustness of ANNs. Specifically, we trained ResNet50-backbone models on a dual task of classification and EEG prediction, and evaluated their EEG prediction accuracy and robustness to adversarial attacks. We observed a significant correlation between the networks' EEG prediction accuracy, often highest around 100 ms post stimulus onset, and their gains in adversarial robustness. Although the effect size was limited, effects were consistent across different random initializations and robust across architectural variants. We further teased apart the data from individual EEG channels and observed the strongest contribution from electrodes in the parieto-occipital regions. The demonstrated utility of human EEG for such tasks opens up avenues for future efforts that scale to larger datasets under diverse stimulus conditions with the promise of stronger effects.

Human-inspired Explanations for Vision Transformers and Convolutional Neural Networks

Aug 20, 2024

Abstract:We introduce Foveation-based Explanations (FovEx), a novel human-inspired visual explainability (XAI) method for Deep Neural Networks. Our method achieves state-of-the-art performance on both transformer (on 4 out of 5 metrics) and convolutional models (on 3 out of 5 metrics), demonstrating its versatility. Furthermore, we show the alignment between the explanation map produced by FovEx and human gaze patterns (+14% in NSS compared to RISE, +203% in NSS compared to GradCAM), enhancing our confidence in FovEx's ability to close the interpretation gap between humans and machines.

FovEx: Human-inspired Explanations for Vision Transformers and Convolutional Neural Networks

Aug 04, 2024

Abstract:Explainability in artificial intelligence (XAI) remains a crucial aspect for fostering trust and understanding in machine learning models. Current visual explanation techniques, such as gradient-based or class-activation-based methods, often exhibit a strong dependence on specific model architectures. Conversely, perturbation-based methods, despite being model-agnostic, are computationally expensive as they require evaluating models on a large number of forward passes. In this work, we introduce Foveation-based Explanations (FovEx), a novel XAI method inspired by human vision. FovEx seamlessly integrates biologically inspired perturbations by iteratively creating foveated renderings of the image and combines them with gradient-based visual explorations to determine locations of interest efficiently. These locations are selected to maximize the performance of the model to be explained with respect to the downstream task and then combined to generate an attribution map. We provide a thorough evaluation with qualitative and quantitative assessments on established benchmarks. Our method achieves state-of-the-art performance on both transformers (on 4 out of 5 metrics) and convolutional models (on 3 out of 5 metrics), demonstrating its versatility among various architectures. Furthermore, we show the alignment between the explanation map produced by FovEx and human gaze patterns (+14% in NSS compared to RISE, +203% in NSS compared to GradCAM). This comparison enhances our confidence in FovEx's ability to close the interpretation gap between humans and machines.
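
The NSS figures quoted above refer to Normalized Scanpath Saliency, which is commonly defined as the mean of the z-scored map at human fixation locations. The sketch below follows that common definition; the function name `nss` and the `(row, col)` fixation format are assumptions, and the paper's exact evaluation protocol is not specified here.

```python
import numpy as np


def nss(saliency_map, fixations):
    """Normalized Scanpath Saliency.

    saliency_map: 2D array (explanation or attribution map).
    fixations: iterable of (row, col) pixel indices of human fixations.
    """
    s = np.asarray(saliency_map, dtype=float)
    std = s.std()
    if std == 0:
        raise ValueError("saliency map is constant; NSS is undefined")
    z = (s - s.mean()) / std  # zero mean, unit variance
    rows, cols = zip(*fixations)
    return float(z[list(rows), list(cols)].mean())
```

Positive values mean the map assigns above-average importance to the places people actually looked at, and values near zero indicate chance-level correspondence.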

Classification of freshwater snails of the genus *Radomaniola* with multimodal triplet networks

Jul 29, 2024

Abstract:In this paper, we present our first proposal of a machine learning system for the classification of freshwater snails of the genus *Radomaniola*. We elaborate on the specific challenges encountered during system design, and how we tackled them; namely a small, very imbalanced dataset with a high number of classes and high visual similarity between classes. We then show how we employed triplet networks and the multiple input modalities of images, measurements, and genetic information to overcome these challenges and reach a performance comparable to that of a trained domain expert.
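
Triplet networks are typically trained with a margin-based triplet loss. The sketch below shows the standard form on precomputed embeddings; it is a generic illustration, not the system described in the paper. The Euclidean distance, the `margin` value, and the function name are assumptions, and how the image, measurement, and genetic modalities are fused into one embedding is left out.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Mean hinge loss max(0, d(a, p) - d(a, n) + margin) over a batch.

    Each argument has shape (N, D). positive[i] shares a class with
    anchor[i]; negative[i] belongs to a different class.
    """
    a = np.asarray(anchor, dtype=float)
    p = np.asarray(positive, dtype=float)
    n = np.asarray(negative, dtype=float)
    d_pos = np.linalg.norm(a - p, axis=1)
    d_neg = np.linalg.norm(a - n, axis=1)
    return float(np.maximum(d_pos - d_neg + margin, 0.0).mean())
```

Because the loss compares samples rather than predicting class scores directly, it is often chosen when there are many classes with few examples each.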

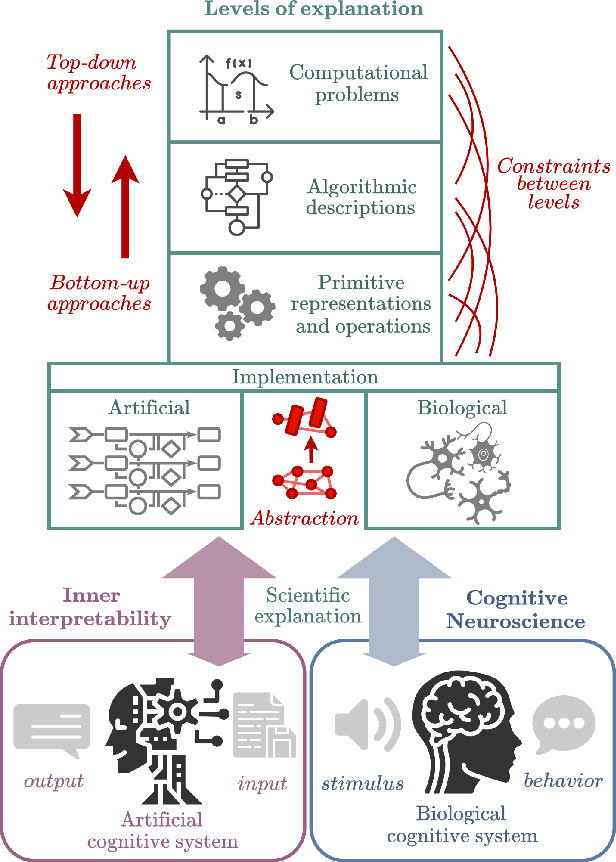

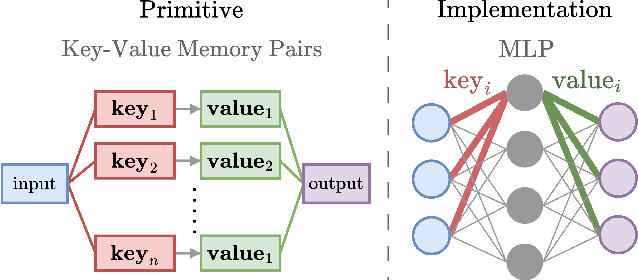

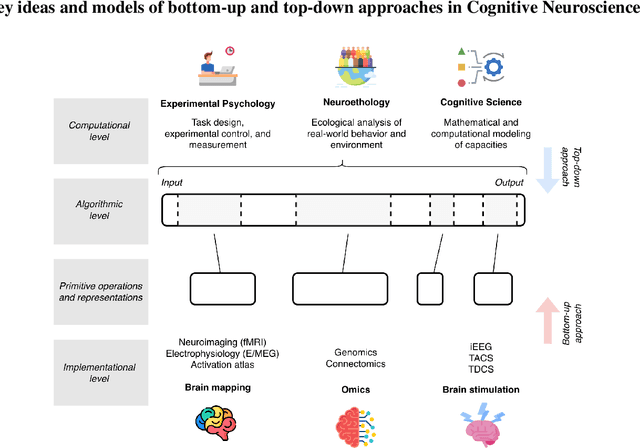

Position Paper: An Inner Interpretability Framework for AI Inspired by Lessons from Cognitive Neuroscience

Jun 03, 2024

Abstract:Inner Interpretability is a promising emerging field tasked with uncovering the inner mechanisms of AI systems, though how to develop these mechanistic theories is still much debated. Moreover, recent critiques raise issues that question its usefulness to advance the broader goals of AI. However, it has been overlooked that these issues resemble those that have been grappled with in another field: Cognitive Neuroscience. Here we draw the relevant connections and highlight lessons that can be transferred productively between fields. Based on these, we propose a general conceptual framework and give concrete methodological strategies for building mechanistic explanations in AI inner interpretability research. With this conceptual framework, Inner Interpretability can fend off critiques and position itself on a productive path to explain AI systems.
