Priyadarshini Kumari

CosFairNet: A Parameter-Space based Approach for Bias Free Learning

Oct 19, 2024

Abstract: Deep neural networks trained on biased data often inadvertently learn unintended inference rules, particularly when labels are strongly correlated with biased features. Existing bias mitigation methods typically either (a) predefine bias types and enforce them as prior knowledge or (b) reweight training samples to emphasize bias-conflicting samples over bias-aligned ones. However, both strategies address bias indirectly, in the feature or sample space, and offer no control over the learned weights, which makes it difficult to control how bias propagates across layers. Based on this observation, we introduce a novel approach that addresses bias directly in the model's parameter space, preventing its propagation across layers. Our method trains two models: a bias model that captures biased features and a debias model that learns unbiased details under the guidance of the bias model. We enforce similarity between the debias model's initial layers and those of the bias model, and dissimilarity between their later layers, ensuring that the debias model learns unbiased low-level features without adopting biased high-level abstractions. By incorporating this explicit constraint during training, our approach improves classification accuracy and debiasing effectiveness across various synthetic and real-world datasets of different sizes. Moreover, the proposed method is robust across different bias types and percentages of biased samples in the training data. The code is available at: https://visdomlab.github.io/CosFairNet/
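The abstract describes the layer-wise constraint only qualitatively. One way to express it, assuming (as the name CosFairNet hints) that layer similarity is measured by the cosine similarity of flattened weight tensors, is a penalty that rewards a cosine near 1 in the first layers and near 0 in the remaining ones. The function names, the `split` index, and the use of `abs(c)` as the dissimilarity term are illustrative assumptions, not the authors' formulation. NumPy is used only to show the quantity; a training implementation would compute it on framework tensors so that gradients reach the debias model.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray, eps: float = 1e-12) -> float:
    """Cosine similarity between two weight tensors, flattened to vectors."""
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps))


def parameter_space_penalty(bias_weights, debias_weights, split: int) -> float:
    """Hypothetical layer-wise penalty on the debias model.

    Layers with index < split are pulled toward the bias model (cosine -> 1);
    later layers are pushed toward orthogonality with it (cosine -> 0).
    """
    penalty = 0.0
    for i, (w_bias, w_debias) in enumerate(zip(bias_weights, debias_weights)):
        c = cosine(w_bias, w_debias)
        # Early layers: penalize dissimilarity. Later layers: penalize similarity.
        penalty += (1.0 - c) if i < split else abs(c)
    return penalty
```

How this term would be weighted against the debias model's classification loss is another hypothetical hyperparameter not specified in the abstract.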

Visual Transformer for Soil Classification

Sep 07, 2022

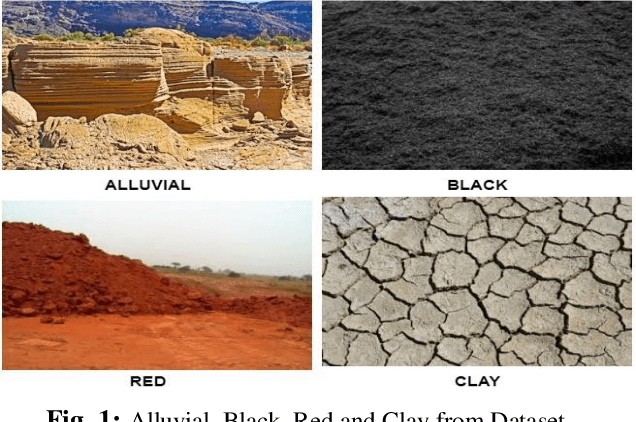

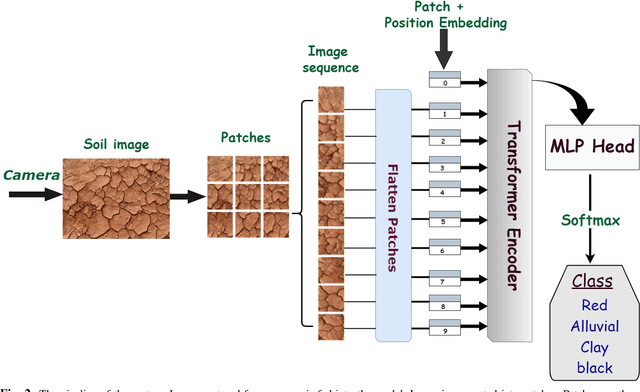

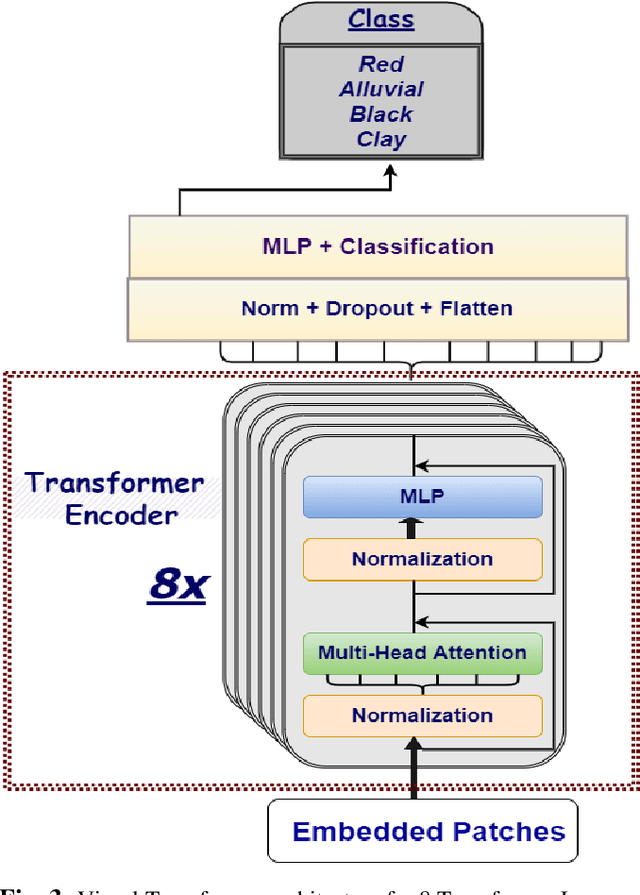

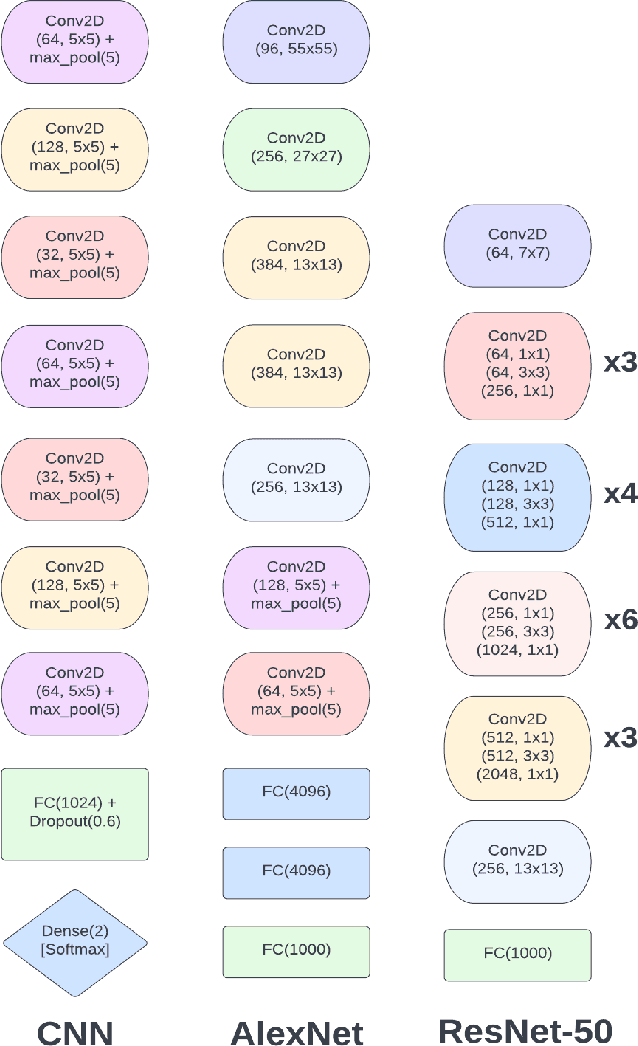

Abstract: Our food security is built on the foundation of soil: without healthy soils, farmers could not provide us with fiber, food, and fuel. Accurately predicting the soil type helps in planning how the soil is used and thus increases productivity. This research employs state-of-the-art Visual Transformers and compares their performance with other models such as SVM, AlexNet, ResNet, and CNN. The study also compares different Visual Transformer architectures. For soil-type classification, the dataset contains samples of four soil types: alluvial, red, black, and clay. The Visual Transformer model outperforms the other models in both training and test accuracy, reaching 98.13% on training and 93.62% on testing, and exceeds the performance of the other models by at least 2%. Hence, Visual Transformers can be used for computer vision tasks including soil classification.

Maximizing Conditional Entropy for Batch-Mode Active Learning of Perceptual Metrics

Mar 16, 2021

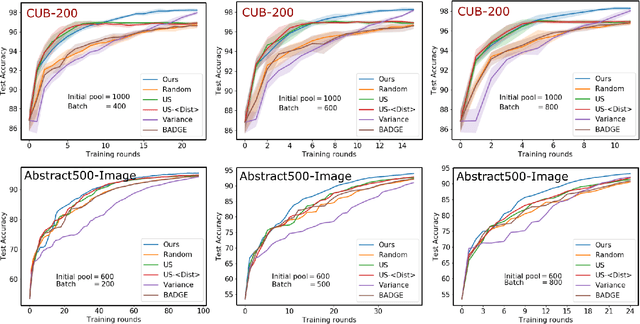

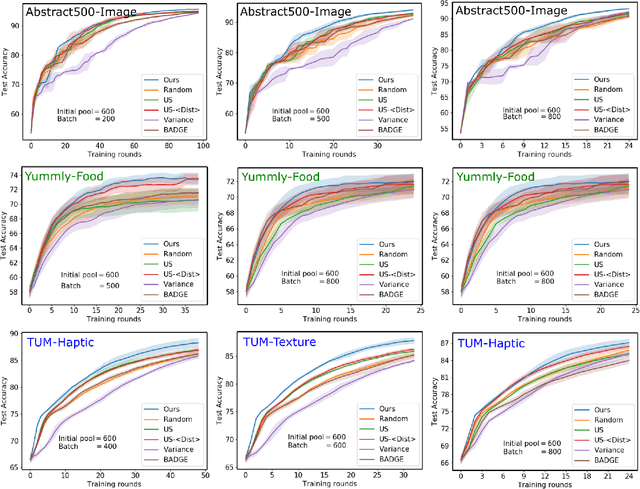

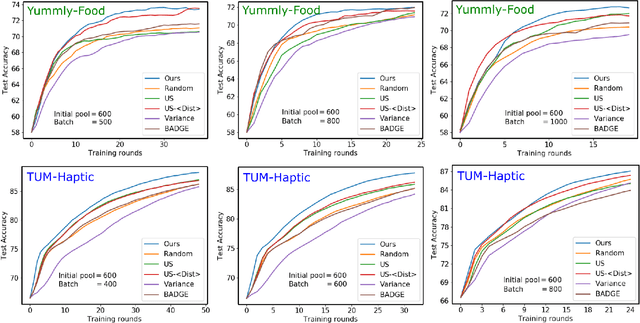

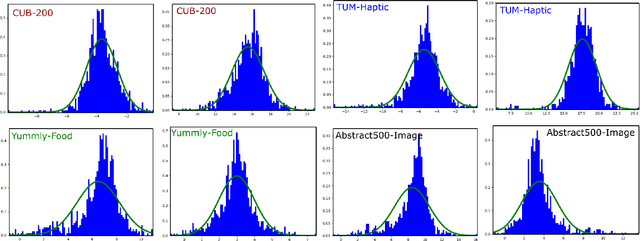

Abstract: Active metric learning is the problem of incrementally selecting batches of training data (typically, ordered triplets) to annotate, in order to progressively improve a learned model of a metric over some input domain as rapidly as possible. Standard approaches, which select each triplet in a batch independently, are prone to producing highly correlated batches with many redundant triplets and hence low overall utility. While there has been recent work on selecting decorrelated batches for metric learning \cite{kumari2020batch}, these methods rely on ad hoc heuristics to estimate the correlation between two triplets at a time. We present a novel approach for batch-mode active metric learning using the Maximum Entropy Principle that seeks to collectively select batches with maximum joint entropy, which captures both the informativeness and the diversity of the triplets. The entropy is derived from second-order statistics estimated by dropout. We exploit the fact that this entropy is a monotonically increasing submodular function to construct an efficient greedy algorithm, based on Gram-Schmidt orthogonalization, that is provably $\left( 1 - \frac{1}{e} \right)$-optimal. Our approach is the first batch-mode active metric learning method to define a unified score that balances informativeness and diversity for an entire batch of triplets. Experiments on several real-world datasets demonstrate that our algorithm is robust and consistently outperforms the state of the art.
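The selection procedure can be sketched as follows, assuming each candidate triplet is summarized by one scalar model output per stochastic (dropout-enabled) forward pass, and that the batch's joint entropy is approximated by the Gaussian entropy of the dropout covariance, which up to constants is the log-determinant of the selected covariance submatrix. Under that assumption, adding the candidate with the largest Gram-Schmidt residual is exactly the greedy step for the log-determinant, because each selection multiplies the determinant by that candidate's squared residual norm. All names are hypothetical; this is a generic sketch, not the authors' algorithm.

```python
import numpy as np


def dropout_covariance(samples: np.ndarray) -> np.ndarray:
    """Empirical covariance of candidate outputs across dropout passes.

    samples: (T, N) array; row t holds the outputs of the N candidate
    triplets on the t-th stochastic forward pass (dropout enabled).
    """
    centered = samples - samples.mean(axis=0, keepdims=True)
    return centered.T @ centered / (samples.shape[0] - 1)


def greedy_max_entropy_batch(samples: np.ndarray, k: int, tol: float = 1e-12) -> list:
    """Greedily choose up to k candidates maximizing the log-determinant of
    their covariance submatrix (Gaussian joint entropy up to constants)."""
    t = samples.shape[0]
    # Column j is candidate j's scaled, centered output vector; the Gram
    # matrix of these columns equals dropout_covariance(samples).
    residual = (samples - samples.mean(axis=0, keepdims=True)) / np.sqrt(t - 1)
    chosen = []
    for _ in range(k):
        gains = np.sum(residual ** 2, axis=0)  # squared residual norms
        gains[chosen] = -np.inf                # never pick a candidate twice
        j = int(np.argmax(gains))
        if gains[j] <= tol:                    # nothing left adds new information
            break
        chosen.append(j)                       # log-det grows by log(gains[j])
        q = residual[:, j] / np.sqrt(gains[j])  # new orthonormal direction
        residual -= np.outer(q, q @ residual)   # Gram-Schmidt: project it out of every column
    return chosen
```

Because a duplicate of an already selected triplet has zero residual after orthogonalization, redundant candidates are skipped automatically, which is the diversity effect the abstract attributes to joint entropy.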

Boosted Semantic Embedding based Discriminative Feature Generation for Texture Analysis

Oct 10, 2020

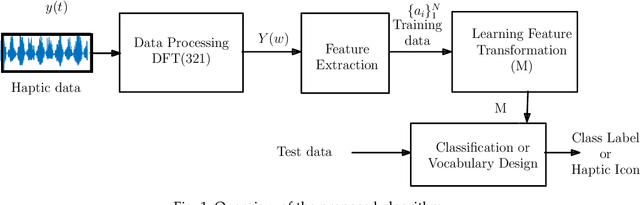

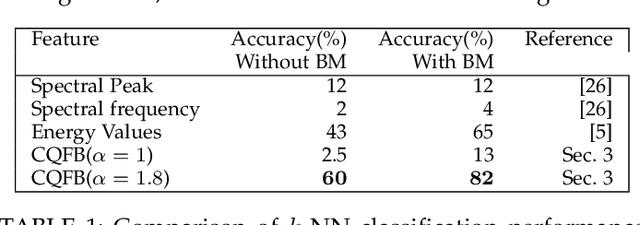

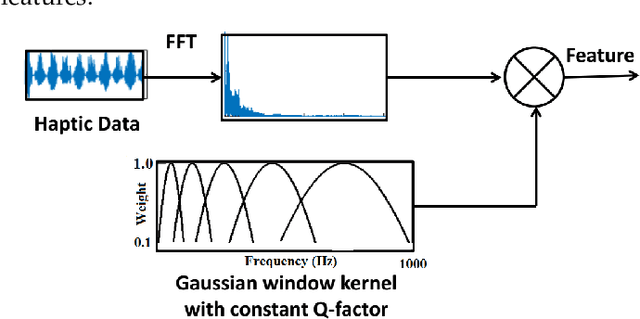

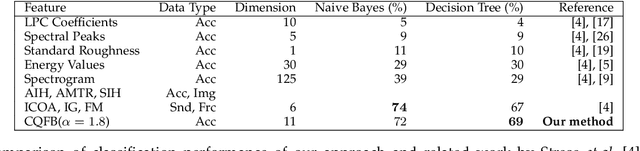

Abstract: Learning discriminative features is crucial for various robotic applications such as object detection and classification. In this paper, we present a general framework for analyzing the discriminative properties of haptic signals. We focus on two crucial components of a robotic perception system: discriminative feature extraction, and metric-based feature transformation that enhances the separability of haptic signals in the projected space. We propose a set of hand-crafted haptic features (generated only from acceleration data) that enables discrimination of real-world textures. Since Euclidean distances in the original feature space do not reflect the underlying patterns in the data, we propose to learn a transformation function that projects the features onto a new space, in which we apply different pattern recognition algorithms for texture classification and discrimination tasks. Unlike existing methods, we use a triplet-based method for improved discrimination in the embedded space. We further demonstrate how to build a haptic vocabulary by selecting a compact set of the most distinct and representative signals in the embedded space. The experimental results show that the proposed features, combined with the learned embedding, improve the performance of semantic discrimination tasks such as classification and clustering and outperform related state-of-the-art methods.
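The triplet-based embedding can be illustrated with a minimal sketch, assuming a linear map $x \mapsto Lx$ trained by plain gradient descent on the standard triplet hinge loss $\max(0,\, m + \|L(a-p)\|^2 - \|L(a-n)\|^2)$. The paper's acceleration-based features and exact loss are not given here, so the toy feature vectors, function names, and hyperparameters below are hypothetical. In the toy data, feature 0 encodes the texture class and feature 1 is large nuisance variation, so raw Euclidean distance ranks every triplet the wrong way.

```python
import numpy as np


def triplet_loss(L, X, triplets, margin=1.0):
    """Mean triplet hinge loss and its gradient w.r.t. the linear map L."""
    total = 0.0
    grad = np.zeros_like(L)
    for a, p, n in triplets:
        dp, dn = X[a] - X[p], X[a] - X[n]  # anchor-positive / anchor-negative
        Ldp, Ldn = L @ dp, L @ dn
        viol = margin + Ldp @ Ldp - Ldn @ Ldn
        if viol > 0:  # only violated triplets contribute
            total += viol
            # d/dL ||L d||^2 = 2 (L d) d^T
            grad += 2 * (np.outer(Ldp, dp) - np.outer(Ldn, dn))
    return total / len(triplets), grad / len(triplets)


def learn_embedding(X, triplets, out_dim, lr=0.01, steps=500, margin=1.0, seed=0):
    """Gradient descent on L, starting from a small random projection."""
    rng = np.random.default_rng(seed)
    L = 0.1 * rng.standard_normal((out_dim, X.shape[1]))
    for _ in range(steps):
        _, g = triplet_loss(L, X, triplets, margin)
        L -= lr * g
    return L


# Toy data: rows 0-1 are one texture, rows 2-3 another.
X = np.array([[0.0, 0.0], [0.0, 3.0], [1.0, 0.0], [1.0, 3.0]])
triplets = [(0, 1, 2), (1, 0, 3), (2, 3, 0), (3, 2, 1)]
print(triplet_loss(np.eye(2), X, triplets)[0])  # 9.0: Euclidean violates every triplet
L = learn_embedding(X, triplets, out_dim=2)
print(triplet_loss(L, X, triplets)[0])
```

Training scales up the direction that separates textures and shrinks the nuisance direction, which is the kind of reshaped space the abstract describes; a nonlinear embedding network would replace `L` in a more realistic setting.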