Boosted Semantic Embedding based Discriminative Feature Generation for Texture Analysis

Oct 10, 2020

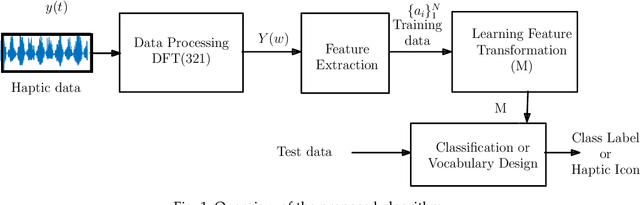

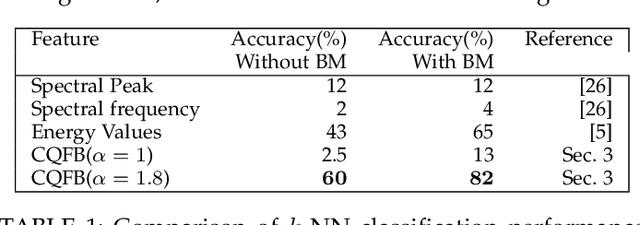

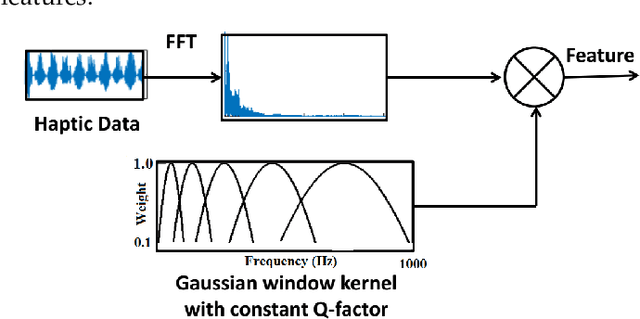

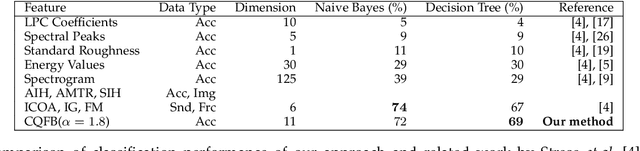

Learning discriminative features is crucial for various robotic applications such as object detection and classification. In this paper, we present a general framework for analyzing the discriminative properties of haptic signals. Our focus is on two crucial components of a robotic perception system: discriminative feature extraction, and metric-based feature transformation that enhances the separability of haptic signals in the projected space. We propose a set of hand-crafted haptic features (generated only from acceleration data) that enables discrimination of real-world textures. Since the Euclidean space does not reflect the underlying pattern in the data, we propose to learn an appropriate transformation function that projects the features into a new space, and we apply different pattern recognition algorithms there for texture classification and discrimination tasks. Unlike other existing methods, we use a triplet-based method for improved discrimination in the embedded space. We further demonstrate how to build a haptic vocabulary by selecting a compact set of the most distinct and representative signals in the embedded space. The experimental results show that the proposed features, augmented with the learned embedding, improve the performance of semantic discrimination tasks such as classification and clustering and outperform the related state of the art.
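
To make the triplet-based idea concrete, the following is a minimal sketch of a hinge-style triplet loss evaluated under a linear transformation. The abstract does not specify the form of the transformation, the distance, or the margin, so the linear map `W`, the squared Euclidean distance, the `margin` value, and the function name `triplet_loss` are all illustrative assumptions rather than the authors' method.

```python
import numpy as np

def triplet_loss(W, anchor, positive, negative, margin=1.0):
    """Mean hinge triplet loss under the (assumed) linear map x -> W @ x.

    anchor, positive, negative: arrays of shape (n_triplets, n_features).
    Each positive shares the anchor's texture class; each negative does not.
    """
    # Project all three groups of feature vectors into the embedded space.
    za, zp, zn = anchor @ W.T, positive @ W.T, negative @ W.T
    # Squared Euclidean distances in the embedded space.
    d_pos = np.sum((za - zp) ** 2, axis=1)
    d_neg = np.sum((za - zn) ** 2, axis=1)
    # Penalize triplets where the negative is not at least `margin` farther
    # from the anchor than the positive is.
    return float(np.mean(np.maximum(0.0, d_pos - d_neg + margin)))

# Toy triplet: in the raw space the positive and negative are equally far
# from the anchor, so the margin is violated.
a = np.array([[0.0, 0.0]])
p = np.array([[1.0, 0.0]])
n = np.array([[0.0, 1.0]])

loss_raw = triplet_loss(np.eye(2), a, p, n)
# Stretching the second axis pushes the negative away from the anchor.
loss_scaled = triplet_loss(np.diag([1.0, 2.0]), a, p, n)
```

In this toy case the identity map leaves the triplet violating the margin, while a map that stretches the second axis satisfies it. Learning the transformation would mean minimizing this loss over `W` across many triplets, for example by gradient descent.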
