Umang Goenka

Improved Vehicle Sub-type Classification for Acoustic Traffic Monitoring

Feb 06, 2023

Abstract: The detection and classification of vehicles on the road is a crucial task for traffic monitoring. Computer Vision (CV) algorithms usually dominate vehicle classification, but CV methods can suffer in poor lighting conditions and require more computational power. Installing cameras in sensitive and secure areas also raises privacy concerns. In contrast, acoustic traffic monitoring is cost-effective and can provide greater accuracy, particularly in low lighting conditions and in places where cameras cannot be installed. In this paper, we consider the task of acoustic vehicle sub-type classification, where we classify acoustic signals into 4 classes: car, truck, bike, and no vehicle. We experimented with Mel spectrograms, MFCC, and GFCC as features and performed data pre-processing to train a simple, well-optimized CNN that performs well at the task. When used with MFCC features and careful data pre-processing, our proposed methodology improves upon the established state-of-the-art baseline on the IDMT Traffic dataset with an accuracy of 98.95%.
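Mel spectrograms and MFCCs both rest on the mel frequency scale, which spaces filters densely at low frequencies and sparsely at high ones. The abstract does not state the extraction settings, so the following is only a minimal sketch of that spacing, assuming the commonly used HTK-style mel formula; the function names, the filter count, and the frequency range are illustrative choices, not the paper's configuration.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """Convert a frequency in Hz to mels (HTK-style formula, an assumption here)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(n_filters: int, f_min_hz: float, f_max_hz: float) -> list:
    """Centre frequencies (Hz) of n_filters filters spaced evenly on the mel scale."""
    m_min = hz_to_mel(f_min_hz)
    m_max = hz_to_mel(f_max_hz)
    # n_filters centres need n_filters + 1 equal steps between the two band edges.
    step = (m_max - m_min) / (n_filters + 1)
    return [mel_to_hz(m_min + step * (i + 1)) for i in range(n_filters)]


if __name__ == "__main__":
    # Illustrative values only: 10 filters between 0 Hz and 8 kHz.
    for centre in mel_center_frequencies(10, 0.0, 8000.0):
        print(round(centre, 1))
```

In practice a library routine would compute the full filterbank, log energies, and cosine transform; the sketch only shows why low-frequency content receives finer resolution.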

Visual Transformer for Soil Classification

Sep 07, 2022

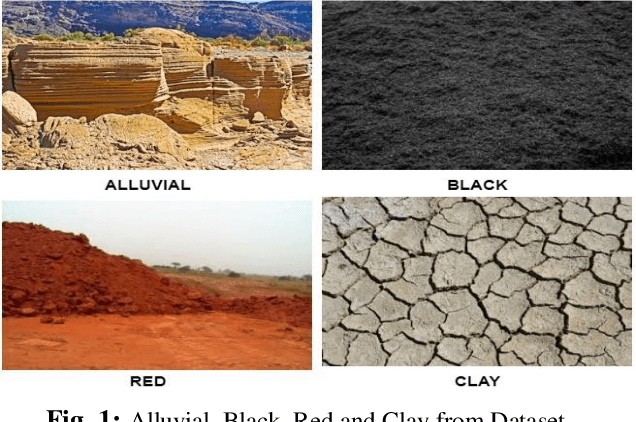

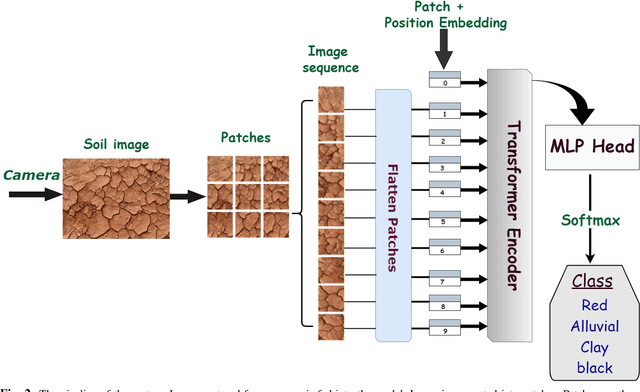

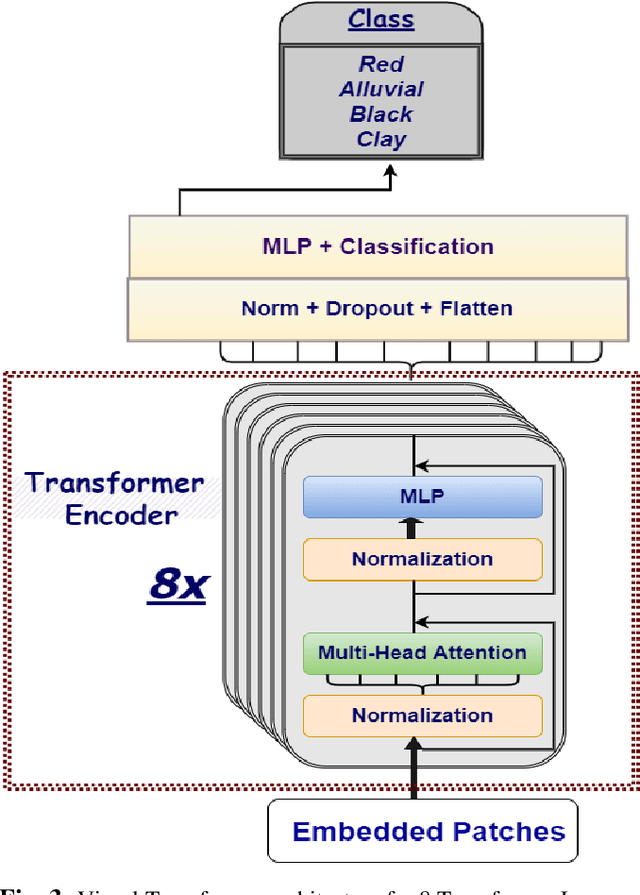

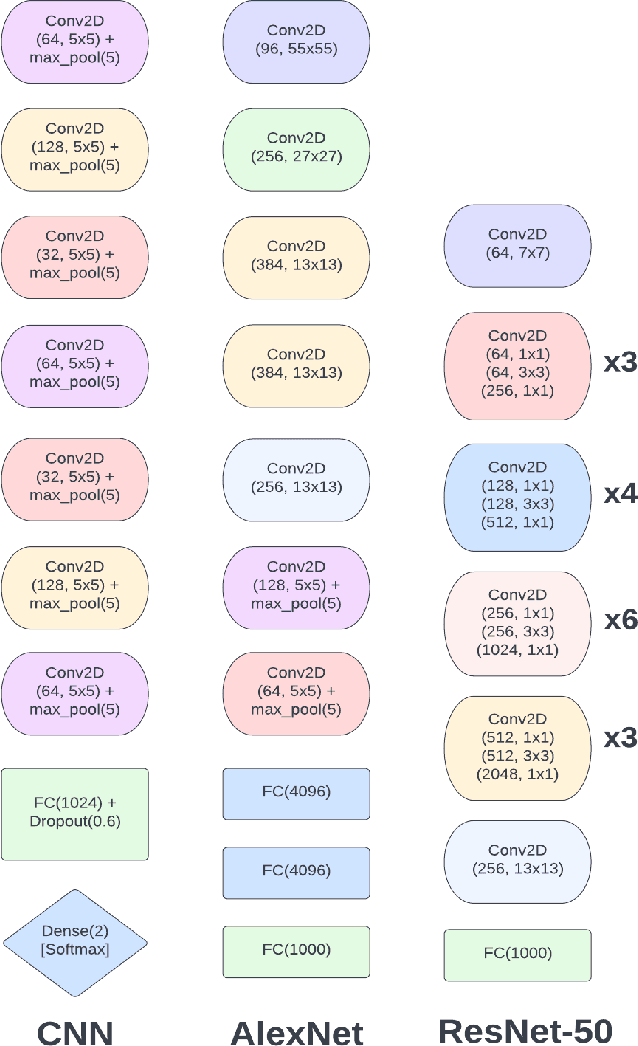

Abstract: Our food security is built on the foundation of soil. Farmers would be unable to provide us with fiber, food, and fuel if soils were not healthy. Accurately predicting the type of soil helps in planning its usage and thus increases productivity. This research employs state-of-the-art Visual Transformers and compares their performance with other models such as SVM, AlexNet, ResNet, and CNN. Furthermore, this study compares different Visual Transformer architectures. For the classification of soil type, the dataset consists of samples of 4 soil types: alluvial, red, black, and clay. The Visual Transformer model outperforms the other models in both train and test accuracy, attaining 98.13% on training and 93.62% on testing. The performance of the Visual Transformer exceeds that of the other models by at least 2%. Hence, the novel Visual Transformers can be used for Computer Vision tasks including soil classification.

Threat Detection In Self-Driving Vehicles Using Computer Vision

Sep 06, 2022

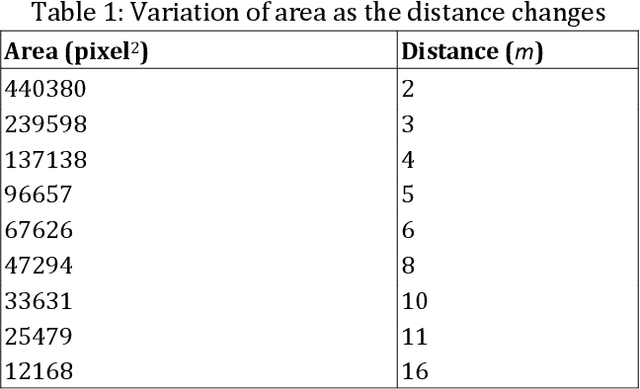

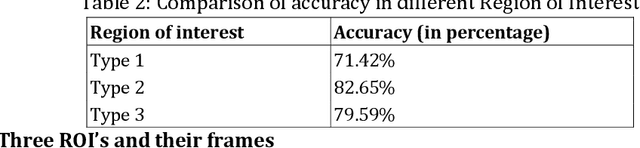

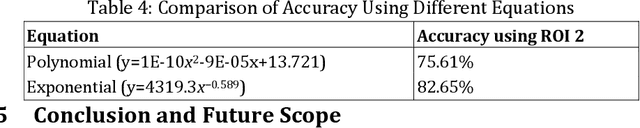

Abstract: On-road obstacle detection is an important field of research that falls within the scope of intelligent transportation infrastructure systems. Vision-based approaches offer an accurate and cost-effective solution for such systems. In this research paper, we propose a threat detection mechanism for autonomous self-driving cars that uses dashcam videos to detect the presence of any unwanted obstacle on the road within the vehicle's visual range. This information can help the vehicle's program navigate its route safely. There are four major components, namely, YOLO to identify the objects, an advanced lane detection algorithm, a multi-regression model to measure the distance of the object from the camera, and the two-second rule for measuring safety and limiting speed. In addition, we have used the Car Crash Dataset (CCD) for calculating the accuracy of the model. The YOLO algorithm gives an accuracy of around 93%. The final accuracy of our proposed Threat Detection Model (TDM) is 82.65%.
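The two-second rule mentioned in the abstract can be illustrated with simple arithmetic: an object is too close if the vehicle would reach it in under two seconds at its current speed. The sketch below is an assumption-laden illustration, not the paper's implementation; the function names are hypothetical, the distance is taken as already estimated (in the paper, by the regression model), and the object is treated as stationary.

```python
TWO_SECOND_GAP = 2.0  # seconds; the rule-of-thumb following gap


def time_gap_seconds(distance_m: float, speed_mps: float) -> float:
    """Time the vehicle needs to cover distance_m at its current speed."""
    if speed_mps <= 0:
        # A stopped vehicle never reaches the object.
        return float("inf")
    return distance_m / speed_mps


def is_threat(distance_m: float, speed_mps: float, gap_s: float = TWO_SECOND_GAP) -> bool:
    """Flag the object when the time gap falls below the required gap."""
    return time_gap_seconds(distance_m, speed_mps) < gap_s


def limiting_speed_mps(distance_m: float, gap_s: float = TWO_SECOND_GAP) -> float:
    """Highest speed that still keeps at least gap_s seconds to the object."""
    return distance_m / gap_s


if __name__ == "__main__":
    # Hypothetical reading: object 30 m ahead, vehicle travelling at 20 m/s.
    print(is_threat(30.0, 20.0))
    print(limiting_speed_mps(30.0))
```

With these example numbers the gap is 1.5 seconds, so the object is flagged and the speed that restores a two-second gap is 15 m/s.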
