Visual Transformer for Soil Classification

Sep 07, 2022

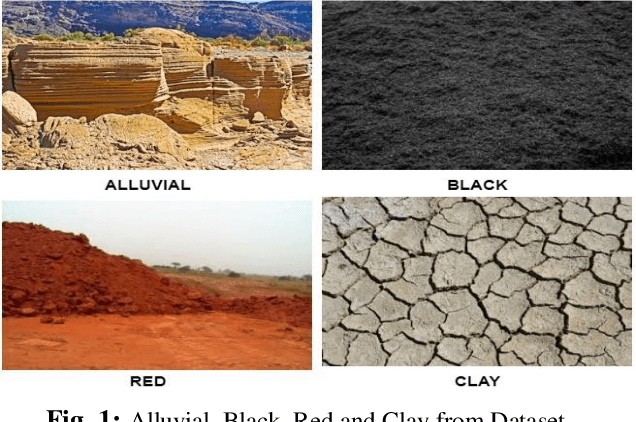

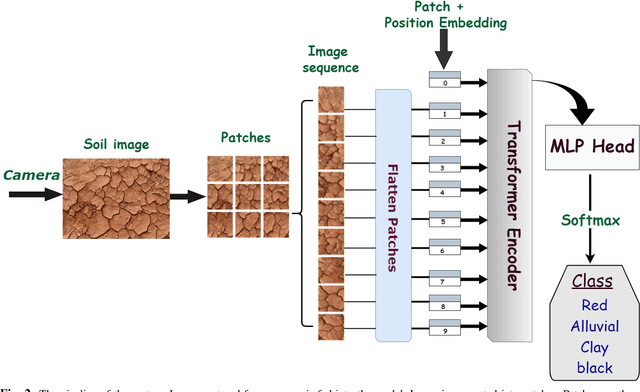

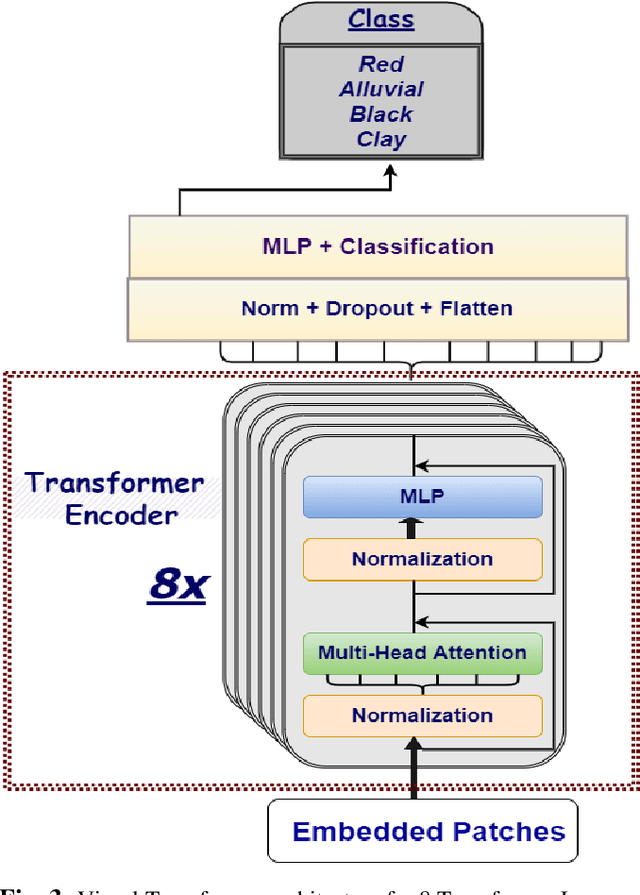

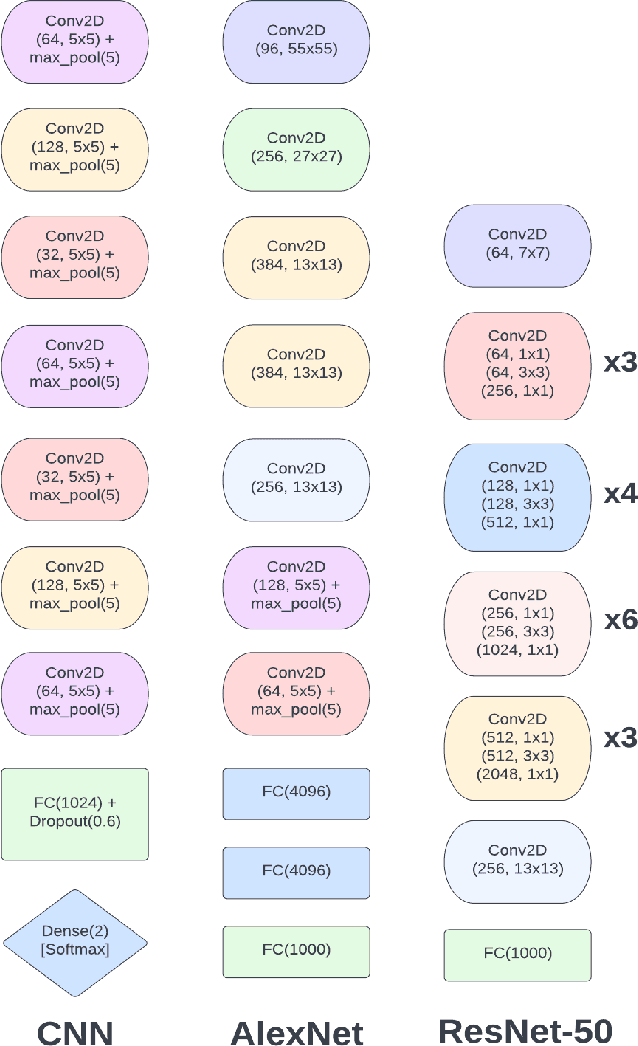

Soil is the foundation of our food security. Without healthy soil, farmers could not supply us with food, fiber, and fuel. Accurately predicting soil type helps in planning how the soil is used and thus in increasing productivity. This research employs state-of-the-art Visual Transformers and compares their performance with that of other models: SVM, AlexNet, ResNet, and CNN. The study also compares different Visual Transformer architectures. The soil classification dataset consists of samples of four soil types: alluvial, red, black, and clay. The Visual Transformer model outperforms the other models in both training and test accuracy, attaining 98.13% on training and 93.62% on testing. Its performance exceeds that of the other models by at least 2%. Hence, Visual Transformers can be used for computer vision tasks, including soil classification.
