Spring Berman

Ant-inspired Walling Strategies for Scalable Swarm Separation: Reinforcement Learning Approaches Based on Finite State Machines

Oct 26, 2025

Abstract:In natural systems, emergent structures often arise to balance competing demands. Army ants, for example, form temporary "walls" that prevent interference between foraging trails. Inspired by this behavior, we developed two decentralized controllers for heterogeneous robotic swarms to maintain spatial separation while executing concurrent tasks. The first is a finite-state machine (FSM)-based controller that uses encounter-triggered transitions to create rigid, stable walls. The second integrates FSM states with a Deep Q-Network (DQN), dynamically optimizing separation through emergent "demilitarized zones." In simulation, both controllers reduce mixing between subgroups, with the DQN-enhanced controller improving adaptability and reducing mixing by 40-50% while achieving faster convergence.
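As a rough illustration of what an encounter-triggered FSM transition could look like, here is a minimal Python sketch. It is not the controller from the paper: the state names (`TASK`, `WALL`), the `WallingFSM` class, the `encounter_threshold` parameter, and the rule that a robot joins the wall after several consecutive encounters with another subgroup are all assumptions made for illustration.

```python
from enum import Enum, auto


class State(Enum):
    TASK = auto()  # robot is executing its subgroup's task (hypothetical state)
    WALL = auto()  # robot has stopped and become part of a wall (hypothetical state)


class WallingFSM:
    """Toy per-robot FSM; every name and rule here is an illustrative assumption."""

    def __init__(self, group, encounter_threshold=3):
        self.group = group
        self.encounter_threshold = encounter_threshold
        self.encounters = 0
        self.state = State.TASK

    def step(self, neighbor_groups):
        """Update the state from the group ids of robots sensed this time step."""
        if self.state is State.TASK:
            if any(g != self.group for g in neighbor_groups):
                # Encounter with a robot from another subgroup.
                self.encounters += 1
            else:
                # No foreign robot nearby: reset the consecutive-encounter count.
                self.encounters = 0
            if self.encounters >= self.encounter_threshold:
                self.state = State.WALL
        # In this sketch WALL is absorbing, which mimics a rigid, stable wall.
        return self.state
```

For example, a robot in group `"A"` with `encounter_threshold=2` stays in `TASK` after one sensed `"B"` robot and switches to `WALL` after a second consecutive one. A learned policy such as a DQN could, in principle, replace the fixed threshold rule, but how the paper combines the two is not specified in the abstract.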

A Survey on Small-Scale Testbeds for Connected and Automated Vehicles and Robot Swarms

Aug 26, 2024

Abstract:Connected and automated vehicles and robot swarms hold transformative potential for enhancing safety, efficiency, and sustainability in the transportation and manufacturing sectors. Extensive testing and validation of these technologies is crucial for their deployment in the real world. While simulations are essential for initial testing, they often have limitations in capturing the complex dynamics of real-world interactions. This limitation underscores the importance of small-scale testbeds. These testbeds provide a realistic, cost-effective, and controlled environment for testing and validating algorithms, acting as an essential intermediary between simulation and full-scale experiments. This work serves to facilitate researchers' efforts in identifying existing small-scale testbeds suitable for their experiments and provide insights for those who want to build their own. In addition, it delivers a comprehensive survey of the current landscape of these testbeds. We derive 62 characteristics of testbeds based on the well-known sense-plan-act paradigm and offer an online table comparing 22 small-scale testbeds based on these characteristics. The online table is hosted on our designated public webpage www.cpm-remote.de/testbeds, and we invite testbed creators and developers to contribute to it. We closely examine nine testbeds in this paper, demonstrating how the derived characteristics can be used to present testbeds. Furthermore, we discuss three ongoing challenges concerning small-scale testbeds that we identified, i.e., small-scale to full-scale transition, sustainability, and power and resource management.

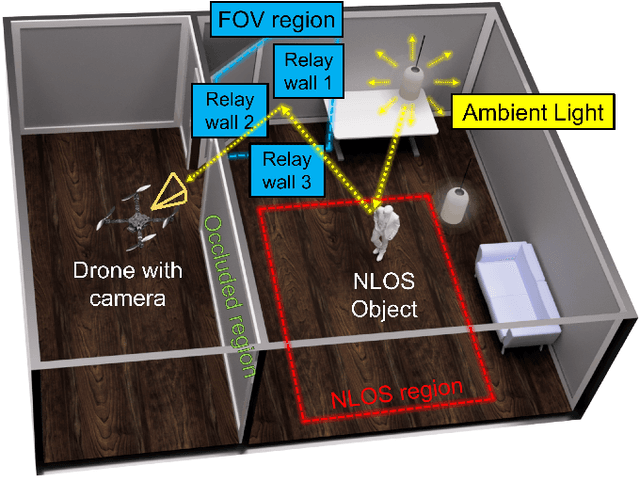

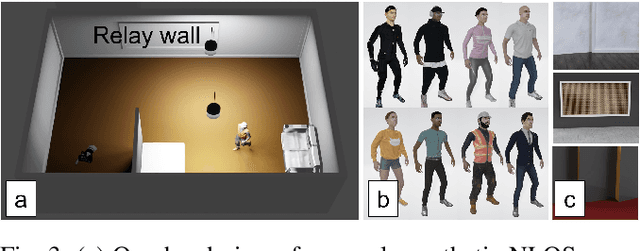

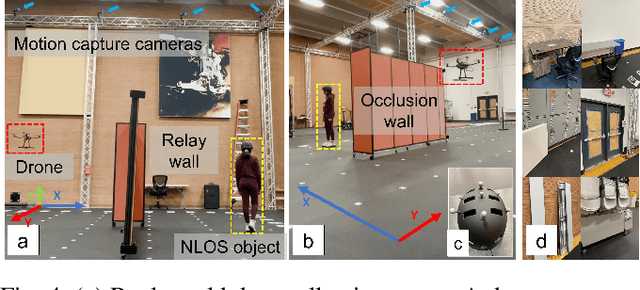

PathFinder: Attention-Driven Dynamic Non-Line-of-Sight Tracking with a Mobile Robot

Apr 07, 2024

Abstract:The study of non-line-of-sight (NLOS) imaging is growing due to its many potential applications, including rescue operations and pedestrian detection by self-driving cars. However, implementing NLOS imaging on a moving camera remains an open area of research. Existing NLOS imaging methods rely on time-resolved detectors and laser configurations that require precise optical alignment, making it difficult to deploy them in dynamic environments. This work proposes a data-driven approach to NLOS imaging, PathFinder, that can be used with a standard RGB camera mounted on a small, power-constrained mobile robot, such as an aerial drone. Our experimental pipeline is designed to accurately estimate the 2D trajectory of a person who moves in a Manhattan-world environment while remaining hidden from the camera's field-of-view. We introduce a novel approach to process a sequence of dynamic successive frames in a line-of-sight (LOS) video using an attention-based neural network that performs inference in real-time. The method also includes a preprocessing selection metric that analyzes images from a moving camera which contain multiple vertical planar surfaces, such as walls and building facades, and extracts planes that return maximum NLOS information. We validate the approach on in-the-wild scenes using a drone for video capture, thus demonstrating low-cost NLOS imaging in dynamic capture environments.

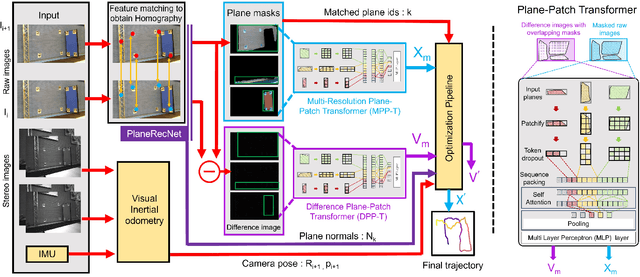

Stereo Visual Odometry with Deep Learning-Based Point and Line Feature Matching using an Attention Graph Neural Network

Aug 02, 2023

Abstract:Robust feature matching forms the backbone for most Visual Simultaneous Localization and Mapping (vSLAM), visual odometry, 3D reconstruction, and Structure from Motion (SfM) algorithms. However, recovering feature matches from texture-poor scenes is a major challenge and still remains an open area of research. In this paper, we present a Stereo Visual Odometry (StereoVO) technique based on point and line features which uses a novel feature-matching mechanism based on an Attention Graph Neural Network that is designed to perform well even under adverse weather conditions such as fog, haze, rain, and snow, and dynamic lighting conditions such as nighttime illumination and glare scenarios. We perform experiments on multiple real and synthetic datasets to validate the ability of our method to perform StereoVO under low visibility weather and lighting conditions through robust point and line matches. The results demonstrate that our method achieves more line feature matches than state-of-the-art line matching algorithms, which when complemented with point feature matches perform consistently well in adverse weather and dynamic lighting conditions.

Single-Shot Domain Adaptation via Target-Aware Generative Augmentation

Oct 29, 2022

Abstract:The problem of adapting models from a source domain using data from any target domain of interest has gained prominence, thanks to the brittle generalization in deep neural networks. While several test-time adaptation techniques have emerged, they typically rely on synthetic data augmentations in cases of limited target data availability. In this paper, we consider the challenging setting of single-shot adaptation and explore the design of augmentation strategies. We argue that augmentations utilized by existing methods are insufficient to handle large distribution shifts, and hence propose a new approach SiSTA (Single-Shot Target Augmentations), which first fine-tunes a generative model from the source domain using a single-shot target, and then employs novel sampling strategies for curating synthetic target data. Using experiments with a state-of-the-art domain adaptation method, we find that SiSTA produces improvements as high as 20% over existing baselines under challenging shifts in face attribute detection, and that it performs competitively to oracle models obtained by training on a larger target dataset.

Configuration Tracking Control of a Multi-Segment Soft Robotic Arm Using a Cosserat Rod Model

Oct 01, 2022

Abstract:Controlling soft continuum robotic arms is challenging due to their hyper-redundancy and dexterity. In this paper we demonstrate, for the first time, closed-loop control of the configuration space variables of a soft robotic arm, composed of independently controllable segments, using a Cosserat rod model of the robot and the distributed sensing and actuation capabilities of the segments. Our controller solves the inverse dynamic problem by simulating the Cosserat rod model in MATLAB using a computationally efficient numerical solution scheme, and it applies the computed control output to the actual robot in real time. The position and orientation of the tip of each segment are measured in real time, while the remaining unknown variables that are needed to solve the inverse dynamics are estimated simultaneously in the simulation. We implement the controller on a multi-segment silicone robotic arm with pneumatic actuation, using a motion capture system to measure the segments' positions and orientations. The controller is used to reshape the arm into configurations that are achieved through different combinations of bending and extension deformations in 3D space. The resulting tracking performance indicates the effectiveness of the controller and the accuracy of the simulated Cosserat rod model that is used to estimate the unmeasured variables.

CHARTOPOLIS: A Small-Scale Labor-art-ory for Research and Reflection on Autonomous Vehicles, Human-Robot Interaction, and Sociotechnical Imaginaries

Oct 01, 2022

Abstract:CHARTOPOLIS is a multi-faceted sociotechnical testbed meant to aid in building connections among engineers, psychologists, anthropologists, ethicists, and artists. Superficially, it is an urban autonomous-vehicle testbed that includes both a physical environment for small-scale robotic vehicles as well as a high-fidelity virtual replica that provides extra flexibility by way of computer simulation. However, both environments have been developed to allow for participatory simulation with human drivers as well. Each physical vehicle can be remotely operated by human drivers that have a driver-seat point of view that immerses them within the small-scale testbed, and those same drivers can also pilot high-fidelity models of those vehicles in a virtual replica of the environment. Juxtaposing human driving performance across these two contexts will help identify to what extent human driving behaviors are sensorimotor responses or involve psychological engagement with a system that has physical, not virtual, side effects and consequences. Furthermore, through collaboration with artists, we have designed the physical testbed to make tangible the reality that technological advancement causes the history of a city to fork into multiple, parallel timelines that take place within populations whose increasing isolation effectively creates multiple independent cities in one. Ultimately, CHARTOPOLIS is meant to challenge engineers to take a more holistic view when designing autonomous systems, while also enabling them to gather novel data that will assist them in making these systems more trustworthy.

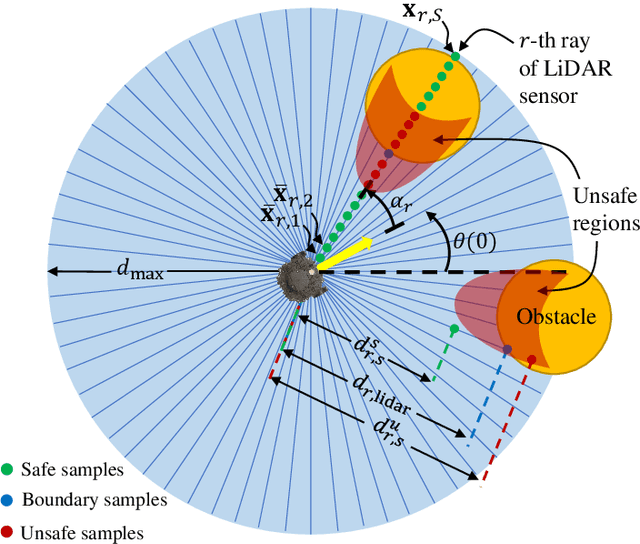

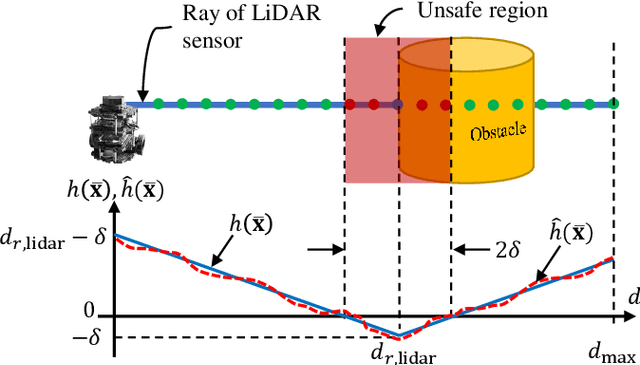

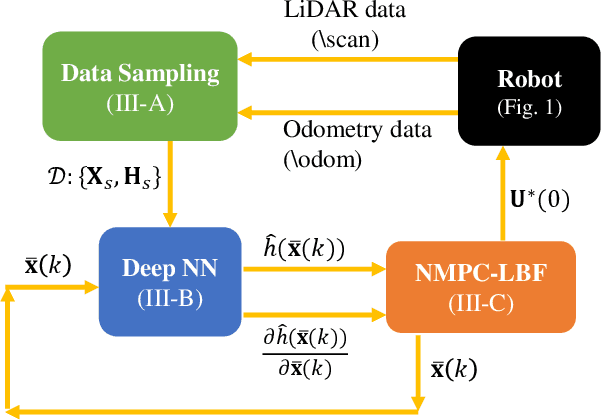

NMPC-LBF: Nonlinear MPC with Learned Barrier Function for Decentralized Safe Navigation of Multiple Robots in Unknown Environments

Aug 16, 2022

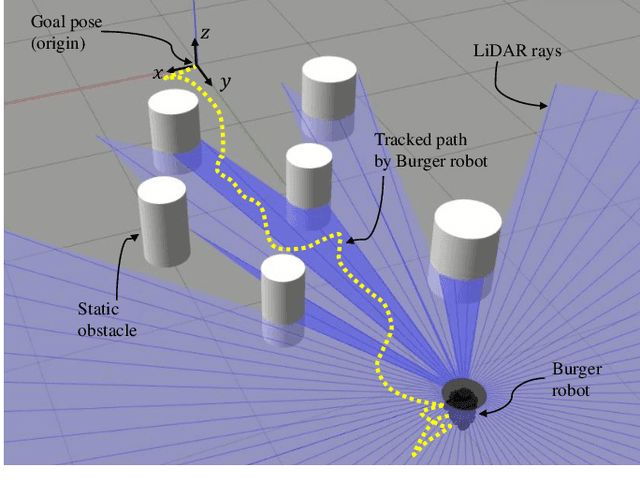

Abstract:In this paper, we present a decentralized control approach based on a Nonlinear Model Predictive Control (NMPC) method that employs barrier certificates for safe navigation of multiple nonholonomic wheeled mobile robots in unknown environments with static and/or dynamic obstacles. This method incorporates a Learned Barrier Function (LBF) into the NMPC design in order to guarantee safe robot navigation, i.e., prevent robot collisions with other robots and the obstacles. We refer to our proposed control approach as NMPC-LBF. Since each robot does not have a priori knowledge about the obstacles and other robots, we use a Deep Neural Network (DeepNN) running in real-time on each robot to learn the Barrier Function (BF) only from the robot's LiDAR and odometry measurements. The DeepNN is trained to learn the BF that separates safe and unsafe regions. We implemented our proposed method on simulated and actual Turtlebot3 Burger robot(s) in different scenarios. The implementation results show the effectiveness of the NMPC-LBF method at ensuring safe navigation of the robots.

Probabilistic Consensus on Feature Distribution for Multi-robot Systems with Markovian Exploration Dynamics

Feb 07, 2022

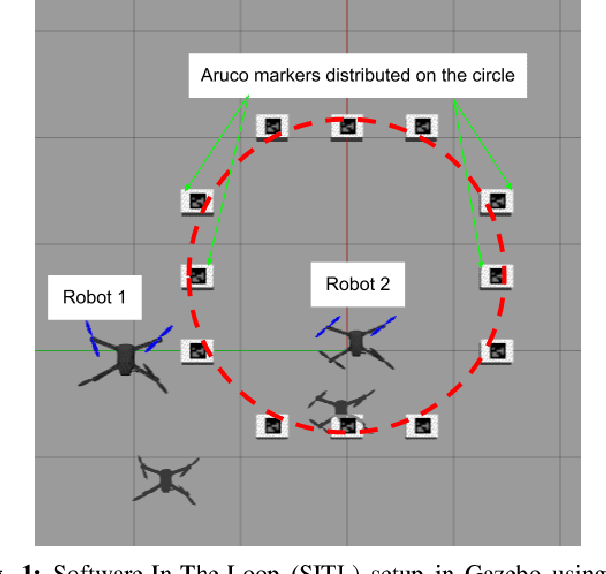

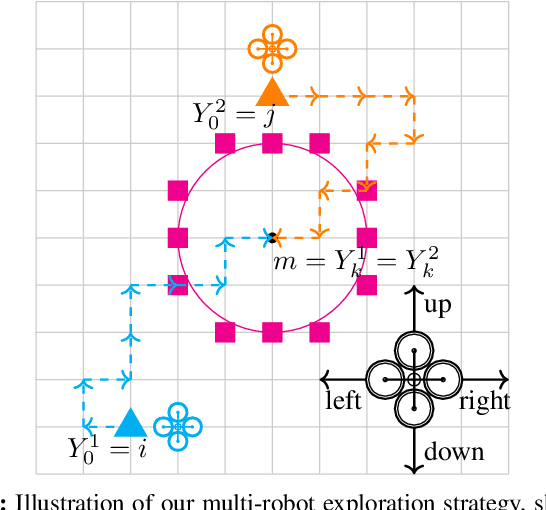

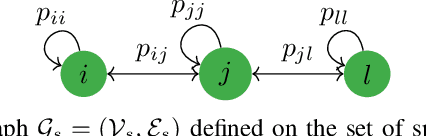

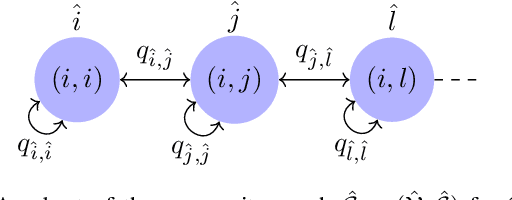

Abstract:In this paper, we present a consensus-based decentralized multi-robot approach to reconstruct a discrete distribution of features, modeled as an occupancy grid map, that represent information contained in a bounded planar environment, such as visual cues used for navigation or semantic labels associated with object detection. The robots explore the environment according to a random walk modeled by a discrete-time discrete-state (DTDS) Markov chain and estimate the feature distribution from their own measurements and the estimates communicated by neighboring robots, using a distributed Chernoff fusion protocol. We prove that under this decentralized fusion protocol, each robot's feature distribution converges to the actual distribution in an almost sure sense. We verify this result in numerical simulations that show that the Hellinger distance between the estimated and actual feature distributions converges to zero over time for each robot. We also validate our strategy through Software-In-The-Loop (SITL) simulations of quadrotors that search a bounded square grid for a set of visual features distributed on a discretized circle.
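The two quantities named in this abstract have standard textbook forms for discrete distributions, sketched below in Python. This is a generic illustration, not the paper's protocol: the function names, the pairwise (two-distribution) fusion, and the fixed `weight` parameter are assumptions, and the actual distributed scheme may weight and combine neighbors differently.

```python
import math


def hellinger_distance(p, q):
    """Hellinger distance between two discrete distributions of equal length.

    Returns 0 for identical distributions and 1 for disjoint supports.
    """
    return math.sqrt(
        0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))
    )


def chernoff_fuse(p, q, weight=0.5):
    """Chernoff fusion of two discrete distributions.

    Computes the normalized weighted geometric mean p^w * q^(1-w).
    The pairwise form and the fixed weight are illustrative assumptions.
    """
    unnormalized = [a ** weight * b ** (1.0 - weight) for a, b in zip(p, q)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("distributions have disjoint supports; fusion undefined")
    return [u / total for u in unnormalized]
```

In a simulation of this kind, one would typically track `hellinger_distance(estimate, truth)` for each robot over time and check that it shrinks toward zero as estimates are fused.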

Towards Decentralized Human-Swarm Interaction by Means of Sequential Hand Gesture Recognition

Feb 04, 2021

Abstract:We present preliminary work on a novel method for Human-Swarm Interaction (HSI) that can be used to change the macroscopic behavior of a swarm of robots with decentralized sensing and control. By integrating a small yet capable hand gesture recognition convolutional neural network (CNN) with the next-generation Robot Operating System (ROS 2), which enables decentralized implementation of robot software for multi-robot applications, we demonstrate the feasibility of programming a swarm of robots to recognize and respond to a sequence of hand gestures that correspond to different types of swarm behaviors. We test our approach using a sequence of gestures that modifies the target inter-robot distance in a group of three Turtlebot3 Burger robots in order to prevent robot collisions with obstacles. The approach is validated in three different Gazebo simulation environments and in a physical testbed that reproduces one of the simulated environments.
