Sreenithy Chandran

PathFinder: Attention-Driven Dynamic Non-Line-of-Sight Tracking with a Mobile Robot

Apr 07, 2024

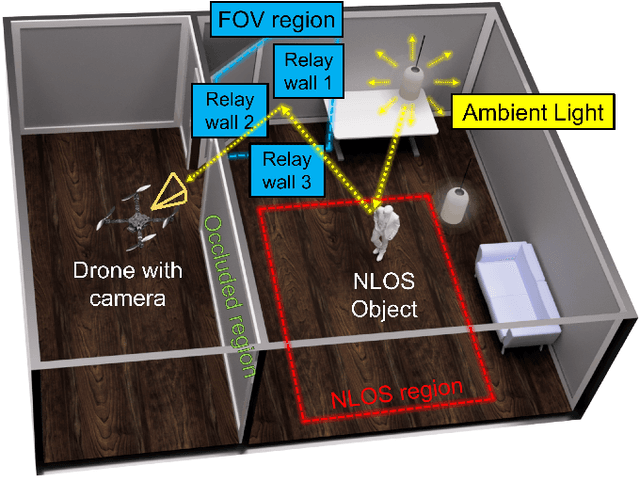

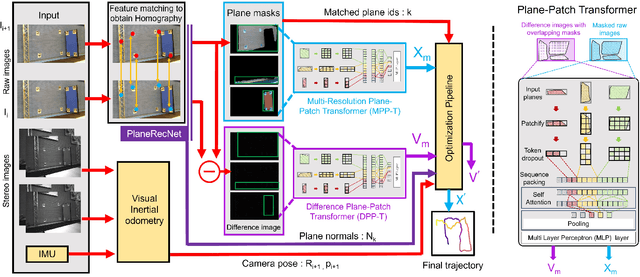

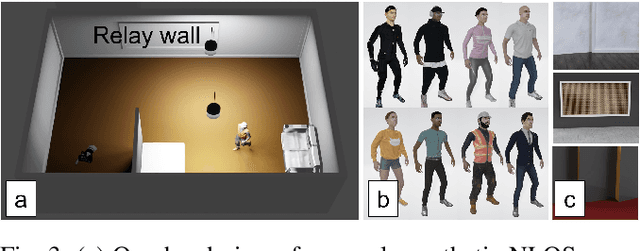

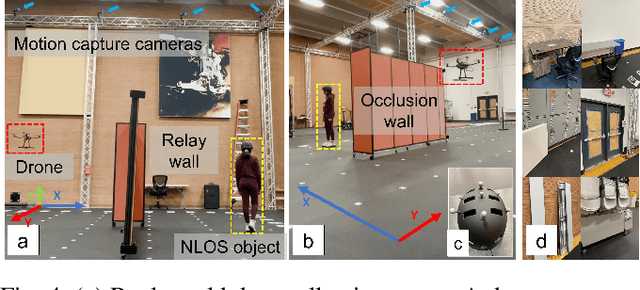

Abstract: The study of non-line-of-sight (NLOS) imaging is growing due to its many potential applications, including rescue operations and pedestrian detection by self-driving cars. However, implementing NLOS imaging on a moving camera remains an open area of research. Existing NLOS imaging methods rely on time-resolved detectors and laser configurations that require precise optical alignment, making it difficult to deploy them in dynamic environments. This work proposes a data-driven approach to NLOS imaging, PathFinder, that can be used with a standard RGB camera mounted on a small, power-constrained mobile robot, such as an aerial drone. Our experimental pipeline is designed to accurately estimate the 2D trajectory of a person who moves in a Manhattan-world environment while remaining hidden from the camera's field of view. We introduce a novel approach to process a sequence of dynamic successive frames in a line-of-sight (LOS) video using an attention-based neural network that performs inference in real time. The method also includes a preprocessing selection metric that analyzes images from a moving camera that contain multiple vertical planar surfaces, such as walls and building facades, and extracts the planes that return maximum NLOS information. We validate the approach on in-the-wild scenes using a drone for video capture, thus demonstrating low-cost NLOS imaging in dynamic capture environments.
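As a rough illustration of what attention over a sequence of video frames can look like, the following is a minimal NumPy sketch of scaled dot-product self-attention applied to per-frame feature vectors. It is not the PathFinder architecture, which the abstract does not specify; the function names, feature sizes, and random projection matrices are all assumptions chosen for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def self_attention(frames, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a frame sequence.

    frames: (T, D) array, one hypothetical feature vector per frame.
    w_q, w_k, w_v: (D, d) projection matrices (random here, learned in practice).
    Returns the (T, d) attended features and the (T, T) attention weights.
    """
    q = frames @ w_q
    k = frames @ w_k
    v = frames @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

# Hypothetical sizes: 8 frames, 16-dimensional features, 4-dimensional heads.
rng = np.random.default_rng(0)
T, D, d = 8, 16, 4
frames = rng.normal(size=(T, D))
w_q, w_k, w_v = (rng.normal(size=(D, d)) for _ in range(3))
out, weights = self_attention(frames, w_q, w_k, w_v)
```

Each row of `weights` describes how strongly one frame draws on every other frame in the sequence, which is the general mechanism that lets a model relate subtle changes across successive frames.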

Adaptive Lighting for Data-Driven Non-Line-of-Sight 3D Localization and Object Identification

May 28, 2019

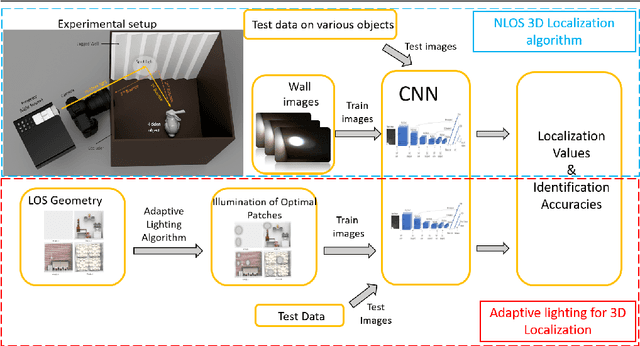

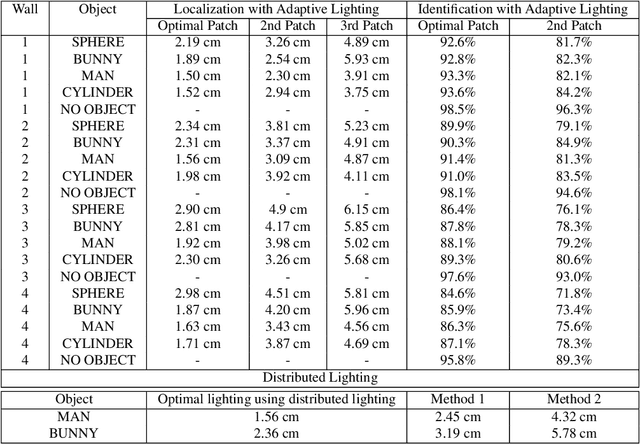

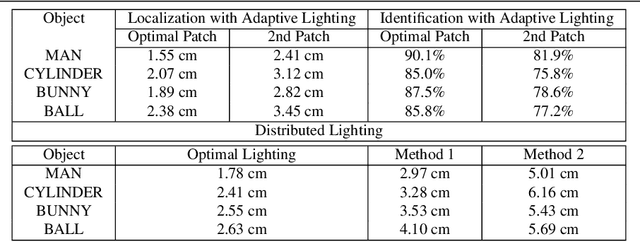

Abstract: Non-line-of-sight (NLOS) imaging of objects not visible to either the camera or illumination source is a challenging task with vital applications including surveillance and robotics. Recent NLOS reconstruction advances have been achieved using time-resolved measurements, which require expensive and specialized detectors and laser sources. In contrast, we propose a data-driven approach for NLOS 3D localization requiring only a conventional camera and projector. We achieve an average object identification accuracy of 79% for three classes of objects, and localization of the NLOS object's centroid with a mean-squared error (MSE) of 2.89 cm in the occluded region for real data taken from a hardware prototype. To generalize to line-of-sight (LOS) scenes with non-planar surfaces, we introduce an adaptive lighting algorithm. This algorithm, based on radiosity, identifies and illuminates the scene patches in the LOS that contribute most to the NLOS light paths, and can factor in system power constraints. We further improve our average NLOS object identification to 87.8% accuracy and localization to 1.94 cm MSE on a complex LOS scene using adaptive lighting for real data, demonstrating the advantage of combining the physics of light transport with active illumination for data-driven NLOS imaging.
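The idea of illuminating the patches that contribute most while respecting a power constraint can be sketched as a simple greedy selection. This is not the paper's radiosity-based algorithm: the per-patch contribution scores and power costs below are made-up inputs, and `select_patches` is a hypothetical helper that ranks patches by contribution per unit of power.

```python
def select_patches(contribution, cost, budget):
    """Greedily pick patches with the best contribution-to-cost ratio.

    contribution: hypothetical per-patch estimate of how much light the
                  patch sends along NLOS paths (higher is better).
    cost: per-patch illumination power; every entry must be positive.
    budget: total power available.
    Returns the chosen patch indices (in selection order) and the power spent.
    """
    order = sorted(
        range(len(contribution)),
        key=lambda i: contribution[i] / cost[i],
        reverse=True,
    )
    chosen, spent = [], 0.0
    for i in order:
        # Skip any patch that would exceed the remaining power budget.
        if spent + cost[i] <= budget:
            chosen.append(i)
            spent += cost[i]
    return chosen, spent

# Illustrative values only, not measurements from the paper.
contribution = [0.9, 0.1, 0.5, 0.4]
cost = [2.0, 1.0, 1.0, 2.0]
chosen, spent = select_patches(contribution, cost, budget=3.0)
print(chosen, spent)  # [2, 0] 3.0
```

A greedy ratio ranking is only a heuristic for this kind of budgeted selection, but it shows how a power constraint can change which patches are worth lighting.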
