Sidi Lu

Locas: Your Models are Principled Initializers of Locally-Supported Parametric Memories

Feb 04, 2026

Abstract: In this paper, we aim to bridge test-time-training with a new type of parametric memory that can be flexibly offloaded from or merged into model parameters. We present Locas, a Locally-Supported parametric memory that shares the design of FFN blocks in modern transformers, allowing it to be flexibly permanentized into the model parameters while supporting efficient continual learning. We discuss two major variants of Locas: one with a conventional two-layer MLP design that has a clearer theoretical guarantee; the other one shares the same GLU-FFN structure with SOTA LLMs, and can be easily attached to existing models for both parameter-efficient and computation-efficient continual learning. Crucially, we show that proper initialization of such low-rank sideway-FFN-style memories -- performed in a principled way by reusing model parameters, activations and/or gradients -- is essential for fast convergence, improved generalization, and catastrophic forgetting prevention. We validate the proposed memory mechanism on the PG-19 whole-book language modeling and LoCoMo long-context dialogue question answering tasks. With only 0.02% additional parameters in the lowest case, Locas-GLU is capable of storing the information from past context while maintaining a much smaller context window. In addition, we also test the model's general capability loss after memorizing the whole book with Locas, through comparative MMLU evaluation. Results show the promising ability of Locas to permanentize past context into parametric knowledge with minimized catastrophic forgetting of the model's existing internal knowledge.

Save the Good Prefix: Precise Error Penalization via Process-Supervised RL to Enhance LLM Reasoning

Jan 26, 2026

Abstract: Reinforcement learning (RL) has emerged as a powerful framework for improving the reasoning capabilities of large language models (LLMs). However, most existing RL approaches rely on sparse outcome rewards, which fail to credit correct intermediate steps in partially successful solutions. Process reward models (PRMs) offer fine-grained step-level supervision, but their scores are often noisy and difficult to evaluate. As a result, recent PRM benchmarks focus on a more objective capability: detecting the first incorrect step in a reasoning path. However, this evaluation target is misaligned with how PRMs are typically used in RL, where their step-wise scores are treated as raw rewards to maximize. To bridge this gap, we propose Verifiable Prefix Policy Optimization (VPPO), which uses PRMs only to localize the first error during RL. Given an incorrect rollout, VPPO partitions the trajectory into a verified correct prefix and an erroneous suffix based on the first error, rewarding the former while applying targeted penalties only after the detected mistake. This design yields stable, interpretable learning signals and improves credit assignment. Across multiple reasoning benchmarks, VPPO consistently outperforms sparse-reward RL and prior PRM-guided baselines on both Pass@1 and Pass@K.
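
The prefix/suffix split described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `prefix_suffix_rewards`, the reward magnitudes, and the choice to penalize the first erroneous step itself along with everything after it are all assumptions made for illustration. The first-error index would come from a PRM, which is not modeled here.

```python
from typing import List, Optional


def prefix_suffix_rewards(
    num_steps: int,
    first_error: Optional[int],
    prefix_reward: float = 1.0,    # hypothetical value
    suffix_penalty: float = -1.0,  # hypothetical value
) -> List[float]:
    """Assign one reward per reasoning step of a rollout.

    first_error is the 0-based index of the first incorrect step
    (assumed to be supplied by a PRM), or None if no error was found.
    """
    if first_error is None:
        # No detected error: every step counts as verified.
        return [prefix_reward] * num_steps
    if not 0 <= first_error < num_steps:
        raise ValueError("first_error must index a step of the rollout")
    # Steps before the first error form the verified prefix; the
    # erroneous step and everything after it form the suffix.
    return [
        prefix_reward if step < first_error else suffix_penalty
        for step in range(num_steps)
    ]


rewards = prefix_suffix_rewards(num_steps=5, first_error=2)
```

In this sketch a five-step rollout whose third step is wrong keeps credit for its first two steps, which is the credit-assignment behavior the abstract contrasts with a single sparse outcome reward.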

Can LLMs Guide Their Own Exploration? Gradient-Guided Reinforcement Learning for LLM Reasoning

Dec 17, 2025

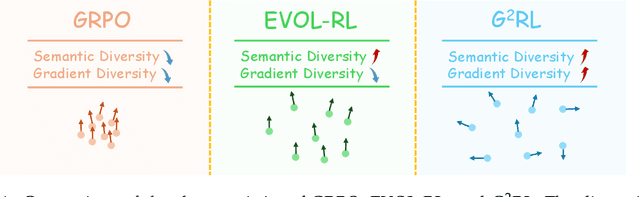

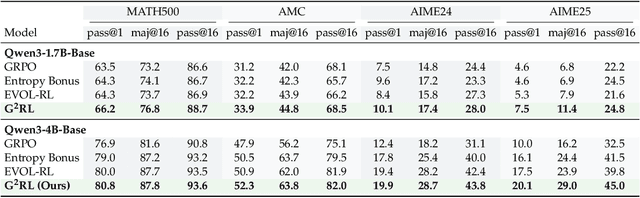

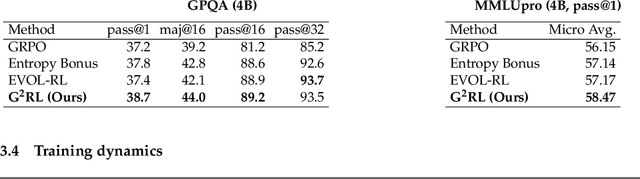

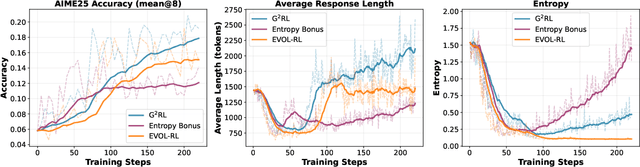

Abstract: Reinforcement learning has become essential for strengthening the reasoning abilities of large language models, yet current exploration mechanisms remain fundamentally misaligned with how these models actually learn. Entropy bonuses and external semantic comparators encourage surface-level variation but offer no guarantee that sampled trajectories differ in the update directions that shape optimization. We propose G2RL, a gradient-guided reinforcement learning framework in which exploration is driven not by external heuristics but by the model's own first-order update geometry. For each response, G2RL constructs a sequence-level feature from the model's final-layer sensitivity, obtainable at negligible cost from a standard forward pass, and measures how each trajectory would reshape the policy by comparing these features within a sampled group. Trajectories that introduce novel gradient directions receive a bounded multiplicative reward scaler, while redundant or off-manifold updates are deemphasized, yielding a self-referential exploration signal that is naturally aligned with PPO-style stability and KL control. Across math and general reasoning benchmarks (MATH500, AMC, AIME24, AIME25, GPQA, MMLU-Pro) on Qwen3 base 1.7B and 4B models, G2RL consistently improves pass@1, maj@16, and pass@k over entropy-based GRPO and external embedding methods. Analyzing the induced geometry, we find that G2RL expands exploration into substantially more orthogonal and often opposing gradient directions while maintaining semantic coherence, revealing that a policy's own update space provides a far more faithful and effective basis for guiding exploration in large language model reinforcement learning.
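
To make the idea of a bounded multiplicative reward scaler concrete, here is a rough sketch under strong assumptions. The abstract does not give the formula, so everything below is hypothetical: novelty is taken as one minus the mean cosine similarity to the other responses in the group, and the scaler is a clipped linear function of that novelty. The names `novelty_scalers`, `alpha`, `low`, and `high` are illustrative and do not come from the paper, and the per-response feature vectors are assumed to be given.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity, defined as 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def novelty_scalers(
    features: Sequence[Sequence[float]],
    alpha: float = 0.5,  # hypothetical sensitivity to novelty
    low: float = 0.5,    # hypothetical lower bound on the scaler
    high: float = 1.5,   # hypothetical upper bound on the scaler
) -> List[float]:
    """Return one multiplicative reward scaler per response in a group."""
    scalers = []
    for i, feat in enumerate(features):
        sims = [cosine(feat, other) for j, other in enumerate(features) if j != i]
        mean_sim = sum(sims) / len(sims) if sims else 0.0
        novelty = 1.0 - mean_sim  # 0 = redundant, 1 = orthogonal, 2 = opposing
        scaler = 1.0 + alpha * (novelty - 1.0)
        scalers.append(min(high, max(low, scaler)))  # keep the scaler bounded
    return scalers


# Two near-duplicate directions and one orthogonal direction.
group = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
scalers = novelty_scalers(group)
```

In this toy group the orthogonal response receives a larger scaler than the two redundant ones, and clipping keeps every scaler inside a fixed range, which is the qualitative behavior the abstract describes.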

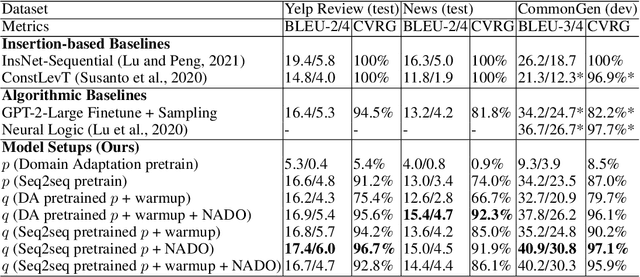

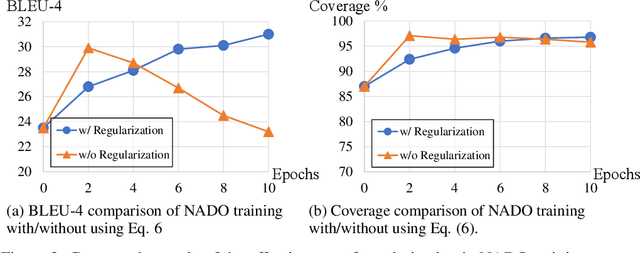

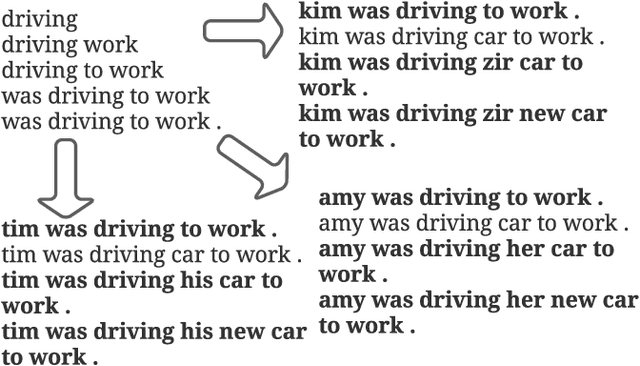

On Compositionality and Improved Training of NADO

Jun 20, 2023

Abstract: NeurAlly-Decomposed Oracle (NADO) is a powerful approach for controllable generation with large language models. Unlike finetuning or prompt tuning, it has the potential to avoid catastrophic forgetting of the large base model and achieve guaranteed convergence to an entropy-maximized closed-form solution without significantly limiting the model capacity. Despite its success, several challenges arise when applying NADO to more complex scenarios. First, the best practice of using NADO for the composition of multiple control signals is under-explored. Second, vanilla NADO suffers from gradient vanishing for low-probability control signals and is highly reliant on the forward-consistency regularization. In this paper, we study the aforementioned challenges when using NADO theoretically and empirically. We show we can achieve guaranteed compositional generalization of NADO with a certain practice, and propose a novel alternative parameterization of NADO to perfectly guarantee the forward-consistency. We evaluate the improved training of NADO, i.e., NADO++, on CommonGen. Results show that NADO++ improves the effectiveness of the algorithm in multiple aspects.

Open-Domain Text Evaluation via Meta Distribution Modeling

Jun 20, 2023

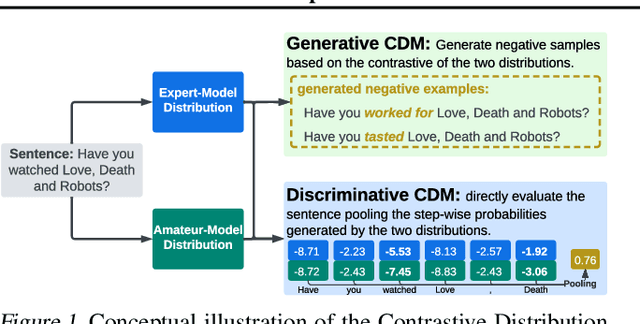

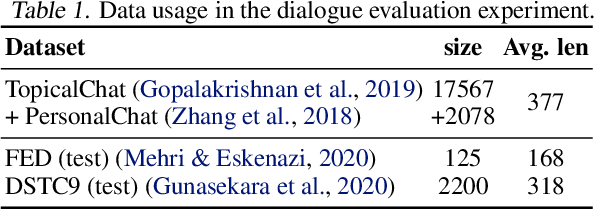

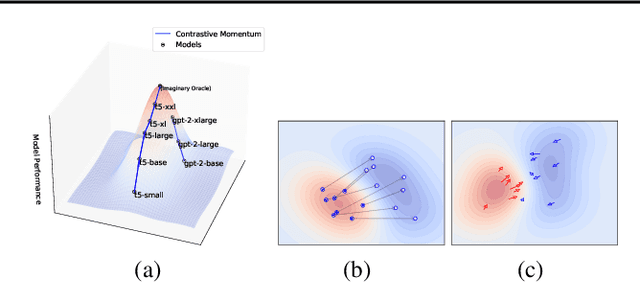

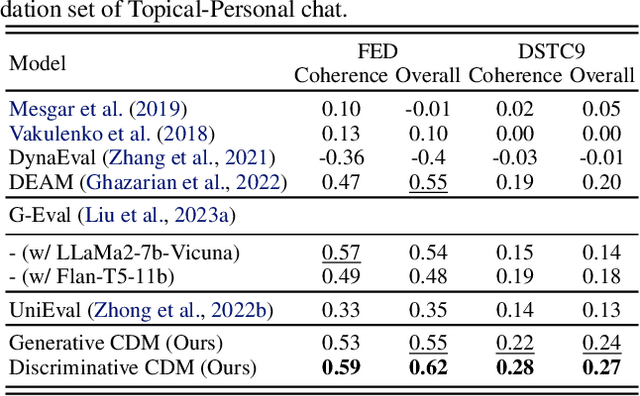

Abstract: Recent advances in open-domain text generation models powered by large pre-trained language models (LLMs) have achieved remarkable performance. However, evaluating and controlling these models for desired attributes remains a challenge, as traditional reference-based metrics such as BLEU, ROUGE, and METEOR are insufficient for open-ended generation tasks. Similarly, while trainable discriminator-based evaluation metrics show promise, obtaining high-quality training data is a non-trivial task. In this paper, we introduce a novel approach to evaluate open-domain generation - the Meta-Distribution Methods (MDM). Drawing on the correlation between the rising parameter counts and the improving performance of LLMs, MDM creates a mapping from the contrast of two probabilistic distributions -- one known to be superior to the other -- to quality measures, which can be viewed as a distribution of distributions, i.e., a Meta-Distribution. We investigate MDM for open-domain text generation evaluation under two paradigms: 1) Generative MDM, which leverages the Meta-Distribution Methods to generate in-domain negative samples for training discriminator-based metrics; 2) Discriminative MDM, which directly uses distribution discrepancies between two language models for evaluation. Our experiments on multi-turn dialogue and factuality in abstractive summarization demonstrate that MDMs correlate better with human judgment than existing automatic evaluation metrics on both tasks, highlighting the strong performance and generalizability of such methods.

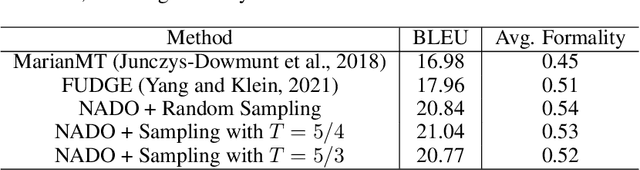

Controllable Text Generation with Neurally-Decomposed Oracle

May 27, 2022

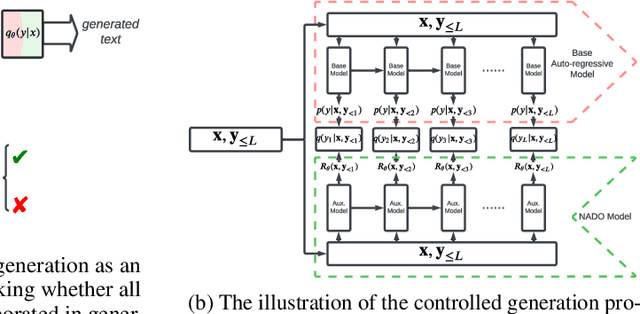

Abstract:We propose a general and efficient framework to control auto-regressive generation models with NeurAlly-Decomposed Oracle (NADO). Given a pre-trained base language model and a sequence-level boolean oracle function, we propose to decompose the oracle function into token-level guidance to steer the base model in text generation. Specifically, the token-level guidance is approximated by a neural model trained with examples sampled from the base model, demanding no additional auxiliary labeled data. We present the closed-form optimal solution to incorporate the token-level guidance into the base model for controllable generation. We further provide a theoretical analysis of how the approximation quality of NADO affects the controllable generation results. Experiments conducted on two applications: (1) text generation with lexical constraints and (2) machine translation with formality control demonstrate that our framework efficiently guides the base model towards the given oracle while maintaining high generation quality.

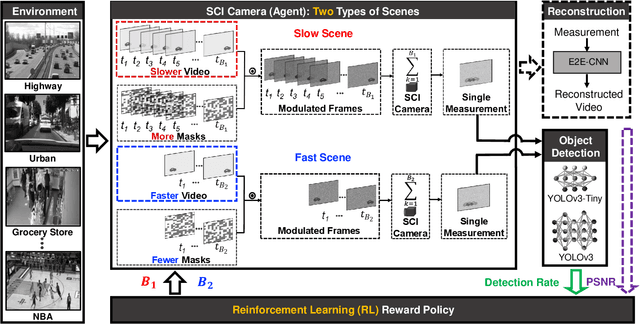

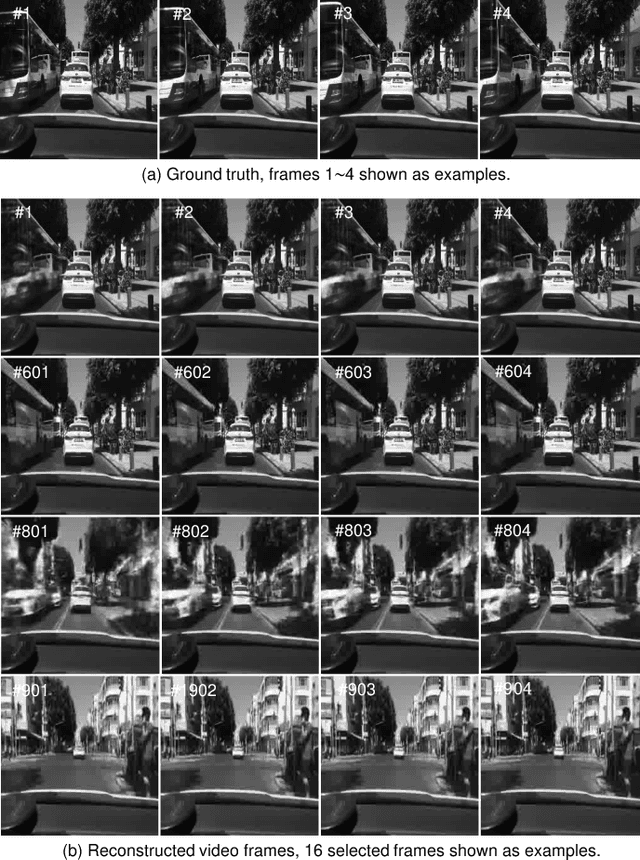

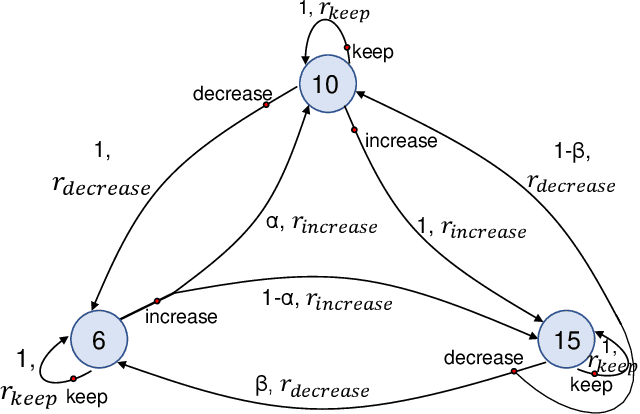

Reinforcement Learning for Adaptive Video Compressive Sensing

May 18, 2021

Abstract: We apply reinforcement learning to video compressive sensing to adapt the compression ratio. Specifically, we consider video snapshot compressive imaging (SCI), which captures high-speed video using a low-speed camera and in which multiple (B) video frames can be reconstructed from a snapshot measurement. One research gap in previous studies is how to adapt B in the video SCI system for different scenes. In this paper, we fill this gap using reinforcement learning (RL). An RL model, as well as various convolutional neural networks for reconstruction, are learned to achieve adaptive sensing of video SCI systems. Furthermore, the performance of an object detection network that directly uses the video SCI measurements without reconstruction is also used to perform RL-based adaptive video compressive sensing. Our proposed adaptive SCI method can thus be implemented at low cost and in real time. Our work takes the technology one step further towards real applications of video SCI.

On Efficient Training, Controllability and Compositional Generalization of Insertion-based Language Generators

Mar 01, 2021

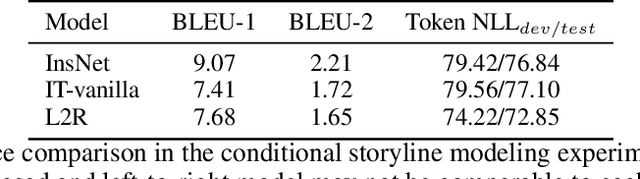

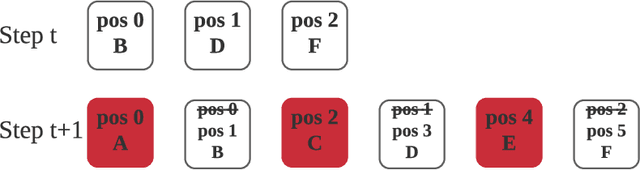

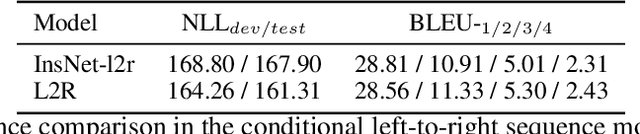

Abstract: Auto-regressive language models with the left-to-right generation order have been a predominant paradigm for language generation. Recently, out-of-order text generation beyond the traditional left-to-right paradigm has attracted extensive attention, with a notable variation of insertion-based generation, where a model is used to gradually extend the context into a complete sentence purely with insertion operations. However, since insertion operations disturb the position information of each token, it is often believed that each step of the insertion-based likelihood estimation requires a bi-directional re-encoding of the whole generated sequence. This computational overhead prohibits the model from scaling up to generate long, diverse texts such as stories, news articles, and reports. To address this issue, we propose InsNet, an insertion-based sequence model that can be trained as efficiently as traditional transformer decoders while maintaining the same performance as that with a bi-directional context encoder. We evaluate InsNet on story generation and CleVR-CoGENT captioning, showing the advantages of InsNet in several dimensions, including computational costs, generation quality, the ability to perfectly incorporate lexical controls, and better compositional generalization.

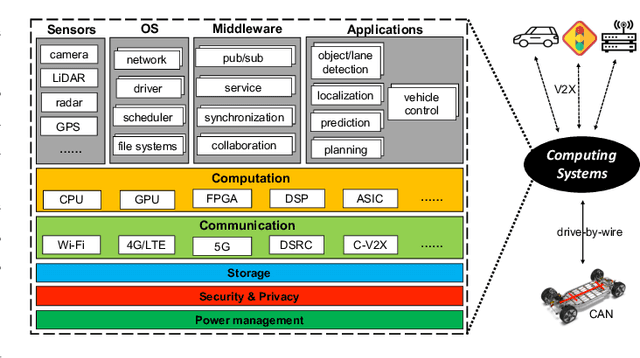

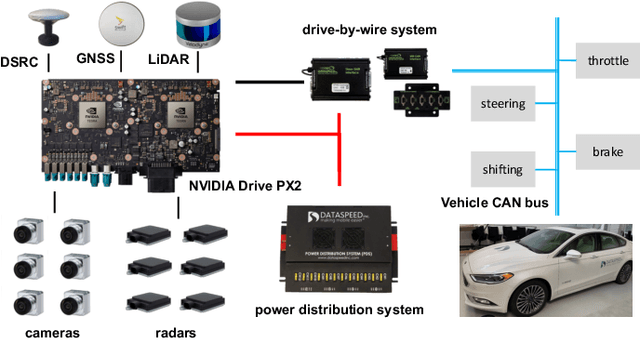

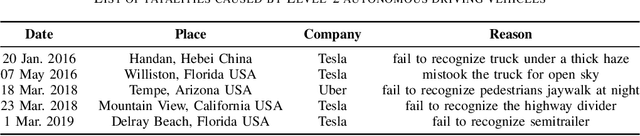

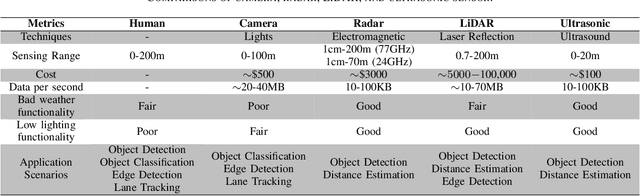

Computing Systems for Autonomous Driving: State-of-the-Art and Challenges

Sep 30, 2020

Abstract:The recent proliferation of computing technologies, e.g., sensors, computer vision, machine learning, hardware acceleration, and the broad deployment of communication mechanisms, e.g., DSRC, C-V2X, 5G, have pushed the horizon of autonomous driving, which automates the decision and control of vehicles by leveraging the perception results based on multiple sensors. The key to the success of these autonomous systems is making a reliable decision in a real-time fashion. However, accidents and fatalities caused by early deployed autonomous vehicles arise from time to time. The real traffic environment is too complicated for the current autonomous driving computing systems to understand and handle. In this paper, we present the state-of-the-art computing systems for autonomous driving, including seven performance metrics and nine key technologies, followed by eleven challenges and opportunities to realize autonomous driving. We hope this paper will gain attention from both the computing and automotive communities and inspire more research in this direction.

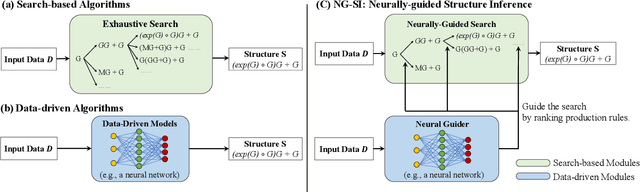

Neurally-Guided Structure Inference

Jun 17, 2019

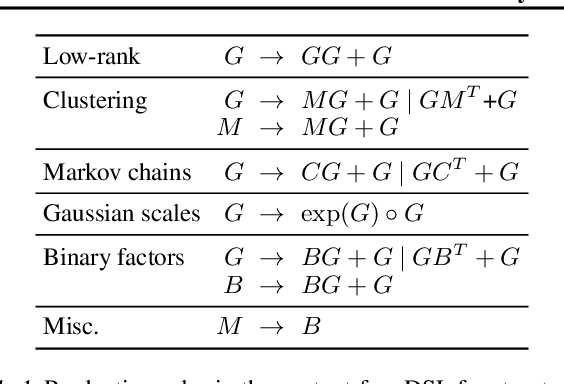

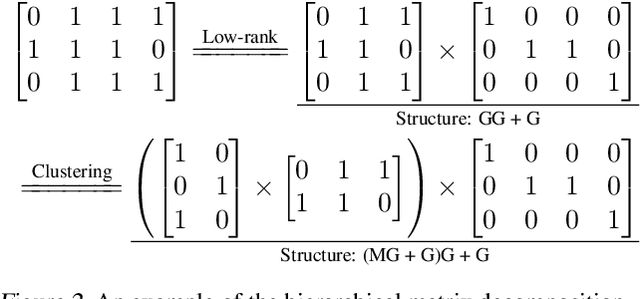

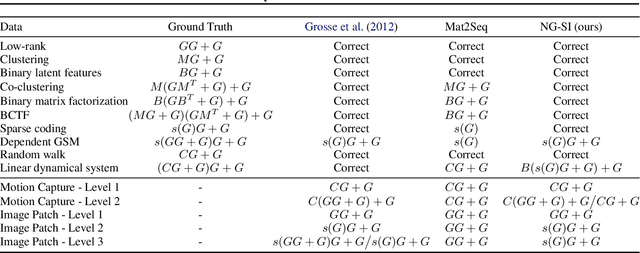

Abstract:Most structure inference methods either rely on exhaustive search or are purely data-driven. Exhaustive search robustly infers the structure of arbitrarily complex data, but it is slow. Data-driven methods allow efficient inference, but do not generalize when test data have more complex structures than training data. In this paper, we propose a hybrid inference algorithm, the Neurally-Guided Structure Inference (NG-SI), keeping the advantages of both search-based and data-driven methods. The key idea of NG-SI is to use a neural network to guide the hierarchical, layer-wise search over the compositional space of structures. We evaluate our algorithm on two representative structure inference tasks: probabilistic matrix decomposition and symbolic program parsing. It outperforms data-driven and search-based alternatives on both tasks.

* Proceedings of the 36th International Conference on Machine Learning (ICML 2019). First two authors contributed equally. Project page: http://ngsi.csail.mit.edu
