Weisong Shi

DAVOS: An Autonomous Vehicle Operating System in the Vehicle Computing Era

Jan 08, 2026

Abstract:Vehicle computing represents a fundamental shift in how autonomous vehicles are designed and deployed, transforming them from isolated transportation systems into mobile computing platforms that support both safety-critical, real-time driving and data-centric services. In this setting, vehicles simultaneously support real-time driving pipelines and a growing set of data-driven applications, placing increased responsibility on the vehicle operating system to coordinate computation, data movement, storage, and access. These demands highlight recurring system considerations related to predictable execution, data and execution protection, efficient handling of high-rate sensor data, and long-term system evolvability, commonly summarized as Safety, Security, Efficiency, and Extensibility (SSEE). Existing vehicle operating systems and runtimes address these concerns in isolation, resulting in fragmented software stacks that limit coordination between autonomy workloads and vehicle data services. This paper presents DAVOS, the Delaware Autonomous Vehicle Operating System, a unified vehicle operating system architecture designed for the vehicle computing context. DAVOS provides a cohesive operating system foundation that supports both real-time autonomy and extensible vehicle computing within a single system framework.

CARScenes: Semantic VLM Dataset for Safe Autonomous Driving

Nov 18, 2025

Abstract:CAR-Scenes is a frame-level dataset for autonomous driving that enables training and evaluation of vision-language models (VLMs) for interpretable, scene-level understanding. We annotate 5,192 images drawn from Argoverse 1, Cityscapes, KITTI, and nuScenes using a 28-key category/sub-category knowledge base covering environment, road geometry, background-vehicle behavior, ego-vehicle behavior, vulnerable road users, sensor states, and a discrete severity scale (1-10), totaling 350+ leaf attributes. Labels are produced by a GPT-4o-assisted vision-language pipeline with human-in-the-loop verification; we release the exact prompts, post-processing rules, and per-field baseline model performance. CAR-Scenes also provides attribute co-occurrence graphs and JSONL records that support semantic retrieval, dataset triage, and risk-aware scenario mining across sources. To calibrate task difficulty, we include reproducible, non-benchmark baselines, notably a LoRA-tuned Qwen2-VL-2B with deterministic decoding, evaluated via scalar accuracy, micro-averaged F1 for list attributes, and severity MAE/RMSE on a fixed validation split. We publicly release the annotation and analysis scripts, including graph construction and evaluation scripts, to enable explainable, data-centric workflows for future intelligent vehicles. Dataset: https://github.com/Croquembouche/CAR-Scenes
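The three metric families named in the abstract (scalar accuracy, micro-averaged F1 for list attributes, and severity MAE/RMSE) can be illustrated with a minimal sketch. This is not the released evaluation script: the function names and the toy labels below are assumptions made purely for illustration, and the actual field names and matching rules in the CAR-Scenes repository may differ.

```python
import math

def scalar_accuracy(gold, pred):
    """Fraction of records whose single-valued attribute matches exactly."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)

def micro_f1(gold_lists, pred_lists):
    """Micro-averaged F1: pool TP/FP/FN over all records, then compute F1 once."""
    tp = fp = fn = 0
    for gold, pred in zip(gold_lists, pred_lists):
        g, p = set(gold), set(pred)
        tp += len(g & p)   # labels predicted and present
        fp += len(p - g)   # labels predicted but absent
        fn += len(g - p)   # labels present but missed
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def mae_rmse(gold, pred):
    """Mean absolute error and root mean squared error for a numeric field."""
    errs = [p - g for g, p in zip(gold, pred)]
    mae = sum(abs(e) for e in errs) / len(errs)
    rmse = math.sqrt(sum(e * e for e in errs) / len(errs))
    return mae, rmse

# Hypothetical toy labels (not taken from the dataset).
weather_gold = ["clear", "rain", "clear", "fog"]
weather_pred = ["clear", "rain", "fog", "fog"]
vru_gold = [["pedestrian", "cyclist"], ["pedestrian"], []]
vru_pred = [["pedestrian"], ["pedestrian", "cyclist"], []]
severity_gold = [3, 7, 5, 9]
severity_pred = [4, 7, 3, 9]

acc = scalar_accuracy(weather_gold, weather_pred)
f1 = micro_f1(vru_gold, vru_pred)
mae, rmse = mae_rmse(severity_gold, severity_pred)
```

Micro-averaging pools the counts across all records before computing F1, so frames with many list entries weigh more than frames with few; a macro average would instead weight each frame or label equally.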

Slope Considered Online Nonlinear Trajectory Planning with Differential Energy Model for Autonomous Driving

Dec 12, 2024

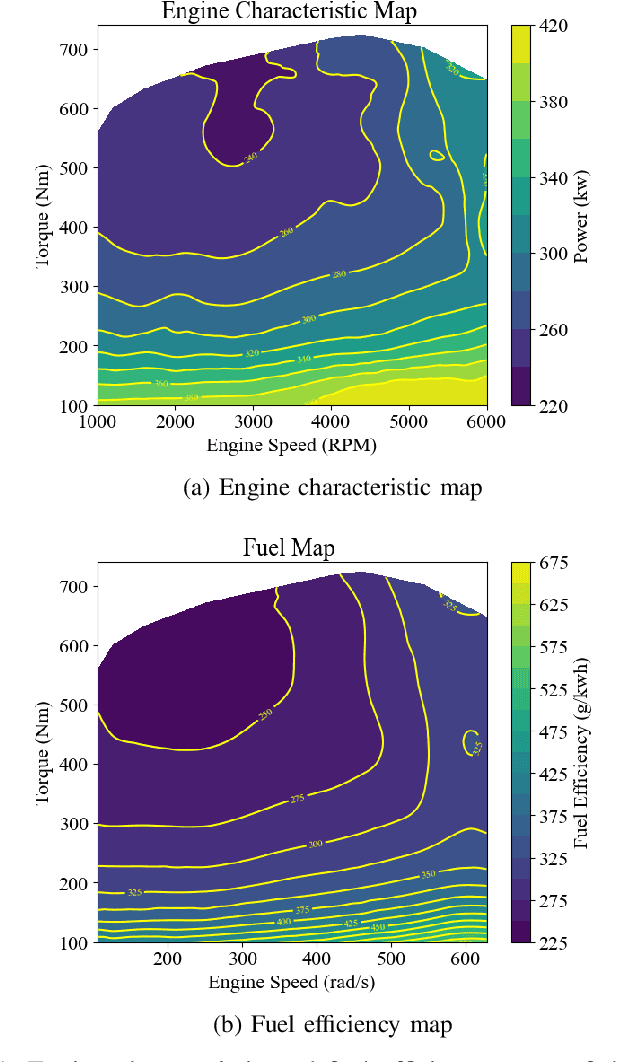

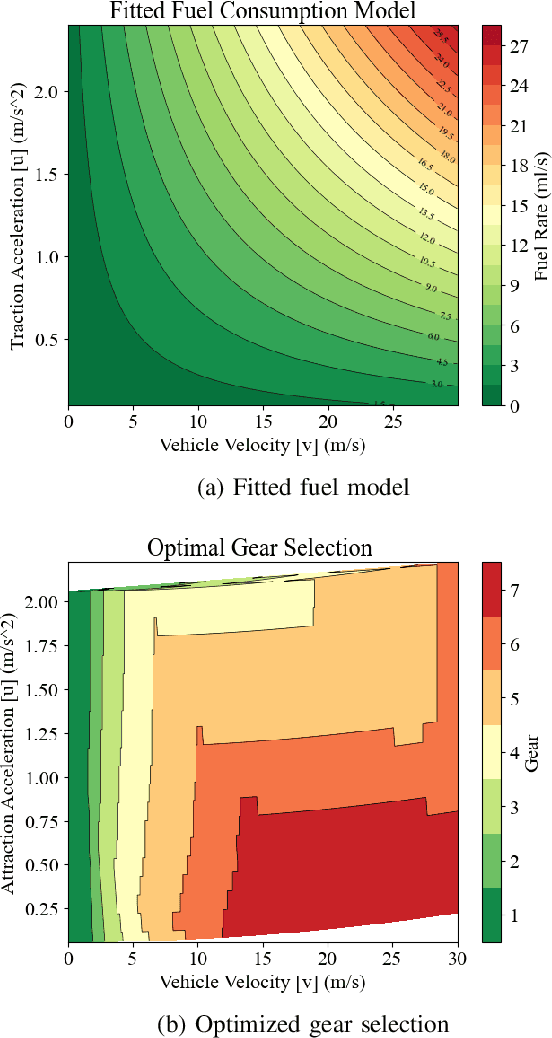

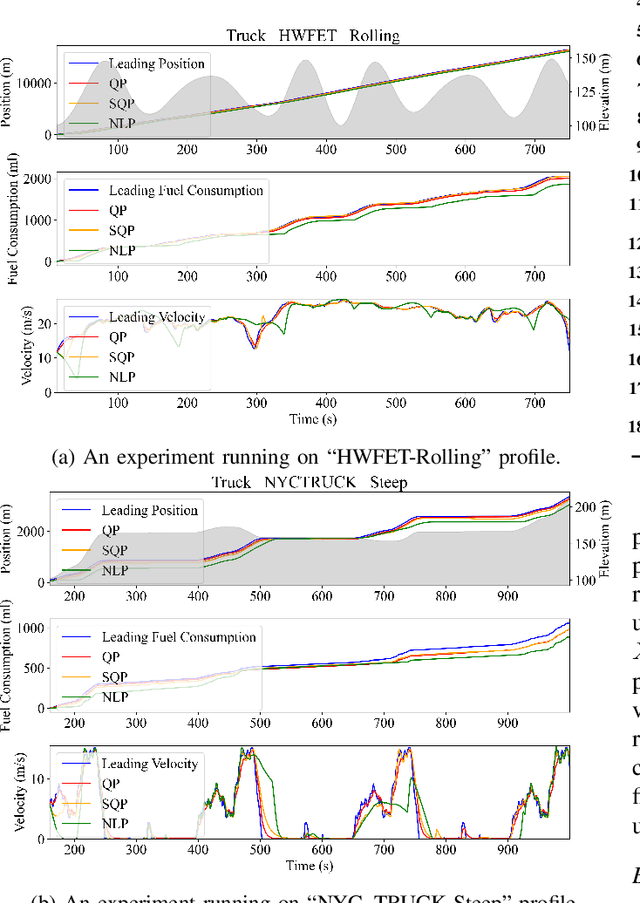

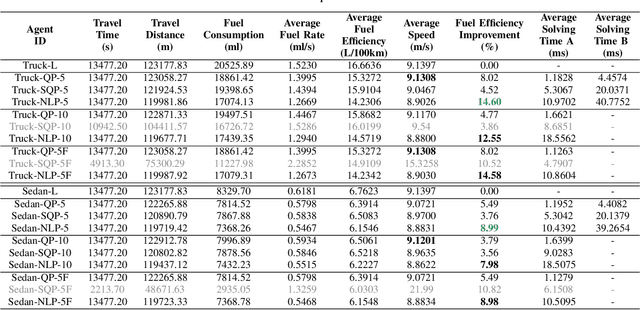

Abstract:Achieving energy-efficient trajectory planning for autonomous driving remains a challenge due to the limitations of model-agnostic approaches. This study addresses this gap by introducing an online nonlinear programming trajectory optimization framework that integrates a differentiable energy model into autonomous systems. By leveraging traffic and slope profile predictions within a safety-critical framework, the proposed method enhances fuel efficiency for both sedans and diesel trucks by 3.71% and 7.15%, respectively, when compared to traditional model-agnostic quadratic programming techniques. These improvements translate to a potential $6.14 billion economic benefit for the U.S. trucking industry. This work bridges the gap between model-agnostic autonomous driving and model-aware ECO-driving, highlighting a practical pathway for integrating energy efficiency into real-time trajectory planning.

EMATO: Energy-Model-Aware Trajectory Optimization for Autonomous Driving

Dec 12, 2024

Abstract:Autonomous driving lacks strong proof of energy efficiency with the energy-model-agnostic trajectory planning. To achieve an energy consumption model-aware trajectory planning for autonomous driving, this study proposes an online nonlinear programming method that optimizes the polynomial trajectories generated by the Frenet polynomial method while considering both traffic trajectories and road slope prediction. This study further investigates how the energy model can be leveraged in different driving conditions to achieve higher energy efficiency. Case studies, quantitative studies, and ablation studies are conducted in a sedan and truck model to prove the effectiveness of the method.
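For readers unfamiliar with Frenet polynomial planning, the sketch below shows how one candidate longitudinal trajectory can be generated from boundary conditions. The abstract does not state the polynomial degree or the boundary conditions used, so the quintic form, the function names, and the numeric values here are all assumptions for illustration; this is not the EMATO implementation, and the energy-aware nonlinear optimization step is not shown.

```python
def quintic_coeffs(x0, v0, a0, xT, vT, aT, T):
    """Coefficients c0..c5 of x(t) = sum(c_k * t**k) matching position,
    velocity, and acceleration at t = 0 and t = T (closed-form solution)."""
    c0, c1, c2 = x0, v0, a0 / 2.0
    # Residuals left after the low-order terms are propagated to time T.
    h = xT - (x0 + v0 * T + 0.5 * a0 * T**2)
    dv = vT - (v0 + a0 * T)
    da = aT - a0
    c3 = (10 * h - 4 * dv * T + 0.5 * da * T**2) / T**3
    c4 = (-15 * h + 7 * dv * T - da * T**2) / T**4
    c5 = (6 * h - 3 * dv * T + 0.5 * da * T**2) / T**5
    return [c0, c1, c2, c3, c4, c5]

def evaluate(coeffs, t):
    """Return (position, velocity, acceleration) of the polynomial at time t."""
    pos = sum(c * t**k for k, c in enumerate(coeffs))
    vel = sum(k * c * t**(k - 1) for k, c in enumerate(coeffs) if k >= 1)
    acc = sum(k * (k - 1) * c * t**(k - 2) for k, c in enumerate(coeffs) if k >= 2)
    return pos, vel, acc

# Hypothetical maneuver: accelerate from 10 m/s to 15 m/s over 60 m in 5 s.
coeffs = quintic_coeffs(0.0, 10.0, 0.0, 60.0, 15.0, 0.0, 5.0)
start_state = evaluate(coeffs, 0.0)
end_state = evaluate(coeffs, 5.0)
```

A planner of this kind typically samples many end conditions to produce a family of candidate polynomials; an optimizer such as the one described in the abstract would then refine or select among them using its cost terms.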

An Advanced Framework for Ultra-Realistic Simulation and Digital Twinning for Autonomous Vehicles

May 02, 2024

Abstract:Simulation is a fundamental tool in developing autonomous vehicles, enabling rigorous testing without the logistical and safety challenges associated with real-world trials. As autonomous vehicle technologies evolve and public safety demands increase, advanced, realistic simulation frameworks are critical. Current testing paradigms employ a mix of general-purpose and specialized simulators, such as CARLA and IVRESS, to achieve high-fidelity results. However, these tools often struggle with compatibility due to differing platform, hardware, and software requirements, severely hampering their combined effectiveness. This paper introduces BlueICE, an advanced framework for ultra-realistic simulation and digital twinning, to address these challenges. BlueICE's innovative architecture allows for the decoupling of computing platforms, hardware, and software dependencies while offering researchers customizable testing environments to meet diverse fidelity needs. Key features include containerization to ensure compatibility across different systems, a unified communication bridge for seamless integration of various simulation tools, and synchronized orchestration of input and output across simulators. This framework facilitates the development of sophisticated digital twins for autonomous vehicle testing and sets a new standard in simulation accuracy and flexibility. The paper further explores the application of BlueICE in two distinct case studies: the ICAT indoor testbed and the STAR campus outdoor testbed at the University of Delaware. These case studies demonstrate BlueICE's capability to create sophisticated digital twins for autonomous vehicle testing and underline its potential as a standardized testbed for future autonomous driving technologies.

ICAT: An Indoor Connected and Autonomous Testbed for Vehicle Computing

Mar 06, 2024

Abstract:Indoor autonomous driving testbeds have emerged to complement expensive outdoor testbeds and virtual simulations, offering scalable and cost-effective solutions for research in navigation, traffic optimization, and swarm intelligence. However, they often lack the robust sensing and computing infrastructure for advanced research. Addressing these limitations, we introduce the Indoor Connected Autonomous Testbed (ICAT), a platform that not only tackles the unique challenges of indoor autonomous driving but also innovates vehicle computing and V2X communication. Moreover, ICAT leverages digital twins through CARLA and SUMO simulations, facilitating both centralized and decentralized autonomy deployments.

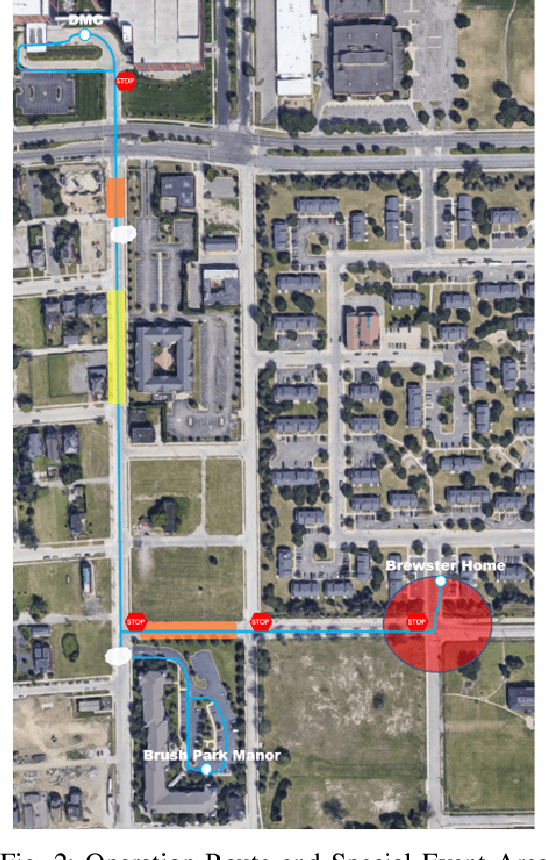

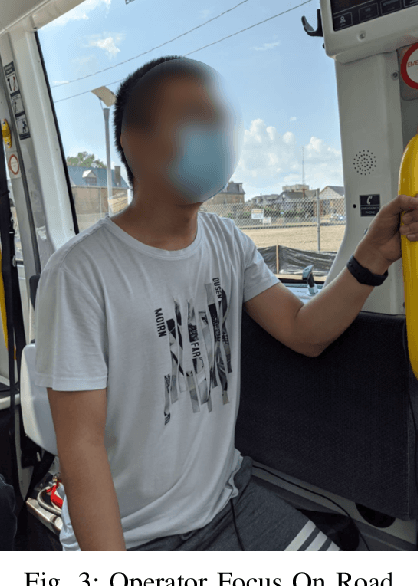

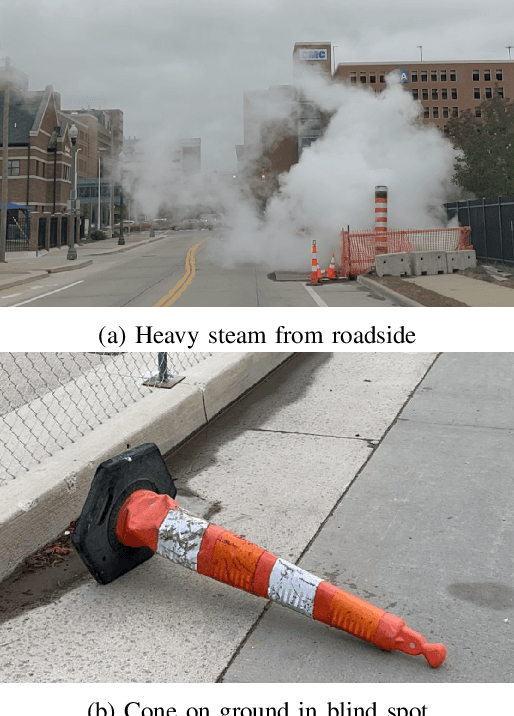

Autonomous Shuttle Operation for Vulnerable Populations: Lessons and Experiences

Feb 28, 2024

Abstract:The increasing shortage of drivers poses a significant threat to vulnerable populations, particularly seniors and disabled individuals who heavily depend on public transportation for accessing healthcare services and social events. Autonomous Vehicles (AVs) emerge as a promising alternative, offering potential improvements in accessibility and independence for these groups. However, current designs and studies often overlook the unique needs and experiences of these populations, leading to potential accessibility barriers. This paper presents a detailed case study of an autonomous shuttle test specifically tailored for seniors and disabled individuals, conducted during the early stages of the COVID-19 pandemic. The service, which lasted 13 weeks, catered to approximately 1500 passengers in an urban setting, aiming to facilitate access to essential services. Drawing from the safety operator's experiences and direct observations, we identify critical user experience and safety challenges faced by vulnerable passengers. Based on our findings, we propose targeted initiatives to enhance the safety, accessibility, and user education of AV technology for seniors and disabled individuals. These include increasing educational opportunities to familiarize these groups with AV technology, designing AVs with a focus on diversity and inclusion, and improving training programs for AV operators to address the unique needs of vulnerable populations. Through these initiatives, we aim to bridge the gap in AV accessibility and ensure that these technologies benefit all members of society.

Direct Punjabi to English speech translation using discrete units

Feb 25, 2024

Abstract:Speech-to-speech translation is yet to reach the same level of coverage as text-to-text translation systems. The current speech technology is highly limited in its coverage of over 7000 languages spoken worldwide, leaving more than half of the population deprived of such technology and shared experiences. With voice-assisted technology (such as social robots and speech-to-text apps) and auditory content (such as podcasts and lectures) on the rise, ensuring that the technology is available for all is more important than ever. Speech translation can play a vital role in mitigating technological disparity and creating a more inclusive society. With a motive to contribute towards speech translation research for low-resource languages, our work presents a direct speech-to-speech translation model for one of the Indic languages called Punjabi to English. Additionally, we explore the performance of using a discrete representation of speech called discrete acoustic units as input to the Transformer-based translation model. The model, abbreviated as Unit-to-Unit Translation (U2UT), takes a sequence of discrete units of the source language (the language being translated from) and outputs a sequence of discrete units of the target language (the language being translated to). Our results show that the U2UT model performs better than the Speech-to-Unit Translation (S2UT) model by a 3.69 BLEU score.

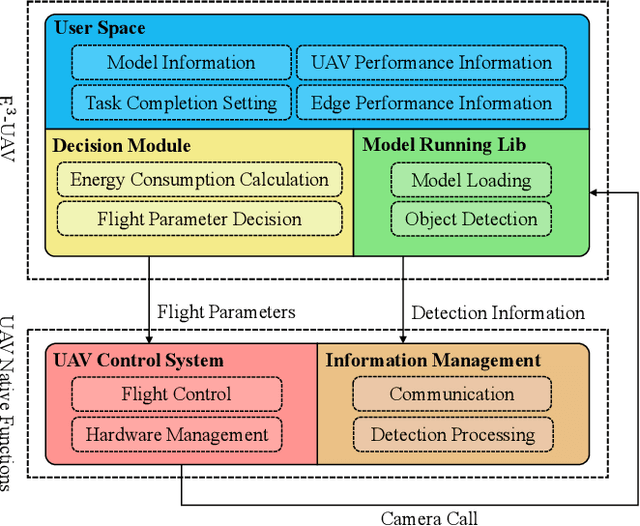

E3-UAV: An Edge-based Energy-Efficient Object Detection System for Unmanned Aerial Vehicles

Aug 09, 2023

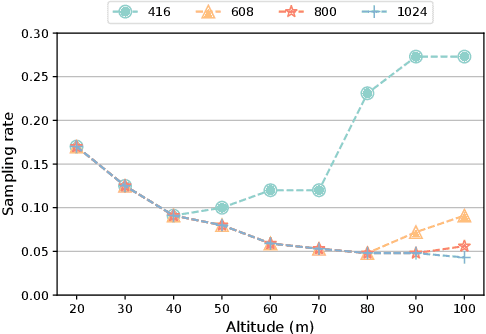

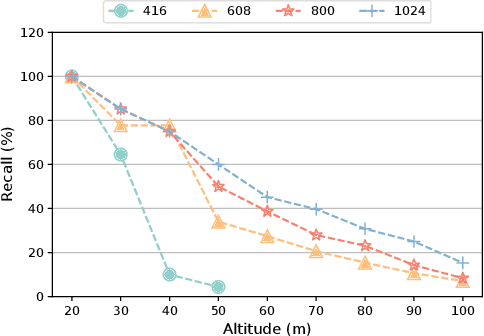

Abstract:Motivated by the advances in deep learning techniques, the application of Unmanned Aerial Vehicle (UAV)-based object detection has proliferated across a range of fields, including vehicle counting, fire detection, and city monitoring. While most existing research studies only a subset of the challenges inherent to UAV-based object detection, there are few studies that balance various aspects to design a practical system for energy consumption reduction. In response, we present the E3-UAV, an edge-based energy-efficient object detection system for UAVs. The system is designed to dynamically support various UAV devices, edge devices, and detection algorithms, with the aim of minimizing energy consumption by deciding the most energy-efficient flight parameters (including flight altitude, flight speed, detection algorithm, and sampling rate) required to fulfill the detection requirements of the task. We first present an effective evaluation metric for actual tasks and construct a transparent energy consumption model based on hundreds of actual flight data to formalize the relationship between energy consumption and flight parameters. Then we present a lightweight energy-efficient priority decision algorithm based on a large quantity of actual flight data to assist the system in deciding flight parameters. Finally, we evaluate the performance of the system, and our experimental results demonstrate that it can significantly decrease energy consumption in real-world scenarios. Additionally, we provide four insights that can assist researchers and engineers in their efforts to study UAV-based object detection further.

* 16 pages, 8 figures

Spatial-Temporal Synchronous Graph Transformer network (STSGT) for COVID-19 forecasting

Oct 31, 2022

Abstract:COVID-19 has become a matter of serious concern over the last few years. It has adversely affected numerous people around the globe and has led to the loss of billions of dollars of business capital. In this paper, we propose a novel Spatial-Temporal Synchronous Graph Transformer network (STSGT) to capture the complex spatial and temporal dependency of the COVID-19 time series data and forecast the future status of an evolving pandemic. The layers of STSGT combine the graph convolution network (GCN) with the self-attention mechanism of transformers on a synchronous spatial-temporal graph to capture the dynamically changing pattern of the COVID time series. The spatial-temporal synchronous graph simultaneously captures the spatial and temporal dependencies between the vertices of the graph at a given and subsequent time-steps, which helps capture the heterogeneity in the time series and improve the forecasting accuracy. Our extensive experiments on two publicly available real-world COVID-19 time series datasets demonstrate that STSGT significantly outperforms state-of-the-art algorithms that were designed for spatial-temporal forecasting tasks. Specifically, on average over a 12-day horizon, we observe a potential improvement of 12.19% and 3.42% in Mean Absolute Error (MAE) over the next best algorithm while forecasting the daily infected and death cases, respectively, for the 50 states of the US and Washington, D.C. Additionally, STSGT also outperformed others when forecasting the daily infected cases at the state level, e.g., for all the counties in the State of Michigan. The code and models are publicly available at https://github.com/soumbane/STSGT.
