On Efficient Training, Controllability and Compositional Generalization of Insertion-based Language Generators

Paper and Code

Mar 01, 2021

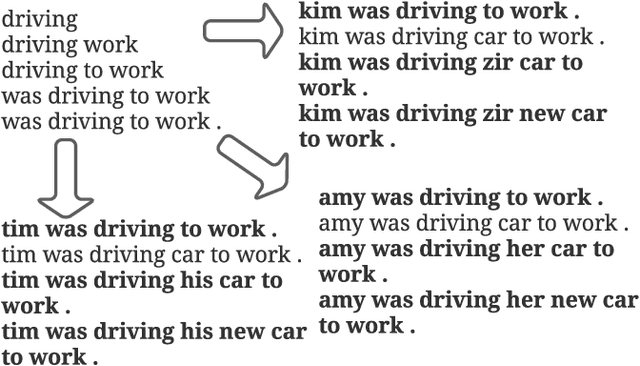

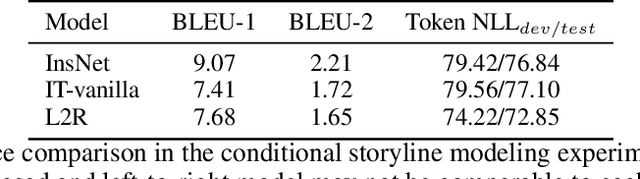

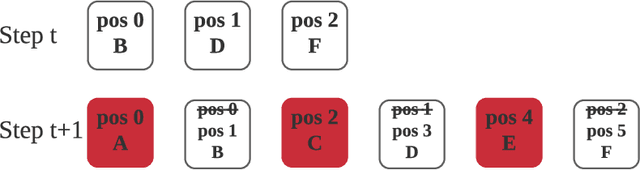

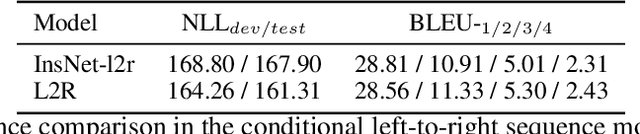

Auto-regressive language models with the left-to-right generation order have been a predominant paradigm for language generation. Recently, out-of-order text generation beyond the traditional left-to-right paradigm has attracted extensive attention, with a notable variation of insertion-based generation, where a model is used to gradually extend the context into a complete sentence purely with insertion operations. However, since insertion operations disturb the position information of each token, it is often believed that each step of the insertion-based likelihood estimation requires a bi-directional *re-encoding* of the whole generated sequence. This computational overhead prohibits the model from scaling up to generate long, diverse texts such as stories, news articles, and reports. To address this issue, we propose InsNet, an insertion-based sequence model that can be trained as efficiently as traditional transformer decoders while maintaining the same performance as that with a bi-directional context encoder. We evaluate InsNet on story generation and CleVR-CoGENT captioning, showing the advantages of InsNet in several dimensions, including computational costs, generation quality, the ability to perfectly incorporate lexical controls, and better compositional generalization.
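To make the position problem concrete, here is a minimal toy sketch of insertion-based generation. It is not InsNet and involves no model: the helper `apply_insertions`, the `(slot, token)` operation format, and the example sentence are all assumptions chosen for illustration. It only shows that each insertion shifts the absolute index of tokens to its right, which is why position-dependent encodings are commonly assumed to need recomputing after every step, and that tokens placed in the initial context are guaranteed to survive into the output.

```python
from typing import List, Tuple


def apply_insertions(context: List[str], ops: List[Tuple[int, str]]) -> List[List[str]]:
    """Apply (slot, token) insertions one by one; return every intermediate sequence.

    A slot index s means "insert before the token currently at index s";
    s == len(seq) appends at the end. This operation format is hypothetical.
    """
    seq = list(context)
    history = [list(seq)]
    for slot, token in ops:
        if not 0 <= slot <= len(seq):
            raise ValueError(f"slot {slot} out of range for length {len(seq)}")
        seq.insert(slot, token)
        history.append(list(seq))
    return history


# Start from two lexical constraints and grow the sentence around them.
constraints = ["cat", "mat"]
ops = [(0, "the"), (2, "sat"), (3, "on"), (4, "a")]
history = apply_insertions(constraints, ops)

# Track how the absolute position of one fixed token drifts across steps.
mat_positions = [step.index("mat") for step in history]

for step, pos in zip(history, mat_positions):
    print(f"{' '.join(step):<24} 'mat' at index {pos}")
```

Because the constraint tokens are never deleted, every one of them appears in the final sequence by construction, which is the sense in which insertion-only generation can incorporate lexical controls exactly.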
